
Monday, June 30, 2014

Computing as Model for Mind, Games, and the Digital Humanities

Just a quick note here, on my hobbyhorse about the stark neglect of the computer as model for the mind in digital criticism, a hobbyhorse I’ve ridden in this working paper, Two Disciplines in Search of Love.

By digital criticism I mean, of course, the use of digital tools for the study of literature. But that is by no means the alpha and the omega of digital humanities; it’s just what’s most interesting to me because of my interest in literature. The digital humanities surely extends to media studies – though I understand there are boundary issues there – and media studies must include games and, of course, film. And these days film includes digital special effects, which entail the simulation of the phenomena being imaged.

The thing about digital games is that many of them use the kinds of AI techniques originally developed back in the 1970s and 1980s to investigate narrative, and at least some of those techniques were conceived as simulations of human mental processes – toy simulations, but simulations nonetheless. So the concepts that first attracted me to cognitive science are instantiated there, in the objects of study. Can scholars who investigate those games really avoid coming to terms with the concepts driving them?

I think not. I see no way for the digital humanities to avoid beginning to think in terms of the computational mind. There will be squirming and rearguard invocations of humanistic imperatives and the like, but it’s not the 1970s, when it was easy to sidestep the issue. And, as I’ve posted recently, I even see steps in that direction (The Computational Mind Creeps Up on the Digital Humanities, DH2014: Computing the Literary Mind).

Green Villain 002 on 3 Quarks Daily

My latest essay for 3 Quarks Daily is now up: Graffiti is the most important art form of the last half-century... It features photos of a production on Fairmount St. in Jersey City organized by Green Villain and featuring members of MSK, one of thousands of graffiti gangs or collectives.

It’s not simply about graffiti itself, but also about what graffiti enables and provokes. So-called street art followed from graffiti’s liberation of public space, though it is often quite different from graffiti.

What will the visual arts become as graffiti continues to percolate through the culture?

Sunday, June 29, 2014

Saturday, June 28, 2014

Children in Search of the Dance

I first published this two years ago, but it's worth thinking about again.

An Informal Ethnographic Portrait

Last Thursday evening I went to a concert by the Gordys, a local group in Hoboken, New Jersey, that performs an eclectic mixture of folk, rock, and klezmer music. The performance is an annual event and this was, I believe, the 11th or 12th such concert. The concert was held at 7 in the evening in Frank Sinatra Park, which is located on the Hudson River in full view of Manhattan.

I’d been to a Gordys concert two years ago (see photos here) and knew how it would go. In particular, I knew that there’d be lots of families with children and that, near the end of the concert, when they went into klezmer mode, there would be dancing. That’s what interested me this time, the dancing; I wanted to see what the kids did.

It turned out that the most interesting kid action occurred before the klezmer, when the adults got up and danced in circles and the kids managed to fit in however they could. Of course, I didn’t realize that until I got home and started looking through my pictures. Fortunately, I did manage to snap enough photos to illustrate what captured my interest.

So, first I’ll say a little more about the Gordys, then I’ll present a run of photos with descriptive comments, and last I’ll do a bit of thinking.

The Gordys

As I’ve indicated, the Gordys perform and, for the most part, live in Hoboken. They are friends in real life and, consequently, the audience for their concerts tends to be friends, friends of friends, and friends of friends of friends, plus, I assume, a straggler here and there who’s attracted to the music. Here’s a photo I took two years ago that shows more or less the whole group as it existed then (there’ve been one or two personnel changes since then):

The point, then, is simple: These people know one another outside of the performance situation. They know members of the audience, and members of the audience know one another. These concerts happen in a social circle that exists within the larger community of Hoboken, NJ, and here, there, and beyond. These people are not strangers. They’re friends, a village within the city.

The concert took place in Frank Sinatra Park, which faces the Hudson River. The park is designed as a low amphitheatre with the performance area at the edge of the river. There are four or five levels of seating in a semi-circle around the performance area and, as structured for this performance, thirty feet or so between the stage area and the first level of seating. This in-between is where the circle dancing takes place in the last third or so of the concert.

Friday, June 27, 2014

The New Inquiry on Gojira, almost gets it

Patrick Harrison has an essay about the original Gojira in The New Inquiry. He almost gets it. Yes, he notices that the film is very good, that the spare score is excellent, and that the images are well crafted. He understands that it's about more than nukes (though it is about them), that it's about modernity:

Fuck the traditional Gaia interpretation of Godzilla—“By creating nuclear weapons, we have disturbed the natural order and disrespected traditional life, and Godzilla is our just punishment.” It’s more complicated than that. Godzilla is a metaphor for nuclear weapons, duh. The film is very didactic on this point. He’s radioactive, his rampage was brought on by H-bomb tests, and one character, complaining about having to endure “yet another evacuation,” even says, “What did I survive Nagasaki for?” But nuclear weapons are themselves a metonym for technological and social modernity, and that’s what Godzilla is really about.

And he knows that the love triangle is important:

Godzilla also provided a catharsis by making the relief of defeating Godzilla coincide with the symbolic purging of the war in the resolution of the film’s love triangle subplot. The subplot goes like this: At the start of the film, Takashi Shimura’s daughter, played by Momoko Kochi, is betrothed to Akihito Hirata’s mad scientist World War II veteran but is in love with a humble ship salvager played by Akira Takarada. Hirata wears an eye patch because, we are told, of a war injury. He is scarred by the war, and he is a scar of the war. There is something intense, even crazed about him, a painful excess of affect. He is a social scar. His very existence prevents the happiness of the young couple and unnerves the world. So the war veteran removes himself from it and goes out kamikaze-style, staying behind to detonate the super-weapon that destroys Godzilla so that no one can learn the weapon’s secret.

So close, yet so far. He doesn't understand that the love triangle is a conflict between two forms of marriage – traditional arranged marriage and modern marriage through the couple's choice – and that that conflict would have been very real for a Japanese audience (which I discuss in a number of posts, including this one). Shimura (the actor who played the professor) would have been the one who arranged the marriage. His desire to keep Gojira alive (for study purposes) is equivalent to his support for traditional marriage (the one he arranged for his daughter). And the death of Gojira is also the death of that traditional marriage when Akihito Hirata commits suicide in the process.

It's not just about technology and the means of production. It's also about family, self, and close relations.

Those were the days, analogue photography

An interesting article about black and white film photography. A bit nostalgic, I think, but interesting nonetheless. It's about a particular kind of film that Kodak introduced in 1954. Some photographers still swear by it (it's still manufactured). A paragraph:

It is hard to describe exactly the look of a Tri-X picture. Words like “grainy” and “contrasty” capture something of the effect, but there is more, something to do with the obsidian blacks produced by the film and with a certain unique drama that made the rock photography of the Sixties and Seventies so powerful and distinctive. Steve Schofield, a British photographer, now in Los Angeles, who first encountered Tri-X in the Seventies, has a different word: “I got these incredibly contrasty negatives that still somehow managed to render detail in both the shadows and highlights. It’s got that steely look, not warm like lots of other film bases. It’s that basic look from Tri-X that I’ve tried to incorporate into my work which is now mostly shot digitally and is now colour…that monochromatic palette, but interpreting it with a simple colour base. If I do ever need to shoot black-and-white, I always prefer film and always opt for Tri-X.”

Thursday, June 26, 2014

Why Philosophical Arguments about the Computational Mind Don’t Interest Me

And yet the idea of the computational mind does.

Philosophers have generated piles of arguments about whether, or in what way, the mind is computational. I’ve read some of these arguments, but not many. They’re not relevant to my interests, which, as many of you know, are very much about the mind as computer. After all, in my major theoretical and methodological set piece, Literary Morphology: Nine Propositions in a Naturalist Theory of Form, the third proposition is “The form of a given work can be said to be a computational structure” and the fourth: “That computational form is the same for all competent readers.” I’m committed, and have been for years.

But the philosophical arguments, pro and con, have almost nothing to do with any of the various models that have been posed and investigated through mathematical analysis and computer implementation. The philosophical arguments thus have no bearing on what interests me, the nuts and bolts of neuro-mental computation. For example, I read Searle’s (in)famous Chinese room argument when it was first published in 1980. Here’s how the Stanford Encyclopedia of Philosophy (SEP) summarizes it:

Searle imagines himself alone in a room following a computer program for responding to Chinese characters slipped under the door. Searle understands nothing of Chinese, and yet, by following the program for manipulating symbols and numerals just as a computer does, he produces appropriate strings of Chinese characters that fool those outside into thinking there is a Chinese speaker in the room. The narrow conclusion of the argument is that programming a digital computer may make it appear to understand language but does not produce real understanding. Hence the “Turing Test” is inadequate. Searle argues that the thought experiment underscores the fact that computers merely use syntactic rules to manipulate symbol strings, but have no understanding of meaning or semantics.

My response then, and now: And…? It simply doesn’t connect with anything you have to do to get a model up and running.

The Computational Mind Creeps Up on the Digital Humanities

The following white paper discusses a wide range of technologies. I include some excerpts that speak to one of my current hobbyhorses, that computation is a good metaphor and model for mental processes and thus digital criticism must extend itself in that direction. That doesn't require that one embrace the idea that the mind or brain IS a digital computer, or any other kind of computer, in any simple sense. I certainly don't subscribe to that view. But I do believe that some of what the mind does is best characterized as computation, and that literary studies will benefit from investigating computation as an approximation to literary processes. I've placed the full executive summary at the end.

Cognitive Computing

A White Paper for the establishment of a Center for Humanities and Technology (CHAT)

Sally Wyatt (KNAW) and David Millen (IBM) (Eds.)

2014 | ISBN 978-90-6984-680-4 | free

KNAW, UvA, VU and IBM are developing a long-term strategic partnership to be operationalized as the Center for Humanities and Technology (CHAT). The members and partners of CHAT will create new analytical methods, practices, data and instruments to enhance significantly the performance and impact of humanities, information science and computer science research.

This White Paper outlines the research mission of CHAT. CHAT intends to become a landmark of frontline research in Europa, a magnet for further public-private research partnerships, and a source of economic and societal benefits.

KNAW: Royal Netherlands Academy of Arts and Sciences

The section on cognitive computing begins with a discussion of IBM's Watson technology which, as we all know, defeated the best human contestants at Jeopardy! Watson's "knowledge" was quite superficial, and the paper is upfront about that. But the report does offer this suggestion (pp. 21-22):

The question of how cognitive systems could be applied to the humanities is necessarily a speculative one. Unlike other more established areas, such as text analytics and visualization, cognitive computing is so new that there are no existing humanities applications from which to extrapolate. Nevertheless, there is considerable potential. The capacity to ingest millions of documents and answer questions based on their contents certainly suggests useful capabilities for the humanities scholar. The ability to generate multiple hypotheses and gather evidence for and against each one is suggestive of an ability to discover and summarize multiple perspectives on a topic and to classify and cluster documents based on their perspectives,

While we do not expect a cognitive computing system to be writing an essay on the sources of the ideas in the Declaration of Independence any time soon, it might not be unreasonable to imagine a conversation between a scholar and a future descendant of Watson in which the scholar inquires about the origins and history of notions such as ‘self-evident truths’ or ‘unalienable God-given rights’ and carries on a conversation with the system to refine and explore these concepts in the historical literature and sources. The resulting evidence gathering and the conclusions reached would be a synthesis of human and machine intelligence.

You might want to look at Dave Ferrucci's talk about the return to meaning (embedded in this post). Toward the end he talks about the possibilities for such man-machine interaction. Ferrucci led IBM's Watson team.

These proposals are support stuff, but many of the concepts and techniques involved were originally created to simulate or mimic human mental processes. As humanists explore these techniques for support functions they're likely to wonder whether or not the concepts have more direct implications. When that happens...


Wednesday, June 25, 2014

Epiphenomena? Ramsay on Patterns, Again

About a month ago I posted on Ramsay’s article about patterns in the scene structure of Shakespeare’s plays. Now I’m looking at a more recent piece, The Meandering through Textuality Challenge, from a 2011 MLA panel, “Digging into Data.” Patterns come up again:

I have many times suggested “pattern” as the treasure sought by humanistic inquiry: which is to say, an order, a regularity, a connection, a resonance. I continue to insist that this is, in the end, what humanists in general, and literary critics in particular, are always looking for, whether they’re new critics, new historicists, new atheists, new faculty, or New Englanders...This would be a banal observation ... were it not for the fact that (like these worn phrases, once upon a time) it encourages us see a connection that might otherwise be obscured. For if humanistic inquiry is about pattern, then it isn’t completely crazy to suggest that computers might be useful tools for humanistic inquiry. Because long before computation is about YouTube or Twitter or Google, it is about pattern transduction.

So far so good. I especially like that last bit, that computation is about pattern transduction. I’m not entirely sure what that means, nor am I at all disturbed by that. Whatever it means, it draws the reader’s attention away from calculation and number, which is a good thing.

Ramsay goes on to say:

... we do not present the task of literary criticism or historiography as the process of finding some intact, but buried object beneath the surface. That’s because we have for a very long time now conceived of the patterns we’re looking for not as “out there,” but as “in here” — not as preexisting ontological formations, but as emergent textual epiphenomena.

Again, I’m not so sure, but I want to think about this uncertainty, just a bit.

When I go looking for patterns in texts, as far as I can tell, I’m looking for something that’s “out there” in the sense that I am not, as a critic, projecting that pattern onto the text. Ring-composition really is there, whether in the Japanese film Gojira, or in Heart of Darkness, or elsewhere (Coleridge, Tezuka, Coppola). Now I understand that those texts, considered as inscriptions on some surface (whether ink on a page, emulsion on celluloid, or a pattern of light on some surface), must be “read” by a mind in order for the phenomenon to be fully manifest, but that certainly doesn’t make them epiphenomenal.

Tuesday, June 24, 2014

A Boat, Some Rocks, the Shore

This is another of those "ordinary" shots I've come to like so much.

Nothing flashy. It's just there.

The boat's the focal point of the shot, and it's skewed to the left. The orange hull of the kayak (?) is more or less at the center and the bright orange contrasts effectively with the rest of the image. And then we have those rocks in the foreground and skewed to the right. They balance the shot.

Monday, June 23, 2014

Pluralism and an Epistemology of Building

Prompted by a post of Stephen Ramsay's (Postfoundationalism for Life), I've been reading James Smithies, Digital Humanities, Postfoundationalism, Postindustrial Culture (DHQ 2014 8.1). Very interesting.

There's a section on the epistemology of building that I like. Heck, I like just that phrase, "the epistemology of building," given my fondness for engineering. A passage:

My purpose is to suggest that his [Willard McCarty's] cognitive stance has become so widespread it represents a "habit of mind" or, mentalité, that reflects the goals and aspirations of a significant portion of the community, including Franco Moretti (2005), Julia Flanders (2009; 2012), Galey and Ruecker (2010), Ramsay and Rockwell (2012) and several others. The implications of the stance are fascinating. In extended commentaries later encapsulated in Scheinfeldt’s epigrammatic tweet, McCarty suggests that theories of computer coding, modeling and design are capable of providing an epistemological basis for the digital humanities; that rather than being mere by-products of the development process, they "contain arguments that advance knowledge about the world" [Galey and Ruecker 2010, 406]. The argument proffered is that the need to create models of reality (ontologies, database schemas, algorithms and so on), required to allow computers to mathematically parse problems posed by their human operators, offers a radical new methodological basis for future humanities research.

Why not?

As for postfoundationalism:

Postfoundationalism asserts that there is no point asserting either more confidence in our understanding of reality than is justified (as with modernism and logical empiricism) or retreating into a pessimistic view of our ability to grasp any one reality at all (as with postmodernism and postmodern deconstruction) [Ginev 2001, 28]. Rather, in a claim that could perhaps be criticized for claiming to have cut the Gordian knot, postfoundationalism "reject[s] the possibility of facts outside theoretical contexts. All knowledge incorporates both facts and theories" [Bevir 2011a]. It is an intellectual position that balances a distrust of grand narrative with an acceptance that methods honed over centuries and supported by independently verified evidence can lead, if not to Truth itself, then closer to it than we were before.

The Critic's Will to Meaning over the Resistance of the Text

I take as my point of reference the line that Geoffrey Hartman drew between critical reading, on the one hand, and technical structuralism and linguistics, on the other. Of course, I’m referring to the title essay of The Fate of Reading (1975). He’s not the only one to draw such a line. It was obvious enough to all. Thus Jonathan Culler also drew it in Structuralist Poetics (1975) when he asserted that linguistics is not hermeneutic.

But Hartman gave that line a valence that Culler did not. Hartman said that criticism should not, could not, go there. Why? Because “reading” – by which of course Hartman means hermeneutic analysis and writing conflated with plain old reading – is supposed to bring the critic closer to the text while those disciplines on the other side of that line only position the text farther away.

In what sense closer? Certainly not physically. But in what metaphorical sense? What is this (metaphorical) space in which the text is there, the critic is here, and we can somehow measure the distance between them?

I don’t know, nor, I suspect, does anyone else. But I suggest that if the critic really wants to get closer to the text, why not abandon the activity of constructing a critical commentary? Why not just read the text itself?

Hartman has a reply – that he’s interested in a more substantial kind of reading – but let’s set that aside. I’m going in a different direction. It seems to me that it is the existence of such things as linguistics and technical structuralism that allows Hartman to assert that the purpose of interpretive analysis (aka hermeneutics, aka reading) is to get closer to the text. He needs them in his metaphorical space as a foil against which he can make the claim that “reading” brings you closer to the text. Without those disciplines “out there” his own philosophically and psychoanalytically informed practice is vulnerable to the criticism that it is based on unnecessary and even scientistic conceptual apparatus.

Note that “distance” is the only criticism that Hartman has of those disciplines. He doesn’t say that they lead to false conclusions, that they posit illusory phenomena, only that they don’t bring one closer to the text. What kind of objection is that? What’s so important about this metaphorical closeness, whose importance is unquestioned?

More flowers – shooting blind

In two recent photo-posts I've presented "ordinary" shots – nothing fancy, no spectacular colors, strange objects, or self-conscious composition: a friend in his home; three landscapes. Then I posted some shots of flowers where I was shooting blind. Yes, my eyes were open and I did aim the camera, but I wasn't looking through the viewfinder so I didn't know exactly what was in-frame.

Here are some more blind shots of flowers. The first two have been cropped. But still…

It seems unlikely that I'd have taken THAT shot if I'd been looking through the viewfinder. I'd have gotten the whole flower. Of course, I could have cropped it so we didn't have that "empty" area to the left, but I like it.

This one is particularly interesting, as most of it is not in focus:

That's OK. There's no mystery about the fuzzy parts of the image; the color and composition are still there. And I like the play of shadows on the two petals in the foreground.

Sunday, June 22, 2014

Remix: This is how culture changes, East Asian edition

Language Log's Victor Mair has recently been to Macau and Hong Kong, both multi-lingual treasure troves. An example from Hong Kong:

Pui Ling then showed me records of some chats between her and her sister, who works at the counter of an airline at the Hong Kong airport. I was stunned by the long exchanges between them which consisted of Cantonese, Mandarin, and English all mixed together in the same sentences. That is to say, it was natural for them to shift among the three languages in the same sentence. Furthermore, when they resorted to Cantonese, it was written in a mixture of standard characters, special characters used only for Cantonese, and Roman letters. I remember clearly a Cantonese final particle, lu (written just like that in Romanization), at the end of a sentence that was mostly made up of English words.

My next experience with Chinese in computers in Hong Kong was when I observed a woman at a Starbucks that I frequented writing characters on the glass of her cell phone. I noticed that she was flailing away at the screen in a way similar to what I have earlier described (though not quite so demonstratively), and that after each frantic flailing at the screen, she would pause to pick — from a list of characters that the program suggested she was trying to write — the one that she really wanted. This was something that I witnessed several times in Hong Kong during this visit. The people who seemed to be fairly good at this method of entering characters attacked the glass with less vehemence and tended to spend less time pausing between characters entered.

After watching the woman for several minutes, I asked her if she sometimes used other methods for writing on her cell phone. She said, "Oh, yes. There are this handwriting method, Cangjie (character components), bopomofo (Mandarin phonetic symbols), Hanyu Pinyin (official PRC Romanization), and several others, and I use them all depending upon circumstances." When I asked her which method she preferred above all others, her response floored me, "English," she said, without the slightest hesitation.

Mair concludes:

In sum, I believe that Hong Kong functions as a sort of language lab for the Sinosphere. The trends that I witnessed there on this trip and during many previous visits to the city are a harbinger of things that are spreading throughout the Mainland.

Flowers – Shooting Blind

It's fun to just point the camera and shoot, without looking through the viewfinder. That's how I got these shots. I wanted to shoot the flowers from below, and that left me little choice but to hold the camera down, point it up, depress the button until I heard the "ding" indicating focus had been achieved, and then complete the click.

Saturday, June 21, 2014

DH2014: Computing the Literary Mind – Look at This!

One of the things that’s struck me as I’ve looked into (the so-called) digital humanities in the last year or two – most intensely in the last six months – is how very little there is about the aspect of computing that most attracted me some four decades or so ago, the computer as model and metaphor for the mind. That, after all, is what drove the cognitive revolution, not computer as data-crunching appliance, though that was important as well. In thinking about these matters we would do well to acknowledge that, even as digital humanities traces its roots to the work Roberto Busa initiated in the 1950s, another computational enterprise originated at roughly the same time, one just as classically humanist.

I am talking, of course, of machine translation. And surely translation from one language to another – Attic Greek to French, Russian to English, whatever – is a core humanistic skill. While those early researchers didn’t think they were in the business of miming the human mind – at least some of them thought that machine translation would be more straightforward than it proved to be – it turns out that, to a first approximation, that’s where some of them ended up.

And so here we are, moving into the 21st Century and humanistic computing is on the at-long-last rise, and few humanists know much of anything about computing as a model for the mind, not at the nuts and bolts level. That, alas, is not difficult to understand. For one thing, there is a deep strain of techno-skepticism and outright fear in the humanities. For another, it is so much easier, more “natural” if you will, to interpret computing from the outside as just one more cultural medium. One need not know anything about how computers work in order to plumb the socio-cultural significance of computers both real (e.g. IBM’s Watson) and fictional (e.g. Kubrick’s HAL).

That may, however, be about to change. For one thing, Franco Moretti has, in his pamphlet, “Operationalizing”: or, the Function of Measurement in Modern Literary Theory (Stanford Literary Lab, Pamphlet 6), asserted: “Computation has theoretical consequences—possibly, more than any other field of literary study. The time has come, to make them explicit.” More concretely, there are some sessions at this summer’s DH2014 in Lausanne that pitch their tents in classical territory.

The Drunken Monkey – That's Us

Robert Dudley, in The Scientist:

When we think about the origins of agriculture and crop domestication, alcohol isn’t necessarily the first thing that comes to mind. But our forebears may well have been intentionally fermenting fruits and grains in parallel with the first Neolithic experiments in plant cultivation. Ethyl alcohol, the product of fermentation, is an attractive and psychoactively powerful inebriant, but fermentation is also a useful means of preserving food and of enhancing its digestibility. The presence of alcohol prolongs the edibility window of fruits and gruels, and can thus serve as a means of short-term storage for various starchy products. And if the right kinds of bacteria are also present, fermentation will stabilize certain foodstuffs (think cheese, yogurt, sauerkraut, and kimchi, for example). Whoever first came up with the idea of controlling the natural yeast-based process of fermentation was clearly on to a good thing.

Using spectroscopic analysis of chemical residues found in ceramic vessels unearthed by archaeologists, scientists know that the earliest evidence for intentional fermentation dates to about 7000 BCE. But if we look deeper into our evolutionary past, alcohol was a component of our ancestral primate diet for millions of years. In my new book, The Drunken Monkey, I suggest that alcohol vapors and the flavors produced by fermentation stimulate modern humans because of our ancient tendencies to seek out and consume ripe, sugar-rich, and alcohol-containing fruits. Alcohol is present because of particular strains of yeasts that ferment sugars, and this process is most common in the tropics where fruit-eating primates originated and today remain most diverse.

The book is published by the University of California Press. Read an excerpt HERE.

Some Ordinary Views from Yesterday's Shoot

I like to shoot ordinary views. Nothing flashy, no tricky composition. Just the world, at least a little piece of it, front and center. Like this tree:

The tree is centered, of course, a simple matter of composition. Anyone could do it. It's the most obvious compositional ploy – "center your subject." The tree is just a tree, nothing special about it – no peculiar twisted trunk or limbs, no brilliant foliage. And the tree's in an ordinary landscape against a typical sky.

"Mind-reading" unravelled?

The notion of "mind-reading" has been bugging me since, I guess, some time in the last decade of the previous century. This is the idea that we humans have a "theory of mind" (TOM) module which we use to "read" other people's minds. It's that theory of mind stuff that bugs me, and it really bugged me when literary scholars got ahold of it and started going on about literature as a field for this mind-reading activity. Bollocks!

Yes, we've got minds. And, yes, we're intensely interested in other people and their minds. But talking about this as some kind of THEORY of mind is an invitation for trouble. But this article looks like it might clear things up a bit:

Cecilia M. Heyes, Chris D. Frith. Science, 20 June 2014: Vol. 344, no. 6190. DOI: 10.1126/science.1243091

It is not just a manner of speaking: “Mind reading,” or working out what others are thinking and feeling, is markedly similar to print reading. Both of these distinctly human skills recover meaning from signs, depend on dedicated cortical areas, are subject to genetically heritable disorders, show cultural variation around a universal core, and regulate how people behave. But when it comes to development, the evidence is conflicting. Some studies show that, like learning to read print, learning to read minds is a long, hard process that depends on tuition. Others indicate that even very young, nonliterate infants are already capable of mind reading. Here, we propose a resolution to this conflict. We suggest that infants are equipped with neurocognitive mechanisms that yield accurate expectations about behavior (“automatic” or “implicit” mind reading), whereas “explicit” mind reading, like literacy, is a culturally inherited skill; it is passed from one generation to the next by verbal instruction.

The last sentence of the abstract contains a key distinction. Now I'm not at all sure that this learned cultural skill amounts to a theory, certainly not in the sense of a philosopher's theory of mind; but the authors are making a crucial distinction between innate neurocognitive mechanisms for picking up cues from others and something that is culturally built upon them, as chess tactics and strategy are built upon the fundamental rule-given moves of the game.

I have no trouble believing in the existence of those innate mechanisms, which have been built through millions of years of evolution. But there's no need to call them, collectively, a theory of mind or to think that they allow infants to "see into" the minds of others. Not at all. They keep things synched up because that's how the human neurocognitive apparatus works.

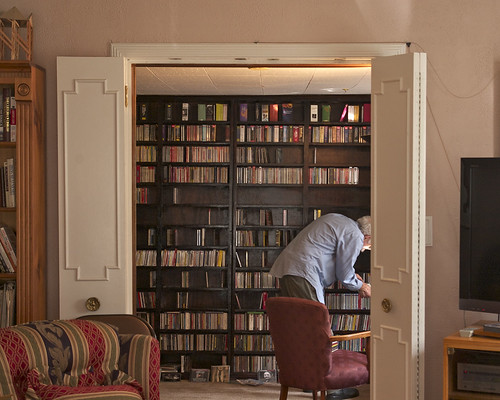

A Simple Domestic Scene

I liked this shot as soon as it came from the camera. Others have liked it as well. It's simple and direct, nothing flashy, no self-aware composition:

We're inside a house in a room and looking through double-doors into another room. That other room is dominated by large shelves, which are only partially filled. There's a man standing in that room, bent over, in front of the shelves. In fact, he's arranging CDs on the shelves. I know that because, obviously, I was there. But you don't need to know that. All you need to know is that he's obviously doing something and it has to do with those shelves and the objects on them. He's in dialog with them, if you will. Notice the light on his face, there to the right at the edge of the doorway. And notice the light on the ceiling of that other room. It's coming from the right.

At the lower left we can see a couch in the near room. The fabric has a fairly elaborate pattern, dominated by red. And that red leads the eye to a chair in the far room, the inner sanctum, if you will. That chair has its back to us. It's not for us. It's for the man in the room. Though he's not physically at the center of the picture (he's off to the right), the picture nonetheless centers around him. And he's not at all aware of us, absorbed as he is in whatever he's doing. We're overlooking his dialog with the shelves. Intimate and yet remote.

Thursday, June 19, 2014

Ten Russian Cantors Walked into a Bar...

Well, it wasn’t a bar; it was a synagogue. And the synagogue wasn’t in Russia; it was in New Jersey. But there were ten of them; they are Russian, and they are cantors.

Last Sunday, June 15, Temple Sinai in Summit, New Jersey, had a benefit for its musical programs. Sinai’s cantor, Marina Shemesh, invited nine of her colleagues – all classically trained in Russia – to Temple Sinai to put on a show.

And put on a show they did. It was delightful. The first half of the program featured Russian songs, folk and classical, while the second half was based on Broadway tunes, hence the show’s title, “From Red Square to Times Square.”

But the highlight came at the very end. The audience was so appreciative that the cantors decided to do an encore, though one hadn’t been prepared. One of them started playing the piano and we heard the melody that I know as “Those Were the Days,” made popular in the late 1960s by Mary Hopkin. But the words the cantors sang were strange. They weren’t English at all.

They were Russian. The pianist urged us to sing along, either in Russian or English. And so we did.

But damn! those Russians sure were into it. Wonderful!

I figured the song must have been translated into Russian and become a hit there as well. But I was wrong. The song was originally a Russian song, “Дорогой длинною” (literally “By the long road”) written by Boris Fomin with lyrics by Konstantin Podrevskii. The familiar English lyrics, which are not a translation of the Russian, were written by Gene Raskin in the early 1960s.

No wonder the ten cantors, plus the many Russians in the audience, sang the song with such force and conviction. It was their song. And ours, of course, and everyone else’s.

It was that kind of afternoon. We may have been gathered in a synagogue in suburban New Jersey on a Sunday afternoon in the second decade of the twenty-first century, but there was half a world and a century’s worth of history in that one encore. And the musical traditions go back pretty near to the beginning of time.

Monday, June 16, 2014

Avatar: 20 more years?

Billions of dollars are riding on the effort. The effects-heavy sequels will be expensive: Mr. Cameron has vaguely said their combined production cost would be less than $1 billion, though the movies cannot be budgeted until they are written.

But in Mr. Cameron, the project is being led by a director who helped to redefine his industry with “The Terminator,” “Titanic” and the immersive 3-D science fiction spectacle “Avatar.”

“Jim first and foremost in life is an explorer, and it’s what he does in his movies,” said Jon Landau, Mr. Cameron’s business partner. Speaking by telephone recently, Mr. Landau described what he said had been a yearslong effort to conceptualize an entire “Avatar” universe that would be realized over 20 years or more in various media, some of which have yet to be invented.

“We decided to build out the breadth of our world — whether or not it’s in one of the films — now,” he said.

“This is not about any one medium,” Mr. Landau added, referring to the elaborate ideas being developed by Mr. Cameron, along with a team of four screenwriters, and by a novelist, Steven Gould.

Mr. Gould, known especially for the science fiction book “Jumper,” is weaving those ideas into novels that are meant to read as if they had inspired, rather than were spun off from, “Avatar.”

Will this be a new kind of world-scale myth-making? "World-scale" in two senses: 1) the scope of the fictional universe and 2) its reach across the earth. On earth, where will the climate debate BE in 20 years and how will that affect this franchise? For that matter, does this franchise have the power to affect debate on the environment?

Thursday, June 12, 2014

Has the Turing Test been Passed?

You've probably heard that claims are being made about a computer passing the Turing Test. The program pretends that it's a 13-year-old Ukrainian boy. FWIW, Ray Kurzweil doesn't think that this chatbot has passed the test.

Though I'm skeptical about Kurzweil's general views on AI, I think he's right about this one. In his blog post he talks about the bet he made with Mitch Kapor that the Turing Test will be passed by 2029. He noted that they had to spend a great deal of time negotiating the specific terms of the test, which is itself rather vague.

What I'm wondering is whether or not the Turing Test is, in fact, just another specialized domain for AI. Given that the conversation is, in principle, open-ended, one might not think of it as being specialized. But I'm not so sure. After all, no human can talk well on every topic, so we shouldn't expect it of a computer either. And people can get pretty strange in conversation. So strangeness – whatever that is – wouldn't be a surefire indicator either.

But what would be a surefire indicator? Anything?

No, I'm beginning to think that Turing Testing is a specialized performance domain. The specialization may be rather different from playing Jeopardy, but it's still a specialization. If so, there will come a time when computer performance is so good that the contest holds little further interest.

Made it to the Semi-Final Round of 3QD Contest

…just barely (scroll to the bottom of the list).

I'm talking about the 3 Quarks Daily Arts and Literature contest, of course. My essay: What's Photography About Anyhow?


Tuesday, June 10, 2014

Google Does Graffiti

PARIS — There’s a portrait of an anonymous Chinese man chiseled into a wall in Shanghai, a colorful mural in Atlanta and black-and-white photographs of eyes that the French artist JR affixed to the houses of a hillside favela in Rio de Janeiro. These are among the images of more than 4,000 works included in a vast new online gallery of street art that Google is unveiling here on Tuesday….

With the initiative, Google is the latest organization to wade into debates about how or whether to institutionalize, let alone commercialize, art that is ephemeral and often willfully created subversively. A private database of public art, it also poses questions about how to legally preserve what in some cases might be considered vandalism.

In a sense, Google is formalizing what street art fans around the world already do: take pictures of city walls and distribute them on social media. Yet for Google to do so could raise concerns, given the criticism of its aggressive surveillance tactics, especially in Europe, where its Street View satellite mapping is widely seen as a violation of privacy.

Google is taking pains to avoid offense by setting strict conditions. It will include only images provided by organizations that sign a contract attesting that they own the rights to them. It will not cull through Street View images but will provide the technology to organizations that want to use it to record street art legally. Some groups have provided exact locations of the artworks; others have not.

Monday, June 9, 2014

AI: A Return To Meaning - David Ferrucci

The conclusion to Ferrucci's comment on the video: "This talk draws an arc from Theory-Driven AI to Data-Driven AI and positions Watson along that trajectory. It proposes that to advance AI to where we all know it must go, we need to discover how to efficiently combine human cognition, massive data and logical theory formation. We need to boot strap a fluent collaboration between human and machine that engages logic, language and learning to enable machines to learn how to learn and ultimately deliver on the promise of AI."

The most interesting stuff starts at 1:22:00 or so. Ferrucci talks about an interactive system where the computer uses human input to learn how to build out an internal knowledge representation, where the internal model is based on classical principles. Watson-style "shallow" technology is an "ingredient" for creating the technology to support the interaction. By his estimate, ten people could create a qualitative leap forward in three to five years.


Saturday, June 7, 2014

Rens Bod on Patterns

WHO'S AFRAID OF PATTERNS?: THE PARTICULAR VERSUS THE UNIVERSAL AND THE MEANING OF HUMANITIES 3.0. BMGN - Low Countries Historical Review. Vol. 128, No. 4, 2013, 171-180.

Rens Bod

Abstract

The advent of Digital Humanities has enabled scholars to identify previously unknown patterns in the arts and letters; but the notion of pattern has also been subject to debate. In my response to the authors of this Forum, I argue that ‘pattern’ should not be confused with universal pattern. The term pattern itself is neutral with respect to being either particular or universal. Yet the testing and discovery of patterns – be they local or global – is greatly aided by digital tools. While such tools have been beneficial for the humanities, numerous scholars lack a sufficient grasp of the underlying assumptions and methods of these tools. I argue that in order to criticise and interpret the results of digital humanities properly, scholars must acquire a good working knowledge of the underlying tools and methods. Only then can digital humanities be fully integrated (humanities 3.0) with time-honoured (humanities 1.0) tools of hermeneutics and criticism.

What I'm wondering is whether or not pattern is emerging as a fundamental epistemological/ontological entity. I've broached this idea in an earlier post focussed on Moretti, From Quantification to Patterns in Digital Criticism, where I observed, of his network diagrams:

What are those diagrams about? Let me suggest that they are about patterns. Yes, I know, the word is absurdly general, but hear me out.

That bar chart depicts a pattern of quantitative relationships, and does so better and more usefully than a bunch of verbal statements. You look at it and see the pattern.

The pattern in the network diagram is harder to characterize. It’s a pattern of relationship among characters. What kind of relationships? Dramatic relationships? That, I admit, is weak. But if you read Moretti’s pamphlet, you’ll see what’s going on.

The important point is what happens when you get such diagrams based on a bunch of different texts. You can see, at a glance, that there are different patterns in different texts. While each such diagram represents the reduction of a text to a model, the patterns in themselves are irreducible. They are a primary object of description and analysis.

And that is my point: patterns.

As I said, the idea of patterns is very general. But that doesn’t make it useless. On the contrary, that generality makes the idea useful and powerful.

It is a commonplace in cognitive science that the human mind (and brain) is very good at pattern recognition. But digital computers are not so good at it. I note also that the notion of design patterns has been popular in computer programming, which got it from the notion of pattern language articulated by the architect, Christopher Alexander.
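For readers who haven't built one, here is a minimal sketch of how a character network can be derived from a text and how two texts can yield visibly different patterns. It assumes that two characters are linked whenever they share a scene (a common simplification, not necessarily the rule Moretti used). The two "texts" are made-up toy scene lists, not data from any actual play, and the function names are hypothetical.

```python
from collections import Counter
from itertools import combinations


def character_network(scenes):
    """Map each unordered pair of characters to the number of scenes they share.

    Assumption: co-presence in a scene counts as a link.
    """
    edges = Counter()
    for scene in scenes:
        for a, b in combinations(sorted(set(scene)), 2):
            edges[(a, b)] += 1
    return edges


def degrees(edges):
    """Number of distinct characters each character is linked to."""
    deg = Counter()
    for a, b in edges:
        deg[a] += 1
        deg[b] += 1
    return deg


# Two made-up toy "texts", each a list of scenes (sets of characters present).
star_text = [{"P", "A"}, {"P", "B"}, {"P", "C"}, {"P", "D"}]   # one hub
chain_text = [{"A", "B"}, {"B", "C"}, {"C", "D"}, {"D", "E"}]  # no hub

for name, scenes in (("star", star_text), ("chain", chain_text)):
    profile = sorted(degrees(character_network(scenes)).values(), reverse=True)
    print(name, profile)
# star [4, 1, 1, 1, 1]
# chain [2, 2, 2, 1, 1]
```

The sorted degree list is only one crude summary of the shape a diagram shows at a glance: a single hub in one text, a centerless chain in the other.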

Shameless Bleg: Vote for Me for the 4th Annual 3QD Arts & Literature Prize

3 Quarks Daily is offering prizes for the best web essays on the arts and literature. I have shamelessly entered my own essay, What's Photography About, Anyhow? If you agree that that is indeed a smashing essay, vote for it HERE.

T H A N K S !

Wednesday, June 4, 2014

Tuesday, June 3, 2014

The Announcer prepares

Tom Durkin announces horse races. He's preparing to announce the upcoming Belmont Stakes, his last:

He has a dossier on each of the 12 possible starters that together runs more than 20 pages. By post time Saturday, Durkin, the track announcer, will have the pertinent details on each of them littered about his subconscious.

Just as he does before each of the races he calls, Durkin has also assigned this Belmont Stakes a plot — think of it as the frame within which he will paint an evocative word picture.

He's a performer, and performing is a tricky business. It can also provoke anxiety. So:

He had recorded one of his hypnosis sessions, and he plugged in his earbuds to listen to it on Monday, but the bulk of the day was devoted to the painstaking homework that has made Durkin’s vivid narration, in real time, a symphony of words, emotion and triumph.

Durkin’s anxiety may have begun with a flush of panic in 1987 as Ferdinand and Alysheba dueled to a photo finish in the Breeders’ Cup Classic, but he has successfully held it at bay with meditation, prayer and medication to deliver some of the most memorable calls in modern thoroughbred history.

Monday, June 2, 2014

Handwriting Matters

From the New York Times:

The effect goes well beyond letter recognition. In a study that followed children in grades two through five, Virginia Berninger, a psychologist at the University of Washington, demonstrated that printing, cursive writing, and typing on a keyboard are all associated with distinct and separate brain patterns — and each results in a distinct end product. When the children composed text by hand, they not only consistently produced more words more quickly than they did on a keyboard, but expressed more ideas. And brain imaging in the oldest subjects suggested that the connection between writing and idea generation went even further. When these children were asked to come up with ideas for a composition, the ones with better handwriting exhibited greater neural activation in areas associated with working memory — and increased overall activation in the reading and writing networks.

My own thought is that writing changes the brain's computational architecture, establishing a matrix for new calculations. See papers David Hays and I have written: Principles and Development of Natural Intelligence (1987, on the brain's computational architecture), and The Evolution of Cognition (1990, on speech, writing, calculation, and computing).

It now appears that there may even be a difference between printing and cursive writing — a distinction of particular importance as the teaching of cursive disappears in curriculum after curriculum. In dysgraphia, a condition where the ability to write is impaired, usually after brain injury, the deficit can take on a curious form: In some people, cursive writing remains relatively unimpaired, while in others, printing does.

Keeping the Groove

From the Harvard Gazette:

Rhythm research has implications for both audio engineering and neural clocks, said Holger Hennig, a postdoctoral fellow in the laboratory of Eric Heller in the Physics Department at Harvard, and first author of a study of the Ghanaian and other drummers in the journal Physics Today. Software for computer-generated music includes a “humanizing” function, which adds random deviations to the beat to give it a more human, “imperfect” feel. But these variations tend to make the music sound “off” and artificial. The fact that listeners are turned off by “humanized” music led Hennig and colleagues at the Max Planck Institute for Dynamics and Self-Organization in Germany to wonder whether human error in musical rhythm might show a pattern. Perhaps the “humanizing” features of computer-generated rhythms fail because they produce the wrong kind of errors — deviations unlike the kind humans produce. There are rhythms inherent in the human brain, which may affect our musical rhythm. The primal bio-rhythm in the neurons of the Ghanaian drummer might be echoed in the rhythm of his music, the physicists suspected.

When they analyzed the drummer’s playing statistically, Hennig and colleagues found that his errors were correlated across long timescales: tens of seconds to minutes. A given beat depended not just on the timing of the previous beat, but also on beats that occurred minutes before.

The research article: Hennig H, Fleischmann R, Fredebohm A, Hagmayer Y, Nagler J, et al. (2011) The Nature and Perception of Fluctuations in Human Musical Rhythms. PLoS ONE 6(10): e26457. doi:10.1371/journal.pone.0026457

Abstract: Although human musical performances represent one of the most valuable achievements of mankind, the best musicians perform imperfectly. Musical rhythms are not entirely accurate and thus inevitably deviate from the ideal beat pattern. Nevertheless, computer generated perfect beat patterns are frequently devalued by listeners due to a perceived lack of human touch. Professional audio editing software therefore offers a humanizing feature which artificially generates rhythmic fluctuations. However, the built-in humanizing units are essentially random number generators producing only simple uncorrelated fluctuations. Here, for the first time, we establish long-range fluctuations as an inevitable natural companion of both simple and complex human rhythmic performances. Moreover, we demonstrate that listeners strongly prefer long-range correlated fluctuations in musical rhythms. Thus, the favorable fluctuation type for humanizing interbeat intervals coincides with the one generically inherent in human musical performances.
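The abstract's contrast between "simple uncorrelated fluctuations" and "long-range correlated fluctuations" can be made concrete with a small sketch. This is not Hennig and colleagues' method. It assumes Gaussian timing offsets in milliseconds and uses a simple Voss-style sum of slowly refreshed random values as a stand-in for long-range correlated (approximately 1/f) noise over a limited range of timescales. The function names, the seed, and the 500 ms beat interval are hypothetical choices for illustration. The point is only that the second kind of offset "remembers" earlier beats, as the drummer's errors did, while the first does not.

```python
import random
import statistics


def uncorrelated_offsets(n, sigma, rng):
    """Independent Gaussian timing offsets (ms), like a simple 'humanize' knob."""
    return [rng.gauss(0.0, sigma) for _ in range(n)]


def long_range_offsets(n, sigma, rng, octaves=8):
    """Approximately 1/f ('pink') timing offsets (ms), Voss-style sketch.

    Octave k holds a random value that is redrawn only every 2**k beats,
    so the slow octaves carry memory across many beats. The sum is then
    rescaled to mean 0 and standard deviation sigma.
    """
    values = [0.0] * octaves
    raw = []
    for i in range(n):
        for k in range(octaves):
            if i % (2 ** k) == 0:
                values[k] = rng.gauss(0.0, 1.0)
        raw.append(sum(values))
    mean = statistics.fmean(raw)
    sd = statistics.pstdev(raw)
    return [sigma * (x - mean) / sd for x in raw]


def autocorrelation(xs, lag):
    """Sample autocorrelation of xs at the given lag."""
    m = statistics.fmean(xs)
    num = sum((xs[i] - m) * (xs[i + lag] - m) for i in range(len(xs) - lag))
    den = sum((x - m) ** 2 for x in xs)
    return num / den


def humanized_onsets(interval_ms, offsets):
    """Add offsets to a perfectly regular grid of beat onsets."""
    return [i * interval_ms + off for i, off in enumerate(offsets)]


rng = random.Random(2011)  # arbitrary seed, for reproducibility only
n_beats = 4096
white = uncorrelated_offsets(n_beats, 10.0, rng)
pink = long_range_offsets(n_beats, 10.0, rng)
onsets = humanized_onsets(500.0, pink)  # 120 bpm grid, jittered

for lag in (1, 16, 64):
    print(f"lag {lag:>2}: uncorrelated {autocorrelation(white, lag):+.2f}, "
          f"long-range {autocorrelation(pink, lag):+.2f}")
```

Running it should show autocorrelations near zero at every lag for the uncorrelated offsets, and clearly positive values for the long-range offsets that shrink as the lag grows. Whether offsets like these actually sound more human is the empirical question the study addressed; the sketch makes no claim about that.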

Sunday, June 1, 2014

Hyperobjects and "Distant Reading"

Two successive tweets from Alan Liu:

— Alan Liu (@alanyliu) May 31, 2014

So, topic models. Is the model the hyperobject or is it simply a description of the hyperobject? Consider one of Morton's core examples, global warming. Surely global warming is the hyperobject while a given climate model simply provides a description of that hyperobject. It's global warming that is "massively distributed in time and space," not a given climate model. The climate model is just a bunch of code running on a computer, which is fairly compact in time and space.

And so it must be with a topic model and whatever cultural hyperobject it models. Thus, when Goldstone and Underwood developed topic models for over a century's worth of articles in literary studies (The Quiet Transformations of Literary Studies: What Thirteen Thousand Scholars Could Tell Us [PDF preprint]), literary studies is the hyperobject and, as such, is distinct from their topic models (which you can explore interactively HERE, introduction HERE).