Thursday, March 30, 2017

The "normalization" of digital humanities?

Over at The Stone and the Shell Ted Underwood has a new post, Digital humanities as a semi-normal thing: "In place of journalistic controversies and flame wars, we are finally getting a broad scholarly conversation about new ideas." He goes on to note:

The immediate occasion for this post is a special issue of Genre (v. 50, n. 1) engaging the theme of “data” in relation to the Victorian novel; this follows a special issue of Modern Language Quarterly on “scale and value.” Next year, “Scale” is the theme of the English Institute, and little birds tell me that PMLA is also organizing an issue on related themes. Meanwhile, of course, the new journal Cultural Analytics is providing an open-access home for essays that make computational methods central to their interpretive practice.

The participants in this conversation don’t all identify as digital humanists or distant readers. But they are generally open-minded scholars willing to engage ideas as ideas, whatever their disciplinary origin. Some are still deeply suspicious of numbers, but they are willing to consider both sides of that question. Many recent essays are refreshingly aware that quantitative analysis is itself a mode of interpretation, guided by explicit reflection on interpretive theory.

Underwood notes, however, that "distant reading" is unlikely to become "one of those fashions that sweeps departments of English, changing everyone’s writing in a way that is soon taken for granted." Why not? Because it's too hard.

Adding citations to Geertz and Foucault can be done in a month. But a method that requires years of retraining will never become the next big thing. Maybe, ten years from now, the fraction of humanities faculty who actually use quantitative methods may have risen to 5% — or optimistically, 7%. But even that change would be slow and deeply controversial.

Right.

FWIW, it seems to me that cognitive and evolutionary criticism are an easier reach than distant reading, though not so easy as adding citations to Geertz and Foucault. Geertz and Foucault can be assimilated to more or less 'standard' humanities training in discursive and narrative reasoning. Cognitive science and evolutionary psychology cannot. But it is possible to assimilate some ideas from those areas to a vague psychology of mental agents or faculties of some sort (such as Theory of Mind), and cognitive metaphor and conceptual blending are not a far stretch from more traditional notions of tropes. Digital reading, however, requires fundamentally different ways of reasoning from evidence to conclusions, and that's not so easy to pick up. And then, of course, there's the process of learning to use digital tools.

Tuesday, March 28, 2017

On the conduct of interdisciplinary work (& memes)

A few years ago I had a post in which I featured an article by Jeremy Burman on the history of the meme concept, "The misunderstanding of memes" (PDF). The MIT Press blog now has an interview with Burman in which he talks about that article and how he came to write it. In this interview he asserts:

By citation count, Blackmore’s book is the primary text of the popularized meme; the expansion of the bricolage presented in The Mind’s I, in turn made possible—and then endorsed for its “courage”—by Dawkins himself. Indeed, its publication is why the date range in my article runs until 1999 when it could easily have extended further. The peer-reviewed Journal of Memetics was still actively publishing at that time, the secondary literature was growing by leaps and bounds, Dawkins’ The God Delusion and Dennett’s Breaking the Spell had not yet been published, etc.

Briefly put, though, I think a case can be made that the popularized view of memes satisfies the criteria for what Lakatos referred to as a degenerating research program. This is supported by a number of observations beyond the sequence of three different meanings. For example: the journal failed in 2005, there are no graduate programs now training students specifically in memetics, and the contemporary meaning of the term—relating to cat pictures with funny captions—is in no way related to the original source.

I agree with Burman that the popular view is "a degenerating research program," which is one reason I eventually decided to drop the term in my own work. If you're interested in this business, by all means read Burman's interview.

But that's not the main reason why I'm linking to it. The last part of the interview is about interdisciplinarity, where he says:

Although I accept that the word is used, I’m not fully convinced that “interdisciplinarity” actually exists either. What we actually have is a kind of openness that has been institutionalized to a greater or lesser degree in individual departments, faculties, and schools. And that’s a good thing. But it’s not identical with the use of the word; it requires action, support, and sustained effort—by people.

One important driver of this institutionalized interdisciplinarity is a good and careful editor who is supported by good and careful reviewers. This makes it possible for scholars in more conservative places to produce texts—perhaps even on their own time—that are deemed by a recognized disciplinary institution (the journal) to be of sufficient quality to merit continued recognition and support. In this way, new niches can then be constructed.

Misunderstandings are inevitable when moving across boundaries, but openness can serve as a shield against this too. It simply requires good faith on all sides. And the careful appreciation of different perspectives.

If reviewers assume that something doesn’t make sense to them because it’s bad, then rejections will result (false negatives). This is the opposite of openness; risk aversion run amok. The danger, though, is on the other side: if an author acts in bad faith, and submits rubbish that nonetheless hits the right notes, then some material will be published that shouldn’t be (false positives).

I don’t know how to strike the right balance, unfortunately, except to do as my father has always suggested: “walk softly and carry a big committee.” If you ensure that you have access to a diversity of voices, and empower everyone so that they’re properly heard, then the odds are much better that someone will flag a potential problem before it becomes an embarrassment.

Yes.

Monday, March 27, 2017

Buffy Meets The Donald

In honor of the 20th anniversary of Buffy the Vampire Slayer, the NYTimes has an article about Buffy fanfic. Here's a passage from one of the pieces they feature, "Buffy vs. Donald" by Terri Meeker (aka Piddinhead):

She’d thought the tower had been garish so far, but this enormous room made her redefine the word. The walls were covered in the same gold finish, but more portraits of Drumpf were packed onto every square inch. These were even larger than the ones along the staircase—some ten feet high. Oversized chandeliers crowded the high ceiling, so many that it looked like a lighting fixture store. Perched near gold-tinted sofas and chairs were gold-encrusted tables laden with gigantic candelabras and huge crystal figures.

Dominating the entire room, in dead center, was a statue of Donald Drumpf himself. It was thirty feet high and made of gold, naturally. The statue was illuminated by spotlights and dozens of American flags encircled it.

“Scrooge McDuck called,” Buffy mumbled. “He thinks you should dial it back a notch.”

“Hello there.” A male voice spoke through the maze of gold and crystal. “Did you get lost, little lady?”

Buffy strode toward the sound. “Not lost. I came for you, Mr. Mayor.”

She rounded a seven-foot vase and saw him. Donald Drumpf, seated behind an enormous desk. In person he was smaller than she expected. He wore a simple black suit, American flag tie and his orange hair was styled in his trademark comb-over. Behind him was a row of television monitors, some tuned to different TV stations, others monitoring his tower.

Donald smiled and stood, then made his way around the desk. “I’ve been watching your approach.”

Buffy crossed her arms. “Aren’t you going to ask what I’m doing here?”

He sniffed. “You’re attracted to me. You can’t help it. All women are.”

“That’s not it. I’m here to kick your—”

“My tower is very, very amazing,” Donald said, completely talking over her. “But I’m sure you noticed that coming in. Everybody says so, believe me. Thousands of people. Millions of people.” He reached into his trouser pocket and fiddled with something. She couldn’t help but notice that he had freakishly small hands. They were the size of quarters, but orange, with inch-worms for fingers.

“Not here about the décor,” Buffy said. “Though you really should give out shades to visitors because all this—”

“And my statue?” Donald interrupted again. “You couldn’t miss my statue. It’s very, very phenomenal.”

“Yeah, uh …” Buffy cut in. “But I’m here about—”

Saturday, March 25, 2017

Documentary about Lee Morgan

Lee Morgan is one of my favorite trumpet players. When he was in his early thirties he was shot by his common-law wife. A. O. Scott reviews Kasper Collin's documentary in the NYTimes:

“I Called Him Morgan,” a suave and poignant documentary by Kasper Collin, dusts off the details of Morgan’s life and death and brushes away the sensationalism, too. This is not a lurid true-crime tale of jealousy and drug addiction, but a delicate human drama about love, ambition and the glories of music. Edged with blues and graced with that elusive quality called swing, the film makes generous and judicious use of Morgan’s recordings. The scarcity of film clips and audio of Morgan’s voice is made up for by vivid black-and-white photographs and immortal tracks from the Blue Note catalog.

Thursday, March 23, 2017

Department of WTF! Mysteries of memetics edition

Yesterday, with 5,725 views, this was the most viewed photo at my Flickr site:

I'd uploaded it on August 18, 2012, so it's been online for four and a half years, though it reached the top some time ago, I forget just when. It's a good photo. I like it. But I don't really know why it's so popular.

But it's no longer top dog. At 5,730 views, this one is:

I uploaded it on January 14, 2017, a little over two months ago. Obviously enough, it's not a photo, nor is it mine. I forget just where I found it, but I'd uploaded it so I could link it elsewhere on the web.

Of course, there's little mystery about why it's so popular. It's a topical political cartoon that's very relevant to the current situation.

How many more views will it get? Will any of my photos ever get more views than this one? Who knows.

Wednesday, March 22, 2017

Working for 45

Jack Goldsmith has an interesting article at Lawfare: How Hard Is It to Work for President Trump? The problem arises, of course, because Trump spews forth an endless stream of lies, bullshit, and mere exaggerations. Goldsmith notes, "Most senior Executive branch officials see themselves to work for both the President and the nation (or the American people)." With a man like Trump, just how does that go?

Of Comey (FBI) and Rogers (NSA) Goldsmith notes:

...they are both non-political appointees who lead agencies with missions largely independent of the White House. They likely see their jobs as detached from the goings-on in the White House, except to the extent that the White House becomes caught up in the FBI investigation of Russian interference in the election. I also expect that Comey and Rogers believe it is important to stay in their jobs out of commitment to the agencies they serve, and in order to minimize our unconventional President’s damage to their agencies and to national security more generally.

Mattis (Defense) and Kelly (Homeland) are likely similar.

And then we have 45's staff, such as Priebus (Chief of Staff) and McGahn (WH Counsel):

... much of the work of these officials amounts to little more than enabling and protecting the President, personally and politically. That becomes a problem when the enabling and protecting comes in the service of mendacity or in a way inextricable from mendacity.

A good deal of the daily work by these officials in the White House, in other words, is a lower-key version of the work of Sean Spicer, who compromises himself daily in order to prop up the president’s lies and destructive actions. I imagine that these officials have the hardest time telling themselves (and others) a story about why their services are needed to minimize the damage Trump is causing, for these are the officials whose jobs are largely devoted to empowering the President...These jobs will likely grow harder and harder if the Trump presidency continues to accomplish so little, especially if the FBI investigations begin to absorb White House political and legal attention. And these are the jobs about which it will be harder to explain later why one continued in the job after it was clear that the President one worked so hard to support was so unworthy of his office.

Monday, March 20, 2017

On the nature of biology as an intellectual enterprise

Ashutosh Jogalekar, Why Technology Won't Save Biology, over at 3 Quarks Daily:

There have been roughly six revolutions in biology during the last five hundred years or so that brought us to this stage. The first one was the classification of organisms into binomial nomenclature by Linneaus. The second was the invention of the microscope by Hooke, Leeuwenhoek and others. The third was the discovery of the composition of cells, in health and disease, by Schwann and Schleiden, a direct beneficiary of the use of the microscope. The fourth was the formulation of evolution by natural selection by Darwin. The fifth was the discovery of the laws of heredity by Mendel. And the sixth was the discovery of the structure of DNA by Watson, Crick and others. The sixth [seventh?], ongoing revolution could be said to be the mapping of genomes and its implications for disease and ecology. Two other minor revolutions should be added to this list; one was the weaving of statistics into modern genetics, and the second was the development of new imaging techniques like MRI and CT scans.

These six revolutions in biology resulted from a combination of new ideas and new tools.

However, today:

In one way biology has become a victim of its success. Today we can sequence genomes much faster than we can understand them. We can measure electrochemical signals from neurons much more efficiently than we can understand their function. We can model the spread of populations of viruses and populations much more rapidly than we can understand their origins or interactions. Moore's Law may apply to computer chips and sequencing speeds, but it does not apply to human comprehension. In the words of the geneticist Sydney Brenner, biology in the heyday of the 50s used to be "low input, low throughput, high output"; these days it's "low input, high throughput, no output". What Brenner is saying is that compared to the speed with which we can now gather and process biological data, the theoretical framework which goes into understanding data as well as the understanding which come out from the other end are severely impoverished. What is more serious is a misguided belief that data equals understanding. The philosopher of technology Evgeny Morozow calls this belief "technological solutionism", the urge to use a certain technology to address a problem simply because you can.

Reductionism worked well for physics and chemistry, but not so effectively for biology.

Emergence is what thwarts the understanding of biological systems through technology, because most technology used in the biological sciences is geared toward the reductionist paradigm. Technology has largely turned biology into an engineering discipline, and engineering tells us how to build something using its constituent parts, but it doesn't always tell us why that thing exists and what relationship it has to the wider world. The microscope observes cells, x-ray diffraction observes single DNA molecules, sequencing observes single nucleotides, and advanced MRI observes single neurons. As valuable as these techniques are, they will not help us understand the top-down pressures on biological systems that lead to changes in their fundamental structures.

Saturday, March 18, 2017

Friday, March 17, 2017

Numbers as cultural tools

Craig Fehrman over at FiveThirtyEight has an interview with Caleb Everett, author of Numbers and the Making of Us: Counting and the Course of Human Cultures.

Caleb Everett: My suspicion is that there were many, many times in history when people realized in an ephemeral way that this quantity is the same as that quantity — that this five, in terms of their fingers, is the same as that five, in terms of goats or sheep. It’s no coincidence that many unrelated languages have a numerical structure built around 10 or that the word for five is often the same as the word for hand. Once someone else heard you referring to something as a “hand” of things, it became a cognitive tool that could be passed around and preserved within a particular culture.

Craig Fehrman: Once a particular culture has numbers, what does that allow?

CE: The way our cultures look, and the kinds of technology we have, would be radically different without numbers. Large nation-states aren’t really possible without numbers. Large agricultural societies aren’t possible, either.

Let’s say that two agricultural states in Mesopotamia, more than 5,000 and maybe as many as 8,000 years ago, wanted to trade with each other. To trade precisely, and we can see this in the archaeological record, they needed to quantify. So they cooked up these small clay tokens, with each token representing a certain quantity of a certain commodity like grain or beer. The tokens were then cooked inside a clay vessel that could be transported and cracked open. It was essentially a contract — you owe me this many whatever.

If a culture lacks a number system:

CE: [...] And a few languages and other communications systems, like the Pirahã’s in Brazil, have only imprecise words like hói [one or a couple] and hoí [a few]. Many experiments have shown that without numbers, the Pirahã struggle with basic quantitative tasks. They have a hard time matching one set of objects to another set of objects — lining up, say, eight spools of thread next to eight balloons. It gets even harder when they have to recall an exact quantity later.

It’s important to stress that these people are totally normal and totally intelligent; if you took a Pirahã person and raised them in a Portuguese home, they would learn numbers just fine. But without recourse to a number system, they struggle with counting. It’s an example of how powerful these cognitive tools can be.

Wednesday, March 15, 2017

Tuesday, March 14, 2017

Universal emotions no more?

Lisa Barrett just published a book, How Emotions Are Made: The Secret Life of the Brain. Tyler Cowen just published his reactions to it, including:

2. According to Barrett, the expressions of human emotions are better understood as being socially constructed and filtered through cultural influences: “‘Are you saying that in a frustrating, humiliating situation, not everyone will get angry so that their blood boils and their palms sweat and their cheeks flush?’ And my answer is yes, that is exactly what I am saying.” (p.15) In reality, you are as an individual an active constructor of your emotions. Imagine winning a big sporting event, and not being sure whether to laugh, cry, scream, jump for joy, pump your fist, or all of the above. No one of these is the “natural response.”

3. Immigrants eventually acculturate emotionally into their new societies, or at least one hopes: “Our colleague Yulia Chentsova Dutton from Russia says that her cheeks ached for an entire year after moving to the United States because she never smiled so much.” (p.149)

3d. From her NYT piece: “My lab analyzed over 200 published studies, covering nearly 22,000 test subjects, and found no consistent and specific fingerprints in the body for any emotion. Instead, the body acts in diverse ways that are tied to the situation.”

So I'm publishing these remarks atop this old post from June 2013.

Here's a link to a website of supplemental information that accompanies Barrett's book.

* * * * *

Paul Ekman became famous for studies showing that humans experience a relatively small number of emotions that are the same across cultures. Psychologist Lisa Barrett thinks he's wrong. From an article about her work in The Boston Magazine:

... my emotions aren’t actually emotions until I’ve taught myself to think of them that way. Without that, I have only a meaningless mishmash of information about what I’m feeling. In other words, as Barrett put it to me, emotion isn’t a simple reflex or a bodily state that’s hard-wired into our DNA, and it’s certainly not universally expressed. It’s a contingent act of perception that makes sense of the information coming in from the world around you, how your body is feeling in the moment, and everything you’ve ever been taught to understand as emotion. Culture to culture, person to person even, it’s never quite the same. What’s felt as sadness in one person might as easily be felt as weariness in another, or frustration in someone else.

H/t Daniel Lende, who also published these links:

Lisa Barrett (2006), Are Emotions Natural Kinds? Perspectives in Psychological Science.

Lisa Barrett (2006), Solving the Emotion Paradox: Categorization and the Experience of Emotion. Personality and Social Psychology Review.

Kristen A. Lindquist, Tor D. Wager, Hedy Kober, Eliza Bliss-Moreau and Lisa Feldman Barrett (2012), The brain basis of emotion: A meta-analytic review. Behavioral and Brain Sciences.

Abstract: Researchers have wondered how the brain creates emotions since the early days of psychological science. With a surge of studies in affective neuroscience in recent decades, scientists are poised to answer this question. In this target article, we present a meta-analytic summary of the neuroimaging literature on human emotion. We compare the locationist approach (i.e., the hypothesis that discrete emotion categories consistently and specifically correspond to distinct brain regions) with the psychological constructionist approach (i.e., the hypothesis that discrete emotion categories are constructed of more general brain networks not specific to those categories) to better understand the brain basis of emotion. We review both locationist and psychological constructionist hypotheses of brain–emotion correspondence and report meta-analytic findings bearing on these hypotheses. Overall, we found little evidence that discrete emotion categories can be consistently and specifically localized to distinct brain regions. Instead, we found evidence that is consistent with a psychological constructionist approach to the mind: A set of interacting brain regions commonly involved in basic psychological operations of both an emotional and non-emotional nature are active during emotion experience and perception across a range of discrete emotion categories.

Paul Ekman and Daniel Cordaro (2010), What Is Meant by Calling Emotions Basic. Emotion Review.

Abstract: Emotions are discrete, automatic responses to universally shared, culture-specific and individual-specific events. The emotion terms, such as anger, fear, etcetera, denote a family of related states sharing at least 12 characteristics, which distinguish one emotion family from another, as well as from other affective states. These affective responses are preprogrammed and involuntary, but are also shaped by life experiences.

Full articles are behind a pay wall.

Comment: I've known of Ekman's work for some time, and liked it. But I have no deep investment in it; that is, I'm not committed to any ideas that depend on the existence of a small number of universal emotions (though my advocacy of Manfred Clynes might suggest otherwise). Barrett's work sounds plausible on the face of it, but I've not read her technical papers.

Monday, March 13, 2017

Journey to 3Tops: Indiana SLuGS and the Land that Time Forgot

Here's a piece from several years ago. Now that I'm involved in The Bergen Arches Project I've decided to bump it to the top of the queue. The graffiti 'landscape' has changed since then, and I've made some notations about that. "WAAGNFNP" is, of course, the We Are All Nuclear Fireball Now Party, which I've written about a bit earlier, and 3Tops is the Party's enforcer.

About two weeks ago (I'm writing this on 23 July 2007) I was checking my Flickr account to see if anyone had commented on any of my photos. I hit pay dirt. One PLASMA SLuGS (red ribbon for WAR) had made the following comment on one of my graffiti photographs: “please if u dont mind tell me where n how to get here.” Bingo!

As some of you party loyalists may know, I've been photographing local graffiti since last fall. The visage of our fine and noble 3Tops is, in fact, one of the pieces I'd found, not to mention other WAAGNFNP notables, such as Toothy. Graffiti, however, is generally illegal, and the people who do it don't leave contact information on the walls. Thus, while I now have hundreds, thousands even, of photographs of graffiti within a mile or so of my apartment, I don't know who painted them. And, since I have no roots in the area, I've got no social network through which I can track them down.

That's one of the reasons I'd started posting my photos to Flickr. I figured that some of the writers (a term of art) would see them and perhaps, one day, one of them would contact me about them. SLuGS is the first.

Of course, I told him where the picture was taken - in Jersey City, about a mile in from the Holland Tunnel near the old Long Dock Tunnel. I also offered to take him on a tour of the local graffiti. He took me up on my offer and showed up that Sunday afternoon with a woman he introduced as his wife, a backpack full of spray paint, and a Canon single-lens reflex camera. I revved up Google Earth and showed them where we were, where the graffiti is, and off we went, with the intention of going into the Erie Cut.

On the way there SLuGS did a little painting, with both his wife and me snapping pictures:

[Photo caption: Still there, though faded a bit.]

That's the SLuG, the identifying mark he uses instead of the name that most writers use. It's painted on the base of one of the columns supporting I-78 as it comes down off the Jersey Heights (or the Jersey Palisades) and feeds into the Holland Tunnel. He's done thousands of these here and there, mostly I'd guess in the New York City area, but other places as well. He's been to Amsterdam and he's made cooperative arrangements to get the PLASMA SLuG up all over.

Saturday, March 11, 2017

The role of Navajo women in the early semiconductor industry

I learned that from 1965-1975 the Fairchild Corporation’s Semiconductor Division operated a large integrated circuit manufacturing plant in Shiprock, New Mexico, on a Navajo reservation. During this time the corporation was the largest private employer of Indian workers in the U.S. The circuits that the almost entirely female Navajo workers produced were used in devices such as calculators, missile guidance systems, and other early computing devices. [...] How and why did the most advanced semiconductor manufacturer in the world build a state of the art electronics assembly plant on a Navajo reservation in 1965? The short answer is: cheap, plentiful, skillful workers, and tax benefits. A 1969 Fairchild News Release explains that the plant was “the culmination of joint efforts of the Navajo People, the U.S. Bureau of Indian Affairs (B.I.A), and Fairchild.” Navajo leadership helped to push this project forward; Raymond Nakai, chairman of the Navajo Nation from 1963 to 1971, and the self-styled first “modern” Navajo leader, was instrumental in bringing Fairchild to Shiprock. He spoke fervently about the necessity of transforming the Navajo as a “modern” Indian tribe, and what better way to do so than to put its members to work making chips, potent signs of futurity that were no bigger than a person’s fingernail? [...]

The idea that Navajo weavers are ideally suited, indeed hard-wired, to craft circuit designs onto either yarn or metal appeals to a romantic notion of what Indians are and the role that they play in U.S. histories of technology.

This experiment in bringing the high tech electronics industry to the Navajo Reservation at Shiprock ended abruptly. In 1975, protesters associated with the American Indian Movement occupied and shut down the plant, demanding better conditions for workers. Indeed, the industry had experienced a slow down and some workers had been laid off. Though this was part of a national trend and not unique to the Shiprock plant, given the national social context of protest against racism and civil rights violations, it is not surprising that this occupation followed on the heels of AIM’s stand-off at Wounded Knee and the occupation of Alcatraz in California. The protesters viewed the plant as a continuation of the exploitation of native people, and they were correct that the Shiprock area had suffered from economic hardship for many years, hardship that was directly related to the long-standing disempowerment and impoverishment of the Navajo. It was difficult for them to perceive these layoffs as anything but more of the same. The protesters left after a week, but by then Fairchild had already decided to close the plant permanently, focusing instead on its operations in Asia.

Tuesday, March 7, 2017

Monday, March 6, 2017

Trump: The Man, the Oath, the Presidency

In the course of a discussion over at Marginal Revolution, prior_test2 linked to a most interesting post at Lawfare by Benjamin Wittes and Quinta Jurecic entitled “What Happens When We Don’t Believe the President’s Oath?” As the title suggests, it centers on the President’s Oath of Office, which is quite brief: “I do solemnly swear . . . that I will faithfully execute the office of President of the United States, and will to the best of my ability, preserve, protect and defend the Constitution of the United States.” Their point is that it is this oath that links the private individual, Donald J. Trump, to the public office, President of the United States, and both binds him to that office and separates his private interests and concerns from that office.

Alas:

There’s only one problem with Trump’s eligibility for the office he now holds: The idea of Trump’s swearing this or any other oath “solemnly” is, not to put too fine a point on it, laughable—as more fundamentally is any promise on his part to “faithfully” execute this or any other commitment that involves the centrality of anyone or anything other than himself.

Indeed, a person who pauses to think about the matter has good reason to doubt the sincerity of Trump’s oath of office, or even his capacity to swear an oath sincerely at all. We submit that huge numbers of people—including important actors in our constitutional system—have not even paused to consider it; they are instinctively leery of Trump’s oath and are now behaving accordingly.

And so:

...the presidential oath is actually the glue that holds together many of our system’s functional assumptions about the presidency and the institutional reactions to it among actors from judges to bureaucrats to the press. When large enough numbers of people within these systems doubt a president’s oath, those assumptions cease operating. They do so without anyone’s ever announcing, let alone ruling from the bench, that the President didn’t satisfy the Presidential Oath Clause and thus is not really president. They just stop working—or they work a lot less well.

By way of clarification:

There’s a big, if somewhat ineffable, difference between opposing a president and not believing his oath of office. All presidents face opposition, some of it passionate, extreme, and delegitimizing. All presidents face questions about their motives and integrity. Hating the President is a very old tradition, and many presidents face at least some suggestion that their oaths do not count. Obama, after all, was a foreign-born Muslim to his most extreme detractors.

Yet, for a variety of reasons we discuss below, there is something different about the questions about Trump’s oath, and it is how widespread and mainstream the anxiety is. It’s also, and we want to be frank about this, how reasonable the anxiety is when applied to a man whose word one cannot take at face value on the prepolitical trust the oath represents. That person does not get certain presumptions our system normally attaches to presidential conduct.

And so we have:

On the contrary, the belief that there is something different about this president is extraordinarily common among sober commentators of both the left and right—and, even more notably, among many of those with significant experience in government in both career and political roles.

So why the doubts? For one thing, Trump’s highly erratic statements and behavior include any number of incidents that seem to reflect a lack of understanding of his office or its weight. It’s not just the tweets. This is a person, after all, who suggested before the election that he might not even serve as president if he prevailed; who then said that he would accept the results of the election “if I win”; who made up a whole lot of voter fraud; who promised to prosecute his opponent; who, in his first public address following the inauguration, stood in front of the CIA’s Memorial Wall and bragged falsely about the number of people who attended his inaugural ceremony; who has made use of the immense power of the bully pulpit to publicly complain about the “unfair” decision by a private retail company to drop his daughter’s fashion line; who approached the process of selecting a Secretary of State, National Security Advisor, and nominee to the Supreme Court in a manner more fit for reality television than the office of the presidency; who refused to accept responsibility for the death of a Navy SEAL in a botched counterterrorism raid conducted on his orders, claiming instead that “this was something they [the generals] wanted to do,” before standing in front of Congress and apparently ad-libbing that the SEAL was looking down with happiness from Heaven because Trump received extended applause during his address; and whose most memorable quotation coming out of the presidential campaign was the inimitable, “Grab ‘em by the pussy”—a phrase he then dismissed as “locker room talk.”

Moral seriousness and respect for his office just isn’t his thing.

This is also a person whose campaign was rife with promises to commit crimes and to abuse the powers of the very office for which he then took the oath.

The combination of the sprawling nature of his business and the intentional obscurity of his finances is also a factor.

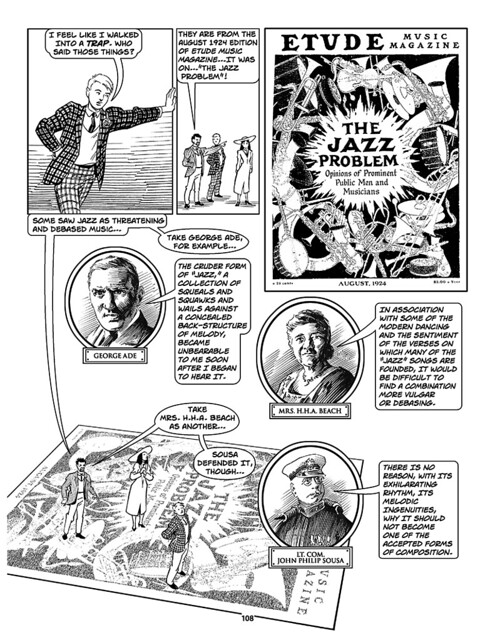

Theft! A History of Music

From Duke University's Center for the Study of the Public Domain:

We are proud to announce the publication of Theft! A History of Music, a graphic novel laying out a 2000 year long history of musical borrowing from Plato to rap. The comic, by James Boyle, Jennifer Jenkins and the late Keith Aoki, is available as a handsome 8.5 x 11″ paperback, and for free download under a Creative Commons license.

About the Book

This comic lays out 2000 years of musical history. A neglected part of musical history. Again and again there have been attempts to police music; to restrict borrowing and cultural cross-fertilization. But music builds on itself. To those who think that mash-ups and sampling started with YouTube or the DJ’s turntables, it might be shocking to find that musicians have been borrowing—extensively borrowing—from each other since music began. Then why try to stop that process? The reasons varied. Philosophy, religion, politics, race—again and again, race—and law. And because music affects us so deeply, those struggles were passionate ones. They still are.

The history in this book runs from Plato to Blurred Lines and beyond. You will read about the Holy Roman Empire’s attempts to standardize religious music with the first great musical technology (notation) and the inevitable backfire of that attempt. You will read about troubadours and church composers, swapping tunes (and remarkably profane lyrics), changing both religion and music in the process. You will see diatribes against jazz for corrupting musical culture, against rock and roll for breaching the color-line. You will learn about the lawsuits that, surprisingly, shaped rap. You will read the story of some of music’s iconoclasts—from Handel and Beethoven to Robert Johnson, Chuck Berry, Little Richard, Ray Charles, the British Invasion and Public Enemy.

To understand this history fully, one has to roam wider still—into musical technologies from notation to the sample deck, aesthetics, the incentive systems that got musicians paid, and law’s 250 year struggle to assimilate music, without destroying it in the process. Would jazz, soul or rock and roll be legal if they were reinvented today? We are not sure. Which as you will read, is profoundly worrying because today, more than ever, we need the arts.

All of this makes up our story. It is assuredly not the only history of music. But it is definitely a part—a fascinating part—of that history. We hope you like it.

You can read the book online, download a free PDF, or buy a paperback.

Here's a sample page:

Saturday, March 4, 2017

Once again, language universals

Cathleen O'Grady on Ars Technica:

Language takes an astonishing variety of forms across the world—to such a huge extent that a long-standing debate rages around the question of whether all languages have even a single property in common. Well, there’s a new candidate for the elusive title of “language universal” according to a paper in this week’s issue of PNAS. All languages, the authors say, self-organise in such a way that related concepts stay as close together as possible within a sentence, making it easier to piece together the overall meaning. [...]

A lot has been written about a tendency in languages to place words with a close syntactic relationship as closely together as possible. Richard Futrell, Kyle Mahowald, and Edward Gibson at MIT were interested in whether all languages might use this as a technique to make sentences easier to understand.

The idea is that when sentences bundle related concepts in proximity, it puts less of a strain on working memory. For example, adjectives (like “old”) belong with the nouns that they modify (like “lady”), so it’s easier to understand the whole concept of “old lady” if the words appear close together in a sentence.

The original research:

Large-scale evidence of dependency length minimization in 37 languages

Richard Futrell, Kyle Mahowald, and Edward Gibson

PNAS August 18, 2015 vol. 112 no. 33 10336-10341

doi: 10.1073/pnas.1502134112

Significance

We provide the first large-scale, quantitative, cross-linguistic evidence for a universal syntactic property of languages: that dependency lengths are shorter than chance. Our work supports long-standing ideas that speakers prefer word orders with short dependency lengths and that languages do not enforce word orders with long dependency lengths. Dependency length minimization is well motivated because it allows for more efficient parsing and generation of natural language. Over the last 20 y, the hypothesis of a pressure to minimize dependency length has been invoked to explain many of the most striking recurring properties of languages. Our broad-coverage findings support those explanations.

Abstract

Explaining the variation between human languages and the constraints on that variation is a core goal of linguistics. In the last 20 y, it has been claimed that many striking universals of cross-linguistic variation follow from a hypothetical principle that dependency length—the distance between syntactically related words in a sentence—is minimized. Various models of human sentence production and comprehension predict that long dependencies are difficult or inefficient to process; minimizing dependency length thus enables effective communication without incurring processing difficulty. However, despite widespread application of this idea in theoretical, empirical, and practical work, there is not yet large-scale evidence that dependency length is actually minimized in real utterances across many languages; previous work has focused either on a small number of languages or on limited kinds of data about each language. Here, using parsed corpora of 37 diverse languages, we show that overall dependency lengths for all languages are shorter than conservative random baselines. The results strongly suggest that dependency length minimization is a universal quantitative property of human languages and support explanations of linguistic variation in terms of general properties of human information processing.
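To make "dependency length" concrete, here is a minimal Python sketch of my own; it is not the authors' code. It sums the distance between each word and its syntactic head, then compares that total with the average over randomly shuffled word orders. Two assumptions to flag: the toy parse of "the old lady crossed the street" is hand-made for illustration, and plain random shuffling is a much cruder baseline than the conservative random baselines the paper describes.

```python
import random


def total_dependency_length(heads, order=None):
    """Sum of |position(word) - position(head)| over all non-root words.

    heads[i] is the index of word i's head, or None for the root.
    order[i] is the linear position of word i (defaults to i).
    """
    if order is None:
        order = list(range(len(heads)))
    return sum(
        abs(order[i] - order[h])
        for i, h in enumerate(heads)
        if h is not None
    )


def shuffled_baseline(heads, samples=1000, seed=0):
    """Mean dependency length over random word orders (a crude baseline)."""
    rng = random.Random(seed)
    total = 0
    for _ in range(samples):
        order = list(range(len(heads)))
        rng.shuffle(order)  # scramble positions, keep the tree itself fixed
        total += total_dependency_length(heads, order)
    return total / samples


# Hypothetical hand-made parse: each word points at its head.
words = ["the", "old", "lady", "crossed", "the", "street"]
heads = [2, 2, 3, None, 5, 3]  # "crossed" is the root

observed = total_dependency_length(heads)
baseline = shuffled_baseline(heads)
print(observed, baseline)
```

In this toy case the real word order comes out shorter than the shuffled average, which is the shape of the claim the paper tests at scale across 37 parsed corpora.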

Friday, March 3, 2017

What about all those AI assistants kids interact with these days?

“How they react and treat this nonhuman entity is, to me, the biggest question,” said Sandra Calvert, a Georgetown University psychologist and director of the Children’s Digital Media Center. “And how does that subsequently affect family dynamics and social interactions with other people?”

With an estimated 25 million voice assistants expected to sell this year at $40 to $180 — up from 1.7 million in 2015 — there are even ramifications for the diaper crowd.

Toy giant Mattel recently announced the birth of Aristotle, a home baby monitor launching this summer that “comforts, teaches and entertains” using AI from Microsoft. As children get older, they can ask or answer questions. The company says, “Aristotle was specifically designed to grow up with a child.”

Boosters of the technology say kids typically learn to acquire information using the prevailing technology of the moment — from the library card catalogue, to Google, to brief conversations with friendly, all-knowing voices. But what if these gadgets lead children, whose faces are already glued to screens, further away from situations where they learn important interpersonal skills?

For example:

“We like to ask her a lot of really random things,” said Emerson Labovich, a fifth-grader in Bethesda, Md., who pesters Alexa with her older brother Asher. [...]

Yarmosh’s 2-year-old son has been so enthralled by Alexa that he tries to speak with coasters and other cylindrical objects that look like Amazon’s device. Meanwhile, Yarmosh’s now 5-year-old son, in comparing his two assistants, came to believe Google knew him better.

Etiquette?

In a blog post last year, a California venture capitalist wrote that his 4-year-old daughter thought Alexa was the best speller in the house. “But I fear it’s also turning our daughter into a raging a------,” Hunter Walk wrote. “Because Alexa tolerates poor manners.”

To ask her a question, all you need to do is say her name, followed by the query. No “please.” And no “thank you” before asking a follow-up.

“Cognitively I’m not sure a kid gets why you can boss Alexa around but not a person,” Walk wrote. “At the very least, it creates patterns and reinforcement that so long as your diction is good, you can get what you want without niceties.”

Thursday, March 2, 2017

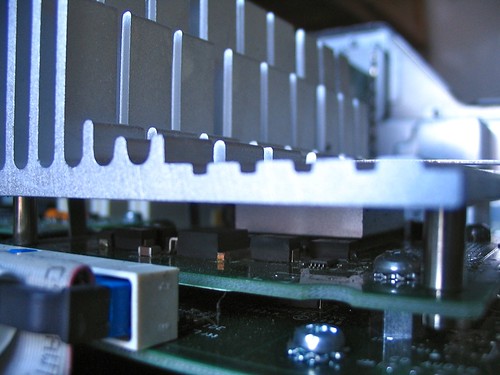

The physicality of computing

Intuitions are very important; they are the foundations of our thinking. But explaining or even merely “grasping” our intuitions is difficult. This is a story about my intuitions about computing. Lightly edited from a recent email:

My programming skills are minimal; I’ve never done more than small example programs, and that was long ago. These days I hand-code my posts, but that’s not programming.

But I’ve spent a lot of time thinking about computing. Back in the 1970s I studied computational semantics under David Hays, who was one of the first-generation workers in machine translation. One of the things he impressed on me was that computation, real computation, is a physical process and thus subject to physical constraints. Too much talk about computation and information and the like treats them as immaterial substances, Cartesian res cogitans. Back in those days I bought a textbook on microprocessor design and read through several chapters. Of course what I got out of the exercise was not very deep, but it wasn’t trivial either.

Eventually I bought my first microcomputer, a North Star Horizon (this was before the days of the IBM PC). And I got a content-addressed memory board manufactured by a small (and now defunct) company started by Sidney Lamb (another first-generation MT researcher). One day the display on my computer went kerflooey (not exactly a technical term). Well, I knew that video-display boards had a synch-generator chip and it seemed to me that the problems I was having might have been caused by trouble with that chip. So I examined the circuit diagram for the video board and located the synch-generator. And then I opened the box, removed the board, located the synch-generator, and reseated it. When I replaced the board and turned on the machine, the display was working fine. I’d guessed right.

That was years ago but I still remember it. Why? Because that gave me a tangible sense of the physicality of this information processing stuff. And I think all features of the story are important:

1) reading a text on microprocessor design,
2) somewhere reading about synch-generators,
3) having a problem with my machine and guessing about its nature,
4) consulting a circuit diagram of one board in my machine,
5) removing the board,
6) locating the chip on it,
7) reseating the chip,
8) reassembling the machine, and
9) testing it.

It’s all part of the same story. And that’s a story that informs my sense of the physicality of computing. I had to think about what I was doing at every step along the way.

That’s very different from simply believing that whatever happens in your computer is physical because, well, what else could it be? But I don’t know just how I’d characterize the knowledge I got from that experience. It’s not abstract. I hesitate to say that it’s deep or profound. But it’s very very real.