Michael Gavin recently published a fascinating article in Critical Inquiry, Vector Semantics, William Empson, and the Study of Ambiguity [1], one that developed a bit of a buzz in the Twitterverse.

this is the essay pretty much everyone in cultural analytics/DH has been looking for— Richard Jean So (@RichardJeanSo) June 14, 2018

And stay for the fireworks at the very end! "…polemic of the kind that typically surrounds the digital humanities, most of which fetishizes computer technology in ways that obscure the scholarly origins …" https://t.co/tr1RiFNhVu— Ted Underwood (@Ted_Underwood) June 14, 2018

In the wake of such remarks I really had to read the article, which I did. And I had some small correspondence with Gavin about it.

I liked the article a lot. But I was thrown off balance by two things, his use of the term “computational semantics” and a hole in his account of machine translation (MT). The first problem is easily remedied by using a different term, “statistical semantics”. The second could probably be dealt with by the addition of a paragraph or two in which he points out that, while early work on MT failed and so was defunded, it did lead to work in computational semantics of a kind that’s quite different from statistical semantics, work that’s been quite influential in a variety of ways.

In terms of Gavin’s immediate purpose in his article, however, those are minor issues. But in a larger scope, things are different. And that is why I’m writing these posts. Digital humanists need to be aware of and in dialog with that other kind of computational semantics.

Warren Weaver 1949

In this post and in the next I want to take a look at a famous memo Warren Weaver wrote in 1949. Weaver was director of the Natural Sciences division of the Rockefeller Foundation from 1932 to 1955. He collaborated Claude Shannon in the publication of a book which popularized Shannon’s seminal work in information theory, The Mathematical Theory of Communication. Weaver’s 1949 memorandum, simply entitled “Translation” [2], is regarded as the catalytic document in the origin of MT.

He opens the memo with two paragraphs entitled “Preliminary Remarks”.

There is no need to do more than mention the obvious fact that a multiplicity of language impedes cultural interchange between the peoples of the earth, and is a serious deterrent to international understanding. The present memorandum, assuming the validity and importance of this fact, contains some comments and suggestions bearing on the possibility of contributing at least something to the solution of the world-wide translation problem through the use of electronic computers of great capacity, flexibility, and speed.The suggestions of this memorandum will surely be incomplete and naïve, and may well be patently silly to an expert in the field - for the author is certainly not such.

But then there were no experts in the field, were there? Weaver was attempting to conjure a field of investigation out of nothing.

I think it important to note, moreover, that language is one of the seminal fields of inquiry for computer science. Yes, the Defense Department was interested in artillery tables and atomic explosions, and, somewhat earlier, the Census Bureau funded Herman Hollerith in the development of machines for data tabulation, but language study was important too.

Caveat: In this and subsequent posts, I assume the reader understands the techniques Gavin has used. I am not going to attempt to explain them; he does that well in his article.

Weaver on the problem of multiple meaning

Let’s skip ahead to the fifth section, entitled “Meaning and Context” (p. 8):

First, let us think of a way in which the problem of multiple meaning can, in principle at least, be solved. If one examines the words in a book, one at a time as through an opaque mask with a hole in it one word wide, then it is obviously impossible to determine, one at a time, the meaning of the words. "Fast" may mean "rapid"; or it may mean "motionless"; and there is no way of telling which.But if one lengthens the slit in the opaque mask, until one can see not only the central word in question, but also say N words on either side, then if N is large enough one can unambiguously decide the meaning of the central word. The formal truth of this statement becomes clear when one mentions that the middle word of a whole article or a whole book is unambiguous if one has read the whole article or book, providing of course that the article or book is sufficiently well written to communicate at all.The practical question is, what minimum value of N will, at least in a tolerable fraction of cases, lead to the correct choice of meaning for the central word?This is a question concerning the statistical semantic character of language which could certainly be answered, at least in some interesting and perhaps in a useful way.

Weaver goes on to address ambiguity – Gavin’s point of departure – in the next paragraph.

What I find interesting is that Weaver takes the point of view of someone reading a text. One is in the world at a certain place and time with a limited vantage point on a text. Weaver then imagines expanding that vantage point until it encompasses the entire text. One might also imagine reading the text through a window N characters wide, which Weaver does on the next page. Psycholinguistic investigation indicates that that is, in effect, what people do when reading and that is what computers do in developing the vector semantic models that interest Gavin.

The distributional hypothesis

Now let’s turn to Gavin’s article. He begins working his way toward vector semantics with a discussion of Zellig Harris–who, incidentally, was Chomsky’s teacher (pp. 651-652):

In 1954, Harris formulated what has since come to be known as the “distributional” hypothesis. Rather than rely on subjective judgments or dictionary definitions, linguists should model the meanings of words with statistics because “difference of meaning correlates with difference of distribution.” He explains,If we consider oculist and eye doctor we find that, as our corpus of actually occurring utterances grows, these two occur in almost the same environments. ... In contrast, there are many sentence environments in which oculist occurs but lawyer does not; e.g., I’ve had my eyes examined by the same oculist for twenty years, or Oculists often have their prescription blanks printed for them by opticians. It is not a question of whether the above sentence with lawyer substituted is true or not; it might be true in some situation. It is rather a question of the relative frequency of such environments with oculist and with lawyer.This passage reflects a subtle shift in Harris’s thinking. In Structural Linguistics he had proposed that linguists attend to distributional structure as a theoretical principle. Here the emphasis is slightly different. He says that distribution “correlates” to meaning. Oculist and eye doctor mean pretty much the same thing, and they appear in sentences with pretty much the same words. Rather than turn away from meaning, Harris here offers a statistical proxy for it.

A little reflection should tell you that Harris has much the same insight that Weaver had, but from a very different point of view.

Harris does not imagine his nose buried in the reading of a single text. Rather, he has a corpus of texts at his disposal and is comparing the contexts of different words, Those contexts are, in effect, Weaver’s window that extents N words on either side of the focal word. Harris isn’t trying to guess what any word means; he doesn’t really care about that, at least not directly. All he’s interested in is identifying neighbors: “difference of meaning correlates with difference of distribution.” One can count neighbors that without knowing what any words mean. That means that computers can carry out the comparison.

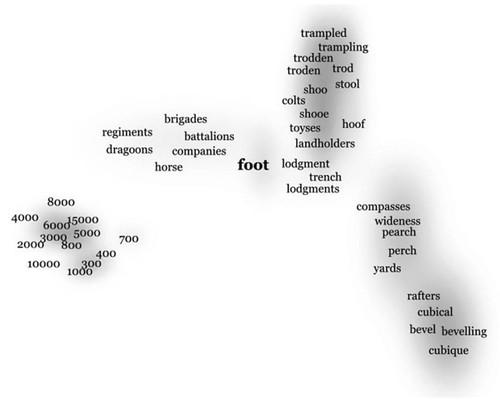

Consider Gavin’s Figure 7 (p. 662), depicting the neighborhood of “foot”:

The first thing that came to mind after I’d scanned it for a second or two is: “What are those numbers doing there? What do they have to do with feet?” Remember, the computer that did the work doesn’t know what any of the words mean, but I do. I understand well enough why “trampled”, “trod”, and “hoof” should turn up in the neighborhood of “foot” (upper right). Gavin explains (pp. 662-663):

In the seventeenth century, foot was invoked primarily in three contexts. Most commonly, it served in military discourse as a synecdoche for infantry, and so it scores highly similar to words like regiments, brigades, battalions, dragoons, and companies, while the cluster of number words near the bottom left suggest the sizes of these companies.

Ah, I see.

Now, indulge me a bit while I belabor the point. Let us start with some suitable corpus and encrypt every word in it with a simple cypher. I’m not interested in secret messages; I just want to disguise word identities. For example, we could replace each letter with the next one in the alphabet, and replacing “z” with “a”. Thus “apple” becomes “bqqmf”; “zinc” becomes “ajod”; “dog” becomes “eph” and “HAL” becomes “IBM”.

Now let’s run our vector semantics program on this encrypted corpus. The result is a Word Space, to use a term of art, that will have exactly the same high-dimensional geometry as we would get by running the program on the unencrypted corpus. Why? Because the corresponding tokens have the same distribution in the corpus. However, because the encrypted tokens are unintelligible, at least upon casual inspection, this Word Space is unusable to us.

The same thing would happen if we were to perform a topic analysis on an encrypted corpus. As you know, in this context, “topic” is a word of art meaning a list of words weighted by their importance in the topic. It is up to the analyst to inspect each topic and determine whether or not it is coherent, where “coherent” means something like, “is a topic in the ordinary sense of the word.” It often happens that some topics aren’t coherent in that sense. If we’re performing a topic analysis on an encrypted corpus, we have no way of distinguishing between a coherent and an incoherent topic. They’re all gibberish.

Why do I harp on this, I hope, obvious point? Because it is as important as it is obvious. But it’s not something we’re likely to have in mind when we look at work like Gavin’s, nor is it necessarily that we do so. But I’m making a methodological point.

And I’m preparing for the my next post. Wouldn’t it be useful to have a computational semantics that got at meaning in a more direct way? That’s what computational linguists were asking themselves in the late 1960s, investigators in artificial intelligence and psycholinguistics too. That is a very deep problem; progress has been made, while much remains obscure. Computational work began on the problem six decades ago and there is an ever so tentative pointer in the direction of that work in Weaver’s 1949 memo.

References

[1] Michael Gavin, Vector Semantics, William Empson, and the Study of Ambiguity, Critical Inquiry 44 (Summer 2018) 641-673: https://www.journals.uchicago.edu/doi/abs/10.1086/698174

Ungated PDF: http://modelingliteraryhistory.org/wp-content/uploads/2018/06/Gavin-2018-Vector-Semantics.pdf

[2] Warren Weaver, “Translation”, Carlsbad, NM, July 15, 1949, 12. pp. Online: http://www.mt-archive.info/Weaver-1949.pdf

Very interesting. I used Shannon's theory as the theoretical basis for the hypotheses in my master's thesis.

ReplyDeleteShannon's ideas have been mega-influential.

Delete