
Friday, August 31, 2018

Thursday, August 30, 2018

Post-critique & theory fatigue

Brown University's Pembroke Center for Teaching and Research on Women has announced the topic for its 2019-2020 Research Seminar, "The Question of Critique". Here's a paragraph:

Post-critique, for one, does not describe a unified field; it does however share a set of metaphors that underpin its project of “critiquing critique.” The idea of fatigue, in particular of theory fatigue, has functioned as an important component. A second term important to the project has been the critique of suspicion, which has taken place by the recuperation of Paul Ricoeur’s concept of the “hermeneutics of suspicion,” the latter used by the French philosopher to describe the critical projects of Marx, Nietzsche and Freud. The hermeneutics of suspicion has become the blanket term for what post-critical thinkers believe to be – in Rita Felski’s words – the limits of critique, this because dominated by what she claims is a misplaced paranoia. Post-critical thought and the reading practices it propounds rely on spatial metaphors in order to advocate a return to description: if the hermeneutics of suspicion digs deep under ground, post-critique advocates “surface reading” or “distant reading,” among other practices. Finally, and this returns us also to the idea of fatigue, post-critique proposes a move away from the prefix “de-“ (deconstruction, for example, or demystification or destabilization), which is viewed as destructive and exhausting, to the prefix “re.” The latter instead is perceived as an act of reparation and recuperation, whence we gain reparative reading practices that care for their objects of study. In this move, post-critical thinking also embraces a new form of ecological materialism.

This whole business of intellectual exhaustion ("fatigue") interests me as a phenomenon. Why have ideas stopped coming? Are ideas a limited resource?

I like a metaphor of geographical exploration. It's one thing to explore and chart the territory and another thing to settle there in frontier outposts. And there's a difference between precarious outposts and fully domesticated settlements with families, churches, schools, and so on. I figure fatigue sets in somewhere between two points: when outposts have been established in all regions and territories, so that, as far as anyone can tell, every kind of geography has been discovered, and when the last outposts start settling down into secure settlements. That seems to have happened in the mid-to-late 1990s in literary and cultural criticism.

The trick, of course, would be to move from this metaphor to an account of the cognitive-cultural phenomena in play. The theory of cultural ranks that David Hays and I have sketched out might be of use here. Ideas don't come out of thin air. They are constructed. There are only so many things one can construct from a given repertoire of available materials. When all the basic construction types have been identified, the territory has been sampled. Now we transition to elaborating on those types.

The future of anthropology

But this line of inquiry increases the conceptual strain on anthropology. Let’s state that thought like this: if anthropology was invented to bring the full force of modern rationality to bear on “primitive” societies and cultures, and this under conditions in which the division between the modern and the primitive was most concretely based in the division between reason and magic, what happens to anthropology once the hard division between magic and reason is undone (as it is here), and in the same breath the hard division between “primitive” and “modern” (once again) crumbles?

In this situation, anthropology itself, rather than being a vehicle of universal rationality, becomes an expression of a particular society and culture and, indeed, a performance somewhat like a magic performance in which partial, more or less ritually produced statements are passed off and sanctioned as legitimate objective knowledge.

This difficulty cannot be bypassed just by directing the “ethnographic gaze” indifferently toward all kinds of institutions and lifeways, “modern” or not, nor even by anthropology’s avowed acceptance that it too participates in the societies it studies rather than merely observes them. [...]

The solution to this problem, as Jones’s book also allows us to suppose, is to move away both from the discipline’s Boasian transformation and from Latour’s emphasis on hybridity and de-purification, so as to imagine it differently. [...]

Or, to put it more concretely, we are to accept that anthropology is a practice, based in training, in which lifeways are characteristically examined by experiencing them as if simultaneously from the inside and the outside, with a will, then, to describe their regularities, analogies, exclusions, moods, risks, challenges, and so on, ultimately for bureaucratic (i.e., academic) reasons and uses.

In such a view of anthropology, the discipline comes to be based not in reified reason and modernity but in presence and experience—in the capacity to live with one’s objects of attention and knowledge simultaneously as a participant and as a bearer of academic office, methods, and duties.

In this paradigm, anthropology can make claims to pursue globalized truths not just because its practices can be learned through training, but because universities and their disciplines are now, as a matter of fact, global, the world being divided into nation-states, almost all of which contain universities, and many of these train and hire ethnographers. Magic and reason have nothing to do with it.

Universal liberalism?

Where Egginton sees a threat to democracy in a polity insufficiently and unequally educated in the liberal tradition, Lukianoff and Haidt notice something unprecedented and a lot more frightening: a generation, including its most privileged and educated members — especially these members — that has been politically and socially “stunted” by a false and deepening belief in its own fragility. This is a generation engaged in a meritocratic “arms race” of epic proportions, that has racked up the most hours of homework (and screen time) in history but also the fewest ever of something so simple as unsupervised outdoor play. If that sounds trivial, it shouldn’t. “When adult-supervised activities crowd out free play, children are less likely to develop the art of association,” Lukianoff and Haidt write, along with other social skills central to the making of good citizens capable of healthy compromise. Worse, the consequences of a generation unable or disinclined to engage with ideas and interlocutors that make them uncomfortable are dire for society, and open the door — accessible from both the left and the right — to various forms of authoritarianism.

What both of these books make clear from a variety of angles is that if we are going to beat back the regressive populism, mendacity and hyperpolarization in which we are currently mired, we are going to need an educated citizenry fluent in a wise and universal liberalism. This liberalism will neither play down nor fetishize identity grievances, but look instead for a common and generous language to build on who we are more broadly, and to conceive more boldly what we might be able to accomplish in concert. Yet as the tenuousness of even our most noble and seemingly durable civil rights gains grows more apparent by the news cycle, we must also reckon with the possibility that a full healing may forever lie on the horizon.

And so we will need citizens who are able to find ways to move on despite this, without letting their discomfort traumatize or consume them. If the American university is not the space to cultivate this strong and supple liberalism, then we are in deep and lasting trouble.

Francis Fukuyama on identity politics

Louis Menand has doubts, in The New Yorker.

Q. You’re critical of how political struggles are framed as economic conflicts. In your view, it’s identity that better explains politics.

A. This theme goes back to Plato, who talked about a third part of the soul that demanded recognition of one’s dignity. That has morphed in modern times into identity politics. We think of ourselves as people with an inner self hidden inside that is denigrated, ignored, not listened to. A great deal of modern politics is about the demand of that inner self to be uncovered, publicly claimed, and recognized by the political system.

A lot of these recognition struggles flow out of the social movements that began to emerge in the 1960s involving African-Americans, women, the LGBT community, Native Americans, and the disabled. These groups found a home on the left, triggering a reaction on the right. They say: What about us? Aren’t we deserving of recognition? Haven’t the elites ignored us, downplayed our struggles? That’s the basis of today’s populism.

Q. Is there anything inherently problematic about minority groups’ demanding recognition?

A. Absolutely not. Every single one of these struggles is justified. The problem is in the way we interpret injustice and how we try to solve it, which tends to fragment society. In the 20th century, for example, the left was based around the working class and economic exploitation rather than the exploitation of specific identity groups. That has a lot of implications for possible solutions to injustice. For example, one of the problems of making poverty a characteristic of a specific group is that it weakens support for the welfare state. Take something like Obamacare, which I think was an important policy. A lot of its opponents interpreted it as a race-specific policy: This was the black president doing something for his black constituents. We need to get back to a narrative that’s focused less on narrow groups and more on larger collectivities, particularly the collectivity called the American people. [...]

Q. You believe identity politics can be steered toward a broader sense of shared identity. What role can universities play?

A. Universities have lost a sense of their role in training American elites about their own institutions. I’m really struck by this at Stanford. If you read through all the things Stanford thinks it’s doing, it’s global this and global that. We’re preparing students to be global leaders. It’s very hard to find any dedication to a mission of promoting constitutional government, rule of law, democratic equality, in our own country. I’m not saying every student should take an American-government class — that’s not going to work for a lot of reasons. But universities need to better prepare future leaders in our own country.

Wednesday, August 29, 2018

Star struck and broken down 3: The case of Paul de Man

Paul de Man was one of the most important "stars" in the firmament of literary Theory. He was born in Belgium in 1919 and immigrated to New York City in 1948. He became a first-generation deconstructionist in the late 1960s and went to Yale in 1970 where he became one of the so-called "Yale Mafia" of deconstructive critics (Jacques Derrida, J. Hillis Miller, and Geoffrey Hartman were the other three). He died in 1983. In the late 80s, however, a cache of his wartime journalism was discovered, and some of the articles were anti-Semitic. The result was a scandal in the academy. Did this history undermine the validity of his later academic work?

The tweet below, brought to my attention by Amardeep Singh, refers to John Guillory, Cultural Capital: The Problem of Literary Canon Formation (1993), which I have not read. Note the second sentence: "That scandal reveals...the fact that the charismatic persona of the master theorist is the vehicle for the dissemination of theory; otherwise the status of deconstructive theory could not rise or fall with the reputation of its master." How many literary critics were "stars" in that sense? Is literary criticism, as a discipline, particularly vulnerable to this charisma, to use Guillory's term?

Hey-we-should-all-be-reading-Cultural-Capital-again-take-5343404359 pic.twitter.com/Ybd2mvWXoI— Merve Emre (@mervatim) August 24, 2018

ADDENDUM (2.21.24): Harold Bloom certainly was a charismatic critical star. Would any variety of criticism fall if some intellectual scandal comparable to what happened with de Man became attached to him? I think not. What does that imply about his status within the academy?

The lay of the land: Bergen Arches, eastern end

This is an abandoned trolley line just below Dickinson High School:

If you continue walking along that path you get here:

The trolley right-of-way goes through that smaller tunnel to the left. When you get a bit closer you'll see those steps at the left:

They go up to the surface and come out at the parking lot for Dickinson HS. I took this shot standing at the top of those steps looking into the parking lot:

That's Palisades Ave. behind the fence at the right. And this is a view into the trolley tunnel:

Here we've gone through that tunnel and are looking down into the arches, back toward the direction we came from:

Here we are down in the arches. We've gone through the large arch and are looking up at the smaller one, the one for the trolley line:

Note: These photographs are over a decade old. Things have changed a bit since then, but only a bit. The basic layout remains the same.

Bonus: Truck going through the arches in May 2010:

Tuesday, August 28, 2018

Your brain is only as old as you feel

Kwak S, Kim H, Chey J and Youm Y (2018) Feeling How Old I Am: Subjective Age Is Associated With Estimated Brain Age. Front. Aging Neurosci. 10:168. doi: 10.3389/fnagi.2018.00168

Abstract: While the aging process is a universal phenomenon, people perceive and experience one’s aging considerably differently. Subjective age (SA), referring to how individuals experience themselves as younger or older than their actual age, has been highlighted as an important predictor of late-life health outcomes. However, it is unclear whether and how SA is associated with the neurobiological process of aging. In this study, 68 healthy older adults underwent a SA survey and magnetic resonance imaging (MRI) scans. T1-weighted brain images of open-access datasets were utilized to construct a model for age prediction. We utilized both voxel-based morphometry (VBM) and age-prediction modeling techniques to explore whether the three groups of SA (i.e., feels younger, same, or older than actual age) differed in their regional gray matter (GM) volumes, and predicted brain age. The results showed that elderly individuals who perceived themselves as younger than their real age showed not only larger GM volume in the inferior frontal gyrus and the superior temporal gyrus, but also younger predicted brain age. Our findings suggest that subjective experience of aging is closely related to the process of brain aging and underscores the neurobiological mechanisms of SA as an important marker of late-life neurocognitive health.

Introduction

Subjective age (SA) refers to how individuals experience themselves as younger or older than their chronological age. Subjective perception of aging does not coincide with the chronological age and shows large variability among individuals (Rubin and Berntsen, 2006). The concept of SA has been highlighted in aging research as an important construct because of its relevance to late-life health outcomes.

Previous studies have suggested that SA is associated with various outcomes, including physical health (Barrett, 2003; Stephan et al., 2012; Westerhof et al., 2014), self-rated health (Westerhof and Barrett, 2005), life satisfaction (Barak and Rahtz, 1999; Westerhof and Barrett, 2005), depressive symptoms (Keyes and Westerhof, 2012), cognitive decline (Stephan et al., 2014), dementia (Stephan et al., 2016a), hospitalization (Stephan et al., 2016b) and frailty (Stephan et al., 2015a). Although chronological age is a primary factor in explaining these late-life health outcomes, these studies suggest that SA can be another construct that characterizes individual differences in the aging process.

The interoceptive hypothesis posits that a significant number of functions, both physical and cognitive, decline with age and this is subsequently followed by an awareness of such age-related changes (Diehl and Wahl, 2010). In other words, feeling subjectively older may be a sensitive marker or indicator reflecting age-related biological changes. This hypothesis is supported by several studies that have reported significant associations between older SA and poorer biological markers, including C-reactive protein (Stephan et al., 2015c), diabetes (Demakakos et al., 2007), and body mass index (Stephan et al., 2014). Moreover, the indices of biological age (MacDonald et al., 2011) including peak expiratory flow and grip strength, also were associated with SA, even after demographic factors, self-rated health and depressive symptoms were controlled for (Stephan et al., 2015b). Among a variety of biological aging markers, a decrease in neural resource constitutes a major dimension of age-related changes in addition to physical, socio-emotional and lifestyle changes (Diehl and Wahl, 2010). Together with the interoceptive hypothesis, the subjective experience of aging may partly result from one’s subjective awareness of age-related cognitive decline.

For example, subjective reports of one’s own cognitive decline have received attention as an important source of information for the prediction of subtle neurophysiological changes. Even when no signs of decline are found in cognitive test scores, subjective complaints of cognitive impairment may reflect early stages of dementia or pathological changes in the brain (de Groot et al., 2001; Reid and MacLullich, 2006; Stewart et al., 2008; Yasuno et al., 2015). It is, thus, possible to examine a link between the subjective experience of aging and neurophysiological aging.

To assess age-related brain structural changes and widespread loss of brain tissue, neuroanatomical morphometry methods have been widely used (Good et al., 2001; Fjell et al., 2009a; Raz et al., 2010; Matsuda, 2013). Moreover, the large neuroimaging datasets and newly developed machine learning techniques have made it possible to estimate individualized brain markers (Gabrieli et al., 2015; Cole and Franke, 2017; Woo et al., 2017). This approach can be advantageous for interpreting an individualized index for brain age, since predictive modeling can represent multivariate patterns expressed across whole brain regions, unlike massive and iterative univariate testing. In recent studies, estimated brain age was found to predict indicators of neurobiological aging, including cognitive impairment (Franke et al., 2010; Franke and Gaser, 2012; Löwe et al., 2016; Liem et al., 2017), obesity (Ronan et al., 2016) and diabetes (Franke et al., 2013).

Although SA has predictive values for future cognitive decline or dementia onset, few studies have examined the neurobiological basis of such outcomes. Combining both regional morphometry and the brain age estimation method, this study will provide an integrated picture of how each individual undergoes a heterogeneous brain aging process and supply further evidence of the neural underpinnings of SA (Kotter-Grühn et al., 2015).

Using analyses for voxel-based morphometry (VBM) and age-predicting modeling, we aimed to identify whether younger SA is associated with larger regional brain volumes and lower estimated brain age. We also examined possible mediators, including self-rated health, depressive symptoms, cognitive functions and personality traits that were candidates for explaining the hypothesized relationship between SA and brain structures.

Theorist and disciples, star and fans

Hey-we-should-all-be-reading-Cultural-Capital-again-take-5343404359 pic.twitter.com/Ybd2mvWXoI— Merve Emre (@mervatim) August 24, 2018

Monday, August 27, 2018

Meaning in life

Scott Sumner is an economist in the Mercatus Center at George Mason University. From a recent blog post, What do we mean by meaning?

Let me begin by noting that I often have a sort of “inside view” and an “outside view”. Thus my inside view is, “of course I have free will” and my outside view is, “of course free will doesn’t exist.” Similarly, my inside view of meaning is probably not too dissimilar from the views of others, while my outside view is that meaning doesn’t exist. Life is just one damn mental state after another.

So he's not interested in that outside view.

Because of my outside view, I prefer not to talk about “finding meaning”, as if there is something out there to be found. Rather I’d prefer to say “seeing meaning”, which implies meaning occurs in our minds. I’ve long believed that the very young see more meaning in life than older people, and that meaning gradually drains away as you age. Meaning is also more likely to be visible in dreams, and (I’m told) in psychedelic trips on LSD or mushrooms.

He concludes:

For me, the greatest meaning in life comes from art, broadly defined to include aesthetically beautiful experiences with nature, old cities, and scientific fields like astronomy and physics. The most meaningful experience in my life might have been seeing the film 2001 at age 13. I’ve never tried LSD, but after reading about the experience it reminds me of this film, and indeed the director was someone who experimented with acid. (It might also be the only “psychedelic” work of visual art that’s actually any good. Whereas pop music from the 60s is full of good examples.)

To me, art is “real life” and things such as careers are simply ways of making money in order to have the ability to experience that real life. After art, I’d put great conversation second on the list. And the part of economics that most interests me is the ability to converse with like-minded people (such as at the Cato summer course.)

I’m sort of like a satellite dish, receptive to ideas and sounds and images. My ideal is Borges, who regarded himself more as a great reader than a great writer (of course he was both, and a great conversationalist.) I’d rather be a great reader than a great writer. I’d rather be able to appreciate great music than be able to produce it.

H/t Tyler Cowen.

Sunday, August 26, 2018

The persistence of political forms in New York 2140, a coordination problem

As you may know, I've been participating in a group reading of Kim Stanley Robinson's New York 2140, re-reading for me. We're in the wrap-up phase. Bryan Alexander observed:

Politics: This has a specific, clear, and well developed programmatic political agenda. In response to unmitigated climate change and escalating income inequality, New York 2140 calls for a massive, global left wing/green revolt. Through it national tax policies would change, major banks nationalized, and the American Democratic party be shifted (dragged) to the left. While its villains get some small voice, it’s clear where the novel stands. As Babette Kraft observes in a comment on last week’s post, “In terms of politics, a democracy of the people is the good fight. It’s the financial system that’s the enemy.”

I responded:

3. On the politics, you’re right, a clear revolt to the left. But the overall framework seems to be pretty much the one we’re operating in now. That framework managed to remain viable through two Pulses and on into 2140. Do I believe (in) that?

Didn’t the framework of world politics shift dramatically during and after WWI (the death of old Europe, the Russian revolution) and then again after WWII (dissolution of colonial empires, creation of the UN, rise of Japan)? Does the collapse of the Soviet Union in 89/90 mark another such shift (w/ rise of China and India)? That’s two or three shifts in a century, but then no major shifts over the next century and a quarter?

Of course, if there were a couple more shifts, what would they be?

Bryan:

3. The persistence of our political forms seemed strange, and I’ve mentioned it before. Even assuming some American imperial/hyperpower permanence, we do add new offices. Think about the LBJ/Nixon boom in federal departments, or the post-9-11 Homeland Security reorg. Agreed.

I prefaced my next (and last, at the moment) response by referencing these remarks KSR made in Nature:

Here’s how I think science fiction works aesthetically. It’s not prediction. It has, rather, a double action, like the lenses of 3D glasses. Through one lens, we make a serious attempt to portray a possible future. Through the other, we see our present metaphorically, in a kind of heroic simile that says, “It is as if our world is like this.” When these two visions merge, the artificial third dimension that pops into being is simply history. We see ourselves and our society and our planet “like giants plunged into the years”, as Marcel Proust put it. So really it’s the fourth dimension that leaps into view: deep time, and our place in it. Some readers can’t make that merger happen, so they don’t like science fiction; it shimmers irreally, it gives them a headache. But relax your eyes, and the results can be startling in their clarity.

With that in mind:

I suppose one way to think about that strange persistence of political forms is through KSR’s remarks about science fiction as a 3D lens on the present. It’s the present political system that he’s looking at and so, of course, that’s got to be there at the heart of his construction of 2140. What, then, is he revealing about the present system? That it depends on the acquiescence of the many, but if somehow the many can communicate their dissatisfaction to one another and coordinate their actions, it’s all over–is that what he’s saying?

I keep thinking about what economists call a coordination problem. In the small, it’s like when you’re making a decision with a small group. You’re thinking, “if Ted goes for it, then I’m in.” Ted’s thinking, “if Mary goes for it, I’m in.” Mary’s thinking, “if Jake, then me.” Jake’s thinking, “if only Suzy would tumble.” What’s Suzy thinking? You got it, she’s thinking “I’m with Bryan, what’s he want?” But NONE of you know any of this. Someone’s got to go first, then the rest will know what to do. Who’s it going to be?

That’s what’s at the heart of The Emperor’s New Clothes. Everyone knows that the guy doesn’t have any clothes on at all, he’s naked. But they also know that if they show any dissent, they’re in trouble. And then the kid blurts it out, “He’s naked!” Everyone hears the kid, everyone knows that everyone else hears him. And so they tumble.

What finally triggers things in NY2140? Amelia gets an impulse in her balloon and calls for a strike. That’s not what they’d planned. Heck, at that point they didn’t have a plan for starting things off. They just knew they were somehow sometime soon going to start a strike. Amelia jumped the gun. She played the role of the little kid who called the Emperor’s bluff. Of course, it wouldn’t have worked if there wasn’t a large population ready to tumble.

Now, back in the present, is that what Trump sorta’ did in the 2016 election? He yelled out “the Dems are naked” and it turned out there was a substantial population eager and waiting for that message. So he won the election. I note that, as decisions go, voting for a Donald Trump is a lot easier, has far less risk, than refusing to pay the rent, the mortgage, the college loan, or withdrawing your money from the bank.
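To make that chain of conditional commitments concrete, here is a toy sketch in Python. It is my own illustration, not anything from the novel or from the economics literature, and it rests on simplifying assumptions: each person waits on exactly one other person, and the “you” in the example is Bryan, which closes the loop back through Suzy. The function name `cascade` is hypothetical. With no first mover nobody budges; let one person jump the gun, as Amelia does, and everyone tumbles.

```python
# Toy model (an assumption, not a standard economic model): each person
# commits only after the one person they are waiting on has committed.


def cascade(waits_on: dict[str, str], first_movers: set[str]) -> set[str]:
    """Return the set of people who end up committed.

    waits_on maps each person to the single person whose commitment they need.
    first_movers commit unconditionally, like the kid in the crowd.
    """
    committed = set(first_movers)
    changed = True
    while changed:  # keep sweeping until no one else tips over
        changed = False
        for person, needed in waits_on.items():
            if person not in committed and needed in committed:
                committed.add(person)
                changed = True
    return committed


# The chain from the example, read as a closed loop: Bryan -> Ted -> Mary
# -> Jake -> Suzy -> Bryan.
chain = {
    "Bryan": "Ted",
    "Ted": "Mary",
    "Mary": "Jake",
    "Jake": "Suzy",
    "Suzy": "Bryan",
}

print(sorted(cascade(chain, set())))     # → []
print(sorted(cascade(chain, {"Jake"})))  # → ['Bryan', 'Jake', 'Mary', 'Suzy', 'Ted']
```

The point of the toy is the first call: everyone is willing, yet nothing happens until someone moves unconditionally. Real populations are not a single neat loop, so the sketch says nothing about how many people must be "ready to tumble."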

Saturday, August 25, 2018

Star struck and broken down 2: Lit crit stars, real or an illusion?

Is the fabled lit crit star of the 1980s and 90s a real phenomenon, or just an ordinary celebrity academic of the sort one finds in other disciplines? That question has come up in online discussions. Moreover, in a piece for The Chronicle Review, Avital Ronell and the End of the Academic Star, Lee Konstantinou notes the David Shumway piece I’d quoted in my previous post on this subject and counters:

In a persuasive critique of Shumway’s article, Bruce Robbins asked whether we should be so quick to complain about the rise of superstars. The definition of celebrity in such jeremiads was often tellingly vague, Robbins wrote, and usually involved "starting from fame and subtracting something: fame minus merit, or fame minus power." But such distinctions falsely presumed that some pure form of merit might be separated from social forces. "Dig deep enough into any instance of merit," Robbins wrote, "and you will discover social determinants, factors like family and friends, lovers and mentors, identities, interests, and institutions that advantaged some and disadvantaged others."

In fact, Robbins argued, star power represented a form of prestige that in some sense corrected for the failures of the previous old boys’ club, represented by the prior generation of scholars Shumway celebrated. "By supplying an alternative method for distributing cultural capital, the celebrity cult has served (among its other functions) to open up what remains of those tight, all-male professional circles." This was, Robbins claimed, an "improvement."

Konstantinou goes on to observe that the star system is dying: “No new theoretical school has replaced High Theory”.

Konstantinou’s emphasis was on the effects of the old star system on the job prospects of graduate students and on a student’s willingness (Reitman in this case) to tolerate abuse from a star advisor (Ronell) based on the promise of job-securing recommendations.

What IS the star system about, anyhow?

I hadn’t thought of the old boys’ network. Yes, it was, and is, certainly real, and unfortunate. So I read Robbins’s piece, Celeb-Reliance: Intellectuals, Celebrity, and Upward Mobility (Postmodern Culture, Vol. 9, No. 2, 1999), and it is an interesting piece. But, as its title suggests, it is more concerned with the economics of stardom than with the validation of knowledge, which was central to Shumway’s argument. In 2001 the Minnesota Review (numbers 52-54) dedicated a number of articles to Shumway’s article but, alas, the issue is behind a paywall, so I didn’t have access beyond the first pages. I did, however, read the first page of Shumway’s rejoinder, “The Star System Revisited”, where he asserted that his critics seemed to have missed his main point, which was how the star system “functions in authorizing knowledge”.

A bit more searching turned up Frank Donoghue, A Look Back at David Shumway’s ‘Star System’, a 2011 blog post at The Chronicle of Higher Education. He observed:

Sadly, many people misread Shumway’s essay, zipping past his subtle definition of stardom toward the admittedly unfair income gap between literary studies’ stars and its worker bees. The essay, as I read it, has nothing to do with income, but rather with what the component parts of stardom are and how stardom happens. Stardom, in literary studies or anywhere else, inheres in intangibles: charisma, a distinctive performative style, sex appeal sometimes, in short, an incentive for fans to emulate the star.

Shumway’s pessimistic conclusion is, I think, justifiable: the more we buy into the star system, the less we will responsibly pay attention to our collective activity as literary scholars. That is, the presence or omnipresence of a star (think of Derrida in the 70s or Foucault in the 80s) draws our attention away from the content of what they write, and on to their personalities, and thus scrambles our efforts to do rigorous literary studies. As Shumway puts it in his follow up, “The Star System Revisited”: “instead of putting Lacan or Foucault’s theories to the test, contemporary practice is simply more likely to invoke them as if they were laws.” And that’s a recipe for sloppy scholarship.

So, Donoghue got the key point.

In a subsequent post, Further Reflections on ‘Academostars’, he argues, “the star system in 2011 has been thoroughly commodified. As a result, we still might have a few stars who fit Shumway’s definition, but for the most part we have a lot of research universities with one or more dwarf stars.”

OK. The star system is dead, or at least terminally ill. But what about the authorization of knowledge? Was Shumway right about that, or was the system mostly about money and prestige?

Could we test and refine Shumway’s central assertion?

He argued that the star is the irreducible source of intellectual authority, as opposed to the (often informal and implicit) governing conventions of a/the discipline. It’s one thing for a critic to become renowned for their exemplary and original contributions within the disciplinary norms. Stardom is something else. Stars exceed or evade disciplinary norms in one way or another and so establish themselves as a reference point.

Let’s go back to his original article (The Star System in Literary Studies, PMLA, Vol. 112, No. 1, 1997, p. 95):

Theory not only gave its most influential practitioners a broad professional audience but also cast them as a new sort of author. Theorists asserted an authority more personal than that of literary historians or even critics. [...] Thus one finds article after article in which Derrida or Foucault or Barthes or Lacan or Žižek or Althusser or Spivak or Fish or Jameson or several of the above are cited as markers of truth. It is common now to hear practitioners speak of “using” Derrida or Foucault or some other theorist to read this or that object; such phrasing may suggest that the theorist provides tools of analysis, but the tools are not sufficient without the name that authorizes the procedure.

That last observation is something we could search for using corpus techniques.

It’s not immediately obvious to me, however, just how to proceed. I suppose we could compile a list of stars and search the literature for in-text references to them and note the specific phrases used. We could then search for those phrases to see if they are also used for non-stars (and perhaps to catch stars we’d missed in our original list).
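The first pass of that procedure might be sketched roughly as follows. This is a minimal Python sketch under stated assumptions, not a worked-out method: it assumes the corpus is already available as a list of plain-text strings, it uses a hand-made seed list (just four of the names Shumway mentions), and it treats the word or words immediately preceding a name as that name's "phrasing". The function names `frames_for` and `names_in_frame` are hypothetical, and matching capitalized words is only a crude stand-in for proper named-entity recognition.

```python
import re
from collections import Counter

# Hypothetical seed list: a few of the theorists Shumway names.
STARS = ["Derrida", "Foucault", "Barthes", "Lacan"]


def frames_for(names, texts, window=1):
    """Count the `window` words that immediately precede each mention of a name.

    Assumption: the words just before a name (e.g. "using") stand in for
    the phrasing a critic uses when invoking that name.
    """
    name_pattern = re.compile(r"\b(?:" + "|".join(map(re.escape, names)) + r")\b")
    counts = Counter()
    for text in texts:
        for match in name_pattern.finditer(text):
            preceding = re.findall(r"[A-Za-z']+", text[: match.start()])
            if len(preceding) >= window:
                frame = " ".join(w.lower() for w in preceding[-window:])
                counts[frame] += 1
    return counts


def names_in_frame(frame, texts):
    """Count capitalized words that directly follow a frame such as "using".

    Crude stand-in for named-entity recognition: it misses names with
    diacritics (e.g. Žižek) and will catch any capitalized word.
    """
    words = frame.split()
    # (?i:...) makes only the frame case-insensitive; the name must be capitalized.
    pattern = re.compile(
        r"\b(?i:" + r"\s+".join(map(re.escape, words)) + r")\s+([A-Z][a-z]+)"
    )
    counts = Counter()
    for text in texts:
        counts.update(pattern.findall(text))
    return counts


if __name__ == "__main__":
    # Toy sentences invented for illustration, not drawn from any real corpus.
    texts = [
        "We read the poem using Derrida and then using Foucault.",
        "Here the author reads Keats using Bloom.",
        "Derrida argues otherwise.",
    ]
    print(frames_for(STARS, texts))  # Counter({'using': 2})
    followers = names_in_frame("using", texts)
    print(set(followers) - set(STARS))  # {'Bloom'}: a non-star in the same frame
```

The second step is where the comparison would happen: a frame that turns up before stars but rarely before anyone else would count in Shumway's favor, while names that show up in the same frames would either be non-stars getting the same treatment or stars missing from the seed list. Any real study would need a defined corpus and a much richer notion of phrasing than a one-word window.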

Suppose we conducted this investigation and it turned out more or less to confirm Shumway’s observation, that stars get this treatment, but others do not. What then? Should we consider Shumway’s thesis confirmed?

I know what my bias is, but I’m not sure I should rest satisfied in having it confirmed. My sense of matters is that such an investigation would be a fair amount of work – what corpus would you use, how many journals and books (for books have an importance in the humanities they don’t have in the social, behavioral, biological, and physical sciences) do you interrogate? I would like to get more from it than mere confirmation.

And just what is it that is confirmed? Was/is the star system really “a recipe for sloppy scholarship”, as Donoghue speculated? Would such confirmation betray a decline from older standards of rigor, or is something else going on? Not decline, but, well, what else could it be? That, perhaps, is still in the (relatively near) future.

I note that, as Konstantinou asserted, there is a general feeling that the star system is over, at least in the sense that no new stars have appeared. That requires an explanation. Has the intellectual landscape of literary criticism, the conditions of knowledge creation and validation, changed in recent years? If so, why?

My guess is that we’re not going to go back to the good old days, that we have in fact learned something from/through Theory. The cultural sphere is not a repository of universal values. Values are specific to time and place, to class and gender, ethnicity, and so on. I don’t think we can or should unlearn that. But how do we carry that lesson forward?

Wayne Shorter

Ethan Iverson writes about “the world’s greatest living jazz composer” Wayne Shorter on his 85th birthday (in the @NewYorker). https://t.co/q3RgtaCe4z— Ted Gioia (@tedgioia) August 25, 2018

Friday, August 24, 2018

Little Women

It is doubtful whether any novel has been more important to America’s female writers than Louisa May Alcott’s “Little Women.” https://t.co/tzLWSVHxcR pic.twitter.com/2wF06KviBD— The New Yorker (@NewYorker) August 24, 2018

Friday Fotos: Crazy shots into the sun

I love these kinds of crazy shots. I mean, no sane person points the camera into the morning sun without filters and expects to get a decent shot. The sensor gets flooded with light, so these photos tend to come out of the camera very dark, with a bright spot or two: one for the sun and one or two for whatever (camera flare perhaps). Then you have to figure out how to make an image out of it. I start by cranking up the exposure (in Lightroom) and cranking down the highlights to see what I've got. The thing is, there's almost no point in trying to get the photo to look like what you saw because 1) you can't remember, and 2) you didn't see it anyhow. That is, you generally don't stare directly into the sun, not for more than a moment or two. So there really is no "natural" look for these images. But you don't want it to look plastic or fake or whatever.

Monochrome works:

Meanwhile:

Is this what I saw:

Or was it this:

Neither? Just what did I see, anyhow?

And what does he see?

Is this what the sun sees?

Life on Mars is no joke, so says Tyler Cowen

When I think of settling other planets, my base case is one of extreme scarcity and fragility, at least at first and possibly for a long time. Those are not the conditions that breed liberty, whether it is “the private sector” or “the public sector” in charge.

Maybe corporations will settle space for some economic reason. Then you might expect space living to have the liberties of an oil platform in the sea, or perhaps a cruise ship. Except there would be more of a “we are in this all together” attitude, which I think would favor a kind of corporate autocracy.

Another scenario involves a military settling space, possibly for military reasons, and that too is not much of a liberal or democratic scenario.

You might also have religiously-motivated settlements, which presumably would be governed by the laws and principles of the religion. Over time, however, this scenario might give the greatest chance for subsequent liberalization.

Play-car on a Finnish train

Amazing - I am in #Finland and this is a playground in a train carriage on an inter-city train. Whole lot of books as well. Excellent idea - far nicer for parents (and kids) than car. @CarolineRussell pic.twitter.com/FFEVl2vP8C— Natalie Bennett (@natalieben) August 23, 2018

Thursday, August 23, 2018

Gaia Redux, WTF!

Bruno Latour talks with James Lovelock and writes an essay, Bruno Latour Tracks Down Gaia, in the Los Angeles Review of Books.

We cannot hide from the fact that there is a fundamental misunderstanding about Gaia. We think we are using the name of this mythological figure to designate the quite common time-honored idea that the Earth is a living organism. Lovelock is renowned, they say, simply because he recast in cybernetic language the ancient idea that the Earth is finely tuned. The words “regulation” and “feedback” replace the antique idea of “natural balance” or even providence.

Except, Latour is about to inform us, that's not what he did, no, not at all. The idea arose as the result of a thought experiment:

The first Gaia idea came about with the following line of reasoning: “If today’s humans, via their industries, can spread chemical products over the Earth that I can detect with my instruments, then it is certainly possible that all terrestrial biochemistry could also be the product of living beings. If humans can so radically modify their environment in so little time, then other beings could have done it as well over hundreds of millions of years.” Earth is well and truly an artificially conceived kind of technosphere for which living things are engineers as blind as termites. You have to be an engineer and inventor like Lovelock to understand this entanglement.

So Gaia has nothing to do with any New Age idea of the Earth in a millennial balance, but rather emerges, as Lenton emphasizes over dinner, from a very specific industrial and technological situation: a violent technological rupture, blending the conquest of space, plus the nuclear and cold wars, that we were later to summarize under the label of the “Anthropocene” and that is accompanied by a cultural rupture symbolized by California in the 1960s. Drugs, sex, cybernetics, the conquest of space, the Vietnam War, computers, and the nuclear threat: this is the matrix from which Gaia was born, in violence, artifice, and war.

Whoops!

Before Gaia, the inhabitants of modern industrial societies saw nature as a domain of necessity, and when they looked toward their own society they saw it as the domain of freedom, as philosophers might say. But after Gaia these two distinct domains literally don’t exist anymore. There is no living or animated thing that obeys an order superior to itself, and that dominates it, or that it just has to adapt itself to, and this is true for bacteria as much as lions or human societies. This doesn’t mean that all living things are free in the rather simple sense of being individuals, since they are interlinked, folded, and entangled in each other. This means that the issue of freedom and dependence is equally valid for humans as it is for the partners of the above natural world.

Meaning, Theory, and the Disciplines of Criticism

Relevant to a discussion about the rise of "stars" in academic lit crit in the 1980s and 90s. See especially the discussions of Reading and of Theory as Critique below, which speak to the erosion of boundaries that allows critics to become stars.

In the fifth post, It’s Time to Leave the Sandbox, in my series on the poverty of cognitive criticism, I managed to rough out a sketch of academic literary criticism that I rather like. So I’ve decided to present that sketch in a post of its own. I’ve dropped most of the comments on cognitive criticism and added a few things.

The most important additions come from the study Andrew Goldstone and Ted Underwood did of a century-long run of articles in seven journals, The Quiet Transformation of Literary Studies [1]. These are the journals they used: Critical Inquiry (1974–2013), ELH (1934–2013), Modern Language Review (1905–2013), Modern Philology (1903–2013), New Literary History (1969–2012), PMLA (1889–2007), and the Review of English Studies (1925–2012).

I begin by presenting one aspect of the argument that Goldstone and Underwood make: that our current terms of critical art only became established after World War II. Then I present the material I’ve reworked from that older post, this time with some charts from Goldstone and Underwood, and some other things as well.

Post War Shift

Goldstone and Underwood demonstrate that perhaps the most significant change in the discipline happened in the quarter century or so after World War II. Here’s a summary statement (p. 372):

The model indicates that the conceptual building blocks of contemporary literary study become prominent as scholarly key terms only in the decades after the war—and some not until the 1980s. We suggest, speculatively, that this pattern testifies not to the rejection but to the naturalization of literary criticism in scholarship. It becomes part of the shared atmosphere of literary study, a taken-for-granted part of the doxa of literary scholarship. Whereas in the prewar decades, other, more descriptive modes of scholarship were important, the post-1970 discourses of the literary, interpretation, and reading all suggest a shared agreement that these are the true objects and aims of literary study—as the critics believed. If criticism itself was no longer the most prominent idea under discussion, this was likely due to the tacit acceptance of its premises, not their supersession.

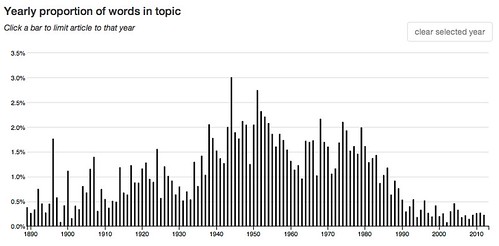

Consider topic 16, where these are the ten most prominent words: criticism work critical theory art critics critic nature method view. This graph shows how that topic evolved in prominence over time:

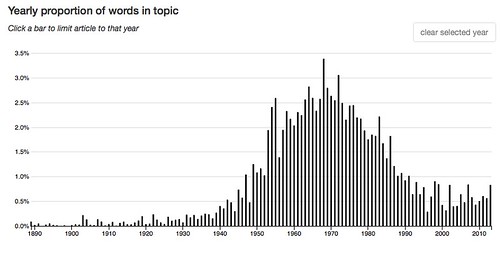

Now consider topic 29: image time first images theme structure imagery final present pattern. Notice “structure” and “pattern” in that list. The (in)famous structuralism conference was held at Johns Hopkins in 1966, just before the peak in the chart, while the conference proceedings (The Languages of Criticism and the Sciences of Man) were published in 1970, one year after the peak, 1969:

At the time structuralism was regarded as The Next Big Thing, hence the conference. But Jacques Derrida was brought in as a last-minute replacement and his critique of Lévi-Strauss turned out to be the beginning of the end of structuralism and the beginning of the various developments that came to be called capital “T” Theory. The structuralist moment, with its focus on language, signs, and system, was but a turning point.

Wednesday, August 22, 2018

Tuesday, August 21, 2018

The dumbing down of mechanism

Jessica Riskin, Alive and ticking, in Aeon.

The model of nature as a complex, clockwork mechanism has been central to modern science ever since the 17th century. It continues to appear regularly throughout the sciences, from quantum mechanics to evolutionary biology. But for Descartes and his contemporaries, ‘mechanism’ did not signify the sort of inert, regular, predictable functioning that the word connotes today. Instead, it often suggested the very opposite: responsiveness, engagement, caprice. Yet over the course of the 17th century, the idea of machinery narrowed into something passive, without agency or force of its own life. The earlier notion of active, responsive mechanism largely gave way to a new, brute mechanism.

Brute mechanism first developed as part of the ‘argument from design’, in which theologians found evidence for the existence of God in the rational design of nature, and therefore began treating nature as an artefact. The natural world of late-medieval and early Renaissance Europe had contained its own spirits and agencies; but arguments from design evacuated these to a location decisively outside the physical realm – leaving a fundamentally passive machinery behind. Since theology and natural science were not yet distinct fields of inquiry, brute mechanist ideas pervaded both. Between the mid-17th and early 19th centuries, the principle of passive matter became a foundational axiom in science, to the point that few people today recall its theological origins.

A key moment in the establishment of brute mechanism in science took place one Sunday evening in Edinburgh, in November 1868. The English naturalist Thomas Henry Huxley – friend and defender of Charles Darwin, as well as a professor of natural history, anatomy and physiology – gave a lecture. He chose the subject of protoplasm, or, as he defined it for the uninitiated, ‘the physical basis of life’. 
Huxley’s main point was simple: we should be able to understand what brings something to life simply by reducing it to its component parts. There should be no need to invoke any special something, any force or power such as ‘vitality’. After all, Huxley quipped, water has extraordinary properties too; but we needn’t rely on something called ‘aquosity’ to explain how hydrogen and oxygen produce water under certain conditions. To be sure, Huxley couldn’t say how the properties of either protoplasm or water arose from their composition; but he was confident that science would find its way to the answer, as clearly as ‘we are now able to deduce the operations of a watch from the form of its parts and the manner in which they are put together’.

Change seems more fundamental than time

From an interview posted at Backreaction, in which Tam Hunt interviews Carlo Rovelli about the nature of time:

TH: In the quote from your book I mentioned above, what are the “traces” of temporality that are still left over in the windswept landscape “almost devoid of all traces of temporality,” a “world without time,” that has been created by modern physics?

CR: Change. It is important not to confuse “time” and “change.” We tend to confuse these two important notions because in our experience we can merge them: we can order all the change we experience along a universal one-dimensional oriented line that we call “time.” But change is far more general than time. We can have “change,” namely “happenings,” without any possibility of ordering sequences of these happenings along a single time variable.

There is a mistaken idea that it is impossible to describe or to conceive change unless there exists a single flowing time variable. But this is wrong. The world is change, but it is not [fundamentally] ordered along a single timeline. Often people fall into the mistake that a world without time is a world without change: a sort of frozen eternal immobility. It is in fact the opposite: a frozen eternal immobility would be a world where nothing changes and time passes. Reality is the contrary: change is ubiquitous but if we try to order change by labeling happenings with a time variable, we find that, contrary to intuition, we can do this only locally, not globally.

TH: Isn’t there a contradiction in your language when you suggest that the common-sense notion of the passage of time, at the human level, is not actually an illusion (just a part of the larger whole), but that in actuality we live in a “world without time”? That is, if time is fundamentally an illusion isn’t it still an illusion at the human scale?

CR: What I say is not “we live in a world without time.” What I say is “we live in a world without time at the fundamental level.” There is no time in the basic laws of physics. This does not imply that there is no time in our daily life.

There are no cats in the fundamental equations of the world, but there are cats in my neighborhood. Nice ones. The mistake is not using the notion of time [at our human scale]. It is to assume that this notion is universal, that it is a basic structure of reality. There are no micro-cats at the Planck scale, and there is no time at the Planck scale. [...]

TH: I’m surprised you state this degree of certainty here when in your book you acknowledge that the nature of time is one of physics’ last remaining large questions. Andrew Jaffe, in a review of your book for Nature, writes that the issues you discuss “are very much alive in modern physics.”

CR: The debate on the nature of time is very much alive, but it is not a single debate about a single issue, it is a constellation of different issues, and presentism is just a rather small side of it. Examples are the question of the source of the low initial entropy, the source of our sense of flow, the relation between causality and entropy. The non-viability of presentism is accepted by almost all relativists.

In part two, “The World without Time”, Rovelli puts forward the idea that events (just a word for a given time and location at which something might happen), rather than particles or fields, are the basic constituents of the world. The task of physics is to describe the relationships between those events: as Rovelli notes, “A storm is not a thing, it’s a collection of occurrences.” At our level, each of those events looks like the interaction of particles at a particular position and time; but time and space themselves really only manifest out of their interactions and the web of causality between them.

Color me sympathetic. Back to Hunt's interview of Rovelli:

TH: You argue that “the world is made of events, not things” in part II of your book. Alfred North Whitehead also made events a fundamental feature of his ontology, and I’m partial to his “process philosophy.” If events—happenings in time—are the fundamental “atoms” of spacetime (as Whitehead argues), shouldn’t this accentuate the importance of the passage of time in our ontology, rather than downgrade it as you seem to otherwise suggest?

CR: “Time” is a stratified notion. The existence of change, by itself, does not imply that there is a unique global time in the universe. Happenings reveal change, and change is ubiquitous, but nothing states that this change should be organized along the single universal uniform flow that we commonly call time. The question of the nature of time cannot be reduced to a simple “time is real”, “time is not real.” It is the effort of understanding the many different layers giving rise to the complex phenomenon that we call the passage of time.

Monday, August 20, 2018

Would you jump from an airplane at 25,000 feet without a parachute?

Luke Aikins, the first person to jump out of an airplane without a parachute from a 25,000 feet. Aikins eventually lands in a net. The daring stunt is now in The Guinness Book Of World Records as the first complete planned jump from an airplane without a parachute. pic.twitter.com/FVB23Z6c50— Ken Rutkowski (@kenradio) August 20, 2018

Star struck and broken down: How did literary criticism come to this foul pass? [#AvitalRonell]

Over the weekend the humanities Twitterverse, at least the part of it that I frequent, broke out in a flurry of discussion concerning the Avital Ronell case.

Briefly, Ronell is a very distinguished literary scholar at NYU. In 2017 Nimrod Reitman, a former graduate student, filed a Title IX complaint against her. The university dismissed the sexual assault, stalking, and retaliation charges, but found her responsible for sexual harassment and suspended her for the 2018-2019 academic year. At about that time a group of very senior humanists issued a letter of support, arguing, more or less, that a scholar of such distinction couldn’t possibly be guilty of such behavior.

It was that support letter, its claim of privilege for one of its own, as much as the case itself, that prompted the weekend’s Twitterverse chatter. Ronell was a “star” in the humanities firmament. What is it about the star system that permits and sustains such behavior? Power, yes. Still, where’d we go wrong? Was there something about humanities practice that contributed to this state of affairs?

I think there is, and that’s what this post is about. But first I want to present a tweet by Corey Robin about the Ronell case, one in a string of ten tweets. Robin has read the 56-page lawsuit (PDF) that Reitman filed against both Ronell and NYU, and he asks us to set the sexual matters aside. There’s something more fundamental at stake:

On the Avital Ronell/Nimrod Reitman case. Part 3 (of 10). pic.twitter.com/fboANfj2jF— corey robin (@CoreyRobin) August 19, 2018

It’s as though Ronell recognized no boundary between herself and Reitman. That’s what this post is about: boundaries, and how the collapse of boundaries in academic literary criticism created a milieu that favored personal excess and indulgence.

The stars are born

During the weekend’s Twitter activity Ted Underwood pointed to a 1997 article that David Shumway published in PMLA, The Star System in Literary Studies (Vol. 112, No. 1, 1997, 85-100). Shumway argued (p. 95):

Theory not only gave its most influential practitioners a broad professional audience but also cast them as a new sort of author. Theorists asserted an authority more personal than that of literary historians or even critics. As we have seen, the rhetoric of literary history denied personal authority; in principle, even Kittredge was just another contributor to the edifice of knowledge. Criticism was able to enter the academy only by claiming objectivity for itself, so academic critics could not revel in personal idiosyncrasy. They developed their own critical perspectives, to be sure, but all the while they continued to appeal to the text as the highest authority. In the past twenty years theory has undermined the authority of the text and of the author and replaced it with the authority of systems, as in the structuralist and poststructuralist privileging of langue over parole or in the mystifying readings of Marxism or psychoanalysis. Sometimes the theory seems to be to eschew all authority, as in some renderings of deconstruction. And yet these claims are belied by the actual functioning of the name of the theorist. It is that name, rather than anonymous systems or the anarchic play of signifiers, to which most theoretical practice appeals.

That’s an interesting set of claims. Let’s start with objectivity and use Northrop Frye’s 1957 Anatomy of Criticism as a touchstone text. In his “Polemical Introduction” Frye observes:

If criticism exists, it must be an examination of literature in terms of a conceptual framework derivable from an inductive survey of the literary field. The word "inductive" suggests some sort of scientific procedure. What if criticism is a science as well as an art? Not a “pure” or “exact” science, of course, but these phrases belong to a nineteenth-century cosmology which is no longer with us. The writing of history is an art, but no one doubts that scientific principles are involved in the historian’s treatment of evidence, and that the presence of this scientific element is what distinguishes history from legend. It may also be a scientific element in criticism which distinguishes it from literary parasitism on the one hand, and the superimposed critical attitude on the other. The presence of science in any subject changes its character from the casual to the causal, from the random and intuitive to the systematic, as well as safeguarding the integrity of that subject from external invasions. However, if there are any readers for whom the word “scientific” conveys emotional overtones of unimaginative barbarism, they may substitute “systematic” or “progressive” instead.

It seems absurd to say that there may be a scientific element in criticism when there are dozens of learned journals based on the assumption that there is, and hundreds of scholars engaged in a scientific procedure related to literary criticism. Evidence is examined scientifically; previous authorities are used scientifically; fields are investigated scientifically; texts are edited scientifically. Prosody is scientific in structure; so is phonetics; so is philology.

There you have it: literary study is a kind of science, not “pure” or “exact” but science nonetheless. The repetition of morphological variants of science in this passage is quite stunning, as though Frye wanted to club subjectivity to death with word magic. With all that science going on, literary criticism had little choice but to yield to objectivity.

But a decade later things weren’t so clear. All these objective critics kept disagreeing about their interpretations. Is there a standard against which interpretations can be measured so as to determine their validity? Strenuous efforts were made to make authorial intention such a standard, but those efforts failed to win the day. Instead the author was bracketed out in favor of systems, the unconscious, signs, social structure, power, whatever. And objectivity was gone, dismissed as a mirage of scientistic desire.

Another photo of the Met Life tower, plus a link to my 3QD reflections on New York 2140

See that slender tower in the middle? That's the Met Life tower, which is one of the central actors – yes, that's clearly how KSR conceived it, following Latour – in Kim Stanley Robinson's New York 2140. I'm standing at the northern end of Hoboken, NJ, across the Hudson River from Lower Manhattan.

I've written about New York 2140 before and I'll likely write about it again. Here's my current piece in 3 Quarks Daily, Through a 3D Glass Starkly, New York 2140 Redux.

Sunday, August 19, 2018

Sacrifice and sunk costs

Human sacrifice - a means to preserve society or an economic system by raising its sunk costs.— Peter Y Paik (@pypaik) August 20, 2018

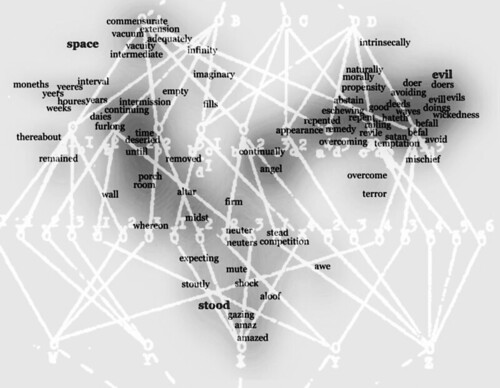

Computation, Semantics, and Meaning: Adding to an Argument by Michael Gavin [#DH]

I've completed a new working paper. Title above. You can download it here:


- Academia.edu: https://www.academia.edu/37261264/Computation_Semantics_and_Meaning_Adding_to_an_Argument_by_Michael_Gavin

- SSRN: https://ssrn.com/abstract=3235001

Abstract: Michael Gavin has published an article in which he uses ambiguity as a theme for juxtaposing close reading, a standard procedure in literary criticism, with vector semantics, a newer technique in statistical semantics with a lineage that includes machine translation. I take that essay as a framework for adding computational semantics to the comparison, which also derives from machine translation. After recounting and adding to Gavin’s account of 18 lines from Paradise Lost I use computational semantics to examine Shakespeare’s “The expense of spirit”. Close reading deals in meaning, which is ontologically subjective (in Searle’s usage) while both vector semantics and computational semantics are ontologically objective (though not necessarily objectively true, a matter of epistemology).

Introduction: I heard it in the Twitterverse

Warren Weaver, “Translation”, 1949

Multiple meaning

The distributional hypothesis

MT and Computational semantics

A connection between Weaver 1949 and semantic nets

Abstraction and topic analysis

Two kinds of computational semantics

The meanings of words are intimately interlinked

Concepts are separable from words

Models and graphics (a new ontology of the text?)

Comments on a passage from “Paradise Lost”

Some computational semantics for a Shakespeare sonnet

Three modes of literary investigation and two binary distinctions

Semantics and meaning

Two kinds of semantic model

Where are we? All roads lead to Rome

Appendix 1: Virtual Reading as a path through a high-dimensional semantic space

Appendix 2: The subjective nature of meaning

Walter Freeman’s neuroscience of meaning

From Word Space to World Spirit?

Introduction: I heard it in the Twitterverse

Michael Gavin recently published a fascinating article in Critical Inquiry, Vector Semantics, William Empson, and the Study of Ambiguity, one that has developed some buzz in the Twitterverse. I liked the article a lot. But I was thrown off balance by two things: his use of the term “computational semantics” and a hole in his account of machine translation (MT). The first problem is easily remedied by using a different term, “statistical semantics”. The second could probably be dealt with by the addition of a paragraph or two in which he points out that, while early work on MT failed and so was defunded, it did lead to work in computational semantics of a kind that’s quite different from statistical semantics, work that’s been quite influential in a variety of ways.

In terms of Gavin’s immediate purpose in his article, however, those are minor issues. But in a larger scope, things are different. And that is why I composed the posts I’ve gathered into the working paper. Digital humanists need to be aware of and in dialog with that other kind of computational semantics. Gavin’s article provides a useful framework for doing that.

Caveat: In this working paper I’m not going to attempt to explain Gavin’s statistical semantics from the ground up. He’s already done that. I assume a reader who is comfortable with such material.

Warren Weaver, “Translation”, 1949

Let us start with a famous memo Warren Weaver wrote in 1949. Weaver was director of the Natural Sciences division of the Rockefeller Foundation from 1932 to 1955. He collaborated with Claude Shannon on The Mathematical Theory of Communication, a book that popularized Shannon’s seminal work in information theory. Weaver’s 1949 memorandum, simply entitled “Translation”, is regarded as the catalytic document in the origin of machine translation (MT).

He opens the memo with two paragraphs entitled “Preliminary Remarks” (p. 1).

There is no need to do more than mention the obvious fact that a multiplicity of language impedes cultural interchange between the peoples of the earth, and is a serious deterrent to international understanding. The present memorandum, assuming the validity and importance of this fact, contains some comments and suggestions bearing on the possibility of contributing at least something to the solution of the world-wide translation problem through the use of electronic computers of great capacity, flexibility, and speed.

The suggestions of this memorandum will surely be incomplete and naïve, and may well be patently silly to an expert in the field - for the author is certainly not such.

But then there were no experts in the field, were there? Weaver was attempting to conjure a field of investigation out of nothing.

I think it important to note, moreover, that language is one of the seminal fields of inquiry for computer science. Yes, the Defense Department was interested in artillery tables and atomic explosions, and, somewhat earlier, the Census Bureau funded Herman Hollerith in the development of machines for data tabulation, but language study was important too.

A bit later in his memo Weaver quotes from a 1947 letter he wrote to Norbert Wiener, the mathematician perhaps best known for his work in cybernetics (p. 4):

When I look at an article in Russian, I say "This is really written in English, but it has been coded in some strange symbols. I will now proceed to decode."

Wiener didn’t think much of the idea. Yet, as Gavin explains, crude though it was, that idea was the beginning of MT.

The code-breaker assumes that the message is in a language they understand, but that it has been disguised by a procedure that scrambles and transforms its expression. Once you’ve broken the code, you can read and understand the message. Human translators, however, don’t work that way. They read and understand the message in the source language (Russian, for example) and then re-express it in the target language (perhaps English).

Toward the end of his memo Weaver remarks (p. 11):

Think, by analogy, of individuals living in a series of tall closed towers, all erected over a common foundation. When they try to communicate with one another they shout back and forth, each from his own closed tower. It is difficult to make the sound penetrate even the nearest towers, and communication proceeds very poorly indeed. But when an individual goes down his tower, he finds himself in a great open basement, common to all the towers. Here he establishes easy and useful communication with the persons who have also descended from their towers.

Thus may it be true that the way to translate from Chinese to Arabic, or from Russian to Portuguese, is not to attempt the direct route, shouting from tower to tower. Perhaps the way is to descend, from each language, down to the common base of human communication - the real but as yet undiscovered universal language - and then re-emerge by whatever particular route is convenient.

The story of MT is, in effect, one in which researchers find themselves forced to reverse-engineer the entire tower in computational terms, all the way down to the basement, where we find not a universal language but a semantics constructed from and over perception and cognition.