Sunday, September 30, 2018

Heart of Darkness: Kurtz’s mistress is murdered

Sometime well after I’d finished my various notes about Conrad’s Heart of Darkness I came across an interview that Rachel Kaadzi Ghansah had conducted with Samuel Delany (Paris Review, Summer 2011, No. 197), “Samuel R. Delany, The Art of Fiction No. 210”. Delany makes a point that seems obvious in retrospect, but which I had somehow missed when I was originally working on the text.

Here’s the passage in the interview:

My students reach the climax of Heart of Darkness, when the pilgrims stand at the steamer’s rail, firing their rifles at the natives on the shore, fifteen or twenty feet away, “for some sport,” while an appalled Marlow blows the boat’s horn to frighten the Africans off. Some of the natives throw themselves on the ground, but among them stands Kurtz’s black mistress, her arms raised toward the boat that carries Kurtz away. From his bed in the wheelhouse, sickly Kurtz watches through the window—which Conrad has made clear has been left open. At the boat rail, the white men go on firing, and with a line of white space, the scene ends ...

Year after year, more than half my students fail to realize that the white men have just killed the black woman Kurtz has been sleeping with for several years. Or that Kurtz, too weak to intervene, has had to lie there and watch them do it.

When you ask, later, the significance of Kurtz’s final words, as he looks out through this same window, “The horror! The horror!,” it never occurs to them that it might refer to the fact that he has watched his fellow Europeans murder in cold blood the woman he has lived with. Suggestion for them is not an option. Earlier generations of readers, however, did not have these interpretive problems.

“If he raped her, why didn’t the writer say so?” “If they shot her, why didn’t Conrad show her fall dead?” my graduate students ask. It makes me wonder what other techniques for conveying the unspoken and the unspeakable we have forgotten how to read over four or five thousand years of “literacy.”

Next comes the relevant passage from Conrad’s text. The mistress is shot in the smoke of paragraph 146. I’ve carried through to paragraph 148 as that contains a key phrase, “My Intended, my station, my career, my ideas...” The Intended, of course, is Kurtz’s fiancée. It’s important to see that, by placing this phrase so soon after the mistress’s murder, Conrad associates her with that fiancée, and with the rest as well.

"We had carried Kurtz into the pilot-house: there was more air there. Lying on the couch, he stared through the open shutter. There was an eddy in the mass of human bodies, and the woman with helmeted head and tawny cheeks rushed out to the very brink of the stream. She put out her hands, shouted something, and all that wild mob took up the shout in a roaring chorus of articulated, rapid, breathless utterance.

"'Do you understand this?' I asked.

"He kept on looking out past me with fiery, longing eyes, with a mingled expression of wistfulness and hate. He made no answer, but I saw a smile, a smile of indefinable meaning, appear on his colorless lips that a moment after twitched convulsively. 'Do I not?' he said slowly, gasping, as if the words had been torn out of him by a supernatural power.

"I pulled the string of the whistle, and I did this because I saw the pilgrims on deck getting out their rifles with an air of anticipating a jolly lark. At the sudden screech there was a movement of abject terror through that wedged mass of bodies. 'Don't! Don't you frighten them away,' cried someone on deck disconsolately. I pulled the string time after time. They broke and ran, they leaped, they crouched, they swerved, they dodged the flying terror of the sound. The three red chaps had fallen flat, face down on the shore, as though they had been shot dead. Only the barbarous and superb woman did not so much as flinch, and stretched tragically her bare arms after us over the somber and glittering river.

"And then that imbecile crowd down on the deck started their little fun, and I could see nothing more for smoke. [paragraph 146]

"The brown current ran swiftly out of the heart of darkness, bearing us down towards the sea with twice the speed of our upward progress; and Kurtz's life was running swiftly too, ebbing, ebbing out of his heart into the sea of inexorable time. The manager was very placid, he had no vital anxieties now, he took us both in with a comprehensive and satisfied glance: the 'affair' had come off as well as could be wished. I saw the time approaching when I would be left alone of the party of 'unsound method.' The pilgrims looked upon me with disfavor. I was, so to speak, numbered with the dead. It is strange how I accepted this unforeseen partnership, this choice of nightmares forced upon me in the tenebrous land invaded by these mean and greedy phantoms.

"Kurtz discoursed. A voice! a voice! It rang deep to the very last. It survived his strength to hide in the magnificent folds of eloquence the barren darkness of his heart. Oh, he struggled! he struggled! The wastes of his weary brain were haunted by shadowy images now—images of wealth and fame revolving obsequiously round his unextinguishable gift of noble and lofty expression. My Intended, my station, my career, my ideas—these were the subjects for the occasional utterances of elevated sentiments. The shade of the original Kurtz frequented the bedside of the hollow sham, whose fate it was to be buried presently in the mold of primeval earth. But both the diabolic love and the unearthly hate of the mysteries it had penetrated fought for the possession of that soul satiated with primitive emotions, avid of lying fame, of sham distinction, of all the appearances of success and power. [paragraph 148]

* * * * *

I’ve added this note to the working paper, Heart of Darkness: Qualitative and Quantitative Analysis on Several Scales, and made appropriate revisions elsewhere. You can find the working paper here:

Academia.edu: https://www.academia.edu/8132174/Heart_of_Darkness_Qualitative_and_Quantitative_Analysis_on_Several_Scales_Version_4

SSRN: https://ssrn.com/abstract=1910279

Saturday, September 29, 2018

The story of my intellectual life, short version (136 words)

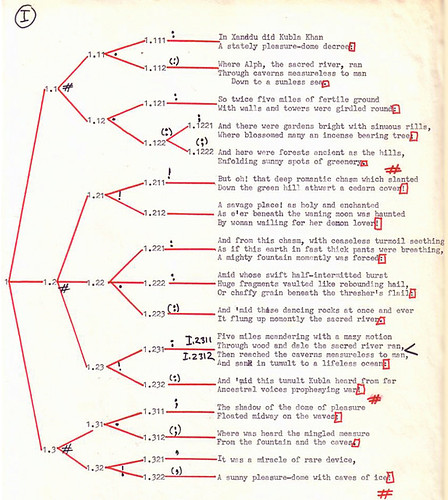

In the early 1970s I discovered that “Kubla Khan” had a rich, marvelous, and fantastically symmetrical structure. I'd found myself intellectually. I knew what I was doing. I had a specific intellectual mission: to find the mechanisms behind “Kubla Khan.” As defined, that mission failed, and still has not been achieved some 40 odd years later.

It's like this: If you set out to hitch rides from New York City to, say, Los Angeles, and don't make it, well then your hitch-hike adventure is a failure. But if you end up on Mars instead, just what kind of failure is that? Yeah, you’re lost. Really really lost. But you’re lost on Mars! How cool is that!

Of course, it might not actually be Mars. It might just be an abandoned set on a studio back lot.

Friday, September 28, 2018

Michael Bérubé tells all

I was making my rounds the other day and followed a link to an interview with Michael Bérubé. But what really caught my attention was the name of the interviewer, Frederick Luis Aldama. What!@? My eyes blinked thrice and when I opened them it was still there: Intellectual relevance: an interview with Michael Bérubé.

I suppose you’re thinking, what’s so special about that? If you’re thinking that you may not know the interwebs like I know the interwebs. Back in October 2009 Bérubé had run up a post about one of Aldama’s books, a defense of the humanities. He called Aldama out for making a bunch of bone-headed mistakes, like, e.g., asserting that Leonardo da Vinci had been inspired by Isaac Newton’s account of gravity when, as we all know, Newton was born more than a century after Leonardo had died. (And how’d that one ever get past copy-editing?) And yet Aldama was able to get past it and give us a wonderful interview.

Here’s some of my favorite passages.

After telling us that he’d originally intended to go into advertising rather than academia, Bérubé admits:

Let’s just say that if I were on an admissions committee, I wouldn’t have bet on me. But one thing that stays with me from being a musician is always wanting to be asked back. Even if you didn’t like the gig. From grad school onward, anytime someone has given me a break, I’ve been grateful – and I’ve tried to show them that they didn’t make a mistake. (The flip side of this is that you want the people who didn’t believe in you to go down in history like Dick Rowe, the A&R man from Decca who turned down the Beatles.) As a drummer, I also had to learn to overcome a paralyzing fear of failure. The first time I tried to play drums in public I couldn’t do it. I promised myself I’m not doing this again until I’m plausible. I felt the same way about graduate school – I was determined to make the most of it, wherever I got in.

Ah, yes, there’s nowhere to hide. One of these days I’ll tell you about the time I fracked the opening line to “You’re Still a Young Man” (here it is done right).

Concerning his most recent book:

With The Secret Life of Stories (2016) I return to narratology – some of those readings from my early years of graduate school: Wayne Booth, Peter Rabinowitz, Frank Kermode, and Paul Ricoeur. (Old white guys theorizing narrative! I used to be a serious Gérard Genette fan. No one knows this about me.) Narratology and my journey with my son Jamie (I wouldn’t have read Harry Potter without him) allowed me to analyze disability in fiction in terms of narrative strategies: motives, plot devices, for instance. In this way, I was able to show that disability in fiction doesn’t even require characters with identifiable disabilities; instead, it can involve ideas about disability, meditations on the social stigma associated with disability, narrative strategies that deploy ideas about disability that function in innumerable ways. The theorizing scaffold may be old school – hell, I rely heavily, at the end, on Viktor Shklovsky’s account of defamiliarization – but I knew I was opening up whole new territory for literary criticism in a way I’ve never done. Also, I have to admit, The Secret Life of Stories was more fun to write than anything I’ve written ever.

But, you know, Michael, the discipline would be better off these days if it hadn’t turned its back on narratology, poetics, and old school structuralism back in the mid-1970s. These days, for example, we have folks theorizing about doing “surface reading” and description without actually doing it. If more people had gone with narratology and the others, there’d be more practical descriptive work around to think about and emulate. Instead it seems to be easier to theorize about something we may do sometime in the future if only we knew how! You learn by doing, not by theorizing, which comes later.

Sorry about editorializing all over your interview, Michael. And – pro tip – I'd be more circumspect about admitting to having fun.

About the virtues of writing for non-academic audiences:

As an assistant professor at the University of Illinois at Urbana-Champaign, I was told in a friendly way that none of my writing for the Village Voice would count for tenure; it wouldn’t count against me, but it wouldn’t be a formal part of the tenure dossier, either. Still, I was encouraged to keep up the nonacademic writing as long as it was intellectually substantive. We both know that to get readers’ attention, non-academic writing actually has to be more rigorous in terms of theorizing and hailing its audiences. So, once I cleared that tenure hurdle, I knew I wanted to publish more of this kind of writing.

On working with the AAUP:

Yeah, sometimes you feel it’s like you have to build the house every day. So the first thing is really the question about institutionality and making sure that whatever you build stays around from year to year and it survives you. [...] resources. It’s why I chose to work for the American Association of University Professors (AAUP) – because that work has consequences for people’s working conditions and for policies and practices, especially with regard to academic freedom. I ran for Faculty Senate at Penn State so we could create a university-wide system for the review and promotion of fixed-term faculty. (And we did! [...]) I also wanted a conversion-to-tenure plan for long-term faculty with distinguished records but who don’t do research, along the lines Jennifer Ruth and I laid out in our book. (If they do research, great. But it shouldn’t be required for conversion to tenure. After all, these are teaching intensive jobs.) Jennifer and I argue that we can stabilize the profession of college teaching by moving as many people to tenure as possible. I couldn’t get that at Penn State, but we did create a system where fixed-term faculty can elect committees consisting entirely of fixed-term faculty to review fixed-term faculty the promotions that come with raises as well as changes of title to professorial titles. I also wanted promotions to be accompanied by multi-year contracts. We have that language now, and our administration has agreed to it, though I wish it were stronger. The upside is that our administration agrees with the Senate that we want to create a “culture of expectation” that promotions come with multi-year contracts, and we’ll keep working on that. So that’s what I’m going to spend my time doing. I want to work hand in hand with my fixed-term colleagues at Penn State to do something with what was once a random, arbitrary system, and reprofessionalize it.

Bérubé went on to point out that being President of the MLA (Modern Language Association) didn’t “really have the kind of institutional consequence I’m talking about [...] in terms of advocacy, the MLA can do a lot of things. In terms of implementation, not much.”

Finally:

I got into this business not just for the fun of reveling in ideas and doing different kinds of writing, but because the intellectual autonomy of the university is unparalleled in the American workplace. The university is a place where the people who dream of worlds that don’t exist can find a home. Those worlds don’t just exist in the theoretical sciences – they exist wherever the spark of human creativity bursts into flame. And those of us in the arts and humanities are the guardians of that flame.

I’ll close with my favorite Samuel Delany line:

Science fiction isn’t just thinking about the world out there. It’s also thinking about how that world might be—a particularly important exercise for those who are oppressed, because if they’re going to change the world we live in, they—and all of us—have to be able to think about a world that works differently.

Thursday, September 27, 2018

Tech Firms Are Not Sovereigns

This is a very important issue. Andrew Keane Woods, writing at Lawfare:

Technology firms are extraordinarily powerful. They control vast sums of money. They serve unprecedentedly large customer bases—in some instances, larger customer bases than any nation on earth (Facebook now has over 2 billion users). Tech firms implement rules on their platforms in a law-like manner, often in ways that are as consequential or more than any law on the books, and they regularly resist state regulatory efforts. This has led a number of people to suggest that tech firms are sovereigns (and, often, sovereign in ways that large firms before them were not).

Cf. this post, Will Trumpism Usher in the Kind of Dystopian Future Depicted in Some Science Fiction?, which presents Abbe Mowshowitz's concept of virtual feudalism.

I disagree, and this essay explains why. Even if we can agree that tech firms play all sorts of state-like functions, it is not helpful to think of them as sovereigns. This is neither a descriptively accurate nor a normatively desirable way to approach the central regulatory challenge of the global internet. It frames the key regulatory challenge—and the central power struggle—as between states and tech firms, when in fact it is a struggle between states.

The question is not can states pursue their goals vis-à-vis global tech firms, but rather: which states and how? This essay does not set out to address these questions—for a start at that, see my article, “Litigating Data Sovereignty,” forthcoming in the Yale Law Journal. Rather, this short essay provides reasons to be skeptical of claims that tech firms are sovereigns—either on their own or as compared to other large firms like Walmart or Coca-Cola.

Comments are very welcome.

Earl Wasserman, a lifelong student, a scholar’s scholar

I’m told that he was sometimes known as “Earl the Pearl” among the English Department grad students. Why, I don’t know. Well sure, there’s the sound of the thing, but there’s got to be more than that, no? Pearl of great price? I don’t know.

To me he was Dr. Wasserman.

A couple of years ago another Johns Hopkins graduate, Shale D. Stiller, published an article about him in the alumni magazine, “Remembering a Giant: Earl Wasserman”. Among other things Stiller talked of the discrimination Wasserman had faced as a Jew:

His first choice when he entered Johns Hopkins was to study mathematics. But he was advised that Jewish students were not welcome in the Math Department, so he migrated to English. After two years, he was accepted into the graduate program and received his doctorate four years later in 1937.

After the war Wasserman, who had served in the Pacific, decided he wanted to return, this time to the faculty. The English Department was enthusiastic, but had to overcome the anti-Semitism of the university's president, Isaiah Bowman. He joined the faculty in 1948 and stayed until his untimely death in 1973 at the age of 59. By the time I arrived at Hopkins in the mid-1960s things had changed considerably. There were many Jews on the faculty and in the student body.

Here’s a rather different passage from Stiller's article, one that speaks to Wasserman's character:

Wasserman was tough but fair. He was intimidating to many people. Legendary Johns Hopkins humanities professor Richard Macksey could not say enough about Wasserman’s “passion and fervor” while teaching. “If you really wanted your battery charged, you would go in and listen to Earl for a while.” Jerry Schnydman, former director of admissions and secretary of the board of trustees at Hopkins, took Wasserman’s course on Keats and Shelley in the mid-1960s because Wasserman was “the most revered teacher at Hopkins.” Schnydman did not receive a great mark for the course, but because Wasserman happened to be his adviser, he was constrained to meet with him to approve his schedule for the next term. Schnydman approached the meeting with great trepidation because of his low mark. Wasserman immediately put him at ease by commenting that Schnydman was an A student in lacrosse (being an All-American) and that when one averaged the marks in lacrosse and Keats-Shelley, he would do well in life. He has never forgotten Wasserman's generosity.

There was a brief note at the end of the article indicating that one could get a longer version of the article by writing directly to Stiller. While I was waiting for Hurricane Sandy to blow through, I did so, and sent my own observations about Wasserman.

* * * * *

Dear Shale, if I may,

I’ve now read your longer piece about Wasserman and enjoyed it a great deal. It was good to hear those voices. I know many, perhaps most, of the people you quoted, some better than others. And I’ve had a chance to think about my own debt to Wasserman. At the same time I was struck by the thought that the world in which Wasserman was great is, if not all but gone, fading fast. And it is by no means clear what will replace it.

So, with your indulgence, I’d like to take a little time and reflect upon matters (as I await the storm that’s coming up the coast; I live in Hoboken).

I was at Hopkins from the fall of ’65 through the spring of ’73 and got a bachelors in philosophy and a masters in humanities, with literature as the area that pulled all the others together. Dick Macksey was my main professor. I took a number of courses from him, was a TA in one of his courses, and he ultimately supervised my masters thesis, which was on “Kubla Khan.” Other professors were important as well, including Mary Ainsworth in psychology, Arthur Stinchcombe in sociology, and of course Wasserman.

As I indicated in my previous note, I took his two-semester course in Romantic Literature in 68-69, my senior year. We studied Keats, Shelley, and Austen the first semester and Wordsworth, Coleridge, and Scott the second. As I vaguely recall enrollment dropped from the first to the second semester, which prompted Wasserman to lead one of the second-semester discussion sessions himself, presumably to get a better handle on his students. I was in his discussion section.

I have no specific memories from that semester, but I did become interested in Coleridge’s “Kubla Khan” and settled on that as the topic of a master’s thesis in humanities. While it was my intention to get a doctorate, this was during the Vietnam war and I had drawn a low number in the draft, making my immediate future uncertain. I’d become a conscientious objector and decided to perform my alternative service in the Chaplain’s Office at Hopkins and, while doing that, to get my masters in the Humanities Center under Macksey.

But, because “Kubla Khan” was my text, I worked fairly closely with Wasserman for a semester or two, meeting with him on a regular basis, perhaps as often as once a week. Some of our conversations were quite animated and at times it seemed as though we might manage to levitate his desk. At one point I got so comfortable as to lean back in my chair and put my feet on his desk. The look he gave me, however, prompted me to remove them and to once again sit up.

There was one point where we agreed that perhaps the most important job of a scholar was to ask the right questions. Answers could always be found, but the quest must go on. At some time during this period some visiting scholar gave a lecture on Coleridge which I was unable to attend. I am told that Wasserman asked a question in my name. Or perhaps I was at the lecture, but simply kept respectfully to the rear of the room, and quiet. I don’t really recall.

All I know is that he asked a question in my name. That impressed me, not merely for the implied compliment, but more importantly, I think, for the courtesy. You quoted a passage from a letter where he talked of his intellectual life being intertwined with those of colleagues. That requires courtesy; it’s not simply that everyone’s contributions to the conversation should be acknowledged, but that keeping track of who said what is important to managing the intellectual drift of the matter. The ‘valence’ of an idea is linked to the person who thought it. To keep an intellectual community going you need to keep track of those things.

So, when Wasserman made a query in my name, I learned something about how to conduct myself in an intellectual community. And perhaps that is what I got from Wasserman. The thesis I ended up writing about “Kubla Khan” was quite different in style from Wasserman’s work. But the fact that I would devote some 70 pages to one 54-line poem, that concern about detail, that intensity, I picked up from Wasserman. That it was worth the effort; I got that from Wasserman.

In the course of working on that thesis I read everything I could find in the Hopkins library on “Kubla Khan.” That sense of thoroughness came through Wasserman. It was a matter of duty to the intellectual community.

That’s what I got from Wasserman, the feel for an intellectual community. The work itself is important, and it is work you do in a community.

I hope this is of some use to you.

Regards,

Bill Benzon

Wednesday, September 26, 2018

From a big wave to a great wave

Evolution of Hokusai's "Great Wave".

1. When he was 33 (1792).

2. When he was 44 (1803).

3. When he was 46 (1805).

4. When he was 72 (1831).

tkasasagi🦄 (@tkasasagi), September 26, 2018, pic.twitter.com/hUXkjNjGxh

Why we'll never be able to build technology for Direct Brain-to-Brain Communication

I know Elon Musk has started a company that aims to create high-bandwidth brain-machine communication (Neuralink). I have no idea what kind of technology he's got in mind (the website has nothing). I'm inclined to think, however, that any such linkage will require considerable learning in order to be usable. Here's a post from May 2013 that's about high-bandwidth brain-to-brain communication, which is different from brain-to-machine. But the basic argument applies.

* * * * *

Would it be possible, some time in the unpredictable future, for people to have direct brain-to-brain communication, perhaps using some amazing nanotechnology that would allow massive point-to-point linkage between brains without shredding them? Sounds cool, no? Alas, I don’t think it will be possible, even with that magical nanotech. Here’s some old notes in which I explore the problem.

My basic point, of course, is that brains coupled through music-making are linked as directly and intimately as computers communicating through a network (an argument I made in Chapters 2 and 3 of Beethoven’s Anvil, and variously HERE, HERE, HERE, and HERE). And, like networked computers, networked brains are subject to constraints. In the human case the effect of those constraints is that the collective computing space can be no larger than the computing space of a single unconstrained brain. This is true no matter how many brains are so coupled, despite the fact that these coupled brains have many more computing elements (i.e. neurons) than a single brain has.

The explanatory problem, as I see it, is that we tend to think of brains as consisting of a lot of elements. Thus, an effective connection between brains should consist of an element-to-element, neuron-to-neuron, hook-up, no? Compared to that, music seems pretty diffuse, though there’s no doubt that, somehow, it works.

So, let’s take a ploy from science fiction, direct neural coupling. I’ve seen this ploy used for man-machine communication (by e.g. Samuel Delany) and surely someone has used it for human-to-human communication (perhaps mediated by a machine hub). Let’s try to imagine how this might work.

The first problem is simply one of physical technique. Neurons are very small and very many. How do we build a connector that can hook up with 10,000,000 distinctly different neurons without destroying the brain? We use Magic, that’s what we do. Let’s just assume it’s possible: Shazzaayum! It’s done.

Given our Magic-Mega-Point-to-Point (MMPTP) coupling, how do we match the neurons in one brain to those in another? After all, each strand in this cable is going to run from one neuron to another. If our nervous system were like that of C. elegans (an all but microscopic worm), there would be no problem. For that nervous system is very small (302 neurons I believe) and each neuron has a unique identity. It would thus be easy to match neurons in different individuals of C. elegans. But human brains are not like that. Individual neurons do not have individual identities. There is no way to create a one-to-one match between the neurons in one brain with corresponding neurons (having the same identity within the brain) in another; two brains don’t even have the same number of neurons much less a scheme allowing for matching identities. In this respect, neurons are like hairs, and unlike fingers and toes, where it’s easy to match big toe to big toe, index finger to index finger, and so forth.

So, that’s one problem, how to match the neurons in two brains. About all I can see to do is to match neurons on the basis of location at, say, the millimeter level of granularity. Perhaps we choose 10M or 100M neurons in the corpus callosum and just link them up. There’s another problem: How does a brain tell whether or not a given neural impulse comes from it or from the other brain? If it can’t make the distinction, how can communication take place?

Real brains don’t have any ‘free’ input-output ports. If they did, then those ports could be used in brain-to-brain communication. Anything coming in through such a port would be known to come from outside and, if you wanted to contact the other brain, you would send your signal out through the proper port. But such things don’t exist for real brains.

What, then, happens when we finally couple two people through our wonderful future-tech MMPTP? The neurons are not going to correspond in a rigorous way and they’re not going to know what’s coming from within vs. outside. In that situation I would imagine that, at best, each person would experience a bunch of noise.

I haven’t got the foggiest idea how that noise would feel. Maybe it will just blur things up; but it might also cause massive confusion and bring perception, thought, and action to a crashing halt. The only thing I’m reasonably sure of is that it won’t yield the intimate and intuitive communion of one mind with another.

However, if this coupling doesn’t bring things to a halt, it’s possible that, in time (weeks? months? years?), the two individuals with their coupled brains will work things out. The brains will reorganize and figure out how to deal with one another; that is, they will learn. The self-organizing neural processes within each brain will learn to deal with activity coming from the other brain and incorporate it into their routines. [Sort of like musicians from different cultures meeting and jamming and gradually arriving at ways to play together.]

Self-organization is the key. It’s not only that individual brains are self-organized, built from inside, but that individual brains consist of many regions each of which is self-organized and quasi-autonomous. Each of these regions is connected to many other regions and is interacting with them continuously, incorporating their activities into its own self-organized patterns. [Like musicians jamming. Each makes their own decisions and their own sounds, but is listening to all the others and acting on what she hears.]

And, as I said, that’s how brains are built, from the very beginning. The process is quite different from what I did when I assembled my stereo amplifier from a kit. When I built my amplifier I laid all the parts out and assembled the basic sub-circuits. I then connected those together on the chassis and, when it was all connected, plugged it in, turned it on, and hoped for the best. That is, no electricity flowed through these components until they were all connected. [BTW, it didn’t work at first. There was a cold solder joint in the power amplifier circuit. Once I’d fixed that, I was in business.]

Brain development isn’t like that at all. The individual elements are living cells; they’re operational from birth. And the operation of one neuron affects that of its near and distant neighbors. If this were not the case, it would be impossible to construct a large and complex brain like those of vertebrates; the components wouldn’t mesh effectively. So there’s never really a magic moment like that in the life of a stereo amplifier when all the dead elements suddenly become alive. The closest we’ve got is the moment of birth, when the operational environment for the nervous system becomes dramatically changed, all of it, at once, and forever. And then it keeps on growing and developing, self-organizing (region by region) in interaction with the external world.

But brains remain forever unique. And that means that our fantasy MMPTP coupler is, in fact, no better than music. Real music, that we can make any time, that we’ve been making since before speech evolved, that’s as direct and intimate as it gets. The Vulcan Mind Meld is science fiction; music is not.

But, as I said at the beginning, musical coupling is subject to one constraint: the collective computational space of the coupled system is no larger than that of a single unconstrained brain. That means that music is a very good way for brains to mutually “calibrate” one another, to match moves and arrive at common understandings. Music creates the trust between individuals that language needs in order to be effective.

* * * * *

Note, I've included a slightly different version of this post in a working paper, Coupling and Human Community: Miscellaneous Notes on the Fundamental Physical Foundations of Human Mind, Culture, and Society, February 2015, pp. 19-20, https://www.academia.edu/10777462/Coupling_and_Human_Community_Miscellaneous_Notes_on_the_Fundamental_Physical_Foundations_of_Human_Mind_Culture_and_Society

The importance of words and stories: If we don't know how to put it into words, it doesn't exist (lessons from Kavanaugh)

Penelope Trunk, Judge Kavanaugh has taught me so much about how the world works. First four paragraphs:

I can’t stop reading about Judge Kavanaugh. I am learning so much about how the world works.

His yearbook from high school is horrifying. There were derogatory jokes about women throughout the pages. Everyone knew, but the boys were rich, and they were going to top colleges, so it was okay. I didn’t know there were yearbooks like that.

I knew that in those rich-kid private schools the teachers decide who goes to which school. I know that, for example, Yale takes a certain number of kids from Choate, and Choate tells Yale which kids will be the best fit. There is a symbiotic relationship. Choate can say they always get kids into Yale. And Yale knows they’ll get the best kids for Yale without having to do much searching.

What I didn’t know was that the most coveted clerkships work the same way. Judge Kavanaugh always takes law students from Yale. The symbiotic relationship there is that Yale can say their students always get great clerkships, and in exchange Yale law professor Amy Chua makes sure Kavanaugh always has a stream of female law school students who look like models. Really. Click that link.

This and that and then she mentions that she was once sexually assaulted, and once was forced to touch a man's penis:

And I said no, get away from me, gross. But I still touched it. I am shocked that it’s hard for Ramirez to put a coherent story together. I thought not having a coherent story meant maybe what I thought happened didn’t happen.

But now I understand that not having the words to describe it is part of the problem. We have no word in the English language for being so stressed and so full of shame that you start trying so hard to block it out the minute you get way. Of course we have no word for that. Because it’s only women feeling a loss of power who need that word.

She had no language.

We need to create that language so these indignities and this violence can have a proper place in public discourse.

Are we now beginning to create that language?

Regardless of what happens to Kavanaugh – he shouldn't be confirmed, but who knows? – I'm glad to see that this widespread culture of toxic masculinity and misogyny is being brought to light.

Tuesday, September 25, 2018

Sean Carroll interviews David Poeppel about language and the brain

Sean Carroll is a physicist at CalTech and Poeppel studies the brain, language, and cognition at NYU and the Max Planck Institute for Empirical Aesthetics in Frankfurt, Germany.

0:11:02 David Poeppel: [...] Now, the influence of Chomsky was to argue, in my view, successfully that you really wanted to have a mentalist stance about psychology. And he had a lot of very interesting arguments. He also made a number of very important contributions to computational theory, to computational linguistics, and obviously to the philosophy of mind. [...]

0:11:58 DP: But he is... He’s difficult. I actually just finished a chapter, a couple of... Last year’s so-called “The Influence of Chomsky on the Neuroscience of Language,” because many of us are deeply influenced by that. The fact of the matter is, his role has been both deeply important and moving and terrible and it’s partly because he’s so relentlessly un-didactic. If you’ve ever picked up any of his writings, it’s all about the work. He’s not there to make it bite-size and fun. He assumes a lot, a lot of technical knowledge and a lot of hard work. And so if you’re not into that, you’re never gonna get past page one because it is technical. But that’s made it very difficult because it seems obscurantist to many people.

0:12:40 Sean Carroll: It’s always very interesting, there are so many fields where certain people manage to have huge outside influences despite being really hard to understand. Is it partly the cache of the reward you feel when you finally do understand something difficult?

0:12:57 DP: I wish that were true. That would mean a lot of people would read, let’s say, my boring papers. But I think in the case of Chomsky, it’s true because there are superficial misinterpretations and misreadings that are very catchy. The most famous concept is language is innate. Now, such a claim was never made and never said. It’s much more nuanced. It’s highly technical. It’s about what’s the structure of the learning apparatus, what’s the nature of the evidence that the learner gets. Obviously this is a very sophisticated and nuanced notion, but what comes out is, “Oh, that guy’s claim is language is innate,” and that’s...

On understanding technical work:

0:14:56 DP: But, yeah, sometimes, especially in, let’s say, technical disciplines, you have to do the hard work and you can’t cut any corners. You have to actually get into the technical notions and what are the presuppositions, what are the, let’s say, hypothesized primitives of a system, how do they work together to generate the phenomena we’re interested, and so on. The concept of generative grammar, it’s a notion of grammar that’s trying to go away from simply a description or list of factoids about languages, and trying to say, “Well, how is it that you have a finite set of things in your head, a finite vocabulary, and ostensibly a finite number of possible rules, maybe just one rule, who knows, but you can generate and understand an infinite number of possible things?” It’s the concept that’s typically called “discrete infinity.”

0:15:43 SC: Yep, okay.

0:15:44 DP: That’s a cool idea, and the idea was to work it out. Well, if that’s the parts list, how can you have a system and how can you learn that system, acquire that system? How can that system grow in you, and you become a competent user of it? That’s actually subtle, and you can’t just...
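To make “discrete infinity” a bit more concrete, here is a toy sketch in Python. It is not anything Poeppel or Chomsky proposes; the vocabulary (`NAMES`, `VERBS`, `BASE`) and the single embedding rule are made up for illustration. The point is only that a finite word list plus one rule that can reapply to its own output yields more sentences at every level of embedding, with no upper limit.

```python
from itertools import product

# A finite vocabulary (hypothetical, chosen only for illustration).
NAMES = ("Mary", "John")
VERBS = ("thinks", "says")
BASE = ("it rains",)

def sentences(depth):
    """Return every sentence with exactly `depth` levels of embedding.

    One recursive rule: S -> NAME VERB "that" S, bottoming out in BASE.
    """
    if depth == 0:
        return list(BASE)
    inner = sentences(depth - 1)
    return [f"{name} {verb} that {s}" for name, verb, s in product(NAMES, VERBS, inner)]

# The number of distinct sentences grows with every extra level of embedding.
for depth in range(4):
    print(depth, len(sentences(depth)))
```

Each extra level multiplies the count by four here, so there is no largest sentence and no last one, even though the parts list never changes.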

The speech signal:

0:25:08 DP: Our conversation, if you measured it, the mean rate of speech, across languages by the way, it’s independent of languages, it’s between 4 and 5 Hertz. So, the amplitude modulation of the signal... The signal is a wave. You have to imagine that any signal, but the speech signal, in particular, is a wave that just goes up and down in amplitude or, informally speaking, in loudness. The signal goes up and down, and the speech signal goes up and down four to five times a second.

0:25:35 SC: What does the speech signal mean? Is this what your brain waves...

0:25:38 DP: The speech signal is the stuff that comes out of your mouth. I’m saying, “Your computer is gray.” Let’s say that was two and a half seconds of speech. It came out of my mouth as a wave form, and that’s the wave form that vibrates your eardrum, which is cool. But if you look at the amplitude of that wave form, it’s signal amplitude going up and down. It’s actually now... This is not debated, it’s four to five times per second. This is a fact about the world, which is pretty interesting. So, the speech rate is... Or the so-called modulation spectrum of speech is 4 to 5 Hertz. [...]

Music, incidentally, has a modulation spectrum that’s little bit slower. It’s about 2 Hertz. That’s equivalent to roughly 120 beats per minute, which is pretty cool. [...] If you take dozens and dozens of hours of music, and you calculate what is the mean across different genres, what is the mean rate that the signal goes up and down, it’s 2 Hertz.
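For readers who want to see what “the rate at which the signal goes up and down” means operationally, here is a rough Python sketch. It is not the analysis Poeppel’s lab uses. It builds a synthetic tone whose loudness rises and falls four times a second, takes the absolute value as a crude amplitude envelope, and asks which slow frequency carries the most energy in that envelope. The sample rate, the 200 Hz carrier, and the names `per_minute` and `dominant_modulation_hz` are all my own assumptions.

```python
import math

def per_minute(hz):
    """Convert cycles per second into cycles (beats) per minute."""
    return hz * 60

def dominant_modulation_hz(samples, sample_rate, candidates):
    """Return the candidate frequency with the most energy in the envelope."""
    envelope = [abs(x) for x in samples]      # crude amplitude envelope
    mean = sum(envelope) / len(envelope)
    centered = [e - mean for e in envelope]   # drop the constant (0 Hz) part
    best_hz, best_power = None, -1.0
    for hz in candidates:
        step = 2 * math.pi * hz / sample_rate
        re = sum(c * math.cos(step * n) for n, c in enumerate(centered))
        im = sum(c * math.sin(step * n) for n, c in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_hz, best_power = hz, power
    return best_hz

SAMPLE_RATE = 1000   # samples per second (assumed)
DURATION = 2         # seconds of synthetic signal (assumed)

# A 200 Hz tone whose loudness rises and falls 4 times per second.
samples = [
    (1 + 0.8 * math.cos(2 * math.pi * 4 * n / SAMPLE_RATE))
    * math.sin(2 * math.pi * 200 * n / SAMPLE_RATE)
    for n in range(SAMPLE_RATE * DURATION)
]

rate = dominant_modulation_hz(samples, SAMPLE_RATE, range(1, 11))
print(rate, "Hz envelope,", per_minute(rate), "cycles per minute")
print("music at 2 Hz:", per_minute(2), "beats per minute")
```

Real speech is far messier than a single modulated tone, so treat this only as a picture of the measurement, not a model of the data.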

We've got better descriptions of what the brain is doing, explanations...:

0:31:39 DP: And partly, that has to do with what do we think is an explanation. And that’s a very complicated concept in its own right, whether you’re thinking about it from the point of view of philosophy, the sciences, an epistemological idea. But we do have, let’s say... What we have for sure is better descriptions, if not better explanations. And the descriptions have changed quite a bit. I don’t know what the time scale is that would count as success, but I think it’s... We can sort of say that we’ve had the same paradigm for... We’ve had the same neurobiological paradigm for about 150 years since Broca and Wernicke, since the 1860s actually. A very straightforward idea...

The dual stream model of speech:

0:42:52 DP: [...] So, we reasoned that maybe the brain capitalizes on the same computational principles. You have one stream of information that says, “Look, what I really need to do is figure out what am I actually hearing, what are the words, how do I put them together, and how do I extract meaning from that.” And you have another stream that really needs to be able to deal with, “Well, how do I translate that into an output stream?” Let’s call it a “how” stream or an articulatory interface.

0:43:33 DP: Why would you do such a thing? Well, let’s take the simplest case of a word. And so what’s a word? Word is not a technical concept, by the way. Word is an informal concept, as you remember from your reading of Steve Pinker’s book. The technical term here would be morpheme, the smallest meaning-bearing unit. But we’ll call them words, words roughly correspond to ideas. So, what is a word? You have a word that comes in. My word that comes into your head now is, let’s say, “computer.” And as it comes in, you have to link that sound file to the concept in your head. It comes in, you translate it into a code we don’t know, let’s say Microsoft brain, and that code then gets linked somehow to the file that is the storage of the word “computer” in your head. Now, in your head somewhere there’s a file, an address, that says the word “computer,” what it means for you, like, “I’m on deadline,” “Oh, this file,” or, “Goddamn, my email crashed.” But there’s many other things. So, you know what it means, you know how to pronounce it, you know a lot about computers, but you also know how to say it. So, it also has to have an articulatory code.

0:44:43 DP: Now, here comes the rub. The articulatory code is in a different coordinate system than all the other ones, because it’s in the motor system. It’s in basically time and motor... People call it joint space, because you move articulators, or you move your jaw, your tongue, your lips. So, the coordinate system that you use as a controller is quite different than the other ones. You have to have areas of the brain that go back and forth seamlessly and very quickly, because speech is fast, between an articulatory coordinate system for speaking and an auditory coordinate system for hearing. And some coordinate systems yet unspecified, which we don’t understand at all, for meaning. You’re screwed. So, even something banal, like knowing the word “computer,” or “glass,” or “milk,” is already a deeply complicated theoretical problem.

Predictive computation:

0:55:25 DP: I think the notion that it’s entirely constructed, that you need a computational theory using the word “computational” but loosely, I think, is now completely convincing. That the way we do things are predictive, for instance, that most of what we do is a prediction, that the data that you get are vastly under-determined, the percepts and experience that you extract from the initial data, and so that it’s a filling in process. So, you take underspecified data, that are probably noisy anyway, and then you build an internal representation that you use for inference and you use for action. Those are the two things you presumably wanna do most. You wanna not run into things and you wanna think about stuff.

And so it goes.

Bonus: Sean Carroll on the problem of having an original idea:

0:52:18 SC: The problem is that people who sound like complete nut jobs... If you do have a tremendously important breakthrough that changes the world, you will be told you’re a complete nut job. But most people who sound like complete nut jobs do not have tremendously important breakthroughs that will change the world.

CFP: Computational cognitive science and digital humanities [#DH]

For some time now I've been saying that a convergence between computational criticism and cognitive science is a "natural", particularly the computational side of cognitive science, where the mind is conceived of as involving computational processes [1]. It's beginning to happen, at last.

The Data Science Institute and the Insight Centre for Data Analytics have announced the 1st Workshop on Cognitive Computing Methodologies for the Humanities to take place on December 6, 2018 at the National University of Ireland in Galway. Here's a list of potential topics from the CFP:

- Computational psychological profiling of artists and authors alike.

- Automatic identification of psychological traits of fictional figures (including fictionalised/romanced actual figures) in literature.

- Formal models connecting sociological and psychological traits of figures in literature and visual arts.

- Modeling of knowledge acquisition from narrative texts.

- Quantitative (e.g. statistical, computational, machine learning) methods for cognitive analysis in literature or poetry.

- Cognitively inspired Machine Learning for literacy and poetry.

- Computational theories of narrative and poetry literature.

- Computational and cognitively-inspired models for literature.

- Identification of cultural and linguistic phenomena throughout history.

- Computational creativity for the generation and understanding of poetry and romance.

Check the CFP (link above) for dates and other information.

[1] See e.g.: William Benzon, Digital Criticism Comes of Age, Working Paper, December 2015, https://www.academia.edu/19414113/Digital_Criticism_Comes_of_Age.

William Benzon, From Canon/Archive to a REAL REVOLUTION in literary studies, Working Paper, December 2017, https://www.academia.edu/35486902/From_Canon_Archive_to_a_REAL_REVOLUTION_in_literary_studies.

Monday, September 24, 2018

Still, why do literary critics find it so difficult to focus on form?

A topic I’ve been thinking about off and on for some time, most recently: Once more, and thinking of ring composition: Why aren’t literary critics interested in describing literary form?

A meaning-focused discipline

It’s surely that literary criticism has focused on meaning. But why should that distract from an interest in form, especially since so-called formalism looms large in methodological discussions and in practical criticism? That is, why does formalism have so little to do with actual form? That’s the question.

Of course, form in the sense that I mean, isn’t completely invisible. It’s quite visible in formal verse, where patterns of rhyme, meter, and so forth have been extensively catalogued. This is, however, a relatively peripheral matter for literary critics, though it may be very important to some working poets.

Verse forms are visible because they are forms of sound, and sound is readily objectified. What of ring forms? Well, in the small, they’re known as chiasmus, and are well known and often remarked. But chiasmus often involves symmetrical arrangements of sound. Large-scale ring forms do not. They’re more difficult to identify, and certainly more problematic.

That they are large scale, encompassing the whole work, is one source of difficulty. You have to compare features across the whole text, which is much more difficult than comparing features within a line or a few lines of poetry. Moreover it’s not obvious what features you should be comparing. It’s not sound. And objectifying subject matter is trickier, no?
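Once the segments of a text have been labeled, checking for the ring itself is trivial; a small Python sketch shows how little of the problem that is. The labels and the function names `is_ring` and `ring_pairs` are hypothetical, and the sketch assumes someone has already done the hard interpretive work of deciding which segments count as "the same" feature.

```python
def is_ring(labels):
    """True if the labels read the same forwards and backwards (A B C B A)."""
    labels = list(labels)
    return len(labels) > 2 and labels == labels[::-1]

def ring_pairs(labels):
    """Index pairs of segments that should mirror each other around the center."""
    n = len(labels)
    return [(i, n - 1 - i) for i in range(n // 2)]

# Hypothetical segment labels for two texts.
print(is_ring(["A", "B", "C", "B", "A"]))      # mirrored around C
print(is_ring(["A", "B", "C", "A", "B"]))      # same features, no mirror order
print(ring_pairs(["A", "B", "C", "B", "A"]))   # which segments to compare
```

The comparison across the whole text, the part that takes hours, is everything that happens before the labels exist.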

The road to form

In my own experience, it takes a fair amount of tedious work to identify ring-form structures. I can think of one case where I suspected a ring-form at the outset, Tezuka’s Metropolis, and another where I started with a clue, David Bordwell’s remark about symmetry in King Kong. In both of these cases it took me some hours of work over a day or three to conduct the analysis. And then there’s Gojira, where I’d worked on the film off and on over a couple of years before I suspected it might be a ring composition. And then, again, it was hours of work to verify my suspicion.

I note moreover that I did this work after, long after, I had adopted a computational view of literary texts. Indeed, I did most of this work after my 2006 paper on literary morphology [1]. By that time I had, of course, done the work on “Kubla Khan” and on Tezuka’s Metropolis. But it wasn’t until several years later that I ran up a post about “The Nutcracker Suite” and “Sorcerer’s Apprentice” episodes of Fantasia [2], though I must have worked on “The Nutcracker Suite” in 2006 or 2007 as I’d written a long email to Mary Douglas about it and she died in May of 2007.

My point is simply that I hadn’t begun this work in a systematic way until I’d found an example or two by the by and until I had a theoretical reason – computational form – to look for them. I’d been driven to computation by “Kubla Khan” years ago, after I found those nested structures that just “smelled” like computation.

[Figure: Nested structure in “Kubla Khan”, ll. 1-36.]

And it was easy and natural enough to extend computation to Lévi-Strauss’s notion of the armature. And finally, having adopted a computational view of language, it followed that literature must have a computational aspect as well. The remaining issue is whether or not literary texts display large-scale computational structures rather than simply being a concatenation of sentence-level structures. The existence of ring-form texts suggests that they do.

Sunday, September 23, 2018

Software sucks! Why?

I’ve been programming for 15 years now. Recently our industry’s lack of care for efficiency, simplicity, and excellence started really getting to me, to the point of me getting depressed by my own career and the IT in general.

Modern cars work, let’s say for the sake of argument, at 98% of what’s physically possible with the current engine design. Modern buildings use just enough material to fulfill their function and stay safe under the given conditions. All planes converged to the optimal size/form/load and basically look the same.

Only in software, it’s fine if a program runs at 1% or even 0.01% of the possible performance. Everybody just seems to be ok with it. People are often even proud about how much inefficient it is, as in “why should we worry, computers are fast enough”:

@tveastman: I have a Python program I run every day, it takes 1.5 seconds. I spent six hours re-writing it in rust, now it takes 0.06 seconds. That efficiency improvement means I’ll make my time back in 41 years, 24 days :-)

You’ve probably heard this mantra: “programmer time is more expensive than computer time”. What it means basically is that we’re wasting computers at an unprecedented scale. Would you buy a car if it eats 100 liters per 100 kilometers? How about 1000 liters? With computers, we do that all the time.
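The payback figure in that tweet is easy to check. Here is a minimal Python sketch, assuming the program runs exactly once a day and that a year averages 365.25 days; the tweet states neither, and the function name `payback_days` is mine.

```python
def payback_days(old_seconds, new_seconds, rewrite_hours, runs_per_day=1):
    """Days of use needed before the saved run time equals the rewrite time."""
    saved_per_day = (old_seconds - new_seconds) * runs_per_day
    return rewrite_hours * 3600 / saved_per_day

# Figures from the tweet: 1.5 s before, 0.06 s after, six hours of rewriting.
days = payback_days(old_seconds=1.5, new_seconds=0.06, rewrite_hours=6)
years, leftover_days = divmod(days, 365.25)   # 365.25-day year is an assumption

print(f"speedup: {1.5 / 0.06:.0f}x")
print(f"payback: {days:.0f} days = {int(years)} years, {int(leftover_days)} days")
```

Under those assumptions the rewrite pays for itself after about 15,000 days, which is 41 years and 24 days, so the tweet’s number holds up. Run the program a thousand times a day instead (`runs_per_day=1000`) and the same rewrite pays off in 15 days, which is presumably the kind of case the article has in mind.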

Lots of stuff here.

For example:

Every device I own fails regularly one way or another. My Dell monitor needs a hard reboot from time to time because there’s software in it. Airdrop? You’re lucky if it’ll detect your device, otherwise, what do I do? Bluetooth? Spec is so complex that devices won’t talk to each other and periodic resets are the best way to go.

This too:

We put virtual machines inside Linux, and then we put Docker inside virtual machines, simply because nobody was able to clean up the mess that most programs, languages and their environment produce. We cover shit with blankets just not to deal with it. “Single binary” is still a HUGE selling point for Go, for example. No mess == success.

And so it goes.

I note that this is the same world where other folks are talking about superintelligent computers that recursively reprogram themselves to be smarter and smarter and really smarter.

A look under the hood through the insane musical genius that is Mnozil Brass

[Note: This was originally posted at 3 Quarks Daily, but it got lost when 3QD moved to a new location. Meanwhile a number of the video clips got thrown in jail by the copyright protection gang. Consequently I've had to find new versions (where I could) and the timings on the new versions are a bit different from the timings I list in the text.]

I don’t know just when it was, but let’s say it was half a dozen years ago. I’m on an email list for trumpet players and someone had sent a message suggesting we check out the Mnozil Brass. Strange name, I thought, but I found some clips on YouTube and have been entranced ever since.

They’re a brass septet from Austria, three trumpets, three trombones, and a tuba. Their repertoire is all over the place and their genius is unmistakable. They are superb musicians, but also arch conceptualists, skilled comedic performers, and questionable dancers. They put on a hell-of-a-show. And I do mean “put-on”, as much of what they do is deeply serious in a way that only inspired buffoonery can be.

Here’s a performance that was posted to YouTube in April of 2012. It’s just shy of four minutes long and goes through distinct phases. It’s called “Moldavia”, presumably after the old principality in Eastern Europe.

Watch the clip. Tell me what you hear, but also what you see. Both are important. It’s their interaction that is characteristic of Mnozil.

What I hear, of course, is brass playing, a lyrical trombone, ferocious trumpets, a tuba holding down the bottom. And then there’s the singing toward the end. What are they doing while singing? They’re not standing still like choir boys. They’re moving and gesticulating madly. Dominance it looks like to me, (male) dominance. You may have heard that in the music, though perhaps not identifying it as such; but now you can see it. They’re showing you what’s driving the music.

But that’s not how it starts. It starts with a rubato trombone solo. There’s a shot of the tuba player slouched in his seat reading some magazine; it’s black with a large white Playboy bunny logo on it. The implication is that he’s looking at pictures of naked women.

And so it goes. There’s lots of business going on. I could, but won’t, comment on it endlessly.

But I’ll say just a bit more. At about twenty seconds in we’re in tempo and the leftmost trumpeter (Thomas Gansch, the ringleader of the group) does a few dance steps, while remaining seated. To my (not terribly well informed) eye they look like steps from some Balkan circle dance. A bit later there’s some business about the trumpeters standing to play, but one of them isn’t coordinated with the others. What’s that about?

It goes by quickly and then things move on. What we’re seeing is the behind-the-scenes (under the hood) mechanisms of performance. Signals have been crossed and it takes a while to get things straightened out. That is, that’s what we’re being shown. Of course, no one’s really confused. This is all scripted. It’s part of the performance. Mnozil are playing at performing.

But that disappears at about two minutes in when the trumpets start playing a wicked fast melody in an odd meter (7/8), at least by the most common practice in Western music. Remember, this is “Moldavia”, somewhere suspended between Europe and the (exotic) Middle East. I can assure you, as a trumpet player, that what they’re doing here is ferociously difficult. But you don’t need me to tell you that. You can hear it in the music. And that difficulty requires skill, concentration, and commitment. At this point it’s all and only music. And yet a minute later they’re doing that vocal nonsense.

That’s how it is. Back and forth. Irony. Sincerity. Irony. Sincerity. Overall: KICKASS! Their virtuosity gives weight to their clowning. And their clowning humanizes their virtuosity.

They’re unique.

Here’s a different clip, called “Ballad”, and that’s what it is. I’ve been told it’s from the soundtrack of Alfred Hitchcock’s Vertigo, but I’ve not verified that, nor does it matter for my purposes. What matters is that it’s one piece of music from start to finish, with no funny stage business. It’s ‘ordinary’ music, played extraordinarily well.

[This clip was, alas, consumed by the copyright protection gang.]

Regardless of the kind of music being played, this is what we expect of musical performances, just the music. If you don’t see what Mnozil’s doing while performing “Moldavia” you miss quite a bit. Here, you don’t miss much.

Now let’s look at a performance where the music is utterly simple while the performance is all. It’s called “Lonely Boy”. At the beginning we hear the tuba player, Wilfried Brandstötter, lay down an ostinato bass line and see a lone man – trombonist Leonard Paul – slumped down in a chair.

One by one he brings two other trombonists and then two trumpeters out. He ‘manipulates’ the trombone slides with his feet and the trumpet valves with his fingers, but the other players do the blowing. Paul is looking utterly detached while doing this. It’s an astonishing feat played in an utterly deadpan manner.

And then the last member of the group, trumpeter Roman Rindberger, comes out and removes the chair from underneath Paul. The performance continues seamlessly while Rindberger parades around like a triumphant magician. Of course, his is the easiest part, strutting and looking (a bit) graceful.

But it works. It all works. Take it as an allegory about how people performing music become a single interconnected being, albeit one distributed across several physically distinct bodies. And the audience is (just) an extension of that being.

Saturday, September 22, 2018

Courtesies and Seams: My relationship with David Hays, teacher, mentor, colleague, and friend

I’d been planning to write this for some time. Having spent a good bit of time over the last month thinking and writing about the toxic relationship Avital Ronell had with Nimrod Reitman [1], it seemed urgent that I tell a story about a different kind of relationship.

* * * * *

I met David Hays in the Spring of 1974, my second semester in graduate school at the State University of New York at Buffalo. I was sitting in the English Department’s graduate student lounge talking with Ralph Henry Reese, a second year student. When I mentioned my interest in cognitive science, and in particular, in cognitive networks, he brought out this cognitive network diagram he’d been working on. It was for buried treasure stories. He’d been working with this guy in Linguistics, David Hays. He was a computational linguist. The name rang a bell.

Didn’t he write that article in Dædalus [2], about language and love? I didn’t know he was here at Buffalo. That was a good article, the best in the issue.

And so I met Hays in his office and explained what I was up to. We talked about cognitive science I suppose, because that’s what I was there for, and about my specific problem: I’d found this formal pattern in “Kubla Khan” that smelled like computation – I’m not sure I used that word, “smelled” in that first conversation, but it did come up early in our conversations. There was nothing in literary criticism that gave me a clue as to what it was about and no one in the English Department who could really help me, though they liked the work. Perhaps he would read my MA thesis on the poem and let me know what he thought? He agreed and I left him with a copy of the thesis.

I returned a week or two later and we talked. Have you tried this? Yes, I said. Didn’t get me anywhere. What about this? Same thing. He was impressed, thought there was something there, but just what... We decided to work together, teacher and student.

* * * * *

I enrolled in a graduate seminar Hays was giving that fall, “Language as a Focus for Intellectual Integration.” The rubric was a flexible one, basically a vehicle for Hays and his students to investigate whatever interested them. Each student could suggest a book or two. I remember Hays had us read William Powers, Behavior: The Control of Perception, and Talcott Parsons, The Structure of Social Action. I’d offered Claude Lévi-Strauss, The Raw and the Cooked, and Northrop Frye, Anatomy of Criticism. I forget what else we read. The class format was simple, weekly readings, discussion in Hays’s office (there weren’t but a handful of students enrolled), and a final paper.

At the same time Hays tutored me in his semantic theory. He’d written a book, Mechanisms of Language, for a course of the same name, in which he set out his best account of language, phonology, morphology, syntax, and semantics. He never published it formally; rather the Linguistics department arranged to make copies of the typescript for distribution in class. He gave me a copy. We concentrated on semantics, which was more or less independent of the other material.

We met once a week at my apartment. We sat around the kitchen table because we needed to be able to draw diagrams. Hays’ theory was very visual. We used up a lot of paper.

Why my apartment? Convenience mostly. SUNY Buffalo had just opened a new suburban campus. The Linguistics Department had moved there, but the English Department had not. The English Department remained in north Buffalo and I lived near the department. Hays would come by my apartment on the way to his office at the new campus – on seminar days I commuted to his office by shuttle bus.

At the beginning of the semester I’d asked Hays how long he thought it would take me to learn the semantic model. Why’d I ask that? I don’t know, vanity, eagerness, who knows. I asked. He said it generally took two to three months. Thought I to myself, it won’t take ME that long. And you know what? Three months. Back and forth across the kitchen table, working exercises from Mechanisms, talking, drawing diagrams. It was like math. It WAS math, albeit of an informal kind. You had to work the problems. That was the only way.

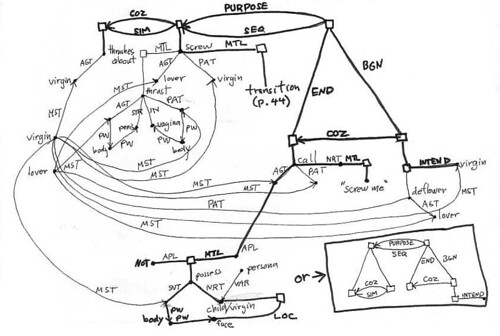

At the end of those three months I took what I’d learned in those tutoring sessions and applied it to some fragments of William Carlos Williams’ Patterson, Book V, which I’d been studying in a poetry seminar taught by Charlie Altieri. The result was a long paper which I submitted to both seminars, Modern Poetry and Linguistics as a Focus of Intellectual Integration. Here’s a diagram from that paper:

That’s what took me three months, maybe four, to learn. It’s not just the diagrams themselves, what the labels (COS, SIM, BGN, MTL, NRT, etc.) mean and so forth, but how to go from natural language statements to these diagrams representing the underlying semantic structure. It’s the back-and-forth between language and diagrams that takes time to learn, to internalize.

Friday, September 21, 2018

The extended network of humans and things (ANT) that constitutes an AI system

If you haven’t already, read this incisive and imaginative account of the vast AI system that makes this possible and so disconcerting: “Alexa, turn on the hall lights.” https://t.co/FpKOcYiCm2— chad wellmon (@cwellmon) September 21, 2018

Three modes of investigation in the human sciences: interpretive, experimental, and grammatical [#DH]

Three decades ago I prepared a report in which I proposed an interdisciplinary course that embraced what I took to be the three primary modes of investigation in the human sciences [1]. To write the report I needed terms for referring to those modes. That was a problem, for, as far as I could tell, standard terms didn’t exist.

The terminological problem remains, but I’ve reached a tentative decision about one-word terms: interpretive, experimental, and grammatical.

Interpretation, of course, can be used so broadly as to refer to just about any method. Everything requires interpretation, right? Well, yes, but that’s what I mean. I mean interpretation as it is practiced, perhaps most centrally, in literary criticism, in particular, in the practice of analyzing and interpreting individual texts. But critics can read texts spanning, say, a century and interpret what happened in the course of that century. Historians do the same thing; you amass a pile of evidence about some historical phenomenon or period and then you figure out what it all means. That is, you interpret it. And so with anthropology, and so forth.

By experimental I mean the type of work typically done in the behavioral and social sciences. One gathers data by some means – laboratory observation, survey instrument, public or private records, whatever – and subjects it to statistical analysis. The data and analysis constitute an experiment intended to test some hypothesis, though in many cases the only hypothesis on offer is the null hypothesis. Different data or different analytic approach, different experiment.

Grammatical thinking is perhaps the newest kid on the methodological block, though the idea of a grammar is quite old. I take linguistics as my core example, where I have in mind the formal and quasi-formal approach to linguistic phenomena that arose after World War II in the work of Chomsky and associates, computational linguists, and linguists of other schools. As it developed, the grammatical approach extended to a wide range of perceptual, cognitive, motor, and behavioral phenomena. Some of the most interesting work of this kind involves the use of computer models.

Work in digital humanities has brought experimental methods to bear on phenomena which had previously been almost exclusively interpretive. Grammatical approaches are still rare within the humanities, and that includes most forms of cognitive literary criticism.

Ideally undergraduates majoring in any of the human sciences would be acquainted with all three approaches, though they would concentrate in only one or two. When such students then go on to doctoral work they would bring that breadth of acquaintance with them. Frankly, I think dissertations should employ two out of the three approaches. I note that much current work in digital humanities involves both interpretive and experimental approaches.

[1] William L. Benzon, Policy, Strategy, Tactics: Intellectual Integration in the Human Sciences, an Approach for a New Era, Working Paper, https://www.academia.edu/8722681/Policy_Strategy_Tactics_Intellectual_Integration_in_the_Human_Sciences_an_Approach_for_a_New_Era