Our new paper generalizing the chain, circle and graph of thought prompting strategies--that unleashes the hidden power of LLMs (and graduate students). Hope @_akhaliq picks it up.. 🤞 pic.twitter.com/1iaMX4KwuQ

— Subbarao Kambhampati (కంభంపాటి సుబ్బారావు) (@rao2z) May 20, 2023


Wednesday, January 31, 2024

Forest of Jumbled Thoughts Prompting

A short note on LLMs and speech

The linguistic capacity of a large language model (LLM) such as ChatGPT is remarkable. However, we should remember that it is also NOT characteristically human. Well, of course not; it’s a computer. But that’s not what I have in mind.

What I’m thinking is that human language is, first of all, speech, and speech is interactive. LLMs are, at best, weakly interactive, though one can “converse” with them in short strings.

It is rare for a person to deliver a long string of spoken words. What do I mean by long? I don’t know. But I’m guessing that if we examined a large corpus of spoken language gathered in natural settings, we’d find relatively few utterances over 100 words long, or even 50 words long. Storytellers will deliver long stretches of uninterrupted speech, but they work at it. It’s not something that comes ‘naturally’ in the course of speaking with others. Learning to do it requires System 2 thinking, though the actual oral delivery of a story is likely to be confined to System 1.
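The guess about spoken utterance lengths could be checked directly. Here is a minimal sketch of the tally, assuming a corpus already transcribed into a list of utterance strings and assuming that splitting on whitespace is an adequate word count. The function name `share_over` and the sample utterances are hypothetical placeholders, not data from any real corpus.

```python
def share_over(utterances: list[str], threshold: int) -> float:
    """Fraction of utterances whose whitespace-delimited word count exceeds threshold."""
    if not utterances:
        return 0.0
    long_ones = sum(1 for u in utterances if len(u.split()) > threshold)
    return long_ones / len(utterances)

# Hypothetical placeholder utterances, standing in for a real transcribed corpus.
sample = [
    "Pass the salt, please.",
    "Did you see where I left my keys?",
    "Yeah.",
    " ".join(["word"] * 60),  # one artificially long 60-word utterance
]

for n in (50, 100):
    print(f"over {n} words: {share_over(sample, n):.2%}")
```

Pointed at a real corpus of naturally occurring speech, the printed shares would say how rare 50-word and 100-word utterances actually are.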

Humans do produce long strings of words, but that’s most likely during writing. And writing is not “natural.” One must deliberately learn the writing system in a way that’s quite different from acquiring a first language, and then one must learn to produce texts that are both relatively long, over 500 or 1000 words, and coherent. Thus the fact that LLMs can produce 200, 300, 500 or more words at a stretch is quite unusual. And this is all done in some approximation to System 1 mode.

Tuesday, January 30, 2024

Multi-cellular life is 1.6 billion years old

A new study describing a microscopic, algalike fossil dating back more than 1.6 billion years supports the idea that one of the hallmarks of the complex life we see around us—multicellularity— is much older than previously thought. https://t.co/WWGwaWc8Ww @NewsfromScience

— Science Magazine (@ScienceMagazine) January 30, 2024

The Primal Scene of Artificial Intelligence

I've grabbed this from Cosma Shalizi:

Herbert Simon recalls running Logic Theorist for the first time:

Al [Newell] and I wrote out the rules for the components of the program (subroutines) in English on index cards, and also made up cards for the contents of the memories (the axioms of logic). At the GSIA [= Graduate School of Industrial Administration] building on a dark winter evening in January 1956, we assembled my wife and three children together with some graduate students. To each member of the group, we gave one of the cards, so that each person became, in effect, a component of the LT computer program --- a subroutine that performed some special function, or a component of its memory. It was the task of each participant to execute his or her subroutine, or to provide the contents of his or her memory, whenever called by the routine at the next level above that was then in control. So we were able to simulate the behavior of LT with a computer constructed of human components. Here was nature imitating art imitating nature. The actors were no more responsible for what they were doing than the slave boy in Plato's Meno, but they were successful in proving the theorems given them. Our children were then nine, eleven, and thirteen. The occasion remains vivid in their memories.

(Models of My Life, ch. 13, pp. 206--207 of the 1996 MIT Press edition.)

Cognitive Foundations of Fictional Stories & Their Role in Developing the Self

Dubourg, Edgar, Valentin Thouzeau, Beuchot Thomas, Constant Bonard, Pascal Boyer, Mathias Clasen, Melusine Boon-Falleur, et al. 2024. “The Cognitive Foundations of Fictional Stories.” OSF Preprints. January 26. doi:10.31219/osf.io/me6bz.

Abstract: We hypothesize that fictional stories are highly successful in human cultures partly because they activate evolved cognitive mechanisms, for instance for finding mates (e.g., in romance fiction), exploring the world (e.g., in adventure and speculative fiction), or avoiding predators (e.g., in horror fiction). In this paper, we put forward a comprehensive framework to study fiction through this evolutionary lens. The primary goal of this framework is to carve fictional stories at their cognitive joints using an evolutionary framework. Reviewing a wide range of adaptive variations in human psychology–in personality and developmental psychology, behavioral ecology, and evolutionary biology, among other disciplines –, this framework also addresses the question of interindividual differences in preferences for different features in fictional stories. It generates a wide range of predictions about the patterns of combinations of such features, according to the patterns of variations in the mechanisms triggered by fictional stories. As a result of a highly collaborative effort, we present a comprehensive review of evolved cognitive mechanisms that fictional stories activate. To generate this review, we (1) listed more than 70 adaptive challenges humans faced in the course of their evolution, (2) identified the adaptive psychological mechanisms that evolved in response to such challenges, (3) specified four sources of adaptive variability for the sensitivity of each mechanism (i.e., personality traits, sex, age, and ecological conditions), and (4) linked these mechanisms to the story features that trigger them. This comprehensive framework lays the ground for a theory-driven research program for the study of fictional stories, their content, distribution, structure, and cultural evolution.

You might want to augment and supplement that with a look at an old paper of mine in which I address the development of narrative forms in relation to the structuring of the self, The Evolution of Narrative and the Self (1993).

Abstract: Narratives bring a range of disparate behavioral modes before the conscious self. Preliterate narratives consist of a loose string of episodes where each episode, or small group of episodes, displays a single mode. With literacy comes the ability to construct long narratives in which the episodes are tightly structured so as to exhibit a character's essential nature. Complex strands of episodes are woven together into a single narrative, with flashbacks being common. The emergence of the novel makes it possible to depict personal growth and change. Intimacy, a private sphere of sociality, emerges as both a mode of experience depicted within novels and as a mode in which people read novels. The novelist constructs a narrator to structure experience for reorganization.

Monday, January 29, 2024

An Israeli Looks at Gaza | Robert Wright & Russ Roberts

1:51 How Israelis think about civilian casualties in Gaza

20:47 Is the war spawning a new generation of terrorists?

34:39 Explaining versus justifying bad behavior

49:39 Can Hamas and other extremist groups be moderated?

1:03:12 Is the world biased against Israel?

1:14:45 Cognitive biases that inflame tribal tensions

1:26:09 The “river to the sea” Rorschach test

1:41:03 On the precipice of World War III?

In listening to this conversation I was reminded of a recent post, Jews and the historical imaginary of half the world’s peoples, where I was talking about the fact that Jews were banned from England between 1290 and the middle of the 17th century and yet anti-Semitism flourished in that period.

The fact that there were no Jews in Shakespeare’s England was thus no impediment to writing a play about a Jew, one who was a moneylender. Shakespeare had the cultural imaginary of Jews to draw upon and, in so doing, he gave it new force and energy. His moneylender was named “Shylock,” a name which has since served as a token and touchstone for usury.

Jews began returning to England in the middle of the seventeenth century, during the rule of Oliver Cromwell.

As for the Jews in the cultural imaginary, that exploded in Germany and Austria in the middle of the 20th century. Those reverberations remain with us to this day.

Arithmetic in Early Modern England

Jay Hancock reviews Jessica Marie Otis, By the Numbers: Numeracy, Religion, and the Quantitative Transformation of Early Modern England (Oxford UP 2024) (H/t Tyler Cowen). The opening paragraphs of the review:

Steam engine entrepreneur James Watt, as responsible as anybody for upgrading the world from poor to rich, left a notebook of his work. Squiggly symbols such as “5” and “2” mark the pages. Without these little glyphs, borrowed by Europeans from medieval Arabs, Watt would not have been able to determine cylinder volumes, pressure forces, and heat-transfer rates. Isaac Newton would’ve struggled to find that gravity is inversely proportional to the square of a planet’s distance from the sun. Calculations for Antoine Lavoisier’s chemistry, Abraham de Moivre’s probability tables, and the Bank of England’s bookkeeping would have been difficult or impossible.

But before Hindu-Arabic numerals could fuel the Enlightenment and the Industrial Revolution, society had to start to think quantitatively. Jessica Marie Otis’ By the Numbers is about scribes starting to write 7 instead of VII, parish clerks counting plague deaths rather than guessing, and gamblers calculating instead of hoping and praying.

Why did some countries become wealthy after 1800? Historians argue about the relative influences of religion, climate, geography, slavery, colonialism, legal systems, and natural resources. But the key, famously shown by economist Robert Solow, who died in December, is technological innovation enabling more and more goods and services to be produced per worker and unit of capital. Innovation needs research, development, and engineering. All those require numbers and numeracy.

This is consistent with the argument that David Hays and I made in The Evolution of Cognition (1990):

The role which speech plays in Rank 1 thought, and writing plays in Rank 2 thought, is taken by calculation in Rank 3 thought (cf. Havelock 1982: 341 ff.). Writing appears in Rank 1 cultures and proves to be a medium for Rank 2 thinking. Calculation in a strict sense appears in Rank 2 and proves to be a medium for Rank 3 thinking. Rank 2 thinkers developed a perspicuous notation and algorithms. It remained for Rank 3 thinkers to exploit calculational algorithms effectively. An algorithm is a procedure for computation which is explicit in the sense that all of its steps are specified and effective in the sense that the procedure will produce the correct answer. The procedures of arithmetic calculation which we teach in elementary school are algorithms.

The algorithms of arithmetic were collected by Abu Ja'far Mohammed ibn Musa al-Khowarizmi around 825 AD in his treatise Kitab al jabr w'al-muqabala (Penrose 1989). They received an effective European exposition in Leonardo Fibonacci's 1202 work, Algebra et almuchabala (Ball 1908). It is easy enough to see that algorithms were important in the eventual emergence of science, with all the calculations so required. But they are important on another score. For algorithms are the first purely informatic procedures which had been fully codified. Writing focused attention on language, but it never fully revealed the processes of language (we’re still working on that). A thinker contemplating an algorithm can see the complete computational process, fully revealed.
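The elementary-school procedures the passage mentions can be written out so that every step is visible. Below is a minimal sketch in Python of column addition with carrying; the function name `column_add` and the digit-string representation are illustrative assumptions, not drawn from the 1990 paper.

```python
def column_add(a: str, b: str) -> str:
    """Add two non-negative integers given as decimal digit strings,
    the way it is taught in elementary school: right to left, carrying."""
    width = max(len(a), len(b))
    a, b = a.zfill(width), b.zfill(width)  # line up the columns
    carry = 0
    digits = []
    for da, db in zip(reversed(a), reversed(b)):  # rightmost column first
        column_sum = int(da) + int(db) + carry
        digits.append(str(column_sum % 10))  # write down the units digit
        carry = column_sum // 10              # carry the tens digit
    if carry:
        digits.append(str(carry))
    return "".join(reversed(digits))

print(column_add("825", "1202"))  # 2027
```

The procedure is explicit, since each step is spelled out, and effective, since for any pair of digit strings it terminates with the correct sum: the two senses of "algorithm" the passage defines.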

To be clear, what Hays and I argued goes against a widely held view of the matter, perhaps the standard view, which credits the invention of the printing press with the catalytic role. While the printing press was enormously important, its importance was in facilitating the spread of ideas. As instruments of thought, mechanically printed books offered no affordances that hand-copied books didn't have. But arithmetic, that's a cognitive technology and, as such, can have a direct influence on thought.

Hays and I then go on to discuss the effect of "crossing" algorithmic calculation with the development of mechanisms:

The world of classical antiquity was altogether static. The glories of Greece were Platonic ideals and Euclidean geometry, Phidias's sculptures and marble temples. Although Mediterranean antiquity knew the wheel, it did not know mechanism. Water mills were tried, but not much used. Hero of Alexandria invented toys large and small with moving parts, but nothing practical came of them. Historians generally assert that the ancients did not need mechanism because they had surplus labor, but it seems to us more credible to say that they did not exploit mechanisms because their culture did not tolerate the idea. With the little Renaissance, the first machine with two co-ordinated motions, a sawmill that both pushed the log and turned the saw blade, turned up (White 1978: 80). Was it something in Germanic culture, or the effect of bringing together the cultures of Greece and Rome, of Islam and the East, that brought a sense of mechanism? We hope to learn more about this question, but for the moment we have to leave it unanswered.

What we can see is that generalizations of the idea of mechanism would be fruitful for technology (and they were), but that it would take an abstraction to produce a new view of nature. The algorithm can be understood in just this way. If its originators in India disregarded mechanism, and the north European developers of mechanism lacked the abstraction, it would only be the accidental propinquity of the two that generated a result. Put the abstract version together in one culture with a host of concrete examples, and by metaphor lay out the idea of the universe as a great machine. What is characteristic of machines is their temporality; a static machine is not a machine at all. And, with that, further add the co-ordination of motions as in the sawmill. Galileo discovered that force alters acceleration, not velocity (a discovery about temporality) and during the next few centuries mechanical clocks were made successfully. The notion of a clockwork universe spread across Europe (note that the Chinese had clockworks in the 11th Century, but never developed the notion of a clockwork universe, cf. Needham 1981). For any machine, it is possible to make functional diagrams and describe the relative motions of the parts; and the theories of classical science can be understood as functional diagrams of nature, with descriptions of the relative motions of the parts.

Sunday, January 28, 2024

THC harms human parasites

Why do essentially all adults use "recreational" drugs on a near daily basis? The standard story is that they hijack dopaminergic reward circuits. My colleagues & I are intrigued, however, that all such drugs harm human parasites. Two recent examples (1/2)https://t.co/9u6g5wEa5C pic.twitter.com/1tnWqvVduq

— Ed Hagen (@ed_hagen) January 28, 2024

Patrick Henry Winston, “The Next 50 years: A Personal View”

Winston, Patrick Henry. “The Next 50 years: A Personal View.” Biologically Inspired Cognitive Architectures 1 (July 2012): 92–99. © 2012 Elsevier B.V.

Abstract: I review history, starting with Turing’s seminal paper, reaching back ultimately to when our species started to outperform other primates, searching for the questions that will help us develop a computational account of human intelligence. I answer that the right questions are: What’s different between us and the other primates and what’s the same. I answer the what’s different question by saying that we became symbolic in a way that enabled story understanding, directed perception, and easy communication, and other species did not. I argue against Turing’s reasoning-centered suggestions, offering that reasoning is just a special case of story understanding. I answer the what’s the same question by noting that our brains are largely engineered in the same exotic way, with information flowing in all directions at once. By way of example, I illustrate how these answers can influence a research program, describing the Genesis system, a system that works with short summaries of stories, provided in English, together with low-level common-sense rules and higher-level concept patterns, likewise expressed in English. Genesis answers questions, notes abstract concepts such as revenge, tells stories in a listener-aware way, and fills in story gaps using precedents. I conclude by suggesting, optimistically, that a genuine computational theory of human intelligence will emerge in the next 50 years if we stick to the right, biologically inspired questions, and work toward biologically informed models.

Winston is "old school" symbolic AI and, as such, is dismissive of neural nets. His view of the matter was reasonable until 2012, the year deep learning took off when AlexNet won the ImageNet Challenge. Note that that's the year Winston published this article. However, if it turns out that symbolic techniques will be necessary to reach AGI (whatever that is), then this article won't appear quite so wrong-headed in its dismissal of neural nets. OTOH, note that he views his approach as being biologically inspired. And I don't think his emphasis on narrative or concept patterns is mistaken. Arguably, though, it's not until GPT-2 or GPT-3 that neural nets could deal with those things.

Saturday, January 27, 2024

Synaptic pruning visualized

Real #FluorescenceFriday this time, with a fresh photo from the microscopy showing one of my favorite events in the brain: synaptic pruning! Microglia (🟣Iba1) strengthening circuits and removing redundant synapses from neurons (🔵map2): underdo it during development, and you… pic.twitter.com/OnyxvDOJN4

— Danielle Beckman (@DaniBeckman) January 27, 2024

Implant able to record single-neuron activity of neural populations over long periods of time

Leah Burrows, Researchers develop implantable device that can record a collection of individual neurons over months, PhysOrg, Jan. 26, 2024.

Recording the activity of large populations of single neurons in the brain over long periods of time is crucial to further our understanding of neural circuits, to enable novel medical device-based therapies and, in the future, for brain–computer interfaces requiring high-resolution electrophysiological information. But today there is a tradeoff between how much high-resolution information an implanted device can measure and how long it can maintain recording or stimulation performances. Rigid silicon implants with many sensors can collect a lot of information but can’t stay in the body for very long. Flexible, smaller devices are less intrusive and can last longer in the brain but only provide a fraction of the available neural information.

Recently, an interdisciplinary team of researchers from the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS), in collaboration with The University of Texas at Austin, MIT and Axoft, Inc., developed a soft implantable device with dozens of sensors that can record single-neuron activity in the brain stably for months. The research was published in Nature Nanotechnology.

“We have developed brain–electronics interfaces with single-cell resolution that are more biologically compliant than traditional materials,” said Paul Le Floch, first author of the paper and former graduate student in the lab of Jia Liu, Assistant Professor of Bioengineering at SEAS. “This work has the potential to revolutionize the design of bioelectronics for neural recording and stimulation, and for brain–computer interfaces.”

H/t Azra Raza 3QD.

Original research article: Le Floch, P., Zhao, S., Liu, R. et al. 3D spatiotemporally scalable in vivo neural probes based on fluorinated elastomers. Nat. Nanotechnol. (2023). https://doi.org/10.1038/s41565-023-01545-6

Abstract: Electronic devices for recording neural activity in the nervous system need to be scalable across large spatial and temporal scales while also providing millisecond and single-cell spatiotemporal resolution. However, existing high-resolution neural recording devices cannot achieve simultaneous scalability on both spatial and temporal levels due to a trade-off between sensor density and mechanical flexibility. Here we introduce a three-dimensional (3D) stacking implantable electronic platform, based on perfluorinated dielectric elastomers and tissue-level soft multilayer electrodes, that enables spatiotemporally scalable single-cell neural electrophysiology in the nervous system. Our elastomers exhibit stable dielectric performance for over a year in physiological solutions and are 10,000 times softer than conventional plastic dielectrics. By leveraging these unique characteristics we develop the packaging of lithographed nanometre-thick electrode arrays in a 3D configuration with a cross-sectional density of 7.6 electrodes per 100 µm². The resulting 3D integrated multilayer soft electrode array retains tissue-level flexibility, reducing chronic immune responses in mouse neural tissues, and demonstrates the ability to reliably track electrical activity in the mouse brain or spinal cord over months without disrupting animal behaviour.
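For a sense of scale, the abstract's density figure can be restated per square millimetre by simple unit arithmetic, using 1 mm² = 10⁶ µm² (this conversion is not stated in the abstract itself):

```latex
\frac{7.6\ \text{electrodes}}{100\ \mu\text{m}^2}
  = 0.076\ \frac{\text{electrodes}}{\mu\text{m}^2}
  = 0.076 \times 10^{6}\ \frac{\text{electrodes}}{\text{mm}^2}
  = 7.6 \times 10^{4}\ \frac{\text{electrodes}}{\text{mm}^2}
```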

Friday, January 26, 2024

We're witnessing the recurrence of apocalyptic fears [elite panics]

Tyler Austin Harper, The 100-Year Extinction Panic Is Back, Right on Schedule, NYTimes, Jan. 26, 2024.

Climate anxiety, of the sort expressed by that student, is driving new fields in psychology, experimental therapies and debates about what a recent New Yorker article called “the morality of having kids in a burning, drowning world.” Our public health infrastructure groans under the weight of a lingering pandemic while we are told to expect worse contagions to come. The near coup at OpenAI, which resulted at least in part from a dispute about whether artificial intelligence could soon threaten humanity with extinction, is only the latest example of our ballooning angst about technology overtaking us.

Meanwhile, some experts are warning of imminent population collapse. Elon Musk, who donated $10 million to researchers studying fertility and population decline, called it “a much bigger risk to civilization than global warming.” Politicians on both sides of the aisle speak openly about the possibility that conflicts in Ukraine and the Middle East could spark World War III. [...]

In a certain sense, none of this is new. Apocalyptic anxieties are a mainstay of human culture. But they are not a constant. In response to rapid changes in science, technology and geopolitics, they tend to spike into brief but intense extinction panics — periods of acute pessimism about humanity’s future — before quieting again as those developments are metabolized. These days, it can feel as though the existential challenges humanity faces are unprecedented. But a major extinction panic happened 100 years ago, and the similarities are unnerving.

The 1920s were also a period when the public — traumatized by a recent pandemic, a devastating world war and startling technological developments — was gripped by the conviction that humanity might soon shuffle off this mortal coil.

Understanding the extinction panic of the 1920s is useful to understanding our tumultuous 2020s and the gloomy mood that pervades the decade.

Back in the day:

Contrary to the folk wisdom that insists the years immediately after World War I were a period of good times and exuberance, dark clouds often hung over the 1920s. The dread of impending disaster — from another world war, the supposed corruption of racial purity and the prospect of automated labor — saturated the period just as much as the bacchanals and black market booze for which it is infamous. The ’20s were indeed roaring, but they were also reeling. And the figures articulating the doom were far from fringe.

On Oct. 30, 1924 — top hat in hand, sporting the dour, bulldog grimace for which he was well known — Winston Churchill stood on a spartan stage, peering over the shoulder of a man holding a newspaper that announced Churchill’s return to Parliament. [...]

Bluntly titled “Shall We All Commit Suicide?,” the essay offered a dismal appraisal of humanity’s prospects. “Certain somber facts emerge solid, inexorable, like the shapes of mountains from drifting mist,” Churchill wrote. “Mankind has never been in this position before. Without having improved appreciably in virtue or enjoying wiser guidance, it has got into its hands for the first time the tools by which it can unfailingly accomplish its own extermination.”

In an eerie foreshadowing of atomic weapons, he went on to ask, “Might not a bomb no bigger than an orange be found to possess a secret power to destroy a whole block of buildings — nay, to concentrate the force of a thousand tons of cordite and blast a township at a stroke?” He concluded the essay by asserting that the war that had just consumed Europe might be “but a pale preliminary” of the horrors to come. [...]

Around the same time that Churchill foretold the coming of “means of destruction incalculable in their effects,” the science fiction novelist H.G. Wells, who in his era was also famous for socialist political commentary, expressed the same doleful outlook. [...]

“Are not we and they and all the race still just as much adrift in the current of circumstances as we were before 1914?” he wondered. Wells predicted that our inability to learn from the mistakes of the Great War would “carry our race on surely and inexorably to fresh wars, to shortages, hunger, miseries and social debacles, at last either to complete extinction or to a degradation beyond our present understanding.” Humanity, the don of sci-fi correctly surmised, was rushing headlong into a “scientific war” that would “make the biggest bombs of 1918 seem like little crackers.”

The pathbreaking biologist J.B.S. Haldane, another socialist, concurred with Wells’s view of warfare’s ultimate destination. [...]

The Czech playwright Karel Capek’s 1920 drama, “R.U.R.,” imagined a future in which artificially intelligent robots wiped out humanity. In a scene that would strike fear into the hearts of Silicon Valley doomers, a character in the play observes: “They’ve ceased to be machines. They’re already aware of their superiority, and they hate us as they hate everything human.”

Elite panics:

One way to understand extinction panics is as elite panics: fears created and curated by social, political and economic movers and shakers during times of uncertainty and social transition. Extinction panics are, in both the literal and the vernacular senses, reactionary, animated by the elite’s anxiety about maintaining its privilege in the midst of societal change. Today it’s politicians, executives and technologists. A century ago it was eugenicists and right-leaning politicians like Churchill and socialist scientists like Haldane. That ideologically varied constellation of prominent figures shared a basic diagnosis of humanity and its prospects: that our species is fundamentally vicious and selfish and our destiny therefore bends inexorably toward self-destruction. [...]

Extinction panics are often fomented by elites, but that doesn’t mean we have to defer to elites for our solutions. We have gotten into the dangerous habit of outsourcing big issues — space exploration, clean energy, A.I. and the like — to private businesses and billionaires. Our survival may well depend on reversing this trend. We need ambitious, well-resourced government initiatives and international cooperation that takes A.I. and other existential risks seriously. It’s time we started treating these issues as urgent public priorities and funding them accordingly.

The first step is refusing to indulge in certainty, the fiction that the future is foretold. There is a perverse comfort to dystopian thinking. The conviction that catastrophe is baked in relieves us of the moral obligation to act. But as the extinction panic of the 1920s shows us, action is possible, and these panics can recede.

There's more at the link.

Invariance and compression in LLMs

One way of thinking about what transformers do is in terms of compression. OK. The transformer performs a simple operation on a corpus of texts in such a way that some property of the corpus is preserved in the model. What’s kept invariant between the training corpus and the compressed model?

I think it must be the relationships between concepts. Note that in specifying relationships I mean explicitly to differentiate them from meaning. The process of thinking about LLMs has brought me to think of meaning in the following way:

- Meaning has two major components, intention and semanticity.

- Semanticity has two components, relationality and adhesion.*

Intention resides in the relationship between the speaker and the listener and is not always derivable directly from the semantics (semanticity) of the utterance. Intention in this sense is outside the scope of LLMs. And, of course, there are those who believe that without intention there is no meaning. That’s a respectable philosophical position, but it leaves you helpless to understand what LLMs are doing.

By adhesion I mean whatever it is that links a concept to the world. There are lots of concepts which are defined more or less directly in terms of physical things. That’s not going to be captured in LLMs. Of course, we now have LLMs linked to vision models, so the adhesion aspect of semantics is being picked up. In the universe of concrete concepts we still have relationships between those concepts, and those relationships can be captured in language without directly involving the adhesions of those concepts. That apples and oranges are both fruits is a matter of relationships between those three concepts and doesn’t require access to the adhesions of apples and oranges. And so forth and so on for a large number of concepts. Then we have abstract concepts, which can be defined entirely through patterns of other concepts, which may be concrete, abstract, or both.
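The apples-and-oranges point can be illustrated with a toy relational store in which concepts are bare labels with no link to the world, so whatever the program "knows" comes entirely from relations between labels. The triples, the single relation name `is_a`, and the function names are invented for this illustration; this is not a claim about how an LLM stores anything.

```python
# Concepts are bare strings: no sensory or physical "adhesion" at all.
RELATIONS = {
    ("apple", "is_a", "fruit"),
    ("orange", "is_a", "fruit"),
    ("fruit", "is_a", "food"),
}

def categories(concept: str) -> set[str]:
    """Everything a concept falls under, following is_a links transitively."""
    found: set[str] = set()
    frontier = [concept]
    while frontier:
        current = frontier.pop()
        for subj, rel, obj in RELATIONS:
            if subj == current and rel == "is_a" and obj not in found:
                found.add(obj)
                frontier.append(obj)
    return found

def share_category(a: str, b: str) -> set[str]:
    """Categories two concepts have in common, derived purely from relations."""
    return categories(a) & categories(b)

print(sorted(share_category("apple", "orange")))  # ['food', 'fruit']
```

Nothing in the program knows what an apple looks or tastes like, yet it can conclude that apples and oranges are both fruit.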

So, relationality. The mechanisms of syntax are designed to map multi-dimensional relationality onto a one-dimensional string. But syntax only governs relationships between items within a sentence. And even that description is too simple, because sentences can consist of more than one clause. The relationship between independent clauses within a sentence is different from that between a dependent clause and the clause on which it depends. Etc. It’s complicated. And then we have the relationship between paragraphs, and so forth.
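One way to picture the job syntax does: a structure of relations, here a small tree of heads and their dependents, has to be laid out as a single sequence of words. The tree is a hypothetical, hand-built analysis of one sentence, and the left-head-right walk is just one simple linearization scheme, not a model of any particular grammar.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    word: str
    left: list["Node"] = field(default_factory=list)   # dependents spoken before the head
    right: list["Node"] = field(default_factory=list)  # dependents spoken after the head

def linearize(node: Node) -> list[str]:
    """Flatten a head-dependent tree into a one-dimensional word string."""
    words: list[str] = []
    for dep in node.left:
        words.extend(linearize(dep))
    words.append(node.word)
    for dep in node.right:
        words.extend(linearize(dep))
    return words

# Hypothetical analysis of "the old dog chased a cat".
tree = Node("chased",
            left=[Node("dog", left=[Node("the"), Node("old")])],
            right=[Node("cat", left=[Node("a")])])

print(" ".join(linearize(tree)))  # the old dog chased a cat
```

The resulting string keeps the tree's relations only implicitly, in word order; a listener (or a parser) has to reconstruct the structure from the sequence.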

What I’m attempting to do is figure out a way of thinking about the dimensionality of the semantic system. More or less on general principle, one would like to know how to estimate that. Now, when I talk about the semantic system, I mean the semanticity of words. But transformers must deal with texts, and texts consist of sentences and paragraphs and so forth. Setting metaphorical structures aside, the meaning of a sentence is a composition over the meanings of the words in the sentence. But, as I understand it, a transformer is perfectly capable of relating the meaning of a sentence to a single point in its space. And it can do that with larger strings as well. And, of course, the ordinary mechanisms of language allow us to use a string to define a single word; that’s how abstract definition works.
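A minimal sketch of what relating a sentence to a single point can look like, using mean pooling over word vectors. The three-dimensional vectors are made up for illustration, and averaging fixed word vectors is a simple stand-in for the contextual representations a transformer actually computes through its attention layers.

```python
import math

# Hypothetical 3-dimensional word vectors, invented for illustration only.
WORD_VECTORS = {
    "apples":  [0.9, 0.1, 0.0],
    "oranges": [0.8, 0.2, 0.1],
    "are":     [0.0, 0.0, 0.1],
    "fruit":   [0.7, 0.3, 0.0],
    "engines": [0.0, 0.9, 0.8],
    "roar":    [0.1, 0.8, 0.9],
}

def sentence_point(sentence: str) -> list[float]:
    """Collapse a string of any length to one point: the average of its word vectors."""
    vectors = [WORD_VECTORS[w] for w in sentence.lower().split()]
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]

def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two points in the space."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

s1 = sentence_point("apples are fruit")
s2 = sentence_point("oranges are fruit")
s3 = sentence_point("engines roar")
print(cosine(s1, s2) > cosine(s1, s3))  # True
```

The same function accepts a paragraph as readily as a sentence, which echoes the point that larger strings can also be mapped to single points.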

And that’s as far as I’m going to attempt to take this train of thought. Still, I do think we need to recognize a distinction between what’s happening within sentences (the domain of syntax), and what happens with collections of sentences. Beyond that, it seems to me that where we want to end up eventually is a way of thinking about the relationship between the dimensionality of our semantic space and the size of the corpus needed to resolve the invariant relations in that space.

More later.

*Note: The current literature recognizes a distinction between inferential and referential processing, due, I believe, to Diego Marconi, The neural substrates of inferential and referential semantic processing (2011). The functional significance is similar, but only similar, to my distinction between relationality and adhesion. Inferential processing depends on the relational structure of texts. Adhesion is about the physical properties of the world, affordances in J.J. Gibson’s terminology, that are used to establish referential meaning for concrete concepts. But it is also about the patterns of relationships through which the meaning of abstract concepts is established.

Thursday, January 25, 2024

Carl Barks is the Duck Master

From the YouTube page:

Carl Barks Reading Guide

While all of Barks’ work has merit, the peak period is generally considered to run from the late ’40s through the early ’50s. The key volumes are linked here. The first two are currently out of print, but still available digitally. There’s no continuity, so no need to read in order anyway.

Vol. 7: Lost in the Andes - https://amzn.to/427DEkS

Vol. 8: Trail of the Unicorn - https://amzn.to/47GQebY

Vol. 9: The Pixilated Parrot - https://amzn.to/427y6qt

Vol. 10: Terror of the Beagle Boys - https://amzn.to/48RgBwS

Vol. 11: A Christmas for Shacktown - https://amzn.to/3tQEd65

Vol. 12: Only a Poor Old Man - https://amzn.to/48WUaGz

Vol. 13: Trick or Treat - https://amzn.to/493FC8f

Vol. 14: The Seven Cities of Gold - https://amzn.to/4239hvZ

One word of warning: many of the books feature racist depictions of non-white characters and a lot of humor based on national origin, and in general they reflect an imperialist worldview. Not all of the stories do, of course, but they are scattered among the volumes, and Fantagraphics have chosen not to censor anything, as previous editions did.

Other Sources:

Carl Barks Conversations - https://amzn.to/3O9VVZc

Funnybooks - https://amzn.to/47Q7uM8

The Osamu Tezuka Story: A Life in Manga and Anime - https://amzn.to/48EQ8Tz

Floyd Gottfredson’s Mickey Mouse Strips: https://amzn.to/3U5Eqg8

FULL SOURCES, REFERENCES & NOTES: https://pastebin.com/aUFrEsM0

A young composer considers his craft

There's a definite order to what young Gavriil does here. He starts with some not-quite-random plinking.

He (obviously) hears something and sets out to explore it at 0:37, where he plays a repeated note in the left hand, adds a note with the right (0:43), and then opens it up, adding more notes with both hands (0:48).

Pause.

Repeat the sequence (0:56), starting with a higher note.

Repeat (1:24), quickly.

Repeat (1:33), moving higher, with some variations thrown in (1:36).

Repeat (1:47), moving higher (notice how he shifts position on the bench, 1:49) – follow his eyes.

Repeat (2:03). Notice how he lays into that first note (even dropping his right arm to the side), then adds the right hand, filling it out and increasing the volume. The repetition slows, becomes more insistent...

Pause, both hands raised (2:37).

Repeat (2:38), really laying it on with the left, adding the right (2:43).

He's thinking (2:51), and now begins developing some new material.

That's the really significant thing: he introduces some new material after having worked through one basic idea seven times (by my count). I leave it as an exercise for the reader to attend to how he deals with this new material, which seems a bit more concerned with what the two hands are doing at the same time.

After a bit he pauses and thinks at 3:35, and then seems to be figuring out whether or not he's done (3:45). Ah, not quite. Last note at about 4:00. But he keeps working his arms and hands without sounding notes, moving them back from the keyboard at 4:10, down to his lap (4:15), then back up. At 4:24, hearing his mother (I assume), he's done and moves away.

Is this how young Johann Sebastian and young Wolfgang got started?

Addendum: From a note to a friend:

Let me offer some observations about young Gavriil. At 2 years and 3 months he’s got problems with fine motor control which have to do with the immaturity of his nervous system. Differentiated control of his fingers is tough. I rewatched half the video, focusing on his fingers. He does most of the work with the index and middle fingers of both hands. Ring and pinky are curled under and not used. I saw him use the right thumb twice. I can see how small hands make it difficult to use ring and pinky, but not thumb. This is mostly about motor control, not physical reach. For that matter, why not thumb and pinky to span a fourth, perhaps a fifth? Will he be using thumb and pinky alone anytime soon? That’ll be interesting to watch.

Now, what’s he hearing? You have no trouble hearing those neo-soul chords. Your problem is controlling the hands and fingers to produce them. I’m guessing that young Gavriil is still learning to hear and to organize those sounds. Notice that he does avoid hitting two adjacent keys. He’s figured out that much. Has he gotten as far as a triad, which he could easily produce across two hands? I suspect not. I’d like to have a graduate student who could notate everything he’s doing, which fingers and keys, etc. Better yet, sit him at an electronic keyboard that records every motion. He may well be playing triads in some inversion, but it’s probably hit or miss and he has no sense of harmonic progression. What he’s controlling seems to me to be rhythmic speed and density, pitch density, movement up or down the keyboard, and volume. Which is quite a bit.

On differences in criminal codes across red and blue states

Tyler Cowen quoted much, but not all, of the abstract for "Red Codes, Blue Codes? Factors Influencing the Formulation of Criminal Law Rules" in a recent post. Here's the full abstract:

The U.S. appears to be increasingly politically divided between “red states” and “blue states,” to the point that many serious public voices on both sides are urging that the country seriously consider separating along a red-blue divide. A range of stark public disagreements over criminal law issues have fed the secession movement. Consider obvious examples such as abortion, decriminalization of marijuana, “stand your ground” statutes, the death penalty, and concealed weapon carry laws. Are red and blue values so fundamentally different that we ought to recognize a reality in which there exist red codes and blue codes?

To answer that question, this study examined the criminal codes of the six largest deep red states and the six largest deep blue states – states in which a single political party has held the governorship and control of both legislative bodies for at least the past three elections. It then identified 93 legal issues on which there appeared to be meaningful difference among the 12 states’ criminal law rules. An analysis of the patterns of agreement and disagreement among the 12 states was striking. Of the many thousands of issues that must be settled in drafting a criminal code, only a handful – that sliver of criminal law issues that became matters of public political debate, such as those noted above – show a clear red-blue pattern of difference.

If not red-blue, then, what does explain the patterns of disagreement among the 12 states on the 93 criminal law issues? What factors have greater influence on the formulation of criminal law rules than the red-blue divide?

The Article examines a range of possible influences, giving specific examples that illustrate the operation of each: state characteristics, such as population; state criminal justice characteristics, such as crime rates; model codes, such as the ALI’s Model Penal Code; national headline events, such as the attempted assassination of President Reagan; local headline cases that over time grow into national movements, such as Tracy Thurman and domestic violence; local headline cases that produced only a local state effect; the effect of legislation passed by a neighboring state; and legislation as a response to judicial interpretation or invalidation.

In other words, not only is the red-blue divide of little effect for the vast bulk of criminal law, but the factors that do have effect are numerous and varied. The U.S. does not in fact have red codes and blue codes. More importantly, the dynamics of criminal law formulation suggest that distinctive red codes and blue codes are never likely to exist because the formulation of most criminal law rules is the product of a complex collection of influences apart from red-blue.

Now we can ask: Are there any issues other than those listed which are likely to be caught up in red-blue polarization? If so, what might they be? What is it that differentiates the polarizing issues from all the many other targets of criminal law? Why, for example, don't red and blue states differ in their treatment of, say, embezzlement, or armed robbery?

That is to say, can we characterize red-blue polarization in a way that is more useful than simply listing the issues on which they differ? Do those issues share some one or three characteristics that all those other issues do not?

Wednesday, January 24, 2024

Dan Wang on Chinese expatriates in Thailand. [Whole Earth revival]

From his 2023 letter, leaving China:

China may have hit its GDP growth target of 5 percent this year, but its main stock index has fallen 17% since the start of 2023. More perplexing were the politics. 2023 was a year of disappearing ministers, disappearing generals, disappearing entrepreneurs, disappearing economic data, and disappearing business for the firms that have counted on blistering economic growth.

No wonder that so many Chinese are now talking about rùn. Chinese youths have in recent years appropriated this word in its English meaning to express a desire to flee. For a while, rùn was a way to avoid the work culture of the big cities or the family expectations that are especially hard for Chinese women. Over the three years of zero-Covid, after the state enforced protracted lockdowns, rùn evolved to mean emigrating from China altogether.

One of the most incredible trends I’ve been watching this year is that rising numbers of Chinese nationals are being apprehended at the US-Mexico border. [...] Many Chinese are flying to Ecuador, where they have visa-free access, so that they can take the perilous road through the Darién Gap. It’s hard to know much about this group, but journalists who have spoken to these people report that they come from a mix of backgrounds and motivations.

I had not expected that so many Chinese people would be willing to embark on what is a dangerous, months-long journey to take a pass on the “China Dream” and the “great rejuvenation” that’s undertaken in their name.

The Chinese who rùn to the American border are still a tiny subset of the people who leave. Most émigrés are departing through legal means. People who can find a way to go to Europe or an Anglophone country would do so, but most are going, as best as I can tell, to three Asian countries. Those who have ambition and entrepreneurial energy are going to Singapore. Those who have money and means are going to Japan. And those who have none of these things — the slackers, the free spirits, kids who want to chill — are hanging out in Thailand.

I spent time with these young Chinese in Chiang Mai. Around a quarter of the people I chatted with have been living in Thailand for the last year or two, while the rest were just visiting, sometimes with the intention to figure out a way to stay.

Spirituality:

Some people had remote jobs. Many of the rest were practicing the intense spirituality possible in Thailand. That comes in part from all the golden-roofed temples and monasteries that make Chiang Mai such a splendid city. One can find a meditation retreat at these temples in the city or in more secluded areas in the mountains. Here, one is supposed to meditate for up to 14 hours a day, speaking only to the head monk every morning to report on the previous day’s breathing exercises and hear the next set of instructions. After meditating in silence for 20 days, one person told me that he found himself slipping in and out of hallucinogenic experiences from breath exercises alone.

The other wellspring of spiritual practice comes from the massive use of actual psychedelics, which are so easy to find in Chiang Mai. Thailand was the first country in Asia to decriminalize marijuana, and weed shops are now as common as cafés. It seems like everyone has a story about using mushrooms, ayahuasca, or even stronger magic. The best mushrooms are supposed to grow in the dung of elephants, leading to a story of a legendary group of backpackers who have been hopping from one dung heap to another, going on one long, unbroken trip.

Whole Earth Catalog:

I’ve never felt great enthusiasm for cryptocurrencies. Chatting with these young Chinese made me more accepting of their appeal. They are solutions looking for problems almost everywhere in the Western world, but they have real value for people who suffer from state controls. The crypto community in China has attracted grifters, as it has everywhere else. But it is also creating a community of people trying to envision different paths for the future.

That spirit pervades the young people in Chiang Mai. A bookseller told me that there’s a hunger for new ideas. After the slowdown in economic growth and the tightening of censorship over the past decade, people are looking for new ways to understand the world. One of the things this bookshop did is to translate a compilation of the Whole Earth Catalog, with a big quote of “the map is not the territory” in Chinese characters on the cover. That made me wonder: have we seen this movie before? These kids have embraced the California counterculture of the ‘60s. They’re doing drugs, they’re trying new technologies, and they’re sounding naively idealistic as they do so. I’m not expecting them to found any billion-dollar companies. But give it enough time, and I think they will build something more interesting than coins.

There's much more at the link. Well worth reading.

Tuesday, January 23, 2024

GOAT Literary Critics: Part 3.1, René Girard prepares the way for the French invasion

I had originally intended this essay to cover three thinkers, René Girard, Jacques Derrida, and Claude Lévi-Strauss. As I began working on it the thinking grew knotty and the prose just grew and grew. So I’ve decided to break the essay into two parts. In this part I begin by negotiating a transition from the previous article in this series, which was about how the contemporary discipline of literary criticism emerged after World War Two. I then say a little about my years at Johns Hopkins by way of introducing our three thinkers. After that comes some empirical evidence about the mid-century transition in literary criticism. I then conclude with a look at René Girard. I’ll discuss Jacques Derrida and Claude Lévi-Strauss in the next part and make some remarks about the aftermath of this mid-century Sturm und Drang.

Do we really have a discipline?

As the previous article ended, Michael Bérubé was informing us that the disciplinary regime set in place by Northrop Frye would begin unraveling a decade later. That’s what this article is about. But I want to begin by reviewing where we’ve been.

Let us start with Cowen and his interest in the greatest economists. Cowen took the disciplinary existence of economics as a given: In the beginning there was Adam Smith, and the rest followed after. He had to do a bit of tap dancing to fit John Stuart Mill into his scheme, for we generally think of Mill as a philosopher, not an economist, but that is easily done. One might, I suppose, do the same for literary criticism. The departments exist in colleges and universities; just go back as far as you can.

However, administrative continuity is one thing; intellectual continuity is another. The conceptual focus of academic literary criticism changed in the middle of the previous century. That’s what the previous article is about. Sure, the primary texts are still there, but what academics do with them has changed. There are continuities, to be sure, there always are. Think of astronomy – Lord! I hate what I’m about to do – and its Copernican revolution. The earth, moon, sun, and the other planets are the same things they were before and after the revolution, but our understanding of their relationships has changed. Something like that is what has happened to literary criticism, and the discipline almost knows it and is still thrashing about with the consequences.

Brooks & Warren, taken as a duo, are important because they focused the discipline’s attention on the texts themselves in the most concrete way possible, by gathering a bunch of them together for a reader intended for undergraduates. That in turn led the teachers of those undergraduates to think about those texts in a new way, to search for the meaning held within each text. In his Anatomy of Criticism Northrop Frye both conducted an inductive survey of the literary field and, in his polemical introduction, explained how an interpretive focus on that field constituted a proper academic discipline. And a century and a half before them, Coleridge introduced concepts that brought the literary mind into conceptual focus. That’s a discipline.

It's the focus on meaning that became problematic. For one thing it turns out that critics kept coming up with different meanings for the same texts. How can we call ourselves an academic discipline if we can’t agree on the central objects of our discipline? The problem occasioned a lot of thinking about theory and method. What’s even more problematic, in time it became conceptually difficult to separate the roles of critics and writers in the literary system, if you will. It wasn’t at all obvious that that would happen, and it took a while for awareness of the problem to come into view.

It’s in that context that we should consider the well-known symposium that took place at Johns Hopkins in the Fall of 1966: The Languages of Criticism and the Sciences of Man. Notice that phrase, “the Sciences of Man,” from the French “les Sciences de l’Homme.” The concept of the human sciences is European, not American, and covers a range of disciplines that would be divided between the humanities and social sciences in America.

In retrospect that event is recognized as a ‘tipping point’ in the course of American literary criticism. Such things do not tip in the course of four days (October 18-21). They are the culmination, in this case, of a decade of uncertainty about the conceptual nature of literary criticism. Before taking a look at that 1966 symposium, however, I want to step back and insert myself at the edges of the narrative.

A change in perspective

I wrote the previous posts in this series from the “view from nowhere” point-of-view that is the default stance for much intellectual writing. That stance really isn’t available to me for this post, which is about developments that happened early in my career, during my undergraduate years at Johns Hopkins and my graduate study in the English Department at the State University of New York at Buffalo. While I certainly did not play an active role in the events I will be describing, I was an interested, concerned, and involved bystander (see the appendix, "Skin in the game," in the next installment).

Though I never studied with René Girard, I heard him lecture in classes I took with Richard Macksey when I was an undergraduate at Johns Hopkins, and I later met with him in connection with a book collection that never came to fruition (it was to be a collection of structuralist essays on Shakespeare). The ideas of Jacques Derrida influenced me during my undergraduate years, especially “Structure, Sign, and Play in the Discourse of the Human Sciences,” the paper he delivered at the famous 1966 structuralism symposium. However, the influence of Claude Lévi-Strauss’s work on myth was to prove more intriguing, influential and enduring.

Those are the three thinkers at the center of this part of our story: René Girard, Jacques Derrida, and Claude Lévi-Strauss. All of them were French, though Girard spent most of his life in America. Jacques Derrida was frequently in residence at Yale and other schools and ended his career at the University of California at Irvine. The Nazi occupation of France caused Lévi-Strauss to leave for America in 1941; he stayed until 1947 and returned to France in 1948. None of them were primarily literary critics.

To be sure, Girard sojourned in literary studies from the late 1950s on into the 1970s. But he had trained as a historian and, by the time of that structuralism conference in the mid-1960s, he was moving through anthropology to become a grand social theorist. Derrida was a philosopher, though he often commented on literary texts. And Lévi-Strauss was an anthropologist, though he famously collaborated with Roman Jakobson, the great linguist, on an analysis of Baudelaire’s “Les Chats.”

What, then, are three non-literary critics doing in a series of posts ostensibly about the GOAT literary critics? They are influencing the course of literary criticism, that’s what. And that’s ultimately what this series of posts is about (for me). Why is literary study like it is? That the answer to that question depends critically on major thinkers outside of literary studies, that tells us something about the peculiar nature of a discipline focused on teasing out the meaning of literary texts.

Turning toward our three thinkers, Derrida arguably had more influence on literary criticism as a whole after 1970 than anyone trained as and writing primarily as a literary critic. It’s not clear to me that that is true of Lévi-Strauss, though he certainly had an influence and Derrida arguably made his bones with a famous essay about Lévi-Strauss. As for Girard, Tyler Cowen thinks he’ll go down in intellectual history as one of the major French thinkers of the last half century. Perhaps so. But that would be more in his role as a social theorist than as a literary critic. There his influence has been real, but limited. Thus he plays a somewhat different role in this story.

Note: In Appendix 1 I present some empirical evidence about the relative importance of these three thinkers.

Transition, the 1970s in literary criticism

Before discussing these three thinkers, however, I want to talk about what happened to academic literary criticism during this period in a general and empirical way. This is possible because of an important article that Andrew Goldstone and Ted Underwood published in 2014 in New Literary History: “The Quiet Transformations of Literary Studies: What Thirteen Thousand Scholars Could Tell Us”. Using topic modeling, a machine learning technique, Goldstone and Underwood analyzed complete runs of seven mainstream journals going back to the late nineteenth century.

This is not the place to explain how topic analysis is done – you’ll find an explanation in the article, or you may consult Appendix 2, where you’ll find what ChatGPT said about topic modeling. In this context “topic” is a term of art and refers to a group of words that tend to occur together in a collection of documents. It is up to the analyst to interpret the overall meaning of the topic.

This chart shows how Topic 16 evolved over time:

Here are the words most prevalent for that topic: criticism work critical theory art critics critic nature method view. The topic seems to be oriented toward method and theory and is most prevalent between roughly 1935 and 1985. The first half of that period likely reflects the rise of the so-called New Criticism, while the second half reflects the developments I’ll be examining in this post, which came to a head at the time of that 1966 structuralism conference at Johns Hopkins. I should note as well that the study of poetry was prominent during the era of the New Criticism, while disciplinary interest shifted toward the novel in the post-structuralist era. This is not covered by Goldstone and Underwood. I take it as a casual observation from personal correspondence with Franco Moretti.

Now look at Topic 20. Judging from its most prominent words, it seems weighted toward current critical usage: reading text reader read readers texts textual woolf essay Virginia.

That usage, reading as interpretation, becomes more obvious when we compare it with Topic 117, which seems to reflect a more prosaic usage, where reading is mostly just reading, not hermeneutics: text ms line reading mss other two lines first scribe. Note in particular the terms – ms line mss lines – which clearly reference a physical text.

This topic is most prominent prior to 1960 while Topic 20 rises to prominence after 1970. For what it’s worth – not much, but it’s what I can offer – that accords with my personal sense of things going back to my undergraduate years at Johns Hopkins in the later 1960s. I have vague memories of remarking (to myself) on how odd it seemed to refer to interpretative criticism as mere reading when, really, it wasn’t that at all.

Reading isn’t the only word whose meaning shifted during that period. Theory changed as well. Up into the 1960s and even the early 1970s “literary theory” was thinking about the nature of literature, as exemplified by the venerable Theory of Literature (1949), by René Wellek and Austin Warren. By the mid-1970s or so literary theory had come to refer to the use of some kind of theory about mind and/or society in the interpretation of literary texts. That is to say, it wasn’t theoretical discourse about literature. Rather it was a method for creating interpretations of texts, which are readings in the newer sense of the term. That’s where Derrida and Lévi-Strauss come in, as sources of interpretive tools, along with Marx, Freud, Adorno, Barthes, Deleuze, Foucault, and a cast of thousands. Well, I exaggerate the number, but you get the idea.

The rest of this essay, especially the second part coming up in a later post, is about those shifts.

Finally, and in view of recent events at Harvard and elsewhere, I must admit that I’ve ‘plagiarized’ from myself in what I’ve said about Topics 20 and 117. Those words come from a working paper that has a more complete discussion of this transitional period based in part on the work of Goldstone and Underwood. Here’s the paper:

Transition! The 1970s in Literary Criticism, Version 2, January 2017, https://www.academia.edu/31012802/Transition_The_1970s_in_Literary_Criticism

René Girard and Mimetic Desire

René Girard was the first professor I heard lecture when I entered Johns Hopkins in the fall of 1965. At one point during freshman orientation we were given a choice of attending one of five lectures. I attended Girard’s lecture on cultural relativism – I forget what the other four were. I remember only two things from that lecture: Girard spoke with an accent and it was the craziest damn thing I ever heard. Yes, that’s how I thought of it at the time, but this guy was a Hopkins professor, so there must be something to it.

In the spring of 1966 I took Richard Macksey’s course on the autobiographical novel. He invited Girard to lecture on mimetic desire, the central idea of his first book, published as Mensonge romantique et vérité romanesque in 1961 and translated into English as Deceit, Desire, and the Novel in 1966. Here’s a brief account of the argument by John Pistelli:

He posits, therefore, a fundamental geometric relation governing the plots of great novels: the protagonist seems to desire something (wealth, status, a lover, etc.), but in fact really desires the object through a mediator whom the protagonist wishes to emulate or usurp. Desire, then, is mimetic—in the sense of mimicry—and triangular—because it doesn’t go from subject to object but from the subject through the mediator to the object. Girard’s first and clearest example is Don Quixote, who learns to want what a chivalric knight wants through reading about the hero Amadis of Gaul in medieval romances; his quest is less to possess the knight’s rewards than to become Amadis through this possession. Given Don Quixote’s reputation as the inaugural European novel, it’s no surprise to find that the pattern continues in later fiction: Girard’s main examples are the works of Stendhal, Flaubert, Dostoevsky, and Proust.

That book put Girard on the intellectual map, making him a force to be reckoned with.

And he used that force to organize the 1966 symposium at Johns Hopkins: The Languages of Criticism and the Sciences of Man. Girard provided the visibility and the international connections while Richard Macksey and Eugenio Donato did much of the actual organizing. Here are a few observations Cynthia Haven offered about that symposium in the chapter “The French Invasion” in her biography of Girard, Evolution of Desire: A Life of René Girard (2018):

At that historical moment, “structuralism” was the height of intellectual chic in France, and widely considered to be existentialism’s successor. Structuralism had been born in New York City nearly three decades earlier, when French anthropologist Claude Lévi-Strauss, one of many European scholars fleeing Nazi persecution to the United States, met another refugee scholar, the linguist Roman Jakobson, at the New School for Social Research. The interplay of the two disciplines, anthropology and linguistics, sparked a new intellectual movement. Linguistics became fashionable, and many of the symposium papers were cloaked in its vocabulary.

Girard never saw himself as a structuralist. “He saw himself as his own person, not one of the under-lieutenants of structuralism,” said Macksey. Yet structuralism would have had a natural pull for Girard, who was already moving away from literary concerns and toward more anthropological ones by the time of the symposium. Indeed, in this as in other matters, he was indebted to the structuralists. His own metanarratives strove toward universal truths, akin to the movement that endeavored to discover the basic structural patterns in all human phenomena, from myths to monuments, from economics to fashion.

It's that drive toward “more anthropological” issues that interests me.

For it is through his readings in anthropology that Girard was able to enlarge mimetic theory to embrace society-wide dynamics of conflict and the resolution of conflict through the mechanism of sacrifice. Just how he was able to do that is not my concern here, any more than I was concerned about the validity of Northrop Frye’s archetypal criticism in the previous essay in this series. That led Girard to write his second book, Violence and the Sacred, which came out in English translation in 1977. And from there, apparently – for my own attention was elsewhere – he came up with what is somewhat derisively called a “theory of everything,” albeit one that was convincing enough that he was elected to the Académie Française in 2005. I prefer to think of him as a grand social theorist in the 19th century manner of, say, Karl Marx, Herbert Spencer, or somewhat later, Oswald Spengler. Whether history will remember him as such in 50 years, that’s another matter.

“But,” one might ask, “why did he do it?”

“Well,” might come the response, “there is curiosity.”

“But that’s not enough given the strictures of the modern academy.”

“But it wasn’t always thus, was it? Earlier thinkers had more latitude, no?”

“Yes, but...”

Speculation about Maestro's Oscars prospects

Oscar nominations have been announced. Maestro has been nominated in the following categories:

- Best picture

- Best actor, Bradley Cooper

- Best actress, Carey Mulligan

- Original screenplay, Bradley Cooper and Josh Singer

- Cinematography

- Makeup and Hairstyling

- Sound

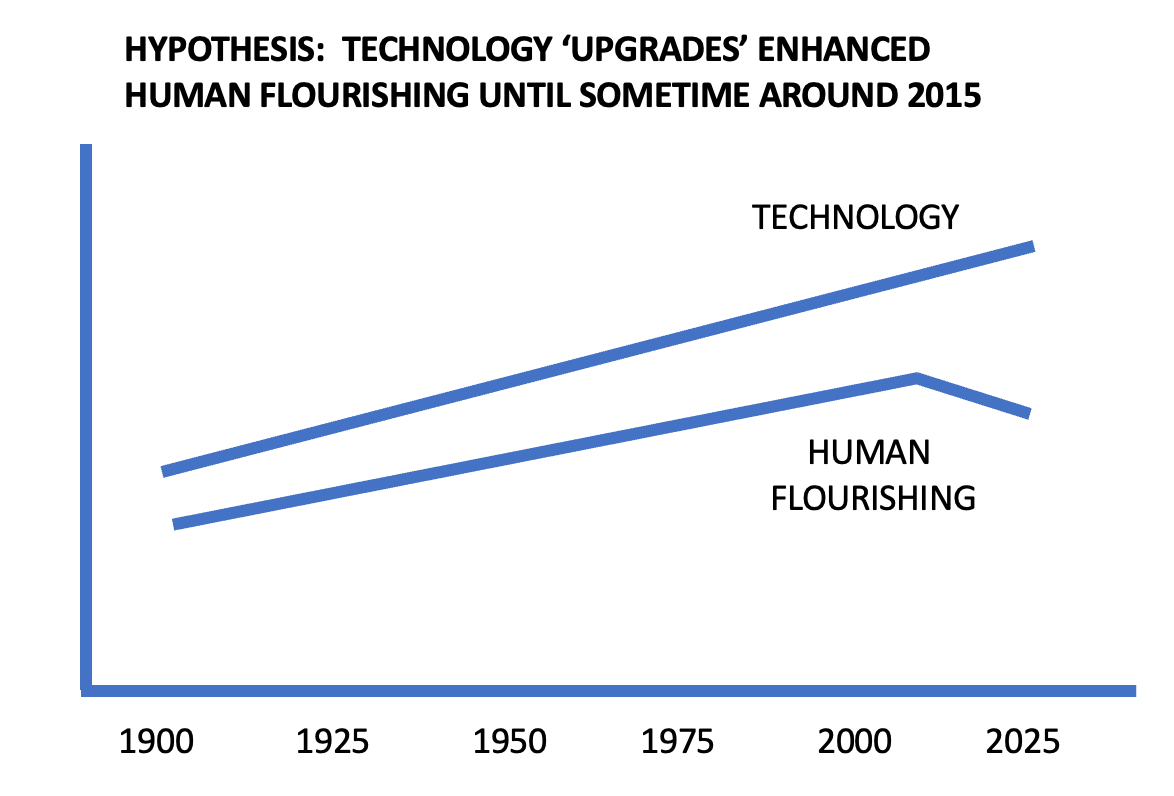

Ted Gioia believes that progress stopped in 2015 and that technocrats don't know diddly squat about human flourishing

Ted Gioia has just published an interesting polemic: I Ask Seven Heretical Questions About Progress. I'll leave you to read his seven heretical questions for yourself, but I'm quoting in full the final section of the post, with which I am deeply sympathetic. I fear that much current public discourse about progress is being driven by people whose minds are stuck in the 19th-century world of the industrial revolution. Coupling that mindset with current technology, particularly artificial intelligence, is a recipe for human disaster.

* * * * *

But let me move on to my radical hypotheses. (I’m running out of space, so these are much shorter.)

But each of these is a huge issue—and my views get more heterodox as you work your way down the list.

So I will need to write about this more in the future.

Progress should be about improving the quality of life and human flourishing. We make a grave error when we assume this is the same as new tech and economic cost-squeezing.

There was a period when new tech improved the quality of life, but that time has now ended. In the last decade, we’ve seen new tech harming the people who use it the most—hence most so-called innovations are now anti-progress by any honest definition.

There was a time when lowering costs improved quality of life—raising millions of people out of poverty all over the world. But in the last decade, cost-squeezing has led to very different results, and is increasingly linked to a collapse in the quality of products and services. Some people get richer from these cost efficiencies, and a larger group move into more intensely consumerist lifestyles—but none of these results (crappy products, super-rich elites, mass consumerist lifestyles, etc.) deserve to be called progress.

The discourse on progress is controlled by technocrats, politicians and economists. But in the current moment, they are the wrong people to decide which metrics drive quality of life and human flourishing.

Real wisdom on human flourishing is now more likely to come from the humanities, philosophy, and the spiritual realms than technocrats and politicians. By destroying these disciplines, we actually reduce our chances at genuine advancement.

Things like music, books, art, family, friends, the inner life, etc. will increasingly play a larger role in quality of life (and hence progress) than gadgets and devices.

Over the next decade, the epicenter for meaningful progress will be the private lives of individuals and small communities. It will be driven by their wisdom, their core values, and the courage of their convictions—none of which will be supplied via virtual reality headsets or apps on their smartphones.

I still have more to say on this. But that’s plenty to chew on for the time being.