
Friday, May 29, 2015

Ayahuasca Variations

This working paper was originally published in Human Nature Review 3 (2003) 239-251: http://human-nature.com/nibbs/03/shanon.html

You can also find it at:

- Academia.edu: https://www.academia.edu/12667500/Ayahuasca_Variations

- Social Science Research Network: http://ssrn.com/abstract=2611959

Abstract: This is a review essay of Benny Shanon, The Antipodes of the Mind: Charting the Phenomenology of the Ayahuasca Experience (Oxford University Press, 2002), which is a detailed phenomenological account of what happens when one takes ayahuasca, a psychoactive concoction from South America. Ayahuasca is traditionally taken in the company of others and accompanied by music, which paces the visions and affects their content. The effect is that of entering another world. I offer some speculation about underlying neural processes, much of it based on Walter Freeman’s speculation that consciousness is organized as short discontinuous whole-hemisphere states. I also speculate on the similarity between the dynamics of ayahuasca experience, as Shanon has described it, and the dynamics of skilled jazz improvisation; and I point out that what Shanon reports as a second-order vision seems to be involved in Coleridge’s “Kubla Khan”.

Contents

What the Brain Does

Another World

Some Temporal Effects

Musical Performance

Xanadu

Sensing the Real

Notes

Acknowledgements

References

Introduction: What the Brain Does

Benny Shanon, The Antipodes of the Mind: Charting the Phenomenology of the Ayahuasca Experience, Oxford University Press, 2002; ISBN: 0199252920

Sometime within the past two or three years I came upon a paper by Eleanor Rosch (1997) in which she observed that “William James speculated about the stream of consciousness at the turn of the century, and the portrayal of stream of consciousness has had various literary vogues, but experimental psychology has remained mute on this point, the very building block of phenomenological awareness.” My impression is that much the same could be said about the recent flood of consciousness studies. The authors of this work tend to treat consciousness as a homogenous metaphysical substance. They are quite interested in the relationship between this substance and the brain but show little interest in the varieties of conscious experience, in how consciousness evolves from one moment to the next.

How is it possible, for example, that I may simultaneously prepare breakfast while thinking about consciousness? Even as I break an egg into a mixing bowl, add some pancake mix, pour in some milk, and begin beating the mixture, I am also thinking about Shanon’s book, Walter Freeman’s neurodynamic theories, Coleridge’s drug-inspired “Kubla Khan” – and then! I look out the window and notice how bright and sunny it is. I have little subjective sense of doing this, then thinking about that, and then back to doing this, and so forth. These seem to be simultaneous streams of attention, like two or three interacting contrapuntal voices in a Bach fugue. If “the mind is what the brain does” (Kosslyn and Koenig 1995, p. 4) then the conscious mind flits from one thing to another in a most interesting way.

Walter Freeman (1999a, pp. 156-158; cf. Varela 1999) speculates that consciousness arises as discontinuous whole-hemisphere states succeeding one another at a “frame rate” of 6 Hz to 10 Hz. Each attention stream would thus consist of a set of discontinuous macroscopic brain states interleaved with the states for the other streams. As an analogy, imagine cutting three different films into short segments of no more than a half dozen or so frames per segment. Join the segments together so that each second or two of projected film contains segments from all three films. Now watch this intercut film. Your mind automatically assigns each short segment to the appropriate stream so that you experience three non-interfering movies more-or-less at once. La Strada, Seven Samurai, and Toy Story, as it were, unfold in your mind each in its own context.
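For readers who like to see an analogy made concrete, here is a minimal sketch of the intercut-film idea in Python. It is only an illustration of the analogy, not a model of anything in Freeman's theory: the function names (`cut`, `intercut`, `reassemble`), the frame labels, and the film lengths are all hypothetical, and each segment carries an explicit film label, which is a simplifying assumption standing in for whatever lets the mind tell the streams apart.

```python
from itertools import zip_longest


def cut(frames, size):
    """Split a list of frames into consecutive segments of at most `size` frames."""
    return [frames[i:i + size] for i in range(0, len(frames), size)]


def intercut(films, size=6):
    """Interleave segments from several films, round-robin, into a single reel.

    Each entry on the reel is a (title, segment) pair. The explicit title is an
    assumption of this sketch; the analogy itself leaves open how segments are
    recognized as belonging to one stream or another.
    """
    tagged = [[(title, seg) for seg in cut(frames, size)]
              for title, frames in films.items()]
    reel = []
    for round_of_segments in zip_longest(*tagged):
        # Shorter films run out first; skip their empty slots.
        reel.extend(item for item in round_of_segments if item is not None)
    return reel


def reassemble(reel):
    """Assign each segment back to its own stream, in order of arrival."""
    streams = {}
    for title, segment in reel:
        streams.setdefault(title, []).extend(segment)
    return streams


# Hypothetical frame labels; the lengths are arbitrary.
films = {
    "La Strada": [f"LS{i}" for i in range(14)],
    "Seven Samurai": [f"SS{i}" for i in range(20)],
    "Toy Story": [f"TS{i}" for i in range(9)],
}

reel = intercut(films, size=6)
recovered = reassemble(reel)
```

Although the reel is a single sequence that jumps among the films every few frames, `recovered` holds three intact, separate streams, which is the point of the analogy.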

That is the mind in action. Yet, as Rosch has noted, cognitive science and consciousness studies have little to say about it. Benny Shanon’s The Antipodes of the Mind: Charting the Phenomenology of the Ayahuasca Experience brings this neglect into sharp relief by presenting us with an account of the different modes of consciousness that emerge when one has taken ayahuasca.

Ayahuasca is a psychoactive drink concocted in South America that induces very strong visions. Shanon is a cognitive psychologist who stumbled on the practice by accident and became interested in the nature of these visions. He started systematically exploring the experience, often through rituals organized by indigenous animist groups and by more recent syncretic groups (the Church of Santo Daime, União de Vegetal, Barquinha), but also with less formal groups involving “independent drinkers” and in sessions by himself. Shanon has participated in over 130 sessions himself and has interviewed 178 other users, of whom “16 were indigenous or persons of mixed race, 106 were residents of urban regions of South America, and 56 were foreigners (that is, persons residing outside South America)” (p. 44).

As the book’s subtitle indicates, it is mostly an extensive investigation of what happens once one has ingested the brew. Much of it consists of more or less richly annotated lists of the sorts of things one sees and experiences. Shanon is not interested in interpreting these visions in the way a literary critic or a Jungian psychologist would. Nor is he interested in explaining what happens in neural terms, about which he has little to say. By the same token, he does not take these experiences at face value either. Ayahuasca visions generally impress themselves on people as sojourns in another world, a world that is at least somehow separate from the mundane world, if not ontologically superior to it (that is, more real). While Shanon feels the pull of such a view, he knows that it is not appropriate to a scientific study. Whatever is happening, it cannot usefully be explained by appeals to the supernatural.

Thursday, May 28, 2015

The universe across eight thresholds of complexity

David Christian discusses Big History at Edge.org. Here's some of that discussion. Since he identifies eight (8) thresholds I've inserted numbers in brackets to help identify them:

We tell it—this is just a convenience—across eight thresholds of increasing complexity. [1] The first is the Big Bang itself, the creation of the universe. [2] The second is the creation of stars. Once you have stars, already the universe has much more diversity. Stars have structure; galaxies have structure. You now have rich gradients of energy, of density, of gravity, so you've got flows of energy that can now build more complex things. [3] Dying stars give you the next threshold, which is creating a universe with all of the elements of the periodic table, so it's now chemically richer. You can now make new materials. You can make the materials of planets, moons, and asteroids. On some planets, particularly rocky planets, you get an astonishing chemical diversity.... Life is a [5] fifth threshold; planets are a [4] fourth threshold. One of the wonderful things about this story is that, as you widen the lens, I'm increasingly convinced that all these very big questions that we're asking that seem impossible when seen from within the disciplinary silos begin to look manageable from the large scale. Let me give two examples. One is life itself. I have a feeling that within this story it's possible to offer a fairly simple but powerful definition of what makes life a different level of complexity from the complexity of, say, simple chemical molecules or stars, or galaxies. With life, you get complex entities appearing in extremely unstable environments. Stars create their own environments so that they can work mechanically. If you have complex things in very unstable environments, they need to be able to manage energy flows to maintain their complexity. If the environments are constantly changing, they need some mechanism for detecting changes. That is the point at which information enters the story. What were just variations in the universe suddenly become information, because something is responding in a complex way to those variations.
Something like choice enters the story because no longer do living organisms make choices mechanically; they make choices in a more complex way. You can't always guarantee that they're going to make the same choice. That's where natural selection kicks in.

And now we seem to have a slip in the numbering:

If you move on to human beings ([5] our fifth threshold of increasing complexity) you can ask the question, which students are dying to ask: What makes humans different? It's a question that the humanities have struggled with for centuries. Again, I have the hunch that within this very broad story, there's a fairly clear answer to that. If all living organisms use information about their environments to control and manage the energy flows they need to survive—biologists call it metabolism—or to constantly adjust—homeostasis—then we know that most living organisms have a limited repertoire. When a new species appears, its numbers will increase until it's using the energy that its particular metabolic repertoire allows it to fill. Yet look at graphs of human population growth and something utterly different is going on. Here, you have a species that appears in probably the savanna lands of East Africa, but it doesn't stay there. During the Paleolithic—over perhaps 200,000 years—you can watch the species, certainly in the last 60,000 years, slowly spreading into new niches; coastal niches in South Africa. Blombos Cave is a wonderful site that illustrates that. Then eventually desert lands, forest lands, eventually into ice age Siberia, across to Australia. By 10,000 years ago our species had spread around the world. This is utterly new behavior. This is a species that is acquiring more, and more, and more information. That is the key to what makes us different.

At this point I'm going to switch over to the website for The Big History Project and just list the rest:

[6] Collective Learning: How humans are different.

[7] Agriculture: How farming sows the seeds of civilization.

[8] The Modern Revolution: Why change accelerates faster and faster.

* * * * *

From time to time I've taken a somewhat different look at the big picture. For example:

- Living with Abundance in a Pluralist Cosmos: Some Metaphysical Sketches

- The Abundance Principle and The Fourth Arena

Physicist Sean Carroll on Complexity – Skating on the edge between science and philosophy

At Edge.org:

You can think about the universe as a cup of coffee: You're taking cream and you're mixing it into the coffee. When the cream and the coffee are separate, it's simple and it's organized; it's low entropy. When you've mixed them all together, it's high entropy. It's disorganized but it's still simple; everything is mixed together. It's in the middle, when the swirls of cream are mixing into the swirls of coffee, that you get this intricate, complex structure. You and I—human beings—are those intricate swirls in the cup of coffee. We are the little epiphenomena that occur along the way from a simple low entropy past to a simple high entropy future. We are the complexity along the way…. My medium-scale research project these days is understanding complexity and structure and how it arises through the workings out of the laws of physics. My bigger picture question is about how human beings fit into this. We live in part of the natural world. We are collections of molecules undergoing certain chemical processes. We came about through certain physical processes. What are we going to do about that? What are we going to make of that? Are we going to dissolve in existential anxiety, or are we going to step up to the plate and create the kind of human scale world with value and meaning that we all want to live in?

On causality:

But the idea A precedes B and, therefore, A causes B is a feature of our big macroscopic world. It's not a feature of particle physics. In the underlying microscopic world you can run forward and backward in time just as easily one way as the other. This is something we all think is true. It is not something we understand at this level of deriving one set of results from another. If you want to know why notions of cause and effect work in the macroscopic world even though they're absent in the microscopic world, no one completely understands that. It has something to do with the arrow of time and entropy and the fact that entropy is increasing. This is a connection between fundamental physics, and social science, and working out in the world of sociology or psychology why does one effect get traced back to a certain kind of cause. A physicist is going to link that to the low entropy that we had near the Big Bang.

Inflation and many universes:

Think about how this picture developed: we have our observations, we have our universe, and we're trying to explain it. We come up with a theory to explain it, and we predict the existence essentially of other universes, and then the question is a combination of science and philosophy once again. What do we make of these other universes that we don't see? They're predicted by our theory. Do we take them seriously or not? That's a question that's hard to adjudicate by traditional scientific methods. We don't know how to go out and look for these universes. We don't even think it's possible maybe to do so.

H/t 3QD.

Wednesday, May 27, 2015

Could Heart of Darkness have been published in 1813? – a digression from Underwood and Sellers 2015

Here I’m just thinking out loud. I want to play around a bit.

Conrad’s Heart of Darkness is well within the 1820-1919 time span covered by Underwood and Sellers in How Quickly Do Literary Standards Change?, while Austen’s Pride and Prejudice, published in 1813, is a bit before. And both are novels, while Underwood and Sellers wrote about poetry. But these are incidental matters. My purpose is to think about literary history and the direction of cultural change, which is front and center in their inquiry. But I want to think about that topic in a hypothetical mode that is quite different from their mode of inquiry.

So, how likely is it that a book like Heart of Darkness would have been published in the second decade of the 19th century, when Pride and Prejudice was published? A lot, obviously, hangs on that word “like”. For the purposes of this post likeness means similar in the sense that Matt Jockers defined in Chapter 9 of Macroanalysis. For all I know, such a book may well have been published; if so, I’d like to see it. But I’m going to proceed on the assumption that such a book doesn’t exist.

The question I’m asking is about whether or not the literary system operates in such a way that such a book is very unlikely to have been written. If that is so, then what happened that the literary system was able to produce such a book almost a century later?

What characteristics of Heart of Darkness would have made it unlikely/impossible to publish such a book in 1813? For one thing, it involved a steamship, and steamships didn’t exist at that time. This strikes me as a superficial matter given the existence of ships of all kinds and their extensive use for transport on rivers, canals, lakes, and oceans.

Another superficial impediment is the fact that Heart is set in the Belgian Congo, but the Congo hadn’t been colonized until the last quarter of the century. European colonialism was quite extensive by that time, and much of it was quite brutal. So far as I know, the British novel in the early 19th century did not concern itself with the brutality of colonialism. Why not? Correlatively, the British novel of the time was very much interested in courtship and marriage, topics not central to Heart, but not entirely absent either.

The world is a rich and complicated affair, bursting with stories of all kinds. But some kinds of stories are more salient in a given tradition than others. What determines the salience of a given story and what drives changes in salience over time? What had happened that colonial brutality had become highly salient at the turn of the 20th century?

Tuesday, May 26, 2015

Why I like this photo of an iris

I can imagine that, on first seeing it, some might be puzzled about just what this photograph depicts. Of course, I’m not puzzled, because I was there when I took the photo. I know it depicts an iris, or at any rate, parts of some iris blossoms. But there is no single blossom solidly in view; just fragments at various positions, angles, and degrees of focus.

The photo is a bit of a puzzle, though I didn’t shoot it in order to pose riddles. I’m not trying to fool or mystify you. I just want you to look at, and enjoy, the photo.

I can imagine that someone who is intimately familiar with irises would identify the subject of the image more readily than someone who is not. Someone who’d never seen an iris, except perhaps in a Japanese print, might not recognize the irises at all. And if they’d never even heard of irises, much less seen them, how could they possibly recognize them in the photo? But they would surely conclude that they’re looking at some kind of flower, some petals and what not.

As you surely are.

What’s at the in-focus center of the photo in shades of light tan? I believe it is a dead blossom that has dried and lost its color. And then to the right below, a large area of shaded purples, a near petal that’s out of focus. At the upper edge of that purple and to the right, a bit of yellow, and then whites and lavender. The various petals to the left are more sharply focused.

Then there’s the play of light and shadow in and among the petals. That, as much as the petals themselves, is what this photograph is about.

And that is why I like this photograph.

Monday, May 25, 2015

Underwood and Sellers 2015: Beyond Whig History to Evolutionary Thinking

Evolutionary processes allow populations to survive and thrive in a world of contingencies

In the middle of their most interesting and challenging paper, How Quickly Do Literary Standards Change?, Underwood and Sellers have two paragraphs in which they raise the specter of Whig history and banish it. In the process they take some gratuitous swipes at Darwin and Lamarck and, by implication, at the idea that evolutionary thinking can be of benefit to literary history. I find these two paragraphs confused and confusing and so feel a need to comment on them.

Here’s what I’m doing: First, I present those two paragraphs in full, without interruption. That’s so you can get a sense of how their thought hangs together. Second, and the bulk of this post, I repeat those two paragraphs, in full, but this time with inserted commentary. Finally, I conclude with some remarks on evolutionary thinking in the study of culture.

Beware of Whig History

By this point in their text Underwood and Sellers have presented their evidence and their basic, albeit unexpected, finding that change in English-language poetry from 1820-1919 is continuous and in the direction of standards implicit in the choices made by 14 selective periodicals. They’ve even offered a generalization that they think may well extend beyond the period they’ve examined (p. 19): “Diachronic change across any given period tends to recapitulate the period’s synchronic axis of distinction.” While I may get around to discussing that hypothesis – which I like – in another post, we can set it aside for the moment.

I’m interested in two paragraphs they write in the course of showing how difficult it will be to tease a causal model out of their evidence. Those paragraphs are about Whig history. Here they are in full and without interruption (pp. 20-21):

Nor do we actually need a causal explanation of this phenomenon to see that it could have far-reaching consequences for literary history. The model we’ve presented here already suggests that some things we’ve tended to describe as rejections of tradition — modernist insistence on the concrete image, for instance — might better be explained as continuations of a long-term trend, guided by established standards. Of course, stable long-term trends also raise the specter of Whig history. If it’s true that diachronic trends parallel synchronic principles of judgment, then literary historians are confronted with material that has already, so to speak, made a teleological argument about itself. It could become tempting to draw Lamarckian inferences — as if Keats’s sensuous precision and disillusionment had been trying to become Swinburne all along.

We hope readers will remain wary of metaphors that present historically contingent standards as an impersonal process of adaptation. We don’t see any evidence yet for analogies to either Darwin or Lamarck, and we’ve insisted on the difficulty of tracing causality exactly to forestall those analogies. On the other hand, literary history is not a blank canvas that acquires historical self-consciousness only when retrospective observers touch a brush to it. It’s already full of historical observers. Writing and reviewing are evaluative activities already informed by ideas about “where we’ve been” and “where we ought to be headed.” If individual writers are already historical agents, then perhaps the system of interaction between writers, readers, and reviewers also tends to establish a resonance between (implicit, collective) evaluative opinions and directions of change. If that turns out to be true, we would still be free to reject a Whiggish interpretation, by refusing to endorse the standards that happen to have guided a trend. We may even be able to use predictive models to show how the actual path of literary history swerved away from a straight line. (It’s possible to extrapolate a model of nineteenth-century reception into the twentieth, for instance, and then describe how actual twentieth-century reception diverged from those predictions.) But we can’t strike a blow against Whig history simply by averting our eyes from continuity. The evidence we’re seeing here suggests that literary-historical trends do turn out to be relatively coherent over long timelines.

I agree with those last two sentences. It’s how Underwood and Sellers get there that has me a bit puzzled.

Oh woe is us! Those horrible horrible AIs are out to get us

These days some Very Smart, Very Rich, and Very Intellectual people are worried that the computers will do us in the first chance they get. Nonsense!

We would do well to recall that Nick Bostrom is one of these thinkers. He first gained renown from his fiendishly constructed "we are The Matrix" argument, in which he went from

1) super-intelligent AI and mega-ginormous capacity computers are inevitable

to

2) the people who create them will likely devote a lot of time to Whole-World simulations of their past

to

3) more likely than not, we're just simulated creatures having simulated life in one of these simulations.

From that it follows that the existential dangers posed by advanced AI are only simulated dangers posed by simulated advanced AI. Why should we worry about that? After all, we're not even real. We're just simulations.

The REAL problem is that so many people seem to think this is real thought, even profound thought, rather than superstitious twaddle. We look back at medieval theologians who worried about how many angels could dance on the head of a pin and think, "How silly." Yes, it's silly, but it seemed real enough to advanced thinkers at the time. These fears of superintelligent evil computers are just as silly. Get over it.

Sunday, May 24, 2015

Advanced AI, friend or foe? Answers from David Ferrucci and from Benzon and Hays

David Ferrucci:

“To me, there’s a very deep philosophical question that I think will rattle us more than the economic and social change that might occur,” Ferrucci said as we ate. “When machines can solve any given task more successfully than humans can, what happens to your sense of self? As humans, we went from the chief is the biggest and the strongest because he can hurt anyone to the chief is the smartest, right? How smart are you at figuring out social situations, or business situations, or solving complex science or engineering problems. If we get to the point where, hands down, you’d give a computer any task before you’d give a person any task, how do you value yourself?”

Ferrucci said that though he found Tegmark’s sensitivity to the apocalypse fascinating, he didn’t have a sense of impending doom. (He hasn’t signed Tegmark’s statement.) Some jobs would likely dissolve and policymakers would have to grapple with the social consequences of better machines, Ferrucci said, but this seemed to him just a fleeting transition. “I see the endgame as really good in a very powerful way, which is human beings get to do the things they really enjoy — exploring their minds, exploring thought processes, their conceptualizations of the world. Machines become thought-partners in this process.”

This reminded me of a report I’d read of a radical group in England that has proposed a ten-hour human workweek to come once we are dependent upon a class of beneficent robot labor. Their slogan: “Luxury for All.” So much of our reaction to artificial intelligence is relative. The billionaires fear usurpation, a loss of control. The middle-class engineers dream of leisure. The idea underlying Ferrucci’s vision of the endgame was that perhaps people simply aren’t suited for the complex cognitive tasks of work because, in some basic biological sense, we just weren’t made for it. But maybe we were made for something better.

From Benjamin Wallace-Wells, Jeopardy! Robot Watson Grows Up, New York Magazine, 20 May 2015.

William Benzon and David Hays:

One of the problems we have with the computer is deciding what kind of thing it is, and therefore what sorts of tasks are suitable to it. The computer is ontologically ambiguous. Can it think, or only calculate? Is it a brain or only a machine?

The steam locomotive, the so-called iron horse, posed a similar problem for people at Rank 3. It is obviously a mechanism and it is inherently inanimate. Yet it is capable of autonomous motion, something heretofore only within the capacity of animals and humans. So, is it animate or not? Perhaps the key to acceptance of the iron horse was the adoption of a system of thought that permits separation of autonomous motion from autonomous decision. The iron horse is fearsome only if it may, at any time, choose to leave the tracks and come after you like a charging rhinoceros. Once the system of thought had shaken down in such a way that autonomous motion did not imply the capacity for decision, people made peace with the locomotive.

The computer is similarly ambiguous. It is clearly an inanimate machine. Yet we interact with it through language; a medium heretofore restricted to communication with other people. To be sure, computer languages are very restricted, but they are languages. They have words, punctuation marks, and syntactic rules. To learn to program computers we must extend our mechanisms for natural language.

As a consequence it is easy for many people to think of computers as people. Thus Joseph Weizenbaum (1976), with considerable dis-ease and guilt, tells of discovering that his secretary "consults" Eliza--a simple program which mimics the responses of a psychotherapist--as though she were interacting with a real person. Beyond this, there are researchers who think it inevitable that computers will surpass human intelligence and some who think that, at some time, it will be possible for people to achieve a peculiar kind of immortality by "downloading" their minds to a computer. As far as we can tell such speculation has no ground in either current practice or theory. It is projective fantasy, projection made easy, perhaps inevitable, by the ontological ambiguity of the computer. We still do, and forever will, put souls into things we cannot understand, and project onto them our own hostility and sexuality, and so forth.

A game of chess between a computer program and a human master is just as profoundly silly as a race between a horse-drawn stagecoach and a train. But the silliness is hard to see at the time. At the time it seems necessary to establish a purpose for humankind by asserting that we have capacities that it does not. To give up the notion that one has to add "because . . . " to the assertion "I'm important" is truly difficult. But the evolution of technology will eventually invalidate any claim that follows "because." Sooner or later we will create a technology capable of doing what, heretofore, only we could.

From William Benzon and David G. Hays, The Evolution of Cognition, Journal of Social and Biological Structures 13(4): 297-320, 1990.

Saturday, May 23, 2015

The Art of Rotoscope: Taylor Swift Remade

H/t Nina Paley.

49 University of Newcastle Australia animation students were each given 52 frames of Taylor Swift's Shake it Off music video, and together they produced 2767 frames of lovingly hand-drawn rotoscoped animation footage. http://bit.ly/1PzUznI Thank you to all the students of DESN2801: Animation 1 for your enthusiasm, good humour and terrific roto skills!

Friday, May 22, 2015

The life of a hired intellectual gun

Xerox was developing a new operating system for its ill-fated line of computers. Their testing group was falling behind. Learning I had a statistical background as well as one in programming, I was asked to do two things. First, tell us why no matter how many testers we hire, we never seem to get ahead. Second, tell us when the testing will be complete.

The second question was the easiest. Each week, the testing group was discovering more and more errors at an accelerating rate. “Never, the testing will never be complete” was the answer.

The first question was also easy. They already knew the answer. They just wanted me, their consultant, that is, their patsy to be the one responsible for pointing it out. Seems the testing group had been given exactly four computers. Whenever a bug was discovered, the offending computer would sit idle until a member of the programming group could swing by and do a dump. So mostly, the testers played cards, gossiped or used the phones. When we got further and further behind, we were asked to come in Saturdays. The programming group never worked on Saturdays, so that really wasn’t much of a plan.

A sage observation about the consulting biz:

With a large well-established firm, there is one and only one reason you are being hired. The project is a disaster and every knowledgeable employee has already bailed.

Underwood and Sellers 2015: Cosmic Background Radiation, an Aesthetic Realm, and the Direction of 19thC Poetic Diction

I’ve read and been thinking about Underwood and Sellers 2015, How Quickly Do Literary Standards Change?, both the blog post and the working paper. I’ve got a good many thoughts about their work and its relation to the superficially quite different work that Matt Jockers did on influence in chapter nine of Macroanalysis. I am, however, somewhat reluctant to embark on what might become another series of long-form posts, which I’m likely to need in order to sort out the intuitions and half-thoughts that are buzzing about in my mind.

What to do?

I figure that at the least I can just get it out there, quick and crude, without a lot of explanation. Think of it as a mark in the sand. More detailed explanations and explorations can come later.

19th Century Literary Culture has a Direction

My central thought is this: Both Jockers on influence and Underwood and Sellers on literary standards are looking at the same thing: long-term change in 19th Century literary culture has a direction – where that culture is understood to include readers, writers, reviewers, publishers and the interactions among them. Underwood and Sellers weren’t looking for such a direction, but have (perhaps somewhat reluctantly) come to realize that that’s what they’ve stumbled upon. Jockers seems a bit puzzled by the model of influence he built (pp. 167-168); but in any event, he doesn’t recognize it as a model of directional change. That interpretation of his model is my own.

When I say “direction” what do I mean?

That’s a very tricky question. In their full paper Underwood and Sellers devote two long paragraphs (pp. 20-21) to warding off the spectre of Whig history – the horror! the horror! In the Whiggish view, history has a direction, and that direction is a progression from primitive barbarism to the wonders of (current Western) civilization. When they talk of direction, THAT’s not what Underwood and Sellers mean.

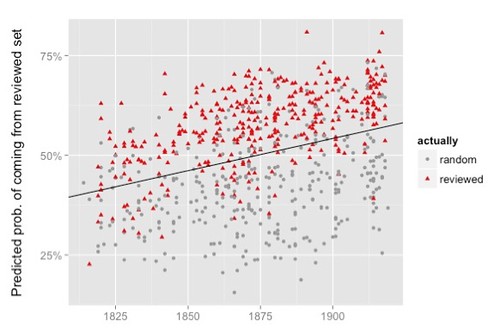

But just what DO they mean? Here’s a figure from their work:

Notice that we’re depicting time along the X-axis (horizontal), from roughly 1820 at the left to 1920 on the right. Each dot in the graph, regardless of color (red, gray) or shape (triangle, circle), represents a volume of poetry, and its position on the X-axis is the volume’s publication date.

But what about the Y-axis (vertical)? That’s tricky, so let us set that aside for a moment. The thing to pay attention to is the overall relation of these volumes of poetry to that axis. Notice that as we move from left to right, the volumes seem to drift upward along the Y-axis, a drift that’s easily seen in the trend line. That upward drift is the direction that Underwood and Sellers are talking about. That upward drift was not at all what they were expecting.

Drifting in Space

But what does the upward drift represent? What’s it about? It represents movement in some space, and that space represents poetic diction or language. What we see along the Y-axis is a one-dimensional reduction or projection of a space that in fact has 3200 dimensions. Now, that’s not how Underwood and Sellers characterize the Y-axis. That’s my reinterpretation of that axis. I may or may not get around to writing a post in which I explain why that’s a reasonable interpretation.
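Here is a minimal sketch of what a one-dimensional projection of a 3200-dimensional space amounts to, assuming a simple linear projection (a dot product with a direction vector). The vectors and the `direction` are made-up illustrations, not data or weights from Underwood and Sellers; in their work, whatever plays the role of the direction would be learned from the texts.

```python
import random


def project(vector, direction):
    """Return the scalar coordinate of `vector` along `direction` (a dot product)."""
    return sum(v * d for v, d in zip(vector, direction))


random.seed(0)
DIMENSIONS = 3200  # the dimensionality mentioned in the text

# Hypothetical direction in the space; a real model would learn this from data.
direction = [random.gauss(0, 1) for _ in range(DIMENSIONS)]

# A made-up "early" volume: one point in the 3200-dimensional space.
early_volume = [random.gauss(0, 1) for _ in range(DIMENSIONS)]

# A made-up "late" volume: the early one nudged slightly along the direction.
late_volume = [v + 0.01 * d for v, d in zip(early_volume, direction)]

early_y = project(early_volume, direction)
late_y = project(late_volume, direction)

# The nudge along the direction shows up as a higher position on the single axis.
print(late_y > early_y)
```

Each volume collapses to a single number, and a small movement along the chosen direction in the full space appears as upward drift on that one axis.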

Mad Max: Fury Road – notes toward a psycho-kinetic reading

This is a quick note I posted to a private online forum. I may or may not expand on it later on, but I wanted to get the note out here in public space as well. Why? If you've seen it, you know that it's basically a two-hour chase scene. As I watched it I kept asking myself: Why? The last paragraph of this note hints at an answer to that question.

* * * * *

Have you seen the recent Mad Max movie, Charles? I ask, Charles, because some shots from it came to mind when you talked of the hope and fervor of millennialism. As you know, the films are set in a rather dystopian post-apocalyptic desert world. In the current entry in the MM franchise, the 4th film and the first in 30 years, the fervor is expressed by War Boys who spray chrome paint around their mouths and on their lips and teeth. Their hope is to be transported to Valhalla when they die in battle.

There are two other things that make the film interesting: the surprising emergence of feminism in the film and the gorgeousness of much of it.

And, as I think about it, I guess there's a third thing. The film is basically a two-hour chase scene. I can tell you that, more than once, I said to myself: "Jeez! Are we to have no relief? Aren't we going to get something other than chase chase chase!?"

I'm now thinking that that unrelenting chase is the psycho-kinetic sea in which the millennial fervor of the War Boys floats. Of course, the War Boys are not on the side of THE GOOD in this film, a side that develops that unexpected feminist aspect as the chase rolls along. But one of the War Boys gets isolated from his fellows and he converts to the Mad Max/Furiosa/feminist cause.

* * * * *

That's the note. If I get around to commenting, I'll be wanting to argue that it's not only the War Boys' apocalyptic hope that floats on that psycho-kineticism, but the feminist alternative. And that in some way that psycho-kineticism merges the two, the War Boys and the newly empowered Breeders, and Max and Furiosa (the one-armed).

Tuesday, May 19, 2015

Does hot water freeze faster than cold?

In some circumstances, it does. The question is an old one, dating back at least to Aristotle, and turns out to be surprisingly complex. This paper is a good study in the subtleties of getting clear answers from Nature.

Monwhea Jeng, Physics Department, Box 1654, Southern Illinois University Edwardsville, Edwardsville, IL, 62025

H/t Faculty of Language.

We review the Mpemba effect, where initially hot water freezes faster than initially cold water. While the effect appears impossible at first sight, it has been seen in numerous experiments, was reported on by Aristotle, Francis Bacon, and Descartes, and has been well-known as folklore around the world. It has a rich and fascinating history, which culminates in the dramatic story of the secondary school student, Erasto Mpemba, who reintroduced the effect to the twentieth century scientific community. The phenomenon, while simple to describe, is deceptively complex, and illustrates numerous important issues about the scientific method: the role of skepticism in scientific inquiry, the influence of theory on experiment and observation, the need for precision in the statement of a scientific hypothesis, and the nature of falsifiability. We survey proposed theoretical mechanisms for the Mpemba effect, and the results of modern experiments on the phenomenon. Studies of the observation that hot water pipes are more likely to burst than cold water pipes are also described.

Monday, May 18, 2015

When birds talk, other creatures listen

From the NYTimes:

Studies in recent years by many researchers, including Dr. Greene, have shown that animals such as birds, mammals and even fish recognize the alarm signals of other species. Some can even eavesdrop on one another across classes. Red-breasted nuthatches listen to chickadees. Dozens of birds listen to tufted titmice, who act like the forest’s crossing guards. Squirrels and chipmunks eavesdrop on birds, sometimes adding their own thoughts. In Africa, vervet monkeys recognize predator alarm calls by superb starlings.

Dr. Greene says he wants to better understand the nuances of these bird alarms. His hunch is that birds are saying much more than we ever suspected, and that species have evolved to decode and understand the signals. He acknowledged the obvious Dr. Dolittle comparison: “We’re trying to understand this sort of ‘language’ of the forest.”...

Dr. Greene, working with a student, has also found that “squirrels understand ‘bird-ese,’ and birds understand ‘squirrel-ese.’ ” When red squirrels hear a call announcing a dangerous raptor in the air, or they see such a raptor, they will give calls that are acoustically “almost identical” to the birds, Dr. Greene said. (Researchers have found that eastern chipmunks are attuned to mobbing calls by the eastern tufted titmouse, a cousin of the chickadee.)

Other researchers study bird calls just as intently. Katie Sieving, a professor of wildlife ecology and conservation at the University of Florida, has found that tufted titmice act like “crossing guards” and that other birds hold back from entering hazardous open areas in a forest if the titmice sound any alarm. Dr. Sieving suspects that the communication in the forest is akin to an early party telephone line, with many animals talking and even more listening in — perhaps not always grasping a lot, but often just enough.

Sunday, May 17, 2015

Women of the Upper East Side: A world just across the river but beyond my experience

A wife bonus, I was told, might be hammered out in a pre-nup or post-nup, and distributed on the basis of not only how well her husband’s fund had done but her own performance — how well she managed the home budget, whether the kids got into a “good” school — the same way their husbands were rewarded at investment banks. In turn these bonuses were a ticket to a modicum of financial independence and participation in a social sphere where you don’t just go to lunch, you buy a $10,000 table at the benefit luncheon a friend is hosting.

Women who didn’t get them joked about possible sexual performance metrics. Women who received them usually retreated, demurring when pressed to discuss it further, proof to an anthropologist that a topic is taboo, culturally loaded and dense with meaning.

But what exactly did the wife bonus mean? It made sense only in the context of the rigidly gendered social lives of the women I studied. The worldwide ethnographic data is clear: The more stratified and hierarchical the society, and the more sex segregated, the lower the status of women.

Saturday, May 16, 2015

Friday, May 15, 2015

Friday Fotos: What’s an Aesthetic? – 3

The two previous posts in this series – What’s an Aesthetic? and What’s an Aesthetic? – 2 – used simple visual material and were mostly about light and color. We had a simple object in the foreground and it was cleanly and dramatically separated from a simple background. The second post, however, ended with an image involving fairly complex geometric manipulation (though it was simple enough to do in Photoshop). And that manipulation gave me the idea for the images in this post.

What if, instead of simple visual material, I start with complex visual material? I decided to work with a photograph of an iris, but not a standard shot. I took this shot blind, from below – I held the camera low to the ground, angled it up toward the flower, and snapped the shot when the auto-focus had clicked:

We’ve got rich colors and a complex floral form that almost blurs into the background at points.

Now we start messing with the geometry. If you just saw this image alone, with no commentary, it might take you a while to notice that it has been manipulated:

The most obvious evidence is at the bottom center. In this image I’ve manipulated the color in an anti-realistic way:

I’m not sure what I think of that one, but . . . I decided to cross it with a more natural seeming one to get this:

Notice the green areas in the blue/purple petals at the left and right.

Are these images visually interesting, even compelling? Yes, on the first, not sure on the second. It’s worth playing with, though I’m not sure to what end.

Memories of a King, the Beale Street Blues Boy

It’s possible that the first photo I ever saw of B. B. King was on the cover of Charlie Keil’s Urban Blues, an ethnographic study that spilled over into common discourse and made Charlie’s career. And I’m sure I read more about him in that book than I’ve read about him since then. But I don’t recall when I first heard King’s music and I only ever saw him live but twice in my life, once at the Saratoga Performing Arts Center (SPAC) in upstate New York in the late 1970s or early 1980s and then a bit later in Albany, New York, when I opened for him as a member of The Out of Control Rhythm and Blues Band.

I don’t remember much about the SPAC performance except that before long he had us dancing in the aisles, at least those of us close enough to the aisles that we could get out there and dance. The rest of the rather considerable audience had to be content with giggling and grooving in or in front of their seats. By this time, of course, King’s days of struggling were over and his audiences were mostly white, as are most of the people in the USA – though those days will come to an end some time later in this century.

Dancing in the aisles: that’s the point, isn’t it? The music enters your body, lifts it up, and you become spirit. The blues? Why not, the blues?

My memories of the Albany gig are a bit richer. To be sure, as I recall, King’s music was better at that SPAC gig, for the music comes and goes even with the best of them. Our manager (and saxophonist) Ken Drumm had seen to it that King had champagne waiting for him when he arrived in his dressing room and that got us an opportunity to meet him after the gig. But we had to line up with everyone else – mostly middle-aged ladies in big hats and Sunday dresses – and wait our turn. We didn’t have more than a minute, if that, in the man’s presence.

And that meeting is worth thinking about in itself. King was the son of Mississippi sharecroppers. I don’t know about the rest of his band, but I recall a thing or two about Out of Control’s line-up at the time: two lawyers (I suppose we could call them ‘Big City’ lawyers for contrast, though Albany isn’t that big of a city), an advertising executive (that would be Mr. Drumm), a commercial photographer, a Berklee College drop-out, a car salesman, and an independent scholar (me). All brought together in the same place at the same time to worship in the church of the blues. I suppose I could invoke the melting pot cliché, but there was no melting going on, though the music was hot enough. As for the pot, to my knowledge the Out of Control boys were clean that night. I have no knowledge of BB’s band.

Thursday, May 14, 2015

Knowledge of art history allows you to see photographic opportunities in the world

Art Wolfe is an acclaimed nature photographer. In this lecture, which he delivered at Google, he explains how his knowledge of art history – Seurat, Monet, van Gogh, Picasso, Georgia O'Keeffe – taught him to see the world.

Wednesday, May 13, 2015

Cotton and Capitalism

Writing in Foreign Affairs, Jeremy Adelman reviews three recent books on the origins of capitalism. Two espouse an "internalist" story, locating capitalism entirely within the West, and the third, Empire of Cotton, by Sven Beckert, tells an "externalist" story, locating capitalism in a complex set of relations between Europe and the rest of the world.

A narrative as capacious as this threatens to groan under the weight of heavy concepts. In fact, Beckert dodges and weaves between the big claims and great detail. His portrait of Liverpool, “the epicenter of a globe-spanning empire,” puts readers on the wharves and behind the desks of the credit peddlers. His description of the American Civil War as “an acid test for the entire industrial order” is a brilliant example of how global historians might tackle events—as opposed to focusing on structures, processes, and networks—because he shows how the crisis of the U.S. cotton economy reverberated in Brazil, Egypt, and India. The scale of what Beckert has accomplished is astonishing.

Beckert turns the internalist argument on its head. He shows how the system started with disparate parts connected through horizontal exchanges. He describes how it transformed into integrated, hierarchical, and centralized structures—which laid the foundations for the Industrial Revolution and the beginnings of the great divergence between the West and the rest. Beckert’s cotton empire more than defrocks the internalists’ happy narrative of the West’s self-made capitalist man. The rise of capitalism needed the rest, and getting the rest in line required coercion, violence, and the other instruments of imperialism. Cotton, “the fabric of our lives,” as the jingle goes, remained an empire because it, like the capitalist system it produced, depended on the subjugation of some for the benefit of others.

Adelman notes that, in general, sorting out causality is tough:

To explain why some parts of the world struggled, one should not have to choose between externalist theories, which rely on global injustices, and internalist ones, which invoke local constraints.

Indeed, it’s the interaction of the local and the global that makes breakouts so difficult—or creates the opportunity to escape. In between these scales are complex layers of policies and practices that defy either-or explanations. In 1521, the year the Spanish defeated the Aztec empire and laid claim to the wealth of the New World, few would have predicted that England would be an engine of progress two centuries later; even the English would have bet on Spain or the Ottoman Empire, which is why they were so committed to piracy and predation. Likewise, in 1989, as the Berlin Wall fell and Chinese tanks mowed through Tiananmen Square, “Made in China” was a rare sight. Who would have imagined double-digit growth from Maoist capitalism? Historians have trouble explaining success stories in places that were thought to lack the right ingredients. The same goes for the flops. In 1914, Argentina ranked among the wealthiest capitalist societies on the planet. Not only did no one predict its slow meltdown, but millions bet on Argentine success. To find clues to success or failure, then, historians should look not at either the world market or local initiatives but at the forces that combine them.

I suspect, and certainly hope, that a good formulation of cultural evolutionary processes will help sort out the causal mechanisms. Just why I believe this is not so easy for me to formulate, but it has to do with thinking about social groups as "transmitters" of economic and political forces, the flow of goods, labor, and control. It's about scale, from individuals to companies, and states.

H/t 3QD.


Moore's "Law" at 50

It's not a law, of course; it's an empirical generalization. But it's held up remarkably well. Gordon Moore, then head of research for Fairchild Semiconductor, made his prediction back in 1965. He spoke to Tom Friedman of the NYTimes:

“The original prediction was to look at 10 years, which I thought was a stretch. This was going from about 60 elements on an integrated circuit to 60,000 — a thousandfold extrapolation over 10 years. I thought that was pretty wild. The fact that something similar is going on for 50 years is truly amazing. You know, there were all kinds of barriers we could always see that [were] going to prevent taking the next step, and somehow or other, as we got closer, the engineers had figured out ways around these. But someday it has to stop. No exponential like this goes on forever.”
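The arithmetic behind that "thousandfold extrapolation" is worth a quick check. This is a small sketch using only the figures Moore gives in the quote (60 elements, 60,000 elements, 10 years); the variable names are mine.

```python
import math

start_elements = 60      # elements per integrated circuit at the start
end_elements = 60_000    # elements predicted ten years on
years = 10

growth_factor = end_elements / start_elements  # the "thousandfold" figure
doublings = math.log2(growth_factor)           # how many doublings that takes
years_per_doubling = years / doublings         # implied doubling period

print(f"growth factor: {growth_factor:.0f}")
print(f"doublings needed: {doublings:.2f}")
print(f"years per doubling: {years_per_doubling:.2f}")
```

A thousandfold increase is just under ten doublings, so the original ten-year prediction amounts to roughly one doubling per year.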

The federal government, alas, has slowed down its investment in basic research:

“I’m disappointed that the federal government seems to be decreasing its support of basic research,” said Moore. “That’s really where these ideas get started. They take a long time to germinate, but eventually they lead to some marvelous advances. Certainly, our whole industry came out of some of the early understanding of the quantum mechanics of some of the materials. I look at what’s happening in the biological area, which is the result of looking more detailed at the way life works, looking at the structure of the genes and one thing and another. These are all practical applications that are coming out of some very fundamental research, and our position in the world of fundamental science has deteriorated pretty badly. There are several other countries that are spending a significantly higher percentage of their G.N.P. than we are on basic science or on science, and ours is becoming less and less basic.”

What’s an Aesthetic? – 2

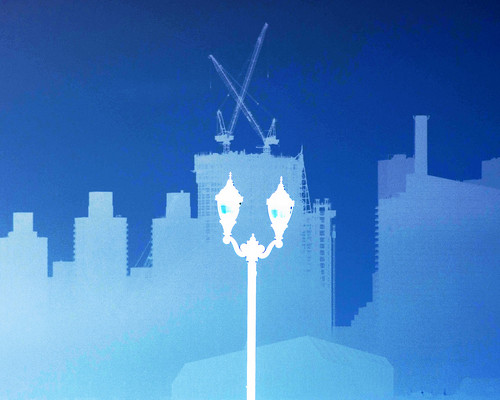

The source image in this post is very much like the source image in my previous post, What’s an Aesthetic? Early morning, misty, shooting from Hoboken toward Manhattan. So we’ve got the Manhattan skyline ghostlike in the background and, in the foreground, we have a street lamp (rather than a pair of banners).

Here are three variations. Obviously I’m not trying to make these look like something your eye might have seen at the spot. I’m just playing around with the additive primary colors: red, green and blue.

Here I simply “inverted” the first image (swapping the original colors for their complements), which is easy to do in Photoshop:

That too has its interest.
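For the curious, inversion is simple enough to sketch in a few lines. This assumes 8-bit RGB values (0 to 255 per channel) and represents an image as a plain list of rows of pixels; it illustrates the idea and is not a description of how Photoshop implements it.

```python
def invert_pixel(pixel):
    """Swap an (r, g, b) color for its complement, assuming 8-bit channels."""
    r, g, b = pixel
    return (255 - r, 255 - g, 255 - b)


def invert_image(image):
    """Invert every pixel in an image given as a list of rows of (r, g, b) tuples."""
    return [[invert_pixel(pixel) for pixel in row] for row in image]


# A tiny made-up 2x2 image: red, green, blue, and a misty gray.
tiny_image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (200, 200, 210)],
]

inverted = invert_image(tiny_image)
print(inverted[0][0])  # pure red becomes cyan: (0, 255, 255)
```

Inverting twice gets you back where you started, which is not true of most of the other manipulations in this post.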

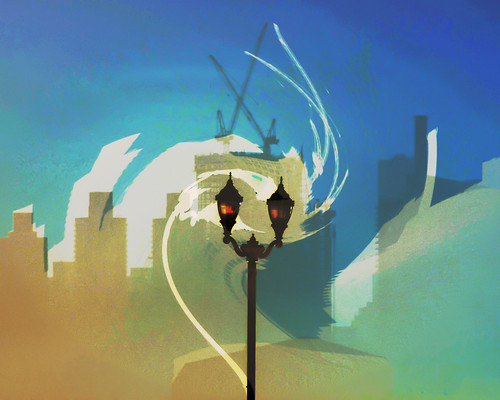

But this is something else, and rather more interesting, no?

Photoshop has a number of distortion filters. To get this image I used one called Twirl: Imagine the image floating on the surface of a pond. Now dip a stick in the pond and twirl it. I took the twirled version and blended it with the original, which is also easy to do in Photoshop. There are in fact endless ways to do it. So many I don’t remember just which one I used to get this one. I might not be able to reproduce it from the original.

Is that a feature or a bug?

* * * * *

Many of the posts tagged “photography” raise similar issues.

Tuesday, May 12, 2015

Monday, May 11, 2015

Which one is "real" and why?

Both, of course, have been through Photoshop. That's not the issue.

What is? And what kind of issue is it?

Follow-up on Dennett and Mental Software

This is a follow-up to a previous post, Dennett’s WRONG: the Mind is NOT Software for the Brain. In that post I agreed with Tecumseh Fitch [1] that the hardware/software distinction for digital computers is not valid for mind/brain. Dennett wants to retain the distinction [2], however, and I argued against that. Here are some further clarifications and considerations.

1. Technical Usage vs. Redescription

I asserted that Dennett’s desire to talk of mental software (or whatever) has no technical justification. All he wants is a different way of describing the same mental/neural processes that we’re investigating.

What did I mean?

Dennett used the term “virtual machine”, which has a technical, if a bit diffuse, meaning in computing. But little or none of that technical meaning carries over to Dennett’s use when he talks of, for example, “the long-division virtual machine [or] the French-speaking virtual machine”. There’s no suggestion in Dennett that a technical knowledge of the digital technique would give us insight into neural processes. So his usage is just a technical label without technical content.

2. Substrate Neutrality

Dennett has emphasized the substrate neutrality of computational and informatic processes. Practical issues of fabrication and operation aside, a computational process will produce the same result regardless of whether it is implemented in silicon, vacuum tubes, or gears and levers. I have no problem with this.

As I see it, taken only this far we’re talking about humans designing and fabricating devices and systems. The human designers and fabricators have a “transcendental” relationship to their devices. They can see and manipulate them whole, top to bottom, inside and out.

But of course, Dennett wants this to extend to neural tissue as well. Once we know the proper computational processes to implement, we should be able to implement a conscious intelligent mind in digital technology that will not be meaningfully different from a human mind/brain. The question here, it seems to me, is: But is this possible in principle?

Dennett has recently come to the view that living neural tissue has properties lacking in digital technology [3, 4, 5]. What does that do to substrate neutrality?

I’m not sure. Dennett’s thinking centers on neurons. On the one hand real neurons are considerably more complex than the McCulloch-Pitts logic gates that captured his imagination early in his career. That’s one thing. And if that’s all there is to it, then one could still argue that substrate neutrality extends to neural tissue. We just have to recognize that neurons aren’t the simple primitive computational devices we’d thought them to be.

But there’s more going on. Following others (Terrence Deacon and Tecumseh Fitch) Dennett stresses the fact that neurons are agents with their own agendas. As agents go, they may be relatively simple, and their agendas simple; but still, they have a measure of causal autonomy.

What’s an Aesthetic?

I was discussing this question with a writer friend over the weekend, though my end of the question was mostly about my aesthetic as a photographer. That’s what I want to discuss here.

Photography, so common sense would have it, is about representing the (visual) world as it really is. Any thoughtful person who’s spent time with photography knows that that’s problematic, and I note that, in painting, “photorealism” is but a form of realism. There are subtleties.

But let’s start with a crude distinction, between representation and abstraction. Photography is a medium for representing the visual world. Consider this recent photograph:

What does it represent? Of course, I know, because I took the photo, but it might not be so obvious to a stranger to that scene. Still, it doesn’t seem like a great mystery, does it?

The background looks rather like a skyline. As for the foreground, we have a vertical post flanked by a pair of panels. There’s some figuration on the panels and we see “P13R” at the bottom of the left-hand panel. That, perhaps, is about as far as we can go starting from nothing – though no doubt Sherlock Holmes could do better.

Just that much is enough, I suppose. The image represents something. Something of what it represents is obvious, while leaving other things somewhat mysterious. Those markings on the panels, what are they? At least two of them are letters, P and R, and two of them are numerals, 1 and 3. The others are sorta like that, but not specifically identifiable.

The fact is, I didn’t take that shot out of a desire to represent whatever it is in the photo. I’m not particularly interested in showing something specific to you, though I’m interested in the fact that the images DO represent something specific.

Sunday, May 10, 2015

Irises: blue and green

I like this shot, despite its compositional flaws. What're those flower fragments doing at the top? Of course, I knew they'd be there when I framed the shot, but I didn't care. And those buds. Nor are the two main flowers centered top-to-bottom.

What I mainly like is the color contrast: Blue against green. The green isn't flat, of course. These aren't flowers posed in a studio. They're flowers I flicked in the wild. Well, not exactly wild, as they're in a garden on an island on 11th Street in Hoboken, NJ. So one can see some articulation, some structure, in the green.

The flowers are in focus, hence sharp. The background is not, hence blurred.

And now poker: AI battles humans to a draw

NBC:

A poker showdown between professional players and an artificial intelligence program has ended with a slim victory for the humans — so slim, in fact, that the scientists running the show said it's effectively a tie. The event began two weeks ago, as the four pros — Bjorn Li, Doug Polk, Dong Kim and Jason Les — settled down at Rivers Casino in Pittsburgh to play a total of 80,000 hands of Heads-Up, No-Limit Texas Hold 'em with Claudico, a poker-playing bot made by Carnegie Mellon University computer science researchers....

No actual money was being bet — the dollar amount was more of a running scoreboard, and at the end the humans were up a total of $732,713 (they will share a $100,000 purse based on their virtual winnings). That sounds like a lot, but over 80,000 hands and $170 million of virtual money being bet, three-quarters of a million bucks is pretty much a rounding error, the experimenters said, and can't be considered a statistically significant victory.
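To see why the experimenters call that a rounding error, here is the arithmetic, using only the figures in the NBC report above. The variable names are mine, and this is a back-of-the-envelope ratio, not the statistical test the researchers would have used.

```python
humans_net_winnings = 732_713   # virtual dollars the humans finished ahead
total_wagered = 170_000_000     # virtual dollars bet over the whole match
hands_played = 80_000

margin_fraction = humans_net_winnings / total_wagered
winnings_per_hand = humans_net_winnings / hands_played

print(f"margin as share of money bet: {margin_fraction:.2%}")
print(f"average edge per hand: ${winnings_per_hand:.2f}")
```

The humans' margin comes to well under half a percent of the money wagered, or about nine virtual dollars a hand.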

Note that poker is a very different kind of game from chess. Chess is a game of perfect information; both players know everything there is to know about the state of play. Poker is not; each player has cards invisible to the other players. Moreover, chess is a finite game; poker is not.

Hence, it's taken computers longer to be competitive at poker than at chess. Now the day seems to be arriving. We'll see.

H/t Alex Tabarrok.

Saturday, May 9, 2015

Friday, May 8, 2015

The Rhythm of Consciousness

Recently, however, scientists have flipped this thinking on its head. We are exploring the possibility that brain rhythms are not merely a reflection of mental activity but a cause of it, helping shape perception, movement, memory and even consciousness itself.

What this means is that the brain samples the world in rhythmic pulses, perhaps even discrete time chunks, much like the individual frames of a movie. From the brain’s perspective, experience is not continuous but quantized.

It seems that we entrain ourselves to the world's rhythms:

This is not to say that the brain dances to its own beat, dragging perception along for the ride. In fact, it seems to work the other way around: Rhythms in the environment, such as those in music or speech, can draw neural oscillations into their tempo, effectively synchronizing the brain’s rhythms with those of the world around us.

Consider a study that I conducted with my colleagues, forthcoming in the journal Psychological Science. We presented listeners with a three-beat-per-second rhythm (a pulsing “whoosh” sound) for only a few seconds and then asked the listeners to try to detect a faint tone immediately afterward. The tone was presented at a range of delays between zero and 1.4 seconds after the rhythm ended. Not only did we find that the ability to detect the tone varied over time by up to 25 percent — that’s a lot — but it did so precisely in sync with the previously heard three-beat-per-second rhythm.
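A small sketch of the timing in that experiment, using only the numbers given in the passage (three beats per second, delays from zero to 1.4 seconds). The function and variable names are hypothetical, and the sketch merely locates where the silent continuation of the rhythm would fall; it makes no claim about the study's actual analysis.

```python
BEATS_PER_SECOND = 3        # rhythm rate from the passage
MAX_DELAY_SECONDS = 1.4     # longest delay tested, from the passage


def phase_in_cycle(delay_seconds, rate=BEATS_PER_SECOND):
    """Return where a delay falls within the beat cycle, from 0.0 up to 1.0."""
    return (delay_seconds * rate) % 1.0


# Times at which the rhythm's beats would have continued had it not stopped.
beat_count = int(MAX_DELAY_SECONDS * BEATS_PER_SECOND)
continued_beats = [k / BEATS_PER_SECOND for k in range(1, beat_count + 1)]

for beat_time in continued_beats:
    print(f"imagined beat at {beat_time:.3f} s")

# A tone half a second after the rhythm ends lands midway between imagined beats.
print(phase_in_cycle(0.5))
```

Four imagined beats fit inside the 1.4-second window, so a sensitivity that rises and falls in sync with the rhythm would go through about four cycles there.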

Dennett’s WRONG: the Mind is NOT Software for the Brain

And he more or less knows it; but he wants to have his cake and eat it too. It’s a little late in the game to be learning new tricks.

I don’t know just when people started casually talking about the brain as a computer and the mind as software, but it’s been going on for a long time. But it’s one thing to use such language in casual conversation. It’s something else to take it as a serious way of investigating mind and brain. Back in the 1950s and 1960s, when computers and digital computing were still new and the territory – both computers and the brain – relatively unexplored, one could reasonably proceed on the assumption that brains are digital computers. But an opposed assumption – that brains cannot possibly be computers – was also plausible.

The second assumption strikes me as being beside the point for those of us who find computational ideas essential to thinking about the mind, for we can proceed without the somewhat stronger assumption that the mind/brain is just a digital computer. It seems to me that the sell-by date on that one is now past.

The major problem is that living neural tissue is quite different from silicon and metal. Silicon and metal passively take on the impress of purposes and processes humans program into them. Neural tissue is a bit trickier. As for Dennett, no one championed the computational mind more vigorously than he did, but now he’s trying to rethink his views, and that’s interesting to watch.

The Living Brain

In 2014 Tecumseh Fitch published an article in which he laid out a computational framework for “cognitive biology” [1]. In that article he pointed out why the software/hardware distinction doesn’t really work for brains (p. 314):

Neurons are living cells – complex self-modifying arrangements of living matter – while silicon transistors are etched and fixed. This means that applying the “software/hardware” distinction to the nervous system is misleading. The fact that neurons change their form, and that such change is at the heart of learning and plasticity, makes the term “neural hardware” particularly inappropriate. The mind is not a program running on the hardware of the brain. The mind is constituted by the ever-changing living tissue of the brain, made up of a class of complex cells, each one different in ways that matter, and that are specialized to process information.

Yes, though I’m just a little antsy about that last phrase – “specialized to process information” – as it suggests that these cells “process” information in the way that clerks process paperwork: moving it around, stamping it, denying it, approving it, amending it, and so forth. But we’ll leave that alone.

One consequence of the fact that the nervous system is made of living tissue is that it is very difficult to undo what has been learned, for learning is built into the detailed micro-structure of this tissue. It’s easy to wipe a hunk of code or data from a digital computer without damaging the hardware, but it’s almost impossible to do something like that with a mind/brain. How do you remove a person’s knowledge of Chinese history, or their ability to speak Basque, and nothing else, and do so without physical harm? It’s impossible.

Thursday, May 7, 2015

Green Villain Karma: Urban Buddhism in Jersey City #GVM004

The painting continues on the Pep Boys site, as you can see in these flicks, taken 5 May 2015. Notice the incomplete Goomba head (upper left):

And the front façade is almost completely redone:

But that’s not what’s on my mind. What’s on my mind is impermanence, one of the eternal themes of philosophy. Tibetan Buddhists celebrate this theme with sand mandalas. Here’s a short Wikipedia paragraph:

The Sand Mandala … is a Tibetan Buddhist tradition involving the creation and destruction of mandalas made from colored sand. A sand mandala is ritualistically dismantled once it has been completed and its accompanying ceremonies and viewing are finished to symbolize the Buddhist doctrinal belief in the transitory nature of material life.