Saturday, October 31, 2015

Tinker Bell and Fairy Dust: Joseph Carroll Doesn’t Know what He Doesn’t Know

Several years ago the journal Style devoted a double issue to evolutionary criticism. It had a target article by Joseph Carroll, responses from over thirty other scholars, and then a final reply by Carroll. One of the respondents was Ellen Spolsky [1].

In her first paragraph she notes that Carroll’s article lays out an evolutionary model of human nature in only 1600 words. She wonders by what authority Carroll presents this summary – does he think of himself as an evolutionary psychologist or an outsider to the field? – and then goes on to note (p. 285):

Beyond my general ability to follow rational argument, how can I evaluate the claims made therein? The most surprising is the claim that he reports finding a “consensus” model. Am I being asked to believe that although my own academic area of literary theory is cross-hatched by contested claims, the field of evolution and evolutionary psychology is blissfully free of conflict and competition? Especially when it comes to a model (of all things!) of human nature? I am being asked to believe in Tinkerbell [sic].

Those of you who know Carroll’s work will not be surprised if I tell you that he manages a superficially plausible response. He’s a skilled rhetorician. For one thing, no, he isn’t claiming that those 1600 words are all there is. There’s more to it, but, alas, you’re going to have to do some reading in the field [2].

Yet for all the apparent plausibility of Carroll’s response, I’ve read enough of his work, and know enough psychology (from reading it as well as using it in my work over the past four decades), to believe that Spolsky’s metaphorical dismissal is apt. Carroll believes in a Tinker Bell version of the newer psychologies and his arguments use generous sprinklings of fairy dust to make them glitter and sparkle.

Cognitive Science in Its Early Phases

In reading through several of Carroll's publications I found an astonishing statement near the end of Graphing Jane Austen [3] – authored with three others. By this point the main argument has concluded and Carroll is simply differentiating his approach from other psychological approaches to literary study. After running through Freud and Jung he comes to the recent interest in cognitive science. It’s in that context that he asserts (p. 167): “In its early phases, research in cognitive science typically operated in the discursive mode of formalistic speculative philosophy, and it was much preoccupied with models of the mind derived from analogies with computers.” If that statement were accurate it would score heavily for literary Darwinism. But it isn’t accurate. Rather, it is extravagantly – dare I say, defensively? – inaccurate.

It’s a bit like saying: “Little is known about Shakespeare. Consequently identifying the man behind the name has been a major project for Shakespeare scholars.” The first statement is true. And, while it is also true that people have worried about whether or not someone other than the man from Avon wrote plays under Shakespeare’s name, this is almost entirely the work of amateurs; it is not a focus of Shakespeare studies.

Another comparison: “For several centuries prior to Darwin biologists took notes, drew pictures, and collected specimens.” That’s true, though they weren’t called biologists then. And the statement completely misses classification and thus gives no sense of the systematic nature of the work. Nor does it indicate that description and taxonomy are major aspects of the foundation on which Darwin built his theory of evolution. Without it he’d have had no way to do the necessary comparative work.

Getting back to Carroll, yes, in “its early phases” cognitive science was interested in computational models of mind. But characterizing that work as “formalistic speculative philosophy” is ludicrous, so ludicrous I can’t imagine how that statement got into print. Any time within the last decade a college sophomore could have gotten a more accurate picture in only five or ten minutes online. Carroll is not a sophomore. He’s a senior scholar with two decades invested in the study of a psychological movement – evolutionary psychology – with substantial roots in cognitive science, including the early phases. How could he be so wrong about that?

By any reasonable account cognitive science in its early phases extends back into the 1950s and 60s. These thinkers, among others, became active and prominent in the 1950s [4]:

- Noam Chomsky: The publication of Syntactic Structures in 1957 brought new interest to linguistics and stimulated psychologists to test his syntactic theories by experimental research.

- Herbert Simon eventually won a Nobel Prize in economics but he is best known for his wide-ranging work in artificial intelligence, much of it in conjunction with his colleague Allen Newell. In 1975 the Association for Computing Machinery awarded the Turing Award to Newell and Simon for that work.

- Marvin Minsky has spent his career at MIT and is one of the best-known researchers in artificial intelligence and neural networks.

- John McCarthy was a Stanford mathematician who organized the first international conference on artificial intelligence in 1956 and later invented the LISP programming language, which became the language of choice for work in artificial intelligence.

- George A. Miller first became known for the use of information theory in studying language. His 1956 paper, “The magical number seven, plus or minus two”, is one of the best known in modern psychology. In 1960 he published Plans and the Structure of Behavior, in conjunction with another psychologist, Eugene Galanter, and a neuroscientist, Karl Pribram. I’ll return to this book in a minute.

These men were not doing speculative philosophy. There’s no quick and easy way to characterize what they were doing, but “cognitive science” is a good phrase, coined in 1973 by Christopher Longuet-Higgins [5], who was originally trained as a chemist. Just when did cognitive science pass out of its “early phases”? Who knows? And for the purpose of this post it doesn’t make any difference. That list alone makes it clear that Carroll’s characterization of those early phases is wrong.

Friday, October 30, 2015

Friday Fotos: Running with my New Toy, Part 2

Continuing from yesterday, I set my new camera (a used Lumix DMC-ZS7) to shoot in a 4 by 3 aspect ratio and went out for some evening and night-time shooting, mostly along the river. That lamp up there is one shot. I like it. The texture in the glass is nicely defined. Of course, there isn’t much problem with light, as I’m shooting directly into it.

I’ve been shooting street lamps for years. So this shot is a calibration shot, getting a sense of the camera. This whole run is a calibration run, calibrating the camera and of course the photographer, me.

This is a very different shot, south along the Hudson River, with the tip of Manhattan on the left, Hoboken and Jersey City on the right, and the Verrazano Narrows Bridge in between:

Here light IS a problem. And that’s the point: How’s the camera perform in low light? Well, if I were trying to sell photos to National Geographic or to airline in-flight magazines, then the answer obviously is: not very well. My Pentax would do better, but I couldn’t sell those photos to National Geographic either. This photo was very grainy as shot. To get rid of the grain I had to do quite a bit of smoothing, and that killed whatever detail the camera managed to capture.

I’m not sure what I think of the result. That’s OK. Next picture:

This is a pretty standard “shaky-cam” style photo, though I didn’t do any deliberate shaking. There’s a car moving across the foreground and some bottles in the windows. I like this.

How Does Discursive Thought Maintain a Sense of Reality?

This isn’t about mundane reality, e.g. is that lake in the distance real or a mirage? This isn’t about the reality that stage magicians fool around with. This is about the reality defined by professional intellectual activity.

That reality, like even mundane reality, is circumscribed by a world view. We’ve got these “representations” “in our head” and see the world through them. What Thomas Kuhn called normal science has its paradigms, which it “tests” by making hypotheses and then looking for evidence to confirm those hypotheses, or not. Thus does normal science maintain a sense of reality.

How do discursive thinkers, historians, anthropologists, and, my particular concern, interpretive literary critics – how do these thinkers maintain a sense of reality? There are, of course, various kinds of things that literary critics do. They prepare editions of texts – something more prevalent 50 years ago than now – and they inquire into the history of books, authors, and literary practices. Set that aside.

What about the interpretive critics? How does one maintain a sense of the real if one is in the business of interpreting texts? If anything goes, then there is no reality, is there? It’s just whatever the critic thinks.

That was the question that confronted academic literary criticism in the 1960s and 1970s. Those who argued for fidelity to authorial intention were saying, in effect, That’s where reality is, the author’s intention. That’s the REAL meaning. But, as I suggested yesterday, that was never a very strong appeal about the gathering and weighing of evidence. It was more of a metaphysical stance.

Where, in the process of writing, within discursivity itself, did one seek reality? Is that what deconstruction was about? Is that the rationale behind the verbal ploys? And when deconstruction gave way to various (often identity based) political criticisms

Our "experience of duration is a signature of [...] coding efficiency"

David M. Eagleman and Vani Pariyadath, Is subjective duration a signature of coding efficiency? (PDF) Phil. Trans. R. Soc. B (2009) 364, 1841–1851, doi:10.1098/rstb.2009.0026

Perceived duration is conventionally assumed to correspond with objective duration, but a growing literature suggests a more complex picture. For example, repeated stimuli appear briefer in duration than a novel stimulus of equal physical duration. We suggest that such duration illusions appear to parallel the neural phenomenon of repetition suppression, and we marshal evidence for a new hypothesis: the experience of duration is a signature of the amount of energy expended in representing a stimulus, i.e. the coding efficiency. This novel hypothesis offers a unified explanation for almost a dozen illusions in the literature in which subjective duration is modulated by properties of the stimulus such as size, brightness, motion and rate of flicker.

Hmmm.... I wonder. Time and again I've noticed that, when going somewhere unfamiliar, the trip there seems longer than the trip back. When I'm going THERE for the first time, the route is strange; I've got to code it into my mind for the first time. But when I'm coming back coding is more efficient because I've already laid down a record of the landmarks and directions. All I have to do coming back is lay down some reverse pointers.

Looking through the bibliography, however, I notice that they don't cite Robert Ornstein's 1969 On the Experience of Time (reprinted in 1997), which makes pretty much the same argument. Perhaps 1969 is too far back to go in a literature search? Or perhaps the fact that Ornstein's later career has had a New Age edge got in the way? I don't know. But Ornstein was there back in the late 1960s.

Here's a synopsis of the book from Questia:

How do we experience time? What do we use to experience it? In a series of remarkable experiments, Robert Ornstein shows that it is difficult to maintain an "inner clock" explanation of the experience of time & postulates a cognitive, information-processing approach. This approach alone makes sense out of the very different data of the experience of time & in particular of the experience of duration-the lengthening of duration under LSD, for example, or the effects of an experience felt to be a success rather than a failure, time in sensory deprivation, the time-order effect, or the influence of the administration of a sedative or stimulant drug. Contents: The Problem of Temporal Experience. The "Sensory Process" Metaphor. The "Storage Size" Metaphor. Four Studies of the Stimulus Determinants of Duration Experience. Two Studies of Coding Processes & Duration Experience. Three Studies of Storage Size. Summary, Conclusion, & Some Speculation on Future Directions.

The Bass is the Place

Trainor and her colleagues have recently published a study in the Proceedings of the National Academy of Sciences suggesting that perceptions of time are much more acute at lower registers, while our ability to distinguish changes in pitch gets much better in the upper ranges, which is why, writes Nature, “saxophonists and lead guitarists often have solos at a squealing register,” and why bassists tend to play fewer notes. (These findings seem consistent with the physics of sound waves.) To reach their conclusions, Trainor and her team “played people high and low pitched notes at the same time.” Participants were hooked up to an electroencephalogram that measured brain activity in response to the sounds. The psychologists “found that the brain was better at detecting when the lower tone occurred 50 ms too soon compared to when the higher tone occurred 50 ms too soon.”

The study’s title perfectly summarizes the team’s findings: “Superior time perception for lower musical pitch explains why bass-ranged instruments lay down musical rhythms.” In other words, “there is a psychological basis,” says Trainor, “for why we create music the way we do. Virtually all people will respond more to the beat when it is carried by lower-pitched instruments.”

Thursday, October 29, 2015

Biological Evolution May be More Rapid Than We'd Thought

A new study of chickens overturns the popular assumption that evolution is only visible over long time scales. By studying individual chickens that were part of a long-term pedigree, the scientists led by Professor Greger Larson at Oxford University's Research Laboratory for Archaeology, found two mutations that had occurred in the mitochondrial genomes of the birds in only 50 years. For a long time scientists have believed that the rate of change in the mitochondrial genome was never faster than about 2% per million years. The identification of these mutations shows that the rate of evolution in this pedigree is in fact 15 times faster. In addition, by determining the genetic sequences along the pedigree, the team also discovered a single instance of mitochondrial DNA being passed down from a father. This is a surprising discovery, showing that so-called 'paternal leakage' is not as rare as previously believed.

Authorial Intention in Literary Study, an Informal Note

Why have literary critics been so concerned about authorial intention? I say “have been” because these discussions were most vigorous back in the 1960s and 1970s, but now are not so important – though the issue is central to Joseph Carroll, the Darwinian critic.

One might think it was about how one reasons to an interpretation of a work. That’s certainly how it seemed back in the 60s and 70s. But I don’t believe that any more. For one thing, there are a few important cases where an appeal to the author has little traction. How do you appeal to the author of the Iliad or the Odyssey? We don’t know who Homer was, if indeed he was a single individual. While we do know who Shakespeare was – I give no credence to the arguments that Shakespeare was someone else – we know so little about him that an appeal to Shakespearean plausibility is little more than an appeal to the Elizabethan world view. And then in one peculiar and particular case where we know a great deal about the author – I’m thinking about Coleridge and “Kubla Khan” – critics feel free to ignore or reason away the one thing he told us about the text, that it is incomplete.

As a practical matter, whatever else we might know about a given author, whatever materials we might have – drafts, correspondence, essays, whatever – the text itself is going to be our best source of evidence. The really tricky problems that arise with interpreting literary texts – I’m thinking here of de Man’s essay, “Form and Intent in the American New Criticism” – also exist in dealing with any form of secondary material, whether by the author or others. Something else is going on.

A Test Run with My New Toy

It’s a used Lumix DMC-ZS7. It’s a mid-range point-and-shoot digital camera. That’s its ghostly presence in the photo above.

I got it because I wanted a camera that was small enough that I could slip it into my pocket whenever I went out. That way I could snap off a shot whenever one presented itself. And the world’s always doing that, presenting itself.

The camera arrived yesterday and I took a few shots with it, like the one above. But I didn’t really give it a spin until this morning. I looked at the manual briefly, but didn’t read much of anything. Who wants to read manuals?

That’s probably why I didn’t notice I was shooting in 16 by 9 aspect ratio until I started working on the photos in Lightroom. I had no intention of using the photos straight out of the camera. Though I could do so, as it won’t shoot in RAW format, a drawback. To tell the truth, I’d wanted to get a high-end point-and-shoot so I could get RAW images, but the budget wouldn’t permit it.

What’s so important about RAW format? Information. The camera captures more information about each pixel than any monitor can display or than any printer can print. In order to produce an image that can be displayed or printed half the captured information has to be thrown away. That’s what happens when an image is converted to a JPG format. This camera does that automatically. If it shot RAW images, I’d be able to choose what information is tossed.

On the other hand, a JPG that I have because I had a camera in my pocket is more useful than a RAW that I don’t have because I don’t want to carry my Pentax K7 around with me.
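To make the point about discarded information concrete, here is a minimal sketch in Python. The bit depths are assumptions chosen for illustration (a 12-bit sensor reading reduced to an 8-bit JPG value), not the specifications of any particular camera, and real in-camera conversion also involves tone curves and lossy compression that this leaves out.

```python
# Illustrative assumption: the sensor records 12 bits per value (0..4095),
# while a JPG stores 8 bits per value (0..255).
RAW_BITS = 12
JPG_BITS = 8


def to_jpg_level(raw_value: int) -> int:
    """Reduce a raw reading to the 8-bit range by dropping its low-order bits."""
    return raw_value >> (RAW_BITS - JPG_BITS)


def raw_levels_per_jpg_level() -> int:
    """How many distinct raw readings collapse into one JPG value."""
    return 2 ** (RAW_BITS - JPG_BITS)


if __name__ == "__main__":
    # Two readings the sensor can tell apart end up as the same JPG value.
    print(to_jpg_level(1600), to_jpg_level(1615))
    print(raw_levels_per_jpg_level())
```

Under these assumptions, readings that differ in the raw data become indistinguishable once converted. With a RAW file the photographer decides where that loss falls; with an in-camera JPG the camera has already decided.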

Wednesday, October 28, 2015

The Japanese Abacus & the "Extended" Mind

For the last year or so I've been watching a bunch of YouTube clips in a series called Begin Japanology, which is an NHK series about Japanese culture and society. I've just been watching a fascinating one about the Japanese abacus, aka the soroban:

The whole thing is worthwhile (it's less than a half hour), but here are some segments to pay attention to:

- How it works: c. 6:15-8:53: the sensorimotor basics. If you want to think about the "extended" mind, you have to start here.

- Mental calculation: c. 12:35-13:23: people can do soroban calculations without actually having a soroban. They do the calculations mentally, though they may move their fingers over an imaginary soroban.

- Divination: 16:23-17:00: In the old days math was used more for divination than for solving practical problems.

And of course there's the usual stuff about how very fast experts are, beating ordinary electromechanical and electronic calculators.

Me, Joseph Carroll, and the Search for Precision in Literary Criticism

I’ve been thinking a lot about Joseph Carroll recently and have been drafting material for posts that I may or may not put online [1]. It’s hard, very hard. And if I post them, it’s likely to be brutal in stretches, long stretches, for we are very different thinkers.

But we do share a motivation. Here’s a passage from Graphing Jane Austen [2], a recent book he co-authored with three other scholars (pp. 61-62):

It is the author who stipulates the features of characters and situations, describes a setting, delineates a sequence of actions, and orchestrates the whole to produce a continuous sequence of recognitions and emotional responses that vary little from reader to reader. If the reader is competent, the reader registers precisely what is in the text, just as an observer, if he or she is alert, registers precisely the physical features and expressive character of some actual person.

Forget about the author. That’s a matter on which we disagree quite a bit. To a first approximation, I think they’re (methodologically) useless; he thinks they’re (theoretically) the whole ball of wax.

It’s the second sentence that interests me. One word in particular: “precisely”. He thinks there is something precise about literary texts and so do I. He thinks it’s meaning that is precise. I think that centering one’s desire for precision on meaning is hopeless, and so I abandoned that quest some time ago. I’m centering my desire for precision on form [3].

To say much more than this would require entering into a conversation that will be long and difficult. I can’t do that now. Still, the conviction that there is something very precise about literary texts and literary phenomena, that is not a trivial matter. On the contrary, it’s at the heart of how literature is central to culture and society, to human life.

* * * * *

[1] I’ve had a bit to say about Darwinian literary criticism, and have gathered those posts into a working paper: On the Poverty of Literary Darwinism (2015) 45 pp., URL: https://www.academia.edu/15853288/On_the_Poverty_of_Literary_Darwinism

[2] Joseph Carroll, Jonathan Gottschall, John Johnson, Daniel Kruger. Graphing Jane Austen: The Evolutionary Basis of Literary Meaning. New York: Palgrave Macmillan, 2012. FWIW this book is Carroll’s most significant contribution to literary criticism to date. Why? Because it is empirical, though it is by no means the first empirical study of literature. There is a long history of that. Given that it is empirical, it gives us a new kind of insight into a significant body of texts. Though I’ve not taken the time to study it carefully, it seems to me that there is solid work here, work that can and should be built upon.

[3] My central, and rather long, statement on the matter: Literary Morphology: Nine Propositions in a Naturalist Theory of Form. PsyArt: An Online Journal for the Psychological Study of the Arts, August 2005, Article 060608. http://www.psyartjournal.com/article/show/l_benzon-literary_morphology_nine_propositions_in

You can download the text here: https://www.academia.edu/235110/Literary_Morphology_Nine_Propositions_in_a_Naturalist_Theory_of_Form

Skills and Division of Labor Among Hunter-Gatherers

Paul L. Hooper, Kathryn Demps, Michael Gurven, Drew Gerkey, Hillard S. Kaplan. Skills, division of labour and economies of scale among Amazonian hunters and South Indian honey collectors. Philosophical Transactions B. Published 26 October 2015. DOI: 10.1098/rstb.2015.0008

Abstract: In foraging and other productive activities, individuals make choices regarding whether and with whom to cooperate, and in what capacities. The size and composition of cooperative groups can be understood as a self-organized outcome of these choices, which are made under local ecological and social constraints. This article describes a theoretical framework for explaining the size and composition of foraging groups based on three principles: (i) the sexual division of labour; (ii) the intergenerational division of labour; and (iii) economies of scale in production. We test predictions from the theory with data from two field contexts: Tsimane' game hunters of lowland Bolivia, and Jenu Kuruba honey collectors of South India. In each case, we estimate the impacts of group size and individual group members' effort on group success. We characterize differences in the skill requirements of different foraging activities and show that individuals participate more frequently in activities in which they are more efficient. We evaluate returns to scale across different resource types and observe higher returns at larger group sizes in foraging activities (such as hunting large game) that benefit from coordinated and complementary roles. These results inform us that the foraging group size and composition are guided by the motivated choice of individuals on the basis of relative efficiency, benefits of cooperation, opportunity costs and other social considerations.

Tuesday, October 27, 2015

"Japanism"

Japanology Plus, Episode 19: Festivals.

An expert on Japanese festivals, Tetsuya Yamamoto, says (c. 14:26):

In Japan we say that there are eight million deities. They reside in plants, water, in fire, insects, animals. Deities are everywhere. They imbue everything with life. In Japan we go to a Shinto shrine at the New Year. At Christmas our thoughts are directed at Christ. And when we die, nearly everybody will arrange to have a Buddhist funeral. It’s not that we have no religion. But that different elements have become unified into a single unique faith. Japanism, if you like.

Finger Knowledge, A Note About Me and My Machines

A couple of months ago, before I got my new machine, two keys on my keyboard failed. First the left Shift key failed, and then the left Command key (on a Macintosh). Both are important, frequently used keys. But, as there are two of each, I decided not to get a new keyboard – who knows, maybe they’d come back.

Continuing on, of course, meant that I had to change my keyboarding habits, which I figured I could do. The fact is, though I’m a semi-competent touch typist, I never really got competent-secretary-class good. Though I do a lot of writing, I never go for long stretches of flat-out typing. So, while changing my keyboarding habits was a PITA, it was no more than that.

It was an inconvenience, not a disaster.

But it meant that I actually had to think about what my fingers were doing while typing. I couldn’t simply let them go on autopilot. And I had to think about just what workarounds I’d use for various situations. For example, you need the Left Shift key to type a capital “i” or to type left and right parentheses, each of which I use fairly often. Now what do I do?

What I did isn’t important. What I’m getting at is that, now that I’ve got a fully functioning keyboard, I’ve got to erase those workarounds. And that takes conscious thought and effort. I couldn’t just flip some mental switch – zhupp! – and go back to the old routines.

It seems that, in order to learn these new routines, I had to “erase” those old routines. So, now I’ve got to erase the new routines and consciously “relearn” the old routines. And I’m particularly aware of that as I type this note.

That’s what interests me. That’s what this post is about. That mental threshold between being able to switch back and forth between two ways of doing something and having to learn, unlearn, and relearn “low level” routines. I’m sure that has to do with how the nervous system works, but I don’t want to think very hard about that at the moment.

I’m pretty sure that I could buy a piece of software that would remap my keyboard to the more physically efficient Dvorak mapping – for all I know, something like that might actually be an option somewhere in the Mac OS operating system. If I did that I would have to expend considerable effort to learn to use that mapping. Let’s imagine that I did so. Would I then be able to “turn it off” at will and return to the standard mapping? Or would I have to “relearn” the standard mapping, just as I am, to some extent, relearning the standard uses of Left Shift and Left Command keys?

I don’t know.

Orchestral trumpet players have to do something like that. When you learn to read trumpet music you learn a certain mapping between the written notes and the (right hand) finger action – which of the three valves do you depress? – you need to execute that note. But there are times when you might want to play every note consistently higher or lower than what’s written. To do this you need to learn a different mapping between the written notes and your finger actions.

Sure, you can “calculate” in your mind what you have to do. And if the music you are playing is simple enough, this will work. But there are situations where that’s not fast enough. To cope with those situations you need to learn a new mapping. Orchestral and other “legit” trumpet players do it all the time.
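The difference between “calculating” and having learned a second mapping can be sketched in a few lines of Python. This is only an illustration: the fingerings are the standard ones for one written octave on a B-flat trumpet, while the function names and the choice of a whole-step shift (two semitones up) are my assumptions for the example.

```python
# Written notes from middle C upward, one semitone per step.
NAMES = ["C4", "C#4", "D4", "Eb4", "E4", "F4", "F#4", "G4",
         "Ab4", "A4", "Bb4", "B4", "C5", "C#5", "D5"]

# Standard valve combinations for those notes; "0" means no valves pressed.
VALVES = ["0", "123", "13", "23", "12", "1", "2", "0",
          "23", "12", "1", "2", "0", "12", "1"]


def fingering_as_written(note: str) -> str:
    """The ordinary mapping: play the note that is on the page."""
    return VALVES[NAMES.index(note)]


def fingering_calculated(note: str, shift: int = 2) -> str:
    """Transpose on the fly: work out the shifted note, then look it up."""
    return VALVES[NAMES.index(note) + shift]


def learned_mapping(shift: int = 2) -> dict:
    """A second mapping built ahead of time: written note -> shifted fingering."""
    return {NAMES[i]: VALVES[i + shift] for i in range(len(NAMES) - shift)}


if __name__ == "__main__":
    table = learned_mapping()
    for note in ["C4", "E4", "G4"]:
        print(note, fingering_as_written(note), fingering_calculated(note), table[note])
```

Both routes give the same fingering for every written note. What differs is when the work is done: the calculated route does the arithmetic at each note, while the learned mapping did it all in advance and only has to look things up.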

I’ve never learned to do that, because I’ve never needed the skill. How do these trumpeters keep the different mappings separate in their minds? How do they keep from slipping into the wrong mapping? If they played while drunk, would they slip?

Interesting questions.

Monday, October 26, 2015

Humanistic Thought as Prose-Centric Thought

Back on March 17, 2010 I made a short post to The Valve: “Style Matters”. I reposted it to New Savanna in June of 2012 and then bumped it to the top of the queue on September 6 of this year. That history suggests that the idea is important to me, yet until quite recently – the last few weeks – it was just an isolated observation. Finally, however, I’ve incorporated it into the ongoing line of investigation.

It’s a line of thinking about the nature of humanistic investigation that I’ve been pursuing off and on since, well, forever, or at any rate since my undergraduate years at Johns Hopkins during the Sturm und Drang of Structuralism and deconstruction when I was led to believe, willingly I might add, that Western metaphysics was in shambles and it was up to us to create a new world. For me that meant cognitive science. Yes, I know, cognitive science and deconstruction don’t have much in common, then or now. But, strange as it may seem in retrospect, we didn’t know that BACK THEN. No one told me not to do it. My humanist teachers certainly didn’t say “no”, even if they didn’t really understand this cognitive science stuff, and some of them even encouraged me.

Little did I know that, when I’d opted for cognitive science, for all intellectual purposes I’d left the profession – by which I mean academic literary criticism. On the contrary, as things worked out, I was able to study with a world-class cognitive scientist, David Hays, while pursuing my Ph.D. in the English Department at SUNY Buffalo in the mid-1970s. Hays was in the Linguistics Department – had been its founding chairman – and the English Department, which was very experimental, was perfectly happy for me to study with Hays. And so I wrote a dissertation – Cognitive Science and Literary Theory – which was as much a technical exercise in cognitive science as it was a contribution to literary theory.

I figured that THAT would be my contribution to the discipline, my calling card. It got me my first and only academic job, and that was it. Not even a handful of publications, some in first-class journals, could get me a second academic job. By the mid-1980s my career was over. I’d left the profession.

But, as I said, in another sense I’d left the profession by the time I’d decided to pursue cognitive science in the course of getting my doctorate in English literature. While it was obvious to everyone at the time that my work was quite unusual for a literary scholar, it wasn’t at all obvious that it was out of bounds. Because it wasn’t.

Not back then. As I’ve said already, that boundary hadn’t been drawn. Oddly enough, though cognitive science now has a following in literary criticism, and has had one for at least two decades, the work I did back then is still out of bounds. Even though it wasn’t out of bounds when I did it.

Boundaries are strange things.

Go figure.

Here Comes the Sun – On Photographing a Sunrise

There is this word, “photorealism”, which refers to the quality of an image that has been drawn or painted. Not only does such an image represent some object or scene in a “realistic” manner, as opposed, say, to an impressionist or cubist manner, but it does so as well as a photograph. “Photorealism” designates a standard, one defined by a technology, photography. One of the defining characteristics of this technology is that the image is imposed on a surface, a chemical emulsion or an array of electronic sensors, in a purely mechanical way that is true to the geometry of the scene as conveyed by light.

But, in what sense does the photographic image depict the visual world as it “really” is?

Here’s a photograph of a sunrise as it came out of my camera and into Adobe’s Lightroom rendering program:

That’s not what I saw as I took the photograph. It’s much too dark.

Which am I to believe, the truth-telling camera or my lying eyes?

Both.

The reason that image is so dark is that, by pointing my camera directly at the rising sun, I flooded it with light. That’s one thing. But there’s something else. The sensor can handle a wider dynamic range than any monitor can display or any photographic paper can reproduce – this is a problem that Ernst Gombrich explored at some length in Art and Illusion, though he was interested in painting, not photography. So the software has to compress the image in order to display it. That darkness is the result of that compression.

Here’s the same image after I’ve done a thing or two with it:

This image is brighter overall and it has much more definition. The buildings of the skyline are no longer lost in dark shadow. You can now see distinct buildings and even see features of some.
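The compression at issue can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the luminance values are made up, and neither function is Lightroom's actual rendering. One function scales the scene linearly so the brightest value (the sun) just fits the display; the other applies a power curve first, which is one simple way of lifting the shadows.

```python
def linear_compress(luminances, display_max=255):
    """Scale values so the brightest one maps to display_max."""
    peak = max(luminances)
    return [round(display_max * v / peak) for v in luminances]


def curve_compress(luminances, display_max=255, gamma=0.25):
    """Same scaling, but through a power curve that lifts dark values."""
    peak = max(luminances)
    return [round(display_max * (v / peak) ** gamma) for v in luminances]


# Hypothetical scene, from deep shadow up to the sun's disc.
scene = [5, 40, 200, 1000, 100000]

dark = linear_compress(scene)    # everything but the sun lands near black
lifted = curve_compress(scene)   # shadows are spread over more of the range
```

With one extremely bright value in the frame, the linear version pushes everything else toward black, which is roughly the situation in the first image. The curved version keeps the sun at the top of the range while giving the darker values room to separate.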

Bruno Latour on Laudato Si! – "The Immense Cry Channelled by Pope Francis"

The first two paragraphs, as translated by Stephen Muecke:

The audacity of the Laudato Si! encyclical is equalled only by the multiple efforts to deaden as much as possible its message and effects. Once again, ecological questions, as soon as they are introduced into the regular course of our familiar thought patterns, modify from top to bottom the attitudes of all the protagonists. ‘How can a Pope dare to speak of ecology?’ ask both the faithful who expect an encyclical either to reinforce a doctrinal matter or clarify some moral question, and the indifferent who have never touched an encyclical in their lives, nor expected anything at all from the magisterium of the church. Many of the faithful block their ears so as not to hear the voice calling for radical conversion (§-114. “All of this shows the urgent need for us to move forward in a bold cultural revolution”) while the indifferent prick up their ears to listen to the voice of someone who they don’t for a second imagine could be ‘on their side’ (§-145. “The imposition of a dominant lifestyle linked to a single form of production can be just as harmful as the altering of ecosystems.”)

Like all major religious or political texts, Laudato Si! requires a realignment of all established positions and requires one to take a stand in the midst of battles that one did not know to be so violent, nor that the Church could play a part in them. The church has long been alienated from political, moral or intellectual innovation, and until now limited to a more or less strict preservation of the ‘treasure of faith’ and to bringing in the moral police. And now it is sending a message putting it at the heart of the most vital arguments as if it were still present in history. What? Has the Pope written a new Communist Party Manifesto? Some are scandalized, others rejoice. Everyone is surprised. We must shut this down immediately! The Vatican belongs to the past, it can’t be in the present…

Find the rest at Academia.edu: https://www.academia.edu/17285495/_trans._Bruno_Latour_The_Immense_Cry_Channelled_by_Pope_Francis_

Sunday, October 25, 2015

Measurement: IQ and 5 Personality Factors

I've been reading around in Joseph Carroll recently, Graphing Jane Austen (2012) in particular, which he coauthored with John Johnson, Jonathan Gottschall, and Daniel Kruger. As you may know, the book reports results of an empirical study of how readers assess roughly 2000 characters in 202 19th century British novels. (FWIW, I'm one of 1,492 people who filled out a protocol for this study.) One aspect of the study was to assess the personality of each character. We did this using an instrument based on the so-called five factors model of personality, which, we're told, is the preferred model these days. This post represents a speculation of mine about what's going on "under the hood" in this kind of assessment. My key notion is tricked out in orange text. [This is a repost from 2011.]

* * * * *

One of the things psychologists like to do is measure things: reaction time, sensitivity to temperature, racial bias, or, say, personality and intelligence. The last, of course, is one of the most contentious topics in psychology: Just what is intelligence anyhow? Does IQ measure it? Does IQ measure anything at all? Are IQ tests little more than a means by which elites dominate others? The measurement of personality hasn’t produced so much controversy, but it’s still an iffy business.

This post is an oblique comment on those two types of measurement: IQ and personality. Both involve complex technical issues that I’m not qualified to judge. So, for the purposes of this post I’m simply going to assume that IQ tests and personality inventories do in fact measure something real. Given that, what might those somethings be?

I ask that question in the context of contemporary cognitive science and neuroscience. Cognitive science is rich in models of mental processes that are based on an analogy with computing. Such models typically consist of ‘modules’ each of which performs a specific and limited task. A model capable of simulating or explaining interesting behavior is likely to consist of several to many interacting modules. Similarly, the neurosciences give evidence of a wide variety of functional capabilities variously localized throughout the brain.

Against THAT background, one is tempted to ask: Which are the modules responsible for intelligence and which for personality? The point of the analogy I’m about to suggest is that these tests don’t measure the performance of any specific modules at all. Rather, they measure the joint performance of all modules taken together.

The Analogy: Automobile Performance

The analogy I have in mind is that of automobile performance. Automobiles are complex assemblies of mechanical, electrical, electronic and (these days) computational devices. We have various ways of measuring the overall performance of these assemblages.

Think of acceleration as a measurement of an automobile’s performance. It’s certainly not the only measurement; but it is real, and that’s all that concerns me. As measurements go, this is a pretty straightforward one. There’s no doubt that automobiles do accelerate and that one can measure that behavior. But, just where in the overall assemblage is one to locate that capability?

Does the automobile have a physically compact and connected acceleration system? No. Given that acceleration depends, in part, on the mass of the car, anything in the car that has mass has some effect on the acceleration. Obviously enough the engine has a much greater effect on acceleration than the radio does. Not only does the engine contribute considerably more mass to the vehicle, but it is the source of the power needed to move the car forward. The transmission is also important, but so is the car’s external shape, which influences the amount of air resistance it must overcome. And so forth.

Some aspects of the automobile are clearly more important than others in determining acceleration. But, as a first approximation, it seems best to think of acceleration as a diffuse measure of the performance of the entire assemblage. As I’ve already indicated, there’s nothing particularly mysterious about what acceleration is, why it’s important, or how you measure it. Nor, for that matter, is there any particular mystery about how the automobile works and how various traits of components and subsystems affect acceleration. This is all clear enough, but that doesn’t alter the fact that we cannot clearly assign acceleration to some subsystem of the car. Acceleration is a global measure of performance.
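The point can be made concrete with a toy calculation. What follows is a minimal sketch, not a model of any real car: the component list, masses, and forces are invented for illustration, and the only physics assumed is that acceleration is net force divided by total mass.

```python
from dataclasses import dataclass


@dataclass
class Component:
    name: str
    mass_kg: float               # every part adds mass
    drive_force_n: float = 0.0   # only some parts push the car forward


def acceleration(components, drag_n=0.0):
    """Net forward force divided by the mass of the whole assemblage."""
    total_mass = sum(c.mass_kg for c in components)
    net_force = sum(c.drive_force_n for c in components) - drag_n
    return net_force / total_mass


# Hypothetical car; all numbers are made up.
car = [
    Component("engine", 180.0, drive_force_n=4000.0),
    Component("transmission", 70.0),
    Component("body", 900.0),
    Component("radio", 2.0),
]

baseline = acceleration(car, drag_n=300.0)
without_radio = acceleration([c for c in car if c.name != "radio"], drag_n=300.0)
sleeker_body = acceleration(car, drag_n=200.0)  # a shape with less air resistance
```

Every entry in the list changes the result, the radio only slightly and the body shape rather more, yet no entry is "the acceleration system". The number comes out of the whole list taken together.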

The Analogy Applied

And so it seems to me that if intelligence is anything at all, it must be a global property of the mind/brain. You aren’t going to find any intelligence module or intelligence system in the brain. IQ instruments require performance on a variety of different problems such that a wide repertoire of cognitive modules embedded in many different neurofunctional areas is recruited. The IQ score measures the joint performance of all those areas.

The same, I suggest, is true of personality. However, whereas intelligence is generally measured by a single number, an IQ score, these days personality is measured by five numbers, one each for five factors: extroversion-introversion, neuroticism, agreeableness, conscientiousness, and openness. My sense is that the neural correlates to these things are likely to be the distribution of neurochemicals and the relative sizes of various neural regions. That is, they’re going to be diffuse properties of the nervous (and endocrine) system, not the properties of a specific personality subsystem.

The five factors are simply measures of how the whole system performs in various situations. We can’t track any factor down to some particular subsystem; we aren’t going to find the personality module by dissecting the brain. Nonetheless it makes perfectly good sense to talk of personality as a real thing as long as we don’t reify the dimensions of measurement.

In this case, however, I want to offer one further suggestion. We are social creatures. We are constantly interacting with one another. And so we must be constantly appraising one another’s moods and desires and forming estimates of how others are likely to respond if we act in this or that way. In making such estimates, such guesses, such predictions, it would certainly be useful to have some representation, some sense, of the other’s personality.

So, how is it that we acquire a sense of someone’s personality? What are the terms, in ‘mentalese’ if you will, in which we represent that sense? Where in the brain do we perform such estimates and retain the results? Note that this last question is QUITE DIFFERENT from the question of what brain system generates or performs personality. I’ve already rejected the notion that such a system exists; that was the point of the automobile analogy. No, the system I’m talking about is quite different. It is, in effect, a bit like a sensory system.

We have a visual system that detects shapes and colors. The auditory system detects sounds, pitches, volumes, timbres. Well, why not a personality appraisal system? Let us put aside the question of just how such a system might work and simply assume that such a system exists. My suggestion about the five-factor personality model, then, is simply that those five factors are the dimensions (in Gärdenfors’s* sense) along which we evaluate and retain personality judgments.

In support of this guess I note that the whole business of personality measurement started with “what is now known as the Lexical Hypothesis. This is the idea that the most salient and socially relevant personality differences in people’s lives will eventually become encoded into language. The hypothesis further suggests that by sampling language, it is possible to derive a comprehensive taxonomy of human personality traits.” Just as language encodes color and shape in visual space, and pitch, loudness, and timbre in auditory space, so it encodes extroversion-introversion, neuroticism, agreeableness, conscientiousness, and openness in personality space.
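To make the idea of a "personality space" a little more tangible, here is a minimal sketch that treats a personality judgment as a point with five coordinates, one per factor. The 0-to-1 scale, the example scores, and the use of straight-line distance as a measure of similarity are all assumptions made for illustration; nothing here is taken from Gärdenfors or from any actual personality instrument.

```python
import math
from dataclasses import astuple, dataclass


@dataclass(frozen=True)
class PersonalityJudgment:
    """One appraisal of a person, as a point in a five-dimensional space."""
    extroversion: float
    neuroticism: float
    agreeableness: float
    conscientiousness: float
    openness: float


def distance(a, b):
    """Straight-line distance between two judgments (an assumed metric)."""
    return math.dist(astuple(a), astuple(b))


# Hypothetical appraisals on an assumed 0-to-1 scale.
person_a = PersonalityJudgment(0.8, 0.3, 0.6, 0.4, 0.9)
person_b = PersonalityJudgment(0.2, 0.3, 0.6, 0.4, 0.1)

gap = distance(person_a, person_b)
```

On this picture two people who agree on three factors and differ on two sit some distance apart along just those two dimensions, much as two colors can share brightness and differ in hue.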

If I’m right about this, then we aren’t going to find any personality modules. But we should find some brain region or regions specialized for global assessments of people’s behavioral proclivities, that is, personality. For all I know, such a region or regions has already been identified.

Does anyone know the relevant literature?

*Peter Gärdenfors, Conceptual Spaces: The Geometry of Thought, MIT Press 2000; The Geometry of Meaning, MIT Press 2014.

Saturday, October 24, 2015

The transition to digital cinema has cut down on the availability of classics

Granted, it is easier and cheaper to produce and manage a DCP [digital cinema package] than a 35mm print. And some classics did find their way to independent or repertoire theatres (the few who survived the digital switch, that is). Several archives, ours included, are enjoying these benefits of the digital switchover. And advertising an 8K or 20K or whatever-K restoration can bring in audiences. But many of us also have tons of perfectly fine 35mm of classics, and they cannot be shown any more. Why not?

* 35mm projectors are basically gone

* Some catalogue owners permit screening only their “restored DCP” (and strictly with English or French subtitles – so much for cultural diversity!)

At the end of the day the number of available classic titles went down, not up.

We could talk about this situation a long time, but let’s home in on one effect of the digital transition. The story I want to tell today is one about those “technical details” that we seem never able to get right. Only the Gods of Cinema know why.

We could talk about screen ratios in this connection, but not today. Today we talk about frame rates.

If It's Not Online and Free, Then It's Not Published

That's by Scott F. Johnson and it's on his Academia.edu site:

The explosion of free PDFs of scholarship published before 1922 facilitated by Google Books as well as Archive.org has had a strange, perhaps unexpected, effect on the nature of brand new scholarship that is fully under copyright. It has fostered the tendency among students and scholars alike, at least in the Humanities, to assume that if a book or article is not online and not freely available then it is not published at all.

Many will be quick to guffaw at this statement but I increasingly experience its truth in the academic world.

I've not yet read the piece, but I'm biased in favor of the idea. FWIW, ever since my undergraduate years at Johns Hopkins I've been listening to senior scholars complain about how the publication system is now broken. Now, of course, I AM a senior scholar, and I publish to the web.

Publish your way out of jail

The Economist reports that Romania has come up with an interesting variation on "publish or perish".

THE makers of the Romanian edition of the board game Monopoly may want to consider altering the “Get out of jail free” card to one reading “Wrote a book in jail”. A change in the law in 2013 allows convicts to claim 30 days off their sentences for every work they publish while in prison. This has led Romanian tycoons and politicians imprisoned on corruption charges to indulge in a frenzy of scribbling. It is a system as corrupt as they are. [...]

Prisoners are not allowed computers and prison libraries are basic. Manuscripts must be written with pen and paper. According to Romanian journalists, wealthy prisoners generally hire outside academics as “research supervisors”. They, or other ghostwriters, do the actual writing; the work is then smuggled into jail, where the prisoner copies it out by hand. A publisher is paid to print a few copies, which are presented to the parole board, which (with no guidelines or expertise) judges whether it is worthy of a reduced sentence.

Most of the work has met with derision. Mr Copos, who wrote about the matrimonial alliances of medieval Romanian rulers, was accused of plagiarism. Mr Becali produced a picture-heavy book about his relationship with Steaua Bucharest, the football team which he controls. Realini Lupsa, a pop singer, wrote about stem cells in dental medicine. No one knows how many people have taken advantage of the system. One recent report put the figure at 73, with some prisoners producing up to five books in only a few months.

Friday, October 23, 2015

Friday Fotos: Some Modest Graffiti Under the Arches

This past week seems to have become expedition week in the photo section of New Savanna. So it's only fitting that I close out the week with some photos of some modest graffiti down there in the arches. This is at the western end (near Tonnelle Avenue) and has now been all but wiped out by the weather and by traffic of various kinds:

I'm pretty sure this is gone as well, though "PC" is still all over the place on those walls:

I love this wall. It looks so ancient:

And there's a story for each of those marks, Medow, Act, 5 (in construction orange), the snail (it appears), and the rest.

Emotion & Magic in Musical Performance - quia pacis tempore regnat musica

A person who gives this some thought and yet does not regard music as a marvelous creation of God, must be a clodhopper indeed and does not deserve to be called a human being; he should be permitted to hear nothing but the braying of asses and the grunting of hogs.

– Martin Luther

Over the years I've collected anecdotes about 'interesting' experiences people have had with music, mostly as performers, but some as listeners. I'd enter them into a document so I could find them easily when it came time to write this or that about music. I recently decided to post a slightly edited version of that document to the web so others could appreciate and work with these stories. You can find that document at my Academia.edu site:

The document is in three parts. The first is some experiences I’ve had as a musician which give me some insight into how emotion & magic arise in musical performance. The second part contains a few statements by listeners. The third part is a list of passages by various performers that I’ve collected from various sources over the years.

I've listed two entries from the document, one about five jazz musicians, the other by Martin Luther, and the table of contents.

* * * * *

Earl Hines, Art Hodes, Roy Eldridge, Ornette Coleman, Sid Catlett

Whitney Balliett. American Musicians: 56 Portraits in Jazz. New York, Oxford: Oxford University Press: 1986.

p. 87, Earl Hines talking:

I'm like a race horse. I've been taught by the old masters – put everything out of your mind except what you have to do. I've been through every sort of disturbance before I go on the stand, but I never get so upset that it makes the audience uneasy. . . . I always use the assistance of the Man Upstairs before I go on. I ask for that and it gives me courage and strength and personality. It causes me to blank everything else out, and the mood comes right down on me no matter how I feel.

p. 151, Art Hodes talking:

Now, you can play the blues and just go through the changes and not feel it. That has happened to me for periods of time, and I can fool anybody but me. Right now I'm in a blues period. The blues heal you. When I play, I ignore the audience. I bring all my attention to bear on what I'm playing, bring all my feelings to the front. I bring my body to bear on the tune. If it's a fast tune – a rag – I have to make my hands be where they should be. . . . I'm trying to get lost in what I'm doing, and sometimes I do, and it comes out beautiful.

p. 176, Roy Eldridge talking, about playing the Paramount Theatre with Gene Krupa:

When the stage stopped and we started to play, I'd fall to pieces. The first three or four bars of my first solo, I'd shake like a leaf, and you could hear it. Then this light would surround me, and it would seem as if there wasn't any band there, and I'd go right through and be all right. It was something I never understood.

p. 407, Ornette Coleman talking:

I don't think about feeling, seeing, or thinking. I try to have the player and the listener have the same sound experience. I'm not thinking about mood or emotion. Emotion should come into you instead of going out.

p. 187, Mel Powell, talking about Sidney Catlett:

He'd fasten the Goodman band into the tempo with such power and gentleness that one night I was absolutely transported by what he was doing.

Martin Luther

Foreword to Georg Rhau's Symphoniae, a collection of chorale motets published in 1538, as follows:

I, Doctor Martin Luther, wish all lovers of the unshackled art of music grace and peace from God the Father and from our Lord Jesus Christ! I truly desire that all Christians would love and regard as worthy the lovely gift of music, which is a precious, worthy, and costly treasure given to mankind by God. The riches of music are so excellent and so precious that words fail me whenever I attempt to discuss and describe them.... In summa, next to the Word of God, the noble art of music is the greatest treasure in the world. It controls our thoughts, minds, hearts, and spirits... Our dear fathers and prophets did not desire without reason that music be always used in the churches. Hence, we have so many songs and psalms. This precious gift has been given to man alone that he might thereby remind himself that God has created man for the express purpose of praising and extolling God. However, when man's natural musical ability is whetted and polished to the extent that it becomes an art, then do we note with great surprise the great and perfect wisdom of God in music, which is, after all, His product and His gift; we marvel when we hear music in which one voice sings a simple melody, while three, four, or five other voices play and trip lustily around the voice that sings its simple melody and adorn this simple melody wonderfully with artistic musical effects, thus reminding us of a heavenly dance, where all meet in a spirit of friendliness, caress and embrace. A person who gives this some thought and yet does not regard music as a marvelous creation of God, must be a clodhopper indeed and does not deserve to be called a human being; he should be permitted to hear nothing but the braying of asses and the grunting of hogs.

and

.... if one sings diligently with skill and application, then music can make man good and at peace with himself and his fellows by providing him a view of beauty. Music drives away the devil and makes people happy; it induces one to forget all wrath, unchastity, arrogance, and other vices, quia pacis tempore regnat musica (for music reigns in times of peace).

Thursday, October 22, 2015

Orson Welles: Beyond the Fat Man a Great Man?

It seems they're rethinking the career of Orson Welles. The War of the Worlds broadcast was one of the century's great stunts. Citizen Kane was brilliant but heartless. The greatest film ever made? A nonsense appellation. As for Welles, he was his own man. A.S. Hamrah reviews three recent books:

But this year, the Welles centennial, an appreciation for Welles—even the late, bloated, talk-show-guest Welles—is gathering force. Karp’s book, along with Patrick McGilligan’s remarkable, eye-opening biography Young Orson and A. Brad Schwartz’s Broadcast Hysteria, provide a deep, nuanced portrait of the director at the start and finish of his career. By skipping his better-known and much-studied years as an actor-director in Hollywood in the heyday of the studio system, and his years in the ’50s and ’60s as a nomadic filmmaker in Europe, these studies offer a new image of Welles, one that re-radicalizes him as an artist and sets him against the backdrop of the Depression and the early days of World War II. Focusing on his work in the theater and radio in New York and elsewhere in the ’30s, then cutting, Kane-like, to the New Hollywood of the ’70s reveals an unwavering Welles, committed to a kaleidoscopic vision that was also a style of work and a way of being in the world. If he failed to find a way to direct his films with Hollywood funding and approval, he went elsewhere—a rebuke the movie industry saw as disrespectful, self-sabotaging, and grotesque.

The next paragraph gives us this gem of an observation: "The Welles of TV talk shows and wine commercials is in fact an indictment of how the second half of the twentieth century failed to live up to the promises of the first half."

Prospects: The Limits of Discursive Thinking and the Future of Literary Criticism

Another working paper. The usual deal, links, abstract, contents, and introduction.

Abstract: After considering future prospects for literary criticism in terms of conceptual possibilities, and preferred intellectual style, this picture emerges: 1. Ethical criticism is the only criticism we can do that is entirely prose-centric. 2. Naturalist criticism requires considerable intellectual investment outside of literary study and is quite limited if one insists on prose-centric thinking. 3. Description has relatively few extra-literary prerequisites, but requires tables or diagrams. It is not consonant with a prose-centric orientation. 4. Computational criticism is giving us new phenomena to examine, but it is not prose-centric. From this we may conclude that discursivity, prose-centric thinking, is the primary obstacle preventing further development of literary criticism. It stands in the way of computational criticism and description, neither of which is fundamentally discursive, and constrains the development of naturalist criticism.

CONTENTS

Introduction: Beyond Discursive Thought

Meaning, Theory, and the Disciplines of Criticism

Some Notes on Ethical Criticism, with Commentary on J. Hillis Miller and Charlie Altieri

Ethical Criticism: Blakey Vermeule on Theory, Cornel West in the Academy, Now What?

Literary Form, the Mind, and Computation: A Brief Note (Boiling It Down)

Description as a Mode of Literary Criticism

The Only Game in Town: Digital Criticism Comes of Age

The Disciplines of Psychology, the Study of Literature, and an Ecology of Cultural Beings

Latour, Language, and Translation

Appendix 1: An Annotated Guide to my Writing about the Profession

Appendix 2: Critical Disciplines, the Short Version

Appendix 3: Critical Method: the Four-Fold Way, from Then to Now

Introduction: Beyond Discursive Thought

Abstractly considered, the future of academic literary criticism has three aspects: 1) intellectual capabilities and methods, 2) institutional arrangements, and 3) the preferences of those seeking to do literary research and publication. Most of my thinking and writing about the matter has focused on the first issue, though I’ve made some scattered remarks here and there on the second. But I’ve mostly neglected the third – well, not quite. It’s complicated.

I’ve written a bit about ethical criticism, a term I’ve taken from Wayne Booth, and that is certainly partially motivated by that third issue. Why do people become professional literary scholars? Because they want to do ethical criticism. They may not call it that, but that’s what most people in the profession do under the guise of interpretation. Certainly that is what critique is about.

Style Matters

But the issue has another aspect, and that has to do with how people think. When, some 40 years ago, I turned toward the cognitive sciences and away from structuralism and post-structuralism, deconstruction, and the rest, the turn was driven as much by intellectual style as by epistemological conviction. No, I didn’t have much affection for the predicate calculus, which I learned in a course in symbolic logic (it fulfilled my math requirement), but I did like the intellectual style I found in linguistics books, the sense of rigor and explicit order. I also liked the diagrams. A lot.

There were large sections in my dissertation — Cognitive Science and Literary Theory [1] — where the major burden of the argument was carried by the diagrams. I’d work out the diagrams first and then write prose commentary on them. That modus operandi pleases me a great deal. In the preface to Beethoven’s Anvil (the book had some diagrams, but not many) I refer to my thinking in that book as speculative engineering. I like that term: speculative engineering [2].

There are other intellectual styles, obviously. Some very different from my diagrammatic and speculative engineering style. New historicism, for example, is, or can be, a very writerly style. One gathers stories, vignettes, and passages from various writers, literary and not, and arranges them more according to rhythm, surprise, and repose than for logical progression and finality — though such matters come into play as well. It is a style that can be a bit like literature itself, at least prose fiction, though one can sneak in some lyrical passages here and there, and maybe even a bit of insistent rhythm.

I’ve been told, and have no reason to doubt, that new historicism is the closest thing academic literary criticism currently has to a dominant methodological practice. I can’t help but think that this preference is as much about intellectual style as about epistemological conviction. Yes, the varieties of Theory are also prose-centric, but they are more insistently argumentative, if not polemical, and so don’t offer the (often unrealized?) possibilities for lyrical expression that flow from new historicism.

Consequently, I’ve got two suspicions about intellectual style:

• In anyone’s intellectual ecology, style preferences are deeper and have more inertia than explicit epistemological beliefs.

• Some of the pigheadedness that often crops up in discussions about humanities vs. science is grounded in stylistic preference that gets rationalized as epistemological necessity.

I think/suspect/fear that is the case with a lot of literary critics. And that affects the first issue, intellectual capabilities and methods. For many of the most exciting intellectual opportunities require that one think in modes other than discursive prose.

* * * * *

What I would like to do in the rest of this introduction, then, is consider the future possibilities of literary criticism in light of two considerations: intellectual possibilities, and conceptual style. First I offer a sketch of how prose-centricity has affected the evolution of literary criticism over the past half-century or so and then I look at a four-fold division of intellectual possibilities in light of that evolution.

Wednesday, October 21, 2015

Roads Not Taken: A Study in Poetic Mechanism

Another working paper posted; links, abstract, and introduction as usual.

- Academia.edu: https://www.academia.edu/17120360/Roads_Not_Taken_A_Study_in_Poetic_Mechanism

- SSRN: http://ssrn.com/abstract=2677363

* * * * *

Abstract: Robert Frost’s “The Road Not Taken” is a ring composition three levels deep: 1 2 Ω 2’ 1’. The central section, lines 9 through 12, cuts across the boundary between the second (ll. 6-10) and third (ll. 11-15) stanzas. There is a subtle shift in tense in line 16 in which the poet in effect travels back into the past, at the moment of decision captured in the poem, so that he can anticipate the present moment in which the poem unfolds. Thus the end of the poem rejoins the beginning, not merely through the repetition of a line, but through a trick in time.
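The ring pattern named in the abstract, 1 2 Ω 2’ 1’, has a simple formal shape: the sections after the center mirror the sections before it. As a rough sketch only, here is a check for that shape over a list of section labels. The function names are made up for this illustration, and the code says nothing about how one decides where a poem's sections fall, which is the real critical work.

```python
def is_ring(labels, center="Ω"):
    """True if the labels mirror around a single central section."""
    if len(labels) % 2 == 0:
        return False  # a ring needs one unpaired center
    mid = len(labels) // 2
    if labels[mid] != center:
        return False
    outward = labels[:mid]
    # Strip the prime marks from the return leg, then read it backwards.
    back = [label.rstrip("'’") for label in reversed(labels[mid + 1:])]
    return outward == back


def ring_depth(labels):
    """Number of levels, counting the center as the innermost one."""
    return len(labels) // 2 + 1


frost = ["1", "2", "Ω", "2’", "1’"]
```

Here `is_ring(frost)` holds and `ring_depth(frost)` is 3, matching "three levels deep". A sequence such as 1 2 Ω 1’ 2’, where the return leg repeats the outward order instead of reversing it, fails the check.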

CONTENTS

Introduction: Another Ring Discovered

Preliminaries: Describing Form, and a Precedent

A Road Literary Critics Don’t Travel

A Parallel in “Kubla Khan”

Frost’s Text: The Road Not Taken

Robert Frost, Time Traveler: The Road Not Taken

Poetic Mechanism

But What About Meaning?

Further Thoughts

Alignment in “The Road Not Taken”

More Frostiness

More About the Poem

Introduction: Another Ring Discovered

Robert Frost’s “The Road Not Taken” is one of the best-known poems in the English language and a secondary school favorite in America. It would be a bit much to assert that every schoolchild has read the poem, but many have, for they’ve had no choice. Many critics have written about the poem as well, and there seems to be widespread agreement that the poem is a bit deceptive, as poems are wont to be.

Still, when, prompted by a post in 3 Quarks Daily, I set out to read it again, long after my schoolboy years, I wasn’t expecting to discover something in the poem that, apparently, other critics have missed. Yes, I know, I know, the great texts yield endless riches. But what I discovered was something that was, to me anyhow, obvious, something about the poem’s form. Yes, four stanzas of five lines, rhymed ABAAB – that too is obvious. We learn how to do that kind of description in secondary school.

But that’s about it as far as formal description goes. After that the search for meaning takes over and never lets up. And so most of the discussion of this poem, as of others, is about meaning, and its formal features are either ignored or treated as ornamentation.

What was obvious about this poem, almost as soon as I’d reread it, is that it is a ring-composition, which I explain in detail a bit later. But I shouldn’t have had to discover that. Just as it is commonly known that dogs have four legs and a tail, so it should be commonly known that “The Road Not Taken” is a ring-composition. Once you see that, you can see how the poem has three structural principles operating in parallel; I write about that as well.

Such a simple poem, such a rich text. So much that’s not been observed.

When WILL we open our eyes and ears?

Tuesday, October 20, 2015

What the Green Villain Irregulars Discovered in their Journey to Another Galaxy Far Far Away

Yesterday I showed some pictures of the Green Villain Irregulars on their journey to some other place. Now I want to show some pictures of artifacts they discovered. This one appears to be some kind of transport device like the Tardis, only smaller:

You slip it over your head, climb in – like the Tardis, the inside is larger than the outside – and then travel wherever your heart desires. Mind you, we didn't actually try it; that's just what our exoarchaeologists conjecture to be true of this most interesting and colorful device.

This appears to be some kind of repository of esoteric knowledge:

Notice the strange symbols all over it. They appear to be layered on top of one another and we conjecture that there are, in fact, countless layers that are invisible to the naked eye but that will appear if one wears the appropriate vision augmentation devices. That blue symbol to the right appears to be the talisman of a particularly influential guild of mystics.

Our experts were puzzled by this contraption for the longest time:

We still don't have a definitive analysis; there are more tests to be done, more mages to be consulted. At the moment we think it's some kind of food locker.

The Disciplines of Psychology, the Study of Literature, and an Ecology of Cultural Beings

Psychoanalysis has been the psychological discipline that has had the most influence on literary criticism, along with Jungian depth psychology and perhaps Gestalt psychology as well. In the middle 1950s and into the 1960s computation hit the behavioral sciences and a movement that came to be called cognitive science emerged. The neurosciences came of age and 1970s sociobiology gave way to 1980s evolutionary psychology. This post is about these newer psychologies.

But I don’t intend an in-depth discussion or even a brief survey of how these psychologies are being used in literary criticism. My purpose is more abstract and schematic.

Psychology: The Five-Fold Way

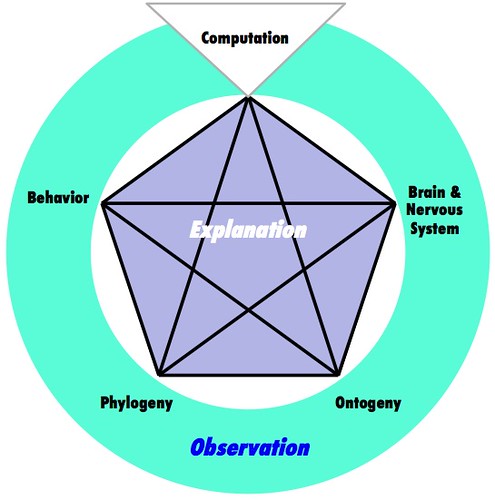

In 1978 I filed a dissertation on “Cognitive Science and Literary Theory” [1]. Since cognitive science was a rather new development at that time – the term itself was coined by Christopher Longuet-Higgins in 1973 – I devoted a chapter to explaining what cognitive science was. My account was necessarily idiosyncratic. Cognitive science has never been more than a loosely associated agglomeration of themes and interests ultimately impelled by the idea of computation. For the arguments in my dissertation I needed a bit more than was then (and is even now) implied by “cognitive science,” so I argued that it was about investigating a five-way correspondence between: 1) computation, 2) behavior, 3) the brain and nervous system, 4) ontogeny, and 5) phylogeny. And the dissertation itself discussed each of those.

Consider this informal diagram:

I’ve arranged each of those five concerns at the corners of a pentagram. Except for computation, they are fields of phenomena to be observed and explained. Computation is introduced as a set of devices for crafting explanations of observed phenomena.

Psychology is most centrally concerned with explaining human behavior. What kinds of computational mechanisms would explain this or that observed human behavior? Moving counter-clockwise around the circle, one can ask the same question for the behavior of any animal and so develop a computational approach to comparative psychology and to the evolution of behavior. Similarly, one can develop a computational approach to the behavior of a creature at any phase of its life cycle, that is, to ontogeny. Computational approaches to language acquisition have been under study for decades. And computational neuroscience – brain & nervous system – is richly developed.

Just how these research possibilities are apportioned into organized disciplines – departments, professional societies, journals, conventions, and so forth – is a secondary matter. As a practical matter, while cognitive science has its journals and conventions, there are few departments of cognitive science. It exists in universities mostly as interdepartmental programs.
