Friday, September 29, 2017

Objectivity and Intersubjective Agreement

Consider this an addendum to yesterday’s post, Describing structured strings of characters [literary form].

Let’s start with a passage from my open letter to Charlie Altieri [1]:

I am of the view that the rock-bottom basic requirement for knowledge is intersubjective agreement. Depending on how that agreement is reached, it may not be a sufficient condition, but it is always necessary. Let’s bracket the general issue of just what kinds of intersubjective agreement constitute knowledge. I note however that the various practices gathered under the general rubric of science are modes of securing the intersubjective agreement needed to constitute knowledge.

As far as I know there is no single thing that is scientific method – on this I think Feyerabend has been proven right. Science has various methods. The practices that most interest me at the moment were essential to Darwin: the description and classification of flora and fauna. Reaching agreement on such matters was not a matter of hypothesis and falsification in Popper’s sense. It was a matter of observing agreement between descriptions and drawings, on the one hand, and specimens (examples of flora and fauna collected for museums, conservatories, and zoos) and creatures in the wild, on the other.

John Wilkins calls that a process of “experienced observation” [2].

Now I want to add Searle on objectivity and subjectivity with respect to ontology and epistemology [3]. Here’s where I’m going: Reaching intersubjective agreement is obviously an epistemological matter. But, and this is where things get tricky, it is possible to reach intersubjective agreement about phenomena that are ontologically subjective as well as about phenomena that are ontologically objective. The so-called hard sciences (physics, chemistry, astronomy, perhaps biology, and others) are about matters that are ontologically objective in kind. They exist ‘out there’ in the world completely independent of human desires, perceptions, and thoughts. The human sciences, if you will, (most?) often deal with phenomena that are ontologically subjective but about which competent observers can reach substantial intersubjective agreement (aka objective knowledge). Turn it around: we have intersubjective agreement (aka objective knowledge) about matters that are ontologically subjective.

Consider the case of color. We know that it is NOT a simple function of wavelength – there’s a substantial literature on this, google it [4]. It seems to me, however, that once we start arguing whether color is subjective or objective, we’re in a different ballgame. Color depends on the perceiving system. In that sense it’s subjective. And what of cameras, whether analog or digital?

But I’m not really interested in color (oh geez! really? except when I’m a photographer, then I am). I’m interested in describing literary texts. And they take the physical form of strings of characters. But those strings exist for human consciousness. They are meant to be read. The meaning of those strings is thus ontologically subjective, always. For the meaning exists (only) in the minds of subjects (human beings). But, depending on various things, it is possible to reach substantial intersubjective agreement on that meaning (see, for example, Attridge and Staten, The Craft of Poetry, 2015).

My argument about form and description (e.g. in the post I referenced at the beginning of this one) is that if we focus our descriptive efforts on form, it should be possible to reach such a high degree of intersubjective agreement that we are entitled to think of literary form as ontologically objective. Where there is high intersubjective agreement about a phenomenon, we say that it is objectively real. That’s what experienced observation is about.

References

[1] Literary Studies from a Martian Point of View: An Open Letter to Charlie Altieri, New Savanna, blog post, accessed September 29, 2017, http://new-savanna.blogspot.com/2015/12/literary-studies-from-martian-point-of.html

[2] I discuss this in: Experienced observation (& another brief for description as the way forward in literary studies), New Savanna, blog post, accessed September 29, 2017, http://new-savanna.blogspot.com/2017/09/experienced-observation-another-brief.html

[3] John R. Searle, The Construction of Social Reality, Penguin Books, 1995.

[4] And I’ve got a blog post or two, e.g. Color the Subject, New Savanna, accessed September 29, 2017, http://new-savanna.blogspot.com/2016/07/color-subject.html

Photographing the Eclipse and the Problematics of Color [#Eclipse2017], New Savanna, accessed September 29, 2017, http://new-savanna.blogspot.com/2017/08/photographing-eclipse-and-problematics.html

Thursday, September 28, 2017

Describing structured strings of characters [literary form]

I recently argued that Jakobson’s poetic function can be regarded as a computational principle [1]. I want to elaborate on that a bit in the context of the introduction to Representations’ recent special issue on description:

Sharon Marcus, Heather Love, and Stephen Best, Building a Better Description, Representations 135 (Summer 2016): 1-21. DOI: 10.1525/rep.2016.135.1.1.

That article ends with six suggestions for building better descriptions. The last three have to do with objectivity. It is the sixth and last suggestion that interests me.

Rethink objectivity?

Here’s how that suggestion begins (p. 13):

6. Finally, we might rethink objectivity itself. One way to build a better description is to accept the basic critique of objectivity as impossible and undesirable. In response, we might practice forms of description that embrace subjectivity, uncertainty, incompleteness, and partiality. But why not also try out different ways of thinking about objectivity? Responsible scholarship is often understood as respecting the distinction between a phenomenon and the critical methods used to understand it; the task of the critic is to transform the phenomenon under consideration into a distinct category of analysis, and to make it an occasion for transformative thought. Mimetic description, by contrast, values fidelity to the object; in the case of descriptions that aim for accuracy, objectivity would not be about crushing the object, or putting it in perspective, or playing god, but about honoring what you describe.

OK, but that’s rather abstract. What might this mean, concretely? They offer an example of bad description, though the fact that it is placed well after a blank line on the page (p. 14 if you must know) suggests that it’s meant to illustrate (some combination of) the six suggestions taken collectively rather than only the last.

Here’s the example (taken from one of the articles in the issue, pp. 14-15):

In her criticism of the objectivity imperative in audio description, Kleege explains that professional audio describers are instructed to avoid all personal interpretation and commentary. The premise is that if users are provided with an unbiased, unadorned description, they will be able to interpret and judge a film for themselves. Kleege writes, “In extreme instances, this imperative about absolute objectivity means that a character will be described as turning up the corners of her mouth rather than smiling.” For Kleege, reducing the familiar act of smiling to turning up the corners of one’s mouth is both absurd and condescending. The effort to produce an objective, literal account only leads to misunderstandings, awkwardness, and bathos. This zero-degree description is the paradoxical result of taking the critique of description, with its mistrust of interpretation and subjectivity, to one logical extreme. Tellingly, the professional audio describer’s “voice from nowhere” is not only weirdly particular; it also fails to be genuinely descriptive, since its “calm, controlled, but also cheerful” tone remains the same no matter what is being described.

OK, that makes sense. Let me suggest that smiles are objectively real phenomena and so we don’t need to rethink objectivity in order to accommodate this example.

To be sure, there are cases where one needs to know that smiling means “turning up the corners of her mouth”. If one is investigating the nature of smiles as communicative signals, one might begin with that bit of description. But that by itself is not enough to differentiate between spontaneous smiles and deliberate “fake” smiles, something that has surely been investigated. In that context smiles cannot be taken at face value, as it were.

But that’s not the context we’re dealing with. We’re dealing with someone watching a film and describing what the actors are doing. We’re dealing with (images of) the natural context of smiling, human communication. Those configurations of facial features and gestures exist for other people, and so it is appropriate, indeed it is necessary, that we frame our descriptions in terms commensurate with the phenomena under observation (“fidelity to the object”).

But what has that to do with describing literary texts? Texts, after all, are quite different from human beings interacting with one another.

The problem with software

It’s been said that software is “eating the world.” More and more, critical systems that were once controlled mechanically, or by people, are coming to depend on code. This was perhaps never clearer than in the summer of 2015, when on a single day, United Airlines grounded its fleet because of a problem with its departure-management system; trading was suspended on the New York Stock Exchange after an upgrade; the front page of The Wall Street Journal’s website crashed; and Seattle’s 911 system went down again, this time because a different router failed. The simultaneous failure of so many software systems smelled at first of a coordinated cyberattack. Almost more frightening was the realization, late in the day, that it was just a coincidence.

“When we had electromechanical systems, we used to be able to test them exhaustively,” says Nancy Leveson, a professor of aeronautics and astronautics at the Massachusetts Institute of Technology who has been studying software safety for 35 years.

What's the problem?

“The problem is that software engineers don’t understand the problem they’re trying to solve, and don’t care to,” says Leveson, the MIT software-safety expert. The reason is that they’re too wrapped up in getting their code to work. “Software engineers like to provide all kinds of tools and stuff for coding errors,” she says, referring to IDEs [integrated development environments]. “The serious problems that have happened with software have to do with requirements, not coding errors.” When you’re writing code that controls a car’s throttle, for instance, what’s important is the rules about when and how and by how much to open it. But these systems have become so complicated that hardly anyone can keep them straight in their head. “There’s 100 million lines of code in cars now,” Leveson says. “You just cannot anticipate all these things.”

It's just too complicated.

The problem is that programmers are having a hard time keeping up with their own creations. Since the 1980s, the way programmers work and the tools they use have changed remarkably little. There is a small but growing chorus that worries the status quo is unsustainable. “Even very good programmers are struggling to make sense of the systems that they are working with,” says Chris Granger, a software developer who worked as a lead at Microsoft on Visual Studio, an IDE that costs $1,199 a year and is used by nearly a third of all professional programmers. He told me that while he was at Microsoft, he arranged an end-to-end study of Visual Studio, the only one that had ever been done. For a month and a half, he watched behind a one-way mirror as people wrote code. “How do they use tools? How do they think?” he said. “How do they sit at the computer, do they touch the mouse, do they not touch the mouse? All these things that we have dogma around that we haven’t actually tested empirically.”

The findings surprised him. “Visual Studio is one of the single largest pieces of software in the world,” he said. “It’s over 55 million lines of code. And one of the things that I found out in this study is more than 98 percent of it is completely irrelevant. All this work had been put into this thing, but it missed the fundamental problems that people faced. And the biggest one that I took away from it was that basically people are playing computer inside their head.”

There's a big disconnect in the process:

By the time he gave the talk that made his name, the one that Resig and Granger saw in early 2012, [Bret] Victor had finally landed upon the principle that seemed to thread through all of his work. (He actually called the talk “Inventing on Principle.”) The principle was this: “Creators need an immediate connection to what they’re creating.” The problem with programming was that it violated the principle. That’s why software systems were so hard to think about, and so rife with bugs: The programmer, staring at a page of text, was abstracted from whatever it was they were actually making. [...] For him, the idea that people were doing important work, like designing adaptive cruise-control systems or trying to understand cancer, by staring at a text editor, was appalling. And it was the proper job of programmers to ensure that someday they wouldn’t have to.

Seeing is believing, and controlling:

Victor wanted something more immediate. “If you have a process in time,” he said, referring to Mario’s path through the level, “and you want to see changes immediately, you have to map time to space.” He hit a button that showed not just where Mario was right now, but where he would be at every moment in the future: a curve of shadow Marios stretching off into the far distance. What’s more, this projected path was reactive: When Victor changed the game’s parameters, now controlled by a quick drag of the mouse, the path’s shape changed. It was like having a god’s-eye view of the game. The whole problem had been reduced to playing with different parameters, as if adjusting levels on a stereo receiver, until you got Mario to thread the needle. With the right interface, it was almost as if you weren’t working with code at all; you were manipulating the game’s behavior directly.

When the audience first saw this in action, they literally gasped. They knew they weren’t looking at a kid’s game, but rather the future of their industry. Most software involved behavior that unfolded, in complex ways, over time, and Victor had shown that if you were imaginative enough, you could develop ways to see that behavior and change it, as if playing with it in your hands.
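Victor’s principle, that to see a process unfolding in time you map time to space, can be sketched in a few lines. This is a minimal sketch under stated assumptions: the toy physics (constant horizontal speed, a single jump, constant gravity) and the names `Params` and `projected_path` are hypothetical, not taken from Victor’s demo or from any game engine. The function computes every future position up front, so the whole trajectory can be drawn as one curve of “shadow” positions, and changing a parameter reshapes the entire curve rather than a single frame.

```python
from dataclasses import dataclass


@dataclass
class Params:
    """Hypothetical game parameters (illustrative, not from Victor's demo)."""
    speed: float = 2.0          # horizontal distance covered per tick
    jump_velocity: float = 8.0  # initial upward velocity of the jump
    gravity: float = 1.0        # upward velocity lost per tick


def projected_path(p: Params, ticks: int) -> list[tuple[float, float]]:
    """Map time to space: return the (x, y) position at every future tick.

    Rather than stepping the game one frame at a time, the whole future
    trajectory is computed at once so it can be drawn as a single curve.
    """
    x, y, vy = 0.0, 0.0, p.jump_velocity
    path = []
    for _ in range(ticks):
        path.append((x, y))
        x += p.speed
        vy -= p.gravity
        y = max(0.0, y + vy)  # the ground is at y = 0
    return path


# Changing one parameter reshapes the entire projected path at once.
for g in (1.0, 2.0):
    path = projected_path(Params(gravity=g), ticks=20)
    peak = max(y for _, y in path)
    print(f"gravity={g}: peak height {peak}")
# gravity=1.0: peak height 28.0
# gravity=2.0: peak height 12.0
```

Recomputing and redrawing that list every time a slider moves is what would turn a parameter tweak into immediate feedback; the drawing and the slider are left out here.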

Hefner Rising: The Playboy Story [RIP Hef]

Hugh Hefner has died, so I'm moving this to the head of the queue. R.I.P. He'll be buried next to Marilyn Monroe.

Amazon has just released American Playboy: The Hugh Hefner Story, a ten episode series about Hefner and the magazine he founded in 1953. Playboy was profitable from its first issue and gave birth to one of the most recognizable brands in the world. I encountered Playboy sometime in the early 1960s, during my teen years, and read it regularly for a decade or more, and I do mean “read.” Yes, I looked at the pictures, of course I looked at the pictures, but I really did read it, for there was much to read, especially the interviews.

The series has been assembled from documentary materials, including footage, clippings, and photos from Hefner’s archive, testimonials from former executives and associates, including Christie Hefner and Jesse Jackson, and dramatic reenactments. The reenactments are OK, but no more. It’s the story itself that’s fascinating. It’s told from Hefner’s obviously biased point of view, but there’s no secret about that. You might want to counterpoint it with, say, Mad Men, which is set among the men at the center of the Playboy target demographic. Did Don Draper read Playboy? What about the one who smoked a pipe (Ken?), did he get the idea from Hef?

The series is densest over the magazine’s first quarter century, which is fine, as that’s where the most action is. Hefner’s original conception was simply a men’s lifestyle magazine, advice to the male consumer: no more, but no less – which is to say, pictures of naked women were central. Out of and in addition to that came liberal editorial content. The magazine had to defend itself, editorially and in court. So censorship became an issue.

Hefner liked jazz. The sophisticated man liked jazz. So Miles Davis became the subject of the first Playboy interview. When Hefner wanted Nat Cole and Ella Fitzgerald on his first television show, TV stations in the South said they wouldn’t air the show. He called their bluff; it turns out they weren’t bluffing, but the show did fine anyhow. Civil Rights entered Playboy’s editorial portfolio, including interviews with Martin Luther King and Malcolm X. Then the war in Vietnam – Playboy was against it, but was happy to entertain the troops – women’s rights, and then AIDS.

Yes, one of the episodes deals with feminist criticism, including footage from a 1970 Dick Cavett show where Susan Brownmiller and Sally Kempton handed him his head. We also learn of Gloria Steinem’s undercover exposé of working conditions at the New York Playboy Club. Obviously, though, if feminist critique is what you want, this series is not the place for it. You might, as an exercise, ask yourself how Mad Men’s Peggy Olson would have fared working at Playboy. That’s a tricky one. To be sure, Christie Hefner took over in 1988 and ran the company until 2009, two decades after the period covered in Mad Men. But what if Peggy had gone to work for Hef in 1963?

* * * * *

On the whole the series seems a bit long for the material it covers. But that material is worthwhile as social history, for the overview of the phenomena that followed from the desire to read a magazine with pictures of naked women. And the archival material is at the center of it all.

Wednesday, September 27, 2017

Helicopter landing in a slanted city

As you may know, there are lots of helicopters in the air space over and around New York City. While a few may carry tourists seeing the sights, I suspect that most are ferrying one-percenter executives about their daily rounds. The whirlybirds make a terrible racket.

Every once in a while I like to track one through the sky with my camera. It's not a particularly interesting sight. Nor will my camera allow me to zoom in really close so I can see their faces as they read their reports or sip their scotch or whatever it is they do while being masters of the universe. I suppose I track them for the fun and (minor) challenge of following a helicopter while taking photos.

So, I picked one up Sunday afternoon:

And followed it as it passed one of those aerial construction sites on Manhattan's West Side:

Notice how those buildings seem to be leaning to the left (North). Isn't that strange?

Well of course, I know that. The buildings aren't leaning. I'm tilting my camera. But not deliberately.

I didn't think about that at the time. Wasn't trying to tilt it, didn't notice I was. I was just tracking the helicopter and snapping photos. Why'd I tilt the camera?

I also do it when tracking boats zipping along on the Hudson, though not so much. Am I unconsciously leaning into the direction of motion? Perhaps.

However, look at the helicopter in the third and fourth photos. It appears to be pretty level. I say "appears" because it can't be. It may be level with respect to the top and bottom frames of the picture, but the camera is tilted. And that implies that the helicopter is tilted with respect to the ground. Was I unconsciously tilting the camera so as to normalize the helicopter's orientation?

Who knows.

Do you think I can learn to keep the camera level when tracking helicopters – and birds and boats? It's not easy, not when you're moving. There's a reason photographers mount cameras on tripods. Perhaps if I did enough of this I could train myself. But it would be tricky. For one thing I'd have to remember to do it. When I'm out taking photographs I'm not thinking about such things. I'm thinking about what's in front of my eyes. And even if I remember to keep the camera level, that's more easily said than done. After all, I'm moving, not just my hands, but my trunk, perhaps my whole body. I'm moving quickly, and the difference between rock-solid level and just far enough off that you'd notice is a relatively small one.

Tricky.

Tuesday, September 26, 2017

Anthony Bourdain in Rome, and that hoochie coochie song pops up

Here it is; I've cued it up in the video (c. 18:46). It starts with a guitar riff and apparently is about two Hindu brothers, thus keeping its oriental provenance intact:

I wonder what other versions are floating about in Rome, in Italy, Europe...the world.

I discuss this ancient bit of music in Louis Armstrong and the Snake-Charmin’ Hoochie-Coochie Meme.

Monday, September 25, 2017

Over the weekend the Nacirema Nationals trounced the Trumptastic Bombers

Sunday was the most important sports day since Ali decided not to fight in Vietnam.

–Richard Lapchick, Director

Institute for Diversity and Ethics in Sport

The Trumpistas went up against the Nacirema and were creamed. How's this going to work out? What makes the question an interesting one is that many Trumpistas are also partisans of the Nacirema ( = "America" spelled backward). I grew up in Western PA, Trump country, but also football country. It'll be interesting to see how this works out. Will Trump double-down after his defeat by the NFL? Will the players persist? What of the owners, many of whom are Trump partisans?

Across the Nation

Writing in The New York Times (Sept 24, 2017) Ken Belson reports:

On three teams, nearly all the football players skipped the national anthem altogether. Dozens of others, from London to Los Angeles, knelt or locked arms on the sidelines, joined by several team owners in a league normally friendly to President Trump. Some of the sport’s biggest stars joined the kind of demonstration they have steadfastly avoided.

It was an unusual, sweeping wave of protest and defiance on the sidelines of the country’s most popular game, generated by Mr. Trump’s stream of calls to fire players who have declined to stand for the national anthem in order to raise awareness of police brutality and racial injustice.

What had been a modest round of anthem demonstrations this season led by a handful of African-American players mushroomed and morphed into a nationwide, diverse rebuke to Mr. Trump, with even some of his staunchest supporters in the N.F.L., including several owners, joining in or condemning Mr. Trump for divisiveness.

However:

But the acts of defiance received a far more mixed reception from fans, both in the stadiums and on social media, suggesting that what were promoted as acts of unity might have exacerbated a divide and dragged yet another of the country’s institutions into the turbulent cross currents of race and politics.

At Lincoln Financial Field in Philadelphia, videos posted on social media showed some Eagles fans yelling at anti-Trump protesters holding placards. At MetLife Stadium in East Rutherford, N.J., before the Jets played the Dolphins, many fans, a majority of them white, said they did not support the anthem protests but also did not agree with the president’s view that players should be fired because of them.

Moreover, there is a rule:

The Steelers, along with the Tennessee Titans and the Seattle Seahawks, who were playing each other and similarly skipped the anthem, broke a league rule requiring athletes to be present for the anthem, though a league executive said they would not be penalized.

In other sports:

In a tweet Friday, Mr. Trump disinvited the Golden State Warriors, the N.B.A. champions, to any traditional White House visit, after members of the team, including its biggest star, Stephen Curry, were critical of him. But on Sunday, the N.H.L. champion Pittsburgh Penguins said they would go to the White House, and declared such visits to be free of politics.

Nascar team owners went a step further, saying they would not tolerate drivers who protested during the anthem.

American Ritual

If you google “football as ritual” you’ll come up with a bunch of hits. It’s a natural. It’s played in special purpose-built facilities and the players wear specialized costumes. The teams have totemic mascots and the spectators will wear team colors and emblems of the totem. Ritual chants are uttered throughout the game and there’s lots of music and spectacle. I could go on and on, but you get the idea. When anthropologists go to faraway places and see people engaging in such activities they call it ritual. We call it entertainment. It’s both.

While not everyone actually plays football, a very large portion (mostly male) of the population has done so at one time in their life. When I was in secondary school touch football was one of the required activities in boys’ gym class. Those who don’t play the game participate vicariously as spectators. The game is associated with various virtues and so participating contributes to moral development.

Other sports are like this as well and other sports have been involved in anti-Trumpista activity. But let’s stick to football as that’s where most of the action has been so far.

Extended Adolescence – Adulthood Revised?

An analysis by researchers at San Diego State University and Bryn Mawr College reports that today’s teenagers are less likely to engage in adult activities like having sex and drinking alcohol than teens from older generations.

The review, published today in the journal Child Development, looked at data from seven national surveys conducted between 1976 and 2016, including those issued by the U.S. Centers for Disease Control and Prevention and the National Institutes of Health. Together, the surveys included over eight million 13- to 19-year-olds from varying racial, economic and regional backgrounds. Participants were asked a variety of questions about how they spent their time outside of school and responses were tracked over time.

Beyond just a drop in alcohol use and sexual activity, the study authors found that since around 2000, teens have become considerably less likely to drive, have an after-school job and date. By the early 2010s, it also appeared that 12th graders were going out far less frequently than 8th graders did in the 1990s. In 1991 54 percent of high schoolers reported having had sex at least once; in 2015 the number was down to 41 percent. What’s more, the decline in adult activity was consistent across all populations, and not influenced by race, gender or location.

Affluence:

The analysis found adolescents were more likely to take part in adult activities if they came from larger families or those with lower incomes. This mirrors so-called “life history theory,” the idea that exposure to an unpredictable, impoverished environment as a kid leads to faster development whereas children who grow up in a stable environment with more resources tend to have a slower developmental course.

In families with means there is often more anticipation of years of schooling and career before one necessarily has to “grow up”—there’s plenty of time for that later. As Twenge and Park conclude, despite growing income disparities, a significant percentage of the U.S. population has on average become more affluent over the past few decades and are living longer. As a result, people are waiting longer to get married and have children. We’re also seeing a higher parental investment in fewer children—or, in the parlance of our times, more “helicopter parenting.”

Erik Erikson talked of adolescence as a “psychosocial moratorium”, however:

Yet many child psychologists believe today’s children seem to be idling in this hiatus period more so than ever before. “I'm keenly aware of the shift, as I often see adolescents presenting with some of the same complaints as college graduates,” says Columbia University psychologist Mirjana Domakonda, who was not involved in the new study. “Twenty-five is the new 18, and delayed adolescence is no longer a theory, but a reality. In some ways, we’re all in a ‘psychosocial moratorium,’ experimenting with a society where swipes constitute dating and likes are the equivalent of conversation.”

See these posts for further discussion on extended adolescence:

- The benefits of extended adolescence

- Morphosis: John Green: the Antecession of Adolescence

- It's time to create new adulthoods for the new worlds now emerging

- Adulthood in Flux

- The Next Level: Universal Children and a New Humanity

Is extended adolescence but one aspect of a new mode of life? You may recall that, in Western history, childhood wasn't recognized as a distinct phase of life until the early modern era (Philippe Ariès, Centuries of Childhood), and adolescence itself didn't emerge as a recognized phase until the late 19th and early 20th centuries.

Sunday, September 24, 2017

Too good to last, the New Savanna hit streak is over

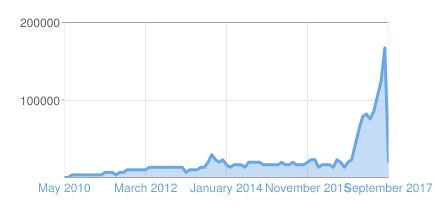

Back at the end of January 2017 I’d noticed that traffic was up, with some days topping 3K or 4K. On August 11 New Savanna got 11,109 hits, and as of August 20, hits seemed to have averaged well over 4K per day since then. And then there was a drop.

Was it an end of summer slump or more permanent?

It appears to be more permanent. I’ve been logging daily hits for the last month. We’ve averaged 834 hits per day over the last 30 days, with only five days over 1000; the highest was 1369. This is how traffic looks over the life of the blog:

The six-month high period is obvious and over there at the right you can see the drop. I expect there will be a visible leveling-off in the graph in a couple of months.

But what was that six-month run about?

The hermeneutics of Ta-Nehisi Coates

Peter Dorman at Naked Capitalism (originally at EconoSpeak):

The thing is, he seldom makes arguments in the sense I understand that term. There isn’t extended reasoning through assumptions and implications or careful sifting through evidence to see which hypotheses are supported or disconfirmed. No, he offers an articulate, finely honed expression of his worldview, and that’s it. He is obviously a man of vast talents, but he uses them the same way much less refined thinkers simply bloviate.

But that raises the question, why is he so influential? Why does he reach so many people? What’s his secret?

No doubt there are multiple aspects to this, but here’s one that just dawned on me. Those who respond to Coates are not looking for argumentation—they’re looking for interpretation.

The demand for someone like Coates reflects the broad influence that what might be called interpretivism has had on American political culture. This current emerged a few decades ago from literature, cultural studies and related academic home ports. Its method was an application of the interpretive act of criticism. A critic “reads”, which is to say interprets, a work of art or some other cultural product, and readers gravitate toward critics whose interpretations provide a sense of heightened awareness or insight into the object of criticism. There’s nothing wrong with this. I read criticism all the time to deepen my engagement with music, art, film and fiction.

But criticism jumped channel and entered the political realm. Now events like elections, wars, ecological crises and economic disruptions are interpreted according to the same standards developed for portraits and poetry. And maybe there is good in that too, except that theories about why social, economic or political events occur are subject to analytical support or disconfirmation in a way that works of art are not.

Saturday, September 23, 2017

Military ontology

@andrewiliadis on the Basic Formal Ontology and its domain-specific permutations, e.g., Military Ontology https://t.co/vEsfNkwZWn pic.twitter.com/rqEVb3Fii3 (Shannon Mattern, @shannonmattern, September 23, 2017)

Pete Turner, colors – the world

Richard Sandomir writes his obituary in The New York Times:

Altering reality was nothing new for Mr. Turner. Starting in the pre-Photoshop era, he routinely manipulated colors to bring saturated hues to his work in magazines and advertisements and on album covers.

“The color palette I work with is really intense,” he said in a video produced by the George Eastman House, the photographic museum in Rochester that exhibited his work in 2006 and 2007. “I like to push it to the limit.”

Jerry Uelsmann, a photographer and college classmate who specializes in black-and-white work, said in an email that when he saw Mr. Turner’s intense color images, he once told him, “I felt like I wanted to lick them.”

Friday, September 22, 2017

Deep Learning through the Information Bottleneck

Tishby began contemplating the information bottleneck around the time that other researchers were first mulling over deep neural networks, though neither concept had been named yet. It was the 1980s, and Tishby was thinking about how good humans are at speech recognition — a major challenge for AI at the time. Tishby realized that the crux of the issue was the question of relevance: What are the most relevant features of a spoken word, and how do we tease these out from the variables that accompany them, such as accents, mumbling and intonation? In general, when we face the sea of data that is reality, which signals do we keep?

“This notion of relevant information was mentioned many times in history but never formulated correctly,” Tishby said in an interview last month. “For many years people thought information theory wasn’t the right way to think about relevance, starting with misconceptions that go all the way to Shannon himself.” [...]

Imagine X is a complex data set, like the pixels of a dog photo, and Y is a simpler variable represented by those data, like the word “dog.” You can capture all the “relevant” information in X about Y by compressing X as much as you can without losing the ability to predict Y. In their 1999 paper, Tishby and co-authors Fernando Pereira, now at Google, and William Bialek, now at Princeton University, formulated this as a mathematical optimization problem. It was a fundamental idea with no killer application.
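For reference, that optimization problem is usually written as below. The notation is the standard one from the information bottleneck literature rather than from the quoted article: T is the compressed representation of X (depending on Y only through X), I(·;·) is mutual information, and β ≥ 0 sets the trade-off between compressing X and keeping what predicts Y.

```latex
\min_{p(t \mid x)} \; \mathcal{L}\big[p(t \mid x)\big] \;=\; I(X;T) \;-\; \beta\, I(T;Y)
```

Small β favors compression (low I(X;T)); large β favors prediction (high I(T;Y)).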

But, you know, the emic/etic distinction is about relevance. What are phonemes? They are "the most relevant features of a spoken word".

To the most recent experiments:

In their experiments, Tishby and Shwartz-Ziv tracked how much information each layer of a deep neural network retained about the input data and how much information each one retained about the output label. The scientists found that, layer by layer, the networks converged to the information bottleneck theoretical bound: a theoretical limit derived in Tishby, Pereira and Bialek’s original paper that represents the absolute best the system can do at extracting relevant information. At the bound, the network has compressed the input as much as possible without sacrificing the ability to accurately predict its label.

Tishby and Shwartz-Ziv also made the intriguing discovery that deep learning proceeds in two phases: a short “fitting” phase, during which the network learns to label its training data, and a much longer “compression” phase, during which it becomes good at generalization, as measured by its performance at labeling new test data.
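“How much information a layer retains” is a mutual-information quantity. Here is a minimal sketch of a plug-in estimate from discrete samples, assuming the activations have already been binned into discrete values. It is illustrative only and not necessarily the estimator Shwartz-Ziv and Tishby used; the helper name `mutual_information` and the toy data are hypothetical.

```python
from collections import Counter
from math import log2


def mutual_information(pairs):
    """Plug-in estimate of I(A;B), in bits, from a list of (a, b) samples.

    Assumes a and b are already discrete (e.g. binned activations).
    """
    n = len(pairs)
    joint = Counter(pairs)
    count_a = Counter(a for a, _ in pairs)
    count_b = Counter(b for _, b in pairs)
    total = 0.0
    for (a, b), c in joint.items():
        p_ab = c / n
        p_a = count_a[a] / n
        p_b = count_b[b] / n
        total += p_ab * log2(p_ab / (p_a * p_b))
    return total


if __name__ == "__main__":
    # Toy setup (hypothetical): inputs X uniform over {0,1,2,3}, label Y = x % 2,
    # and a "layer" T that keeps only x % 2.
    xs = [0, 1, 2, 3]
    ys = [x % 2 for x in xs]
    ts = [x % 2 for x in xs]
    print("I(X;T) =", mutual_information(list(zip(xs, ts))))  # 1 of X's 2 bits kept
    print("I(T;Y) =", mutual_information(list(zip(ts, ys))))  # all 1 bit about Y kept
```

In this toy case T throws away half of what is in X yet keeps everything needed to predict Y, which is the kind of compression the bottleneck bound describes.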

However:

For instance, Lake said the fitting and compression phases that Tishby identified don’t seem to have analogues in the way children learn handwritten characters, which he studies. Children don’t need to see thousands of examples of a character and compress their mental representation over an extended period of time before they’re able to recognize other instances of that letter and write it themselves. In fact, they can learn from a single example. Lake and his colleagues’ models suggest the brain may deconstruct the new letter into a series of strokes — previously existing mental constructs — allowing the conception of the letter to be tacked onto an edifice of prior knowledge. “Rather than thinking of an image of a letter as a pattern of pixels and learning the concept as mapping those features” as in standard machine-learning algorithms, Lake explained, “instead I aim to build a simple causal model of the letter,” a shorter path to generalization.

On 'deconstructing' letterforms into strokes, see the work of Mark Changizi [1].

For a technical account of this work, see Ravid Shwartz-Ziv and Naftali Tishby, Opening the black box of Deep Neural Networks via Information: https://arxiv.org/pdf/1703.00810.pdf

[1] Mark A. Changizi, Qiong Zhang, Hao Ye, and Shinsuke Shimojo, The Structures of Letters and Symbols throughout Human History Are Selected to Match Those Found in Objects in Natural Scenes, The American Naturalist, vol. 167, no. 5, May 2006

http://www.journals.uchicago.edu/doi/pdf/10.1086/502806

Cheap criticism & cheap defense: Can machines think?

Searle’s Chinese room argument is one of the best-known thought experiments in analytic philosophy. The point of the argument as I remember it (you can google it) is that computers can’t think because they lack intentionality. I read it when Searle published it in Behavioral and Brain Sciences back in the Jurassic Era and thought to myself: So what? It’s not that I thought that computers really could think, someday, maybe – because I didn’t – but that Searle’s argument didn’t so much as hint at any of the techniques used in AI or computational linguistics. It was simply irrelevant to what investigators were actually doing.

That’s what I mean by cheap criticism.

But then it seems to me that, for example, Dan Dennett’s staunch defense of the possibility of computers thinking is cheap in the same way. I’m sure he’s read some of the technical literature, but he doesn’t seem to have taken any of those ideas on board. He’s not internalized them. Whatever his faith in machine thought is based on, it’s not based on the techniques investigators on the matter have been using or on extrapolations from those techniques. That makes his faith as empty as Searle’s doubt.

So, if these guys aren’t arguing about specific techniques, what ARE they arguing about? Inanimate vs. animate matter? Because it sure can’t be spirit vs. matter, or can it?

I think like a Pirahã (What's REAL vs. real)

Something I'd recently posted to Facebook.

I just realized that in one interesting aspect, I think like a Pirahã. I’m thinking about their response to Daniel Everett’s attempts to teach the Christian Gospel:

Pirahã: “This Jesus fellow, did you ever meet him?”

Everett: “No.”

Pirahã: “Do you know someone who did?”

Everett: “Um, no.”

Pirahã: “Then you don’t know that he’s real.”

As far as the Pirahã are concerned, if you haven't seen it yourself, or don't know someone who has, then it's not REAL (upper case).

This recognition of the REAL takes a somewhat different form for me. After all, I recognize the reality (lower case) of lots of things of which I have no direct experience and don't know anyone who has. Thus, to give but one example, I've not set foot on the moon and I don't know anyone who has. But I don't believe that the moon landings were faked. Yada yada.

But I’ve been thinking about the REAL for a while. One example, the collapse of the Soviet Union in the last decade of the millennium. For someone born in 1990, say, that’s just something they read about in history books. They know it’s real, and they know it’s important. But it just doesn’t have the “bite” that it does for someone, like me, who grew up in the 1950s when the Cold War was raging. It’s not simply events that appeared in newspapers and on TV, it’s seeing Civil Defense markers on buildings designated as fall-out shelters, doing duck-and-cover drills in school, reading about home fall-out shelters in Popular Mechanics and picking a spot in the backyard where we should build one. I fully expected to live in the shadow of the Soviet Union until the day I died. So when it finally collapsed – after considerable slacking off in the Cold War – that was a very big deal. It’s REAL for me in a way that it can’t be for someone born in 1990 or after (actually, that date’s probably a bit earlier than that).

[Yeah, I know, I didn't see it with my own eyes. But then is something like the Soviet Union something you can see? Sure, you can see the soil and the buildings, etc. But they're not the Soviet Union, nor are the people. The USSR is an abstract entity. And I can reasonably say that I witnessed the collapse of that abstract entity in a way that younger people have not. That makes it REAL. Or should that be REALreal? It's complicated.]

This sense of REALness is intuitive. And I’d think it is in fact quite widespread in the literate world, but mostly overwhelmed by “book learnin’”.

Another example. Just the other day I read a suite of articles in Critical Inquiry (an initial article, 5 comments in a later issue, and a reply to comments). It was about the concept of form in literary criticism, which is very important, but also very fuzzy and much contested. What struck me is that, as far as I can tell, only one of the people involved is old enough to have been thinking about literary criticism at the time when structuralism (a variety of thinkers including Lévi-Strauss and, of course, Roman Jakobson) and linguistics (Chomsky+) were something people read about and took seriously, as in: “Maybe we ought to use some of this stuff.” That phase ran from roughly the mid-1960s to the mid-1970s. Any scholar entering their 20s in, say, 1980 and after would think of structuralism as something in the historical past, as something the profession had considered and rejected. Over and done with.

For those thinkers structuralism and linguistics aren’t REAL in the sense I’m talking about. They know that work was done and that some of it was important; they’re educated in the history of criticism. They know that linguistics continues on, and they’ve probably heard about the recursion debates. But they’ve never even attempted to internalize any of that as a mode of thinking they could employ. It’s just not REAL to them.

Why is this important in the context of that Critical Inquiry debate? Because linguists have a very different sense of form than literary critics do. The spelling’s the same, but the idea is not. Yet literary critics are dealing with language.

A brief note on interpretation as translation

I’ve come to think of interpretation as a kind of translation, and translation doesn’t use description. When you translate from, say, Japanese into English, you don’t first describe the Japanese utterance/text and then make the translation based on that description. You make the translation directly. So it is with interpretation. I’ve come to think of the devices used to make the source text present in the critical text (quotation, summary, paraphrase) as more akin to observations than descriptions. Of course, we also have a descriptive vocabulary, the terms of versification, rhetoric, narratology, poetics, and others, but that’s all secondary.

Hence the longstanding practice of eliding the distinction between “reading” in the ordinary sense of the word and “reading” as a term of art for interpretive commentary. We like to pretend that this often elaborate secondary construction is, after all, but reading. Even after all the debate over not having immediate access to the text we still like to pretend that we’re just reading the text. Do we know what we’re doing? Blindness and insight, or the blind leading the blind?

Thursday, September 21, 2017

The effects of choir & solo singing

Front. Hum. Neurosci., 14 September 2017 | https://doi.org/10.3389/fnhum.2017.00430

Choir versus Solo Singing: Effects on Mood, and Salivary Oxytocin and Cortisol Concentrations

T. Moritz Schladt, Gregory C. Nordmann, Roman Emilius, Brigitte M. Kudielka, Trynke R. de Jong and Inga D. Neumann

Abstract: The quantification of salivary oxytocin (OXT) concentrations emerges as a helpful tool to assess peripheral OXT secretion at baseline and after various challenges in healthy and clinical populations. Both positive social interactions and stress are known to induce OXT secretion, but the relative influence of either of these triggers is not well delineated. Choir singing is an activity known to improve mood and to induce feelings of social closeness, and may therefore be used to investigate the effects of positive social experiences on OXT system activity. We quantified mood and salivary OXT and cortisol (CORT) concentrations before, during, and after both choir and solo singing performed in a randomized order in the same participants (repeated measures). Happiness was increased, and worry and sadness as well as salivary CORT concentrations were reduced, after both choir and solo singing. Surprisingly, salivary OXT concentrations were significantly reduced after choir singing, but did not change in response to solo singing. Salivary OXT concentrations showed high intra-individual stability, whereas salivary CORT concentrations fluctuated between days within participants. The present data indicate that the social experience of choir singing does not induce peripheral OXT secretion, as indicated by unchanged salivary OXT levels. Rather, the reduction of stress/arousal experienced during choir singing may lead to an inhibition of peripheral OXT secretion. These data are important for the interpretation of future reports on salivary OXT concentrations, and emphasize the need to strictly control for stress/arousal when designing similar experiments.

What interests you, or: How’d things get this way in lit crit?

This isn’t going to be another one of those long-form posts where I delve into the history of academic literary criticism in the United States since World War II. I’ve done enough of that, at least for a while [1]. I’m going to assume that account.

Rather, I want to start with the individual scholar, even before they become a scholar. Why would someone want to become a professional literary scholar? Because they like to read, no? So, you take literature courses and you do the work you’re taught how to do. If you really don’t like that work, then you won’t pursue a professional degree [2]. You’ll continue to read in your spare time and you’ll study something else.

If those courses teach you how to search for hidden meanings in texts, whether in the manner of so-called close reading or, more recently, the various forms of ideological critique, that’s what you’ll do. If those courses don’t teach you how to analyze and describe form, then you won’t do that. The fact is, beyond versification (which is, or at least once was, taught in secondary school), form is hard to see.

Some years ago Mark Liberman had a post at Language Log which speaks to that [3]. He observes that it’s difficult for students to analyze sentences into component strings:

But when I first started teaching undergraduate linguistics, I learned that just explaining the idea in a lecture is not nearly enough. Without practice and feedback, a third to a half of the class will miss a generously-graded exam question requiring them to use parentheses, brackets, or trees to indicate the structure of a simple phrase like "State Department Public Relations Director".

In that example Liberman is looking for something like this: [(State Department) ((Public Relations) Director)].
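Liberman’s bracketing can be made explicit as a nested pair structure. The minimal Python sketch below is illustrative only: the names `bracket`, `leaves`, and `head` are hypothetical, it uses round brackets throughout, and `head` assumes English noun compounds are right-headed. It shows that the same string of words can carry different structures, which is part of why form is hard to see.

```python
from typing import List, Tuple, Union

# A constituent is either a single word or a pair of smaller constituents.
Tree = Union[str, Tuple["Tree", "Tree"]]


def bracket(tree: Tree) -> str:
    """Render the constituent structure with nested parentheses."""
    if isinstance(tree, str):
        return tree
    left, right = tree
    return f"({bracket(left)} {bracket(right)})"


def leaves(tree: Tree) -> List[str]:
    """Return the flat string of words: all that is visible on the page."""
    if isinstance(tree, str):
        return [tree]
    left, right = tree
    return leaves(left) + leaves(right)


def head(tree: Tree) -> str:
    """Follow right branches down to a word (assumes right-headed compounds)."""
    while not isinstance(tree, str):
        tree = tree[1]
    return tree


# Liberman's intended structure for "State Department Public Relations Director".
phrase = (("State", "Department"), (("Public", "Relations"), "Director"))
# Another possible bracketing of the very same words.
alternative = ((("State", "Department"), ("Public", "Relations")), "Director")

print(bracket(phrase))       # ((State Department) ((Public Relations) Director))
print(bracket(alternative))  # (((State Department) (Public Relations)) Director)
print(leaves(phrase) == leaves(alternative))  # True: same words, different form
```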

Well, such analysis, which is central to the analysis of literary form (as I conceive and practice it), is difficult above the sentence level as well. If you aren’t taught how to do it, chances are you won’t try to figure it out yourself. Moreover, you may not even suspect that there’s something there to be described.

What we’ve got so far, then, is this: 1) Once you decide to study literary criticism professionally, you learn what you’re told. 2) It’s difficult to learn anything outside the prescribed path. There’s nothing surprising here, is there? Every discipline is like that.

Let’s go back to the history of the discipline, to a time when critics didn’t automatically learn to search out hidden meanings in texts, to interpret them. Without that pre-existing bias wouldn’t it have been at least possible that critics would have decided to focus on the description of form? And some did, in a limited way – I’m thinking of the Russian Formalists and their successors.

Still, formal analysis is difficult, and what’s it get you? Formal analysis, that’s what. The possibility of formal analysis is likely not what attracts anyone to literature, not now, not back then. You’re attracted to literary study because you like to read, and your reading is about love, war, beauty, pain, joy, suffering, life, the world, and the cosmos! THAT’s what you want to write about, not form.

And, sitting right there, off to the side, we’ve got a long history of Biblical hermeneutics stretching back to the time before Christianity differentiated from Judaism. Why not refit that for the study of meaning in literary texts? Now, I don’t think that’s quite what happened – the refitting of Biblical exegesis to secular ends – but that tradition was there exerting its general influence on the humanistic landscape. Between that and the ‘natural’ focus of one’s interest in literature, the search for literary meaning was a natural.

So that’s what the discipline did. And now it’s stuck and doesn’t know what to do.

More later.

References

[1] See, for example, the following working papers: Transition! The 1970s in Literary Criticism, https://www.academia.edu/31012802/Transition_The_1970s_in_Literary_Criticism

An Open Letter to Dan Everett about Literary Criticism, June 2017, 24 pp. https://www.academia.edu/33589497/An_Open_Letter_to_Dan_Everett_about_Literary_Criticism

[2] I figure we’ve all got our preferred intellectual styles. Some of us like math, some don’t and so forth. Take a look at this post: Style Matters: Intellectual Style, March 18, 2017, https://new-savanna.blogspot.com/2012/06/style-matters-intellectual-style.html

I make the following assertions:

1.) In anyone’s intellectual ecology, style preferences are deeper and have more inertia than explicit epistemological beliefs.

2.) Some of the pigheadedness that often crops up in discussions about humanities vs. science is grounded in stylistic preference that gets rationalized as epistemological belief.

[3] Mark Liberman, Two brews, Language Log, February 6, 2010, http://languagelog.ldc.upenn.edu/nll/?p=2100

See also my blog post quoting Liberman’s post,

Form is Hard to See, Even in Sentences*, November 29, 2015, http://new-savanna.blogspot.com/2015/11/form-is-hard-to-see-even-in-sentences.html

The origins of (the concept of) world literature

On the afternoon of 31 January 1827, a new vision of literature was born. On that day, Johann Peter Eckermann, faithful secretary to Johann Wolfgang von Goethe, went over to his master’s house, as he had done hundreds of times in the past three and a half years. Goethe reported that he had been reading Chinese Courtship (1824), a Chinese novel. ‘Really? That must have been rather strange!’ Eckermann exclaimed. ‘No, much less so than one thinks,’ Goethe replied.

A surprised Eckermann ventured that this Chinese novel must be exceptional. Wrong again. The master’s voice was stern: ‘Nothing could be further from the truth. The Chinese have thousands of them, and had them when our ancestors were still living in the trees.’ Then Goethe reached for the term that stunned his secretary: ‘The era of world literature is at hand, and everyone must contribute to accelerating it.’ World literature – the idea of world literature – was born out of this conversation in Weimar, a provincial German town of 7,000 people.

Later: "World literature originated as a solution to the dilemma Goethe faced as a provincial intellectual caught between metropolitan domination and nativist nationalism."

And then we have a passage from The Communist Manifesto (1848):

In a stunning paragraph from that text, the two authors celebrated the bourgeoisie for their role in sweeping away century-old feudal structures:

By exploiting the world market, the bourgeoisie has made production and consumption a cosmopolitan affair. To the annoyance of its enemies, it has drawn from under the feet of industry the national ground on which it stood. … These industries no longer use local materials but raw materials drawn from the remotest zones, and its products are consumed not only at home, but in every quarter of the globe. … In place of the old local and national seclusion and self-sufficiency, we have commerce in every direction, universal interdependence of nationals. And as in material so also in intellectual production. The intellectual creations of individual nations become common property. National one-sidedness and narrow-mindedness become increasingly impossible, and from the numerous national and local literatures there arises a world literature.

World literature. To many contemporaries, it would have sounded like a strange term to use in the context of mines, steam engines and railways. Goethe would not have been surprised. Despite his aristocratic leanings, he knew that a new form of world market had made world literature possible.

Rolling along:

Ever since Goethe, Marx and Engels, world literature has rejected nationalism and colonialism in favour of a more just global community. In the second half of the 19th century, the Irish-born critic Hutcheson Macaulay Posnett championed world literature. Posnett developed his ideas of world literature in New Zealand. In Europe, the Hungarian Hugó Meltzl founded a journal dedicated to what he described as the ‘ideal’ of world literature.

In India, Rabindranath Tagore championed the same idealist model of world literature. Honouring the Ramayana and the Mahabharata, the two great Indian epics, Tagore nevertheless exhorted readers to think of literature as a single living organism, an interconnected whole without a centre. Having lived under European colonialism, Tagore saw world literature as a rebuke to colonialism.

After World War II:

In the US, world literature took up residence in the booming post-war colleges and universities. There, the expansion of higher education in the wake of the GI Bill helped world literature to find a home in general education courses. In response to this growing market, anthologies of world literature emerged. Some of Goethe’s favourites, such as the Sanskrit play Shakuntala, the Persian poet Hafez and Chinese novels, took pride of place. From the 1950s to the ’90s, world literature courses expanded significantly, as did the canon of works routinely taught in them. World literature anthologies, which began as single volumes, now typically reach some 6,000 pages. The six-volume Norton Anthology of World Literature (3rd ed, 2012), of which I am the general editor, is one of several examples.

In response to the growth of world literature over the past 20 years, an emerging field of world literature research including sourcebooks and companions have created a scholarly canon, beginning with Goethe, Marx and Engels and through to Tagore, Auerbach and beyond. The World Literature Institute at Harvard University, headed by the scholar David Damrosch, spends two out of three summers in other locations.

And now:

Today, with nativism and nationalism surging in the US and elsewhere, world literature is again an urgent and political endeavour. Above all, it represents a rejection of national nativism and colonialism in favour of a more humane and cosmopolitan order, as Goethe and Tagore had envisioned. World literature welcomes globalisation, but without homogenisation, celebrating, along with Ravitch, the small, diasporic literatures such as Yiddish as invaluable cultural resources that persevere in the face of prosecution and forced migration.

There is no denying that world literature is a market, one in which local and national literatures can meet and transform each other. World literature depends, above all, on circulation. This means that it is incompatible with efforts to freeze or codify literature into a set canon of metropolitan centres, or of nation states, or of untranslatable originals. True, the market in world literature is uneven and not always fair. But the solution to this problem is not less circulation, less translation, less world literature. The solution is a more vibrant translation culture, more translations into more languages, and more world literature education.

The free circulation of literature is the best weapon against nationalism and colonialism, whether old or new, because literature, even in translation, gives us unique access to different cultures and the minds of others.

Wednesday, September 20, 2017

African Music in the World

Another working paper available at Academia.edu: https://www.academia.edu/34610738/African_Music_in_the_World

Title above, abstract, table of contents, and introduction below.

* * * * *

Abstract: Sometime in the last million years or so a band of exceedingly clever apes began chanting and dancing, probably somewhere in East Africa, and thereby transformed themselves into the first humans. We are all cultural descendants of this first African musicking and all music is, in a genealogical sense, African music. More specifically, as a consequence of the slave trade, African music moved from Africa to the Americas, where it combined with other forms of music, both European and indigenous. These hybrids moved to the rest of the world, including back to Africa, which re-exported them.

Canceling Stamps

African Music

The Caribbean and Latin America

Black and White in the USA

Afro-Pop

Future Tense

Acknowledgements

References

Canceling Stamps

In 1975 an ethnographer recorded music made by postal workers while canceling stamps in the University of Ghana post office (Locke 1996, 72-78). One, and sometimes two, would whistle a simple melody while others played simple interlocking rhythms using scissors, inkpad, and the letters themselves. The scissors rhythm framed the pattern in much the way that bell rhythms do in a more conventional percussion choir.

Given the instrumentation and the occasion, I hesitate to categorize this music as traditional; but the principles of construction are, for all practical purposes, as old as dirt. What is, if anything, even more important is that this use of music is thoroughly sanctioned by tradition. These men were not performing music for the pleasure and entertainment of a passive audience. Their musicking—to use a word coined by Christopher Small (1998)—served to assimilate their work to the rhythms of communal interaction, thus transforming it into an occasion for affirming their relationships with one another.

That, so I’ve argued at some length (Benzon 2001), is music’s basic function, to create human community. Sometime in the last million years or so a band of exceedingly clever apes began chanting and dancing, probably somewhere in East Africa, and thereby transformed themselves into the first humans. We are all cultural descendants of this first African musicking and all music is, in a genealogical sense, African music. That sense is, of course, too broad for our purposes, but it is well to keep it in mind as we contemplate Africa’s possible futures.

Tuesday, September 19, 2017

Jakobson’s poetic function as a computational principle, on the trail of the human mind

Not so long ago I argued that Jakobson’s poetic function could be extended beyond the examples he gave, which came from poetry, to other formal features, such as ring composition [1]. I now want to suggest that it is a computational principle as well. What do I mean by computation [2]? That’s always a question in these discussions, isn’t it?

When Alan Turing formalized the idea of computation he did so with the notion of a so-called Turing Machine [3]: “The machine operates on an infinite memory tape divided into discrete cells. The machine positions its head over a cell and ‘reads’ (scans) the symbol there.” There’s more to it than that, but that’s all we need here. It’s that tape that interests me, the one with discrete cells, each containing a symbol. Turing defined computation as an operation on the contents of those cells. Just what kind of symbols we’re dealing with is irrelevant as long as the basic rules governing their use are well-specified. The symbols might be numerals and mathematical operators, but they might also be the words and punctuation marks of a written language.
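Here is a minimal sketch of that tape-and-head picture in Python. The transition table `FLIP_RULES` is a hypothetical toy (it flips 0s and 1s and halts at the first blank), and `run_turing_machine` is an illustrative helper, not a standard library function. Note that the machine never cares what the symbols mean: any hashable values, words included, could sit in the cells.

```python
from collections import defaultdict

BLANK = "_"

# Hypothetical toy transition table:
# (state, symbol read) -> (symbol to write, head move, next state).
# This machine walks right, flipping 0 <-> 1, and halts at the first blank cell.
FLIP_RULES = {
    ("flip", "0"): ("1", "R", "flip"),
    ("flip", "1"): ("0", "R", "flip"),
    ("flip", BLANK): (BLANK, "R", "halt"),
}


def run_turing_machine(rules, symbols, start, halt, max_steps=10_000):
    """Run a one-tape machine and return the non-blank stretch of the tape."""
    # Unwritten cells read as blank, so the tape is unbounded in both directions.
    tape = defaultdict(lambda: BLANK, enumerate(symbols))
    head, state, steps = 0, start, 0
    while state != halt:
        if steps == max_steps:
            raise RuntimeError("no halt within max_steps")
        # Read the scanned cell, then write, move, and change state.
        write, move, state = rules[(state, tape[head])]
        tape[head] = write
        head += 1 if move == "R" else -1
        steps += 1
    used = [i for i, s in tape.items() if s != BLANK]
    return "".join(tape[i] for i in range(min(used), max(used) + 1)) if used else ""


print(run_turing_machine(FLIP_RULES, "0110", "flip", "halt"))  # 1001
```

The computation is nothing but reading and rewriting the contents of discrete cells according to a fixed table, which is the feature of Turing’s formulation that matters here.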

Linguists frequently refer to strings; an utterance is a string of phonemes, or morphemes, or words, depending on what you’re interested in. Of course it doesn’t have to be an utterance; the string can consist of a written text. What’s important is that it’s a string.

Well, Jakobson’s poetic function places restrictions on the arrangement of words on the string, restrictions independent of those made by ordinary syntax. Here’s Jakobson’s definition [4]:

The poetic function projects the principle of equivalence from the axis of selection into the axis of combination. Equivalence is promoted to the constitutive device of the sequence.

The sequence, of course, is our string. As for the rest of it, that’s a bit obscure. But it’s easy to see how things like meter and rhyme impose restrictions on the composition of strings. Jakobson has other examples and I give a more careful account of the restriction in my post, along with the example of ring composition [1]. Moreover, in a working paper on ring composition, I have already pointed out how the seven rules Mary Douglas gave for characterizing ring composition can be given a computational interpretation [5, pp. 39-42].
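To make “restrictions on the composition of strings” concrete, here is a toy sketch of two such checks, one for a rhyme scheme and one for a bare ring (mirror) pattern. It is an assumption-laden illustration, not Jakobson’s definition and not Douglas’s seven rules: the function names are hypothetical, `rhyme_key` is a crude spelling-based stand-in for phonology, and `is_ring` only tests mirror symmetry of section labels.

```python
def rhyme_key(line, n=2):
    """Crude, hypothetical stand-in for phonology: last n letters of the final word."""
    word = line.rstrip(".,;:!?").split()[-1].lower()
    return word[-n:]


def fits_scheme(lines, scheme):
    """Check lines against a scheme like 'ABAB': same letter means same rhyme key,
    different letters mean different keys."""
    if len(lines) != len(scheme):
        return False
    keys = {}
    for line, letter in zip(lines, scheme):
        key = rhyme_key(line)
        if letter in keys and keys[letter] != key:
            return False
        keys.setdefault(letter, key)
    return len(set(keys.values())) == len(keys)


def is_ring(sections):
    """True if the sequence of section labels mirrors itself (A B C B A),
    allowing one unpaired centre element."""
    return all(sections[i] == sections[-1 - i] for i in range(len(sections) // 2))


STANZA = ["The sun went down", "Upon the day", "Across the town", "They went away"]

print(fits_scheme(STANZA, "ABAB"))         # True
print(fits_scheme(STANZA, "AABB"))         # False
print(is_ring(["A", "B", "C", "B", "A"]))  # True
```

In both checks an equivalence (same rhyme, same section label) is imposed on positions in the sequence, independently of syntax, which is roughly what projecting equivalence onto the axis of combination amounts to.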

* * * * *

LitCrit: Getting my bearings, the lay of the land

Another quick take, just a place filler.

I’ve been playing around with this chart. Nothing’s set in stone. Terms are likely to change (especially the first column) and move about, and I may add another line, etc.

Observe the Text | Translation/Interpretation |

Object of Observation | Meaning |

Grounding Metaphor | Space (inside, outside, surface, etc.) |

Source of Agency | Human Subject | Psychological Mechanisms

For the Agent | Advice/How do we live? | Explanation/How do things work?

The point, of course, is that ethical and naturalist criticism are different enterprises, requiring different methods, different epistemologies, and different philosophical accounts. The discipline (literary criticism) as it currently exists mixes the two and is skewed toward ethical criticism. Ethical criticism addresses itself to the human subject, which is why it is all but forced to employ the thin spatial metaphors of standard criticism and why it must distance itself from the explicit (computational) mechanisms of linguistics and of the newer psychologies. That is also why, despite the importance of the concept of form, it has no coherent conception of form and cannot, or will not, describe formal features of texts beyond those typical of formal poetry and a few others.

The recent Critical Inquiry mini-symposium [1] inevitably mixes the two but is, of course, biased toward ethical criticism (without, however, proclaiming its ethical nature). All contributions assume the standard spatial metaphors; for them the world of the newer psychologies doesn’t exist, much less that of linguistics (psychological mechanisms and computation in the above chart). Post-structuralism/post-modernism is the (tacitly) assumed disciplinary starting point. My guess is that, except for Marjorie Levinson [2], none of the participants is old enough to remember when structuralism was a viable option. Linguistics, cognitive science, etc. simply aren’t real for most of these scholars. They belong over there, where those others can deal with them.

It is strange, and a bit sad, to see a discipline centered on texts be so oblivious of language itself and of its study in other disciplines.

As always, more later.

[1] Jonathan Kramnick and Anahid Nersessian, “Form and Explanation,” Critical Inquiry 43 (Spring 2017). Five replies in Critical Inquiry 44 (Autumn 2017).

[2] Marjorie Levinson, “Response to Jonathan Kramnick and Anahid Nersessian, ‘Form and Explanation’,” Critical Inquiry 44 (Autumn 2017).
