Wednesday, November 30, 2022

Monday, November 28, 2022

Sunday, November 27, 2022

Saudi Arabia, soup to nuts, some informative notes

Matt Lakeman, Notes on Saudi Arabia, November 22, 2022

This essay is my attempt to explain what has been happening in Saudi Arabia over the last five years, and why. To do so, I have to go all the way back to SA’s origins and follow the throughline to the present day. My understanding presented here comes from my own experiences in the country, Wikipedia, a bunch of random online articles and statistics, and two main sources:

David Rundell – a former American diplomat to Saudi Arabia for 30 years, and the author of Vision or Mirage: Saudi Arabia at the Crossroads.

Graeme (pronounced “gram”) Wood – a journalist who has traveled around Saudi Arabia and got rare interviews with Mohammad Bin Salman, the Crown Prince of Saudi Arabia and current de facto ruler. He wrote Absolute Power and a few other articles on SA for the Atlantic, plus did a podcast with Sam Harris which covers most of the same territory but has some good color commentary. Wood is an amazing journalist and basically does my dream job.

Major Topics:

Overview of Saudi Arabia

Politics

Economy

Saudi Military

Terrorism

The Quiet Political Revolution

Vision 2030 [including Neom]

The Case Against MBS and Vision 2030

Visiting Saudi Arabia

Miscellaneous

Friday, November 25, 2022

Meta announces CICERO, an AI that plays Diplomacy

Meta AI presents CICERO — the first AI to achieve human-level performance in Diplomacy, a strategy game which requires building trust, negotiating and cooperating with multiple players.

Learn more about #CICERObyMetaAI: https://t.co/hG2R0T2HZx pic.twitter.com/IGw4RObA8n

— Meta AI (@MetaAI) November 22, 2022

#CICERObyMetaAI generates strategic, free-form dialogue by:

🔮 Predicting the moves other players are likely to make

🔄 Creating a plan based on those predictions

💬 Generating messages

🤖 Filtering the final messages for clarity and value

Read more on our blog ⬇️

— Meta AI (@MetaAI) November 23, 2022
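To make the four steps in that tweet concrete, here is a toy sketch of a predict, plan, generate, filter pipeline. It is not Meta's code and says nothing about how Cicero is actually built; every name in it (`Message`, `predict_moves`, `make_plan`, `generate_messages`, `filter_messages`, `run_pipeline`) and the sample probabilities are hypothetical, chosen only to show how the stages could hand results to one another.

```python
from dataclasses import dataclass


@dataclass
class Message:
    recipient: str
    text: str
    intent: str  # the planned action this draft is supposed to convey


def predict_moves(options):
    """Step 1 (toy): take each player's most probable move as the prediction."""
    return {player: max(moves, key=moves.get) for player, moves in options.items()}


def make_plan(predictions):
    """Step 2 (toy): attach one intended response to each predicted move."""
    return {player: f"respond to {move}" for player, move in predictions.items()}


def generate_messages(plan):
    """Step 3 (toy): draft two candidate messages per player, one specific, one vague."""
    drafts = []
    for player, intent in plan.items():
        drafts.append(Message(player, f"I will {intent}.", intent))
        drafts.append(Message(player, "Let's see what happens.", intent))
    return drafts


def filter_messages(drafts):
    """Step 4 (toy): keep only drafts that actually state the intended action."""
    return [d for d in drafts if d.intent in d.text]


def run_pipeline(options):
    """Chain the four stages in the order the tweet lists them."""
    return filter_messages(generate_messages(make_plan(predict_moves(options))))


# Hypothetical input: each player's candidate moves with made-up probabilities.
options = {
    "FRANCE": {"move to Burgundy": 0.7, "hold": 0.3},
    "ITALY": {"hold": 0.6, "move to Tyrolia": 0.4},
}
final = run_pipeline(options)
for message in final:
    print(message.recipient, "->", message.text)
```

Even in this toy, each stage is a separate hand-written module with its own job, which is the structural feature Marcus and Davis emphasize in their evaluation.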

Gary Marcus and Ernest Davis have posted an interesting evaluation of Cicero: What does Meta AI’s Diplomacy-winning Cicero Mean for AI? [Hint: It’s not all about scaling]

First:

The first thing to realize is that Cicero is a very complex system. Its high-level structure is considerably more complex than systems like AlphaZero, which mastered Go and chess, or GPT-3 which focuses purely on sequences of words. Some of that complexity is immediately apparent in the flowchart; whereas a lot of recent models are something like data-in, action out, with some kind of unified system (say a Transformer) in between, Cicero is heavily prestructured, in advance of any learning or training, with a carefully-designed bespoke architecture that is divided into multiple modules and streams, each with their own specialization.

A marvel, but...

Cicero is in many ways a marvel; it has achieved by far the deepest and most extensive integration of language and action in a dynamic world of any AI system built to date. It has also succeeded in carrying out complex interactions with humans of a form not previously seen.

But it is also striking in how it does that. Strikingly, and in opposition to much of the Zeitgeist, Cicero relies quite heavily on hand-crafting, both in the data sets, and in the architecture; in this sense it is in many ways more reminiscent of classical “Good Old Fashioned AI” than deep learning systems that tend to be less structured, and less customized to particular problems. There is far more innateness here than we have typically seen in recent AI systems

Also, it is worth noting that some aspects of Cicero use a neurosymbolic approach to AI, such as the association of messages in language with symbolic representation of actions, the built-in (innate) understanding of dialogue structure, the nature of lying as a phenomenon that modifies the significance of utterances, and so forth.

That said, it’s less clear to us how generalizable the particulars of Cicero are.

In sum:

Cicero makes extensive use of machine learning, but is hardly a poster child for simply making ever bigger models (so-called “scaling maximalism”), nor for the currently popular view of “end-to-end” machine learning in which some single general learning algorithm applies across the board, with little internal structure and zero innate knowledge. At execution time, Cicero consists of a complex array of separate hand-crafted modules with complex interactions. At training time, it draws on a wide range of training materials, some built by experts specifically for Cicero, some synthesized in programs hand-crafted by experts. [...]

Our final takeaway? We have known for some time that machine learning is valuable; but too often nowadays ML is taken as a universal solvent—as if the rest of AI was irrelevant—and left to do everything on its own. Cicero may change that calculus. If Cicero is any guide, machine learning may ultimately prove to be even more valuable if it is embedded in highly structured systems, with a fair amount of innate, sometimes neurosymbolic machinery.

There's much more in their article.

Thursday, November 24, 2022

From cognitive maps to spatial schemas

Indeed a really important contribution that @DelaFarzanfar @RosenbaumLab and I cover in Fig 2 of our new review on spatial schemas: https://t.co/f8kzodrcNh pic.twitter.com/oPvcgGbfSH

— Prof Hugo Spiers (@hugospiers) November 24, 2022

Abstract of the linked article:

A schema refers to a structured body of prior knowledge that captures common patterns across related experiences. Schemas have been studied separately in the realms of episodic memory and spatial navigation across different species and have been grounded in theories of memory consolidation, but there has been little attempt to integrate our understanding across domains, particularly in humans. We propose that experiences during navigation with many similarly structured environments give rise to the formation of spatial schemas (for example, the expected layout of modern cities) that share properties with but are distinct from cognitive maps (for example, the memory of a modern city) and event schemas (such as expected events in a modern city) at both cognitive and neural levels. We describe earlier theoretical frameworks and empirical findings relevant to spatial schemas, along with more targeted investigations of spatial schemas in human and non-human animals. Consideration of architecture and urban analytics, including the influence of scale and regionalization, on different properties of spatial schemas may provide a powerful approach to advance our understanding of spatial schemas.

Whatever happened to work? [hard-core no more]

While Musk is exhorting the remaining employees at Twitter to work "hard core," many Americans are rethinking the idea of work: Jessica Bennett, "The Worst Midnight Email From the Boss, Ever," NYTimes, Nov. 23, 2022. From the article:

Even before the pandemic, many white-collar Americans were starting to rethink their relationships to work. Persistent income inequality, enduring racial and gender discrimination, disillusionment with the capitalist promise — “hustle culture” was a catchy slogan, but was any of this really worth it?

These days, the rise-and-grind mentality of just a couple of years ago has been replaced by sleeping in. (Rest is resistance — haven’t you heard?) There are regular headlines about our collective revolt against the cult of ambition, and “quiet quitting,” the catchy phrase to describe doing the bare minimum at work (or, you know, just treating it like a job), apparently describes half of the U.S. work force, according to a recent Gallup poll. Young people have meme-ified their own antiwork sentiments, proclaiming that they don’t dream of labor to catchy TikTok tunes or on Reddit, with the motto “Unemployment for all, not just the rich.”

And why wouldn’t they? Workplace burnout is a national crisis. According to a recent poll by the research firm Gartner, almost two-thirds of employees said the pandemic had made them question the role work should play in their lives, and the Society for Human Resource Management reports that more than half of American managers leave work feeling exhausted at the end of the day. It’s perhaps no surprise, then, that unionization efforts are underway across the country, aiming not only for higher wages but also for better working conditions overall. [...]

As one TikTok user said, in a quote I’ve been laughing about since reading it in an article in Vox last spring: “I don’t want to be a girlboss. I don’t want to hustle. I simply want to live my life slowly and lay down in a bed of moss with my lover and enjoy the rest of my existence reading books, creating art and loving myself and the people in my life.”

Honestly, yes. “Hard core” is a bygone era of management, not to mention a bygone way of living. As it happens, we’ve now got plenty of other, soft-core interests to replace it. [...]

Maybe what we are witnessing with Twitter’s mass exodus — and the general antiwork sentiment in general — is a labor revolt “in real time,” as one Twitter user put it. None of us want a job in which we are overworked or undervalued, responding to fear or ultimatums, but for many people, that’s what work still is.

Tuesday, November 22, 2022

Bilingual readers possess two distinct visual word form areas (VWFA)

Do bilingual readers possess two distinct visual word form areas (VWFA)?

See our new preprint on the cortical circuits for reading in high-resolution 7 Tesla fMRI with @zhanminye, Christophe Pallier and Laurent Cohen: https://t.co/ThcVDH0mo8 pic.twitter.com/t1AV58gQ3a

— Stanislas Dehaene (@StanDehaene) November 21, 2022

Abstract for the article linked above:

In expert readers, a brain region known as the visual word form area (VWFA) is highly sensitive to written words, exhibiting a posterior-to-anterior gradient of increasing sensitivity to orthographic stimuli whose statistics match those of real words. Using high-resolution 7T fMRI, we ask whether, in bilingual readers, distinct cortical patches specialize for different languages. In 21 English-French bilinguals, unsmoothed 1.2 mm fMRI revealed that the VWFA is actually composed of several small cortical patches highly selective for reading, with a posterior-to-anterior word similarity gradient, but with near-complete overlap between the two languages. In 10 English-Chinese bilinguals, however, while most word-specific patches exhibited similar reading specificity and word-similarity gradients for reading in Chinese and English, additional patches responded specifically to Chinese writing and, surprisingly, to faces. Our results show that the acquisition of multiple writing systems can indeed tune the visual cortex differently in bilinguals, sometimes leading to the emergence of cortical patches specialized for a single language.

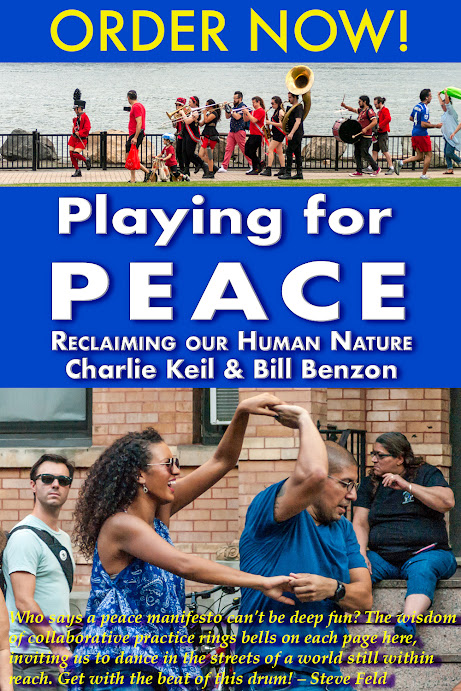

Dance to the Music: The Kids Owned the Day@3QD [dance competition]

In my latest post at 3 Quarks Daily I report on a dance competition where I was hired to photograph the dancers:

Dance to the Music: The Kids Owned the Day

At the end of the day there was a marvelous spontaneous eruption by the young dancers.

This piece is excerpted from the book Charlie Keil and I recently published: Playing for Peace: Reclaiming our Human Nature.

Sunday, November 20, 2022

The Near Future of AI is Action-Driven [+Adept]

Basic thesis is that while generative text is cool, the true power of LLMs will be unlocked by giving them actions they can take in the world. pic.twitter.com/sEi43Vf8tH

— John McDonnell (@johnvmcdonnell) November 15, 2022

And this:

2/7 This can be especially powerful for manual tasks and complex tools — in this example, what might ordinarily take 10+ clicks in Salesforce can be now done with just a sentence. pic.twitter.com/JUVqCZL6mS

— Adept (@AdeptAILabs) September 14, 2022

From Adept's blog post:

Natural language interfaces, powered by action transformers like ACT-1, will dramatically expand what people can do in front of a computer/phone/internet-connected device. A few years from now, we believe:

- Most interaction with computers will be done using natural language, not GUIs. We’ll tell our computer what to do, and it’ll do it. Today’s user interfaces will soon seem as archaic as landline phones do to smartphone users.

- Beginners will become power users, no training required. Anyone who can articulate their ideas in language can implement them, regardless of expertise. Software will become even more powerful as advanced features become accessible to everyone and no longer constrained by the length of a drop-down menu.

- Documentation, manuals, and FAQs will be for models, not for people. No longer will we need to learn the quirky language of every individual software tool in order to be effective at a task. We will never search through forums for “how to do X in Salesforce or Unity or Figma” — the model will do that work, allowing us to focus on the higher-order task at hand.

- Breakthroughs across all fields will be accelerated with AI as our teammate. Action transformers will work with us to bring about advances in drug design, engineering, and more. Collaborating with these models will make us more efficient, energized, and creative.

While we’re excited that these systems can transform what people can do on a computer, we clearly see that they have the potential to cause harm if misused or misaligned with user preferences. Our goal is to build a company with large-scale human feedback at the center — models will be evaluated on how well they satisfy user preferences, and we will iteratively evaluate how well this is working as our product becomes more sophisticated and load-bearing. To combat misuse, we plan to use a combination of machine learning techniques and careful, staged deployment.

Saturday, November 19, 2022

SBF and FTX – "the horror! the horror!" [some links, the future as epistemological 'ground-zero']

- What just happened?

- What happened in the lead-up to this happening?

- Why did all of this happen?

- What is going to happen to those involved going forward?

- What is going to happen to crypto in general?

- Why didn’t we see this coming, or those who did see it speak louder?

- What does this mean for FTX’s charitable efforts and those getting funding?

- What does this mean for Effective Altruism? Who knew what when?

- What if anything does this say about utilitarianism?

- How are we casting and framing the movie Michael Lewis is selling, in which he was previously (it seems) planning on portraying Sam Bankman-Fried as the Luke Skywalker to CZ’s Darth Vader? Presumably that will change a bit.

2.) A plain-English account: Jamie Bartlett, Sam Bankman-Fried's crypto-gold turned to dust, Nov. 18, 2022.

3.) Ross Douthat has an interesting 'moderating' [think of moderating a nuclear reaction] column, The Case for a Less-Effective Altruism (NYT 11.18.22).

4.) Not so long ago I came across an article that sheds light on one of the signal features of this debacle, an intense focus on predicting the future. This is particularly relevant to Effective Altruism's interest in so-called long-termism. The article:

Sun-Ha Hong, Predictions Without Futures, History and Theory, Vol 61, No. 3: July 2022, 1-20. https://onlinelibrary.wiley.com/doi/epdf/10.1111/hith.12269

From page 4:

Notably, today’s society feverishly anticipates an AI “breakthrough,” a moment when the innate force of technological progress transforms society irreversibly. Its proponents insist that the singularity is, as per the name, the only possible future (despite its repeated promise and deferral since the 1960s—that is, for almost the entire history of AI as a research problem). Such pronouncements generate legitimacy through a sense of inevitability that the early liberals sought in “laws of nature” and that the ancien régime sought in the divine. AI as a historical future promises “a disconnection” from past and present, and it cites that departure as the source of the possibility that even the most intractable political problems can be solved not by carefully unpacking them but by eliminating all of their priors. Thus, virtual reality solves the problems with reality merely by being virtual, cryptocurrency solves every known problem with currency by not being currency, and transhumanism solves the problem of people by transcending humanity. Meanwhile, the present and its teething problems are somewhat diluted of reality: there is less need to worry so much about concrete, existing patterns of inequality or inefficiency, the idea goes, since technological breakthroughs will soon render them irrelevant. Such technofutures saturate the space of the possible with the absence of a coherent vision for society.

That paragraph can be taken as a trenchant reading of long-termism, which is obsessed with prediction and, by focusing attention on the needs of people in the future, tends to empty the present of all substance.

Nor does it matter that the predicted technofuture is, at best, highly problematic (p. 7):

In short, the more unfulfilled these technofutures go, the more pervasive and entrenched they become. When Elon Musk claims that his Neuralink AI can eliminate the need for verbal communication in five to ten years [...] the statement should not be taken as a meaningful claim about concrete future outcomes. Rather, it is a dutifully traditional performance that, knowingly or not, reenacts the participatory rituals of (quasi) belief and attachment that have been central to the very history of artificial intelligence. After all, Marvin Minsky, AI’s original marketer, had loudly proclaimed the arrival of truly intelligent machines by the 1970s. The significance of these predictions does not depend on their accurate fulfillment, because their function is not to foretell future events but to borrow legitimacy and plausibility from the future in order to license anticipatory actions in the present.

Here, “the future . . . functions as an ‘epistemic black market.’” The conceit of the open future furnishes a space of relative looseness in what kinds of claims are considered plausible, a space where unproven and speculative statements can be couched in the language of simulations, innovation, and revolutionary duty.

Merger between AT&T and Time Warner failed because of incompatible corporate cultures

James B. Stewart, Was This $100 Billion Deal the Worst Merger Ever? NYTimes, Nov. 19, 2022.

This is a fascinating article, well worth reading. Here are some paragraphs that characterize the cultural difference between the two corporations:

That summer, Mr. Bewkes [Time Warner] accepted Mr. Stephenson’s [AT&T] invitation to address the AT&T board in Dallas. He ended up speaking for two hours, twice his allotted time. For sports-obsessed Texans, he chose a football analogy: AT&T is “a running team. You move slowly down the field, three yards and a cloud of dust. You depend on obedient execution.” By contrast, “we’re a passing team. We may miss two or three times. But when we complete a pass, we’re 50 yards down the line.”

He elaborated that AT&T employed hundreds of thousands of essentially fungible workers. Time Warner was different, with a relatively small number of executives given a high degree of discretion.

“We’re like a platoon fighting a guerrilla war in the jungle,” Mr. Bewkes recalled telling the board. “If you try to replace our team with a regimented army, you’re going to ruin all our network and studio businesses.”

More fundamentally, he warned that AT&T’s main strategy for competing with Netflix and Amazon was flawed: It wanted to funnel as much Warner content as possible to HBO Max.

On the contrary, he believed Time Warner’s strength was it was “like Switzerland,” selling to the highest and most appropriate bidder, both domestically and internationally, Mr. Bewkes contended. That’s why its television studio was so successful. Premium drama could go to HBO. But shows with mass market appeal, like “Friends” and “The Big Bang Theory” were better off at the ad-supported broadcast networks. Hollywood talent was attracted to Time Warner’s neutrality.

There's much more at the link.

Monday, November 14, 2022

An Astronaut

Wrote a little poem. It's for kids. #poem #poetry #kidlit

(Tips: https://t.co/SCJUKxdEgs) pic.twitter.com/X7Pcm1nyq3

— Rolli (@rolliwrites) November 14, 2022

Saturday, November 12, 2022

Calcification and chaos in contemporary American politics [Ezra Klein]

Ezra Klein, “Three Theories that Explain this Strange Moment,” The NYTimes, Nov. 12, 2022.

Calcification (and chaos):

The cause of this calcification is no mystery. As the national parties diverge, voters cease switching between them. That the Republican and Democratic Parties have kept the same names for so long obscures how much they’ve changed. I find this statistic shocking, and perhaps you will, too: In 1952, only 50 percent of voters said they saw a big difference between the Democratic and Republican Parties. By 1984, it was 62 percent. In 2004, it was 76 percent. By 2020, it was 90 percent.

The yawning differences between the parties have made swing voters not just an endangered species, but a bizarre one. How muddled must your beliefs about politics be to shift regularly between Republican and Democratic Parties that agree on so little? [...]

Calcification, on its own, would produce a truly frozen politics. In some states, it does, with effective one-party rule leading to a politics devoid of true accountability or competition. But nationally, political control teeters, election after election, on a knife’s edge. That’s another strange dynamic of our era: Persistent parity between the parties.

The concluding paragraph:

If you were looking for a three-sentence summary of American politics in recent years, I think you could do worse than this: The parties are so different that even seismic events don’t change many Americans’ minds. The parties are so closely matched that even minuscule shifts in the electoral winds can blow the country onto a wildly different course. And even in a time of profound economic dislocation, American politics has become less about which party is good for your wallet and more about whether the cultural changes of the past 50 years delight or dismay you.

There’s more at the link [connecting tissue].

Dreams of artificial people, through history [ontological ambiguity]

From an article by Stephen Marche, “The Imitation of Consciousness: On the Present and Future of Natural Language Processing,” Literary Hub, June 23, 2021.

The article is a general discussion of the title topic. This post is NOT a precis of that discussion. I simply want to highlight a few passages.

AI as existential threat

Engineers and scientists fear artificial intelligence in a way they have not feared any technology since the atomic bomb. Stephen Hawking has declared that “AI could be the worst event in the history of civilization.” Elon Musk, not exactly a technophobe, calls AI “our greatest existential threat.” Outside of AI specialists, people tend to fear artificial intelligence because they’ve seen it at the movies, and it’s mostly artificial general intelligence they fear, machine sentience. It’s Skynet from Terminator. It’s Data from Star Trek. It’s Ex Machina. It’s Her. But artificial general intelligence is as remote as interstellar travel; its existence is imaginable but not presently conceivable. Nobody has any idea what it might look like. Meanwhile, the artificial intelligence of natural language processing is arriving. In January, 2021, Microsoft filed a patent to reincarnate people digitally through distinct voice fonts appended to lingual identities garnered from their social media accounts. I don’t see any reason why it can’t work. I believe that, if my grandchildren want to ask me a question after I’m dead, they will have access to a machine that will give them an answer and in my voice. That’s not a “new soul.” It is a mechanical tongue, an artificial person, a virtual being. The application of machine learning to natural language processing achieves the imitation of consciousness, not consciousness itself, and it is not science fiction. It is now.

The Turing Test [1950]

The Turing test appeared in the October 1950 issue of Mind. It posed two questions. The first was simple and grand: “Can machines think?” The second took the form of the famous imitation game. An interrogator is faced with two beings, one human and the other artificial. The interrogator asks a series of questions and has to decide who is human and what is artificial. The questions Turing originally imagined for the imitation game have all been solved: “Please write me a sonnet on the subject of the Forth Bridge.” “Add 34957 to 70764.” “Do you play chess?” These are all triflingly easy by this point, even the composition of the sonnet. For Turing, “these questions replace our original, ‘Can machines think?’” But the replacement has only been temporary. The Turing test has been solved but the question it was created to answer has not. At the time of writing, this is a curious intellectual conundrum. In the near future, it will be a social crisis.

The actual text of Turing’s paper, after the initial proposition of the imitation game and a general introduction to the universality of digital computation, consists mainly in overcoming a series of objections to the premise that machines can think. These range from objections Turing dismisses out of hand—the theological objection that “thinking is a function of man’s immortal soul”—to those that are more serious, such as the limitations of discrete-state machines implied by Godel’s theory of sets. (This objection does not survive because the identical limitation applies to all conceivable expressions of human intelligence as well.)

Revised:

What is shocking about the artificial intelligence of natural language processing is not that we’ve created new consciousnesses, but that we’ve created machines we can’t tell apart from consciousnesses. The question isn’t going to be “Can machines think?” The question isn’t even going to be “How can you create a machine that imitates a person?” The question is going to be: “How can you tell a machine from a person?”

Norbert Wiener lists historical moments

This is the passage that originally caught my attention:

Early in Cybernetics, the foundational text of engineered communication, Norbert Wiener offers a brief history of the dream of artificial people. “At every stage of technique since Daedalus or Hero of Alexandria the ability of the artificer to produce a working simulacrum of a living organism has always intrigued people. This desire to produce and to study automata has always been expressed in terms of the living technique of the age,” he writes. “In the days of magic, we have the bizarre and sinister concept of the Golem, that figure of clay into which the Rabbi of Prague breathed life with the blasphemy of the Ineffable Name of God. In the time of Newton, the automaton becomes the clockwork music box, with the little effigies pirouetting stiffly on top. In the nineteenth century, the automaton is a glorified heat engine, burning some combustible fuel instead of the glycogen of the human muscles. Finally, the present automaton opens doors by means of photocells, or points guns to the place at which a radar beam picks up an airplane, or computes the solution of a differential equation.” Ultimately, we’re just not that far from the Rabbi of Prague, breathing the Ineffable over raw material, fearful of the golems conjured to stalk the city. The golems have become digital. The rabbis breathe the ineffable over silicon rather than clay. The crisis is the same.

Mathematics over language

Here’s where we’re at: Capable of imitating consciousness through machines but not capable of understanding what consciousness is. This gap will define the spiritual condition of the near future. Mathematics, the language of nature, is overtaking human language. Two grounding assumptions underlying human existence are about to be shattered, that language is a human property and that language is evidence of consciousness. The era we are entering is not posthuman, as so many hoped and feared, but on the edge of the human, incapable, at least for the present, of going forward and unable to go back. Horror mingled with wonder is an appropriate response.

Hmmmm....

Wednesday, November 9, 2022

Thoughts on war and Ukraine @3QD

Back in October I posted a short article on the Ukraine war at 3 Quarks Daily:

All roads lead to Ukraine [war] – Scattered fragments of a [nuclear] memoir

It’s hard to be optimistic about that war – it’s just so pointless and awful, like wars in general. This article is as hopeful as any I’ve read: Timothy Snyder, “How does the Russo-Ukrainian War end?”, Thinking About..., October 5, 2022. The last four paragraphs:

And so we can see a plausible scenario for how this war ends. War is a form of politics, and the Russian regime is altered by defeat. As Ukraine continues to win battles, one reversal is accompanied by another: the televisual yields to the real, and the Ukrainian campaign yields to a struggle for power in Russia. In such a struggle, it makes no sense to have armed allies far away in Ukraine who might be more usefully deployed in Russia: not necessarily in an armed conflict, although this cannot be ruled out entirely, but to deter others and protect oneself. For all of the actors concerned, it might be bad to lose in Ukraine, but it is worse to lose in Russia.

The logic of the situation favors he who realizes this most quickly, and is able to control and redeploy. Once the cascade begins, it quickly makes no sense for anyone to have any Russian forces in Ukraine at all. Again, from this it does not necessarily follow that there will be armed clashes in Russia: it is just that, as the instability created by the war in Ukraine comes home, Russian leaders who wish to gain from that instability, or protect themselves from it, will want their power centers close to Moscow. And this, of course, would be a very good thing, for Ukraine and for the world.

If this is what is coming, Putin will need no excuse to pull out from Ukraine, since he will be doing so for his own political survival. For all of his personal attachment to his odd ideas about Ukraine, I take it that he is more attached to power. If the scenario I describe here unfolds, we don't have to worry about the kinds of things we tend to worry about, like how Putin is feeling about the war, and whether Russians will be upset about losing. During an internal struggle for power in Russia, Putin and other Russians will have other things on their minds, and the war will give way to those more pressing concerns. Sometimes you change the subject, and sometimes the subject changes you.

Of course, all of this remains very hard to predict, especially at any level of detail. Other outcomes are entirely possible. But the line of development I discuss here is not only far better, but also far more likely, than the doomsday scenarios we fear. It is thus worth considering, and worth preparing for.

Very young children in Japan: “Old Enough” and “Kimono Mom” [Media Notes 81]

Two things here, both from Japan: Old Enough, a new Netflix series based on a series of short vignettes originally filmed in Japan between 1988 and 1994, and Kimono Mom, a YouTube channel that’s a little over two years old. The first is focused on very young children while the second involves a young, and very cute (kawaii!) girl. I end with a bonus video, a performance by an elementary school band.

Old Enough

I’ve watched about ten of the episodes Netflix has uploaded so far. All are relatively short. Each episode features a child between two and four or five setting out on an errand, generally delivering something or buying something. Some episodes are set in rural or suburban areas, others in a city. The errands are challenging to the children, but they manage to succeed. Each episode has an enthusiastic and sympathetic voice-over. A passage from a New York Times article captures my interest in the series: Jessica Grose, On Japan’s Adorable ‘Old Enough!’ Show and the State of American Childhoods (April 16, 2022):

In addition to being utterly charmed by how cute the show is, my response was: This wouldn’t fly in the United States. If there were an American version, parents who allowed their children to appear would probably be framed as irresponsible, or the kids would be shown to need parental support at every turn.

You’re probably also thinking: America is not Japan. And that’s correct. Our cultures are quite different. One glaring example is gun violence [...] Another difference is infrastructure. [...] But even given these differences, we should at least entertain the idea that Americans have over-rotated on protectiveness in the past few decades and need to reconsider letting their kids do more by themselves.

Christine Gross-Loh, the author of “Parenting Without Borders: Surprising Lessons Parents Around the World Can Teach Us,” who has lived in Japan and the United States with her kids, said that she had culture shock in both directions — first when she moved to Japan, and again when she moved back to the United States. “In school and parenting, all the assumptions of what children can do and should be learning, it’s almost inverted,” she said. In Japan, there’s a focus on “teaching children to pull their own weight from an early age, having these expectations that they’re capable of being independent, being left at home alone or cooking or using knives or walking to school at 6,” she said.

With that in mind, let’s take a look at Kimono Mom.

Kimono Mom

Moe is a young Japanese woman with a young daughter, Sutan. She makes videos about Japanese home cooking, but also more generally about her life and family. Sutan is with her in all the videos; her husband, Moto, is in some of them (he’s generally at work). What I find most interesting is the interaction between Moe and Sutan, the way Moe actively recruits Sutan’s assistance in preparing the dish. For example (be sure to toggle the closed captions, at the lower right):

Note that at about 2:10 she shows Sutan how to peel the shrimp – note, as well, Sutan’s remark at 2:47: “Shrimp, thank you for coming to us today”. At 4:45 look at Sutan’s style in sprinkling salt on the shrimp. At 6:48 Sutan exhibits one of her favorite skills, cracking eggs. She then proceeds to beat the eggs.

That video is fairly typical of the 20 or so that I have watched. There are some where Sutan’s attention flags, where she becomes recalcitrant for whatever reason, but Moe is invariably patient. There’s one video where Moe explains that she tries to treat Sutan as an equal, though this may be from one of the videos other people have made about Moe and Sutan (I’ve watched a couple of those) rather than from one of the Kimono Mom videos.

While Sutan’s cooking tasks are quite different from the errands the children undertake in Old Enough, the underlying cultural theme is the same: children are capable people and should be respected as such.

Bonus: Japanese Elementary School Band

And this brings me to one of my favorite videos, of Nakagurose Elementary School Band:

When I first heard this I couldn’t believe that these were elementary school children, and many of the commenters have had the same reaction. You don’t hear bands like that in America.

What struck me was the fundamental dignity underlying their performance. They may have been children, but when they were performing, they simply became human beings making music. No more, no less. It seems to me that the same attitude underlies the errands on Old Enough and Kimono Mom’s cooking demonstrations.

Awakening to the Meaning Crisis – An Interesting Conversation

0:00 Signs of the so-called “meaning crisis”

3:50 How John addresses the meaning crisis in his own life

10:04 Bob gets empathy-circled—live!

18:48 How much of the meaning crisis is a crisis of community?

25:29 Wisdom as the antidote to self-deception

40:54 Is technological change outpacing our ability to adapt?

45:48 How and where might new sources of meaning emerge?

53:08 The conundrum of collective intelligence (or how should we talk about demons?)

59:57 Can video games give us meaning?

1:04:05 John: “Enlightenment” that fails to confront cognitive bias is meaningless

Robert Wright (Bloggingheads.tv, The Evolution of God, Nonzero, Why Buddhism Is True) and John Vervaeke (University of Toronto). Recorded October 27, 2022.

John Vervaeke's YouTube Channel: https://www.youtube.com/channel/UCpqDUjTsof-kTNpnyWper_Q