This is the heart of the story, because the 1970s were the decade when (who knows?) the discipline of academic literary criticism might have gone another way. But it didn’t. I came of age in the 1970s and headed in one direction while the discipline sorted itself out and went off in a different direction.

“The discipline” is of course both an abstraction and a reification. Literary criticism is not some one thing. It is a meshwork of people, documents, institutions, and organizations, all in turbulent Heraclitean flux. The discipline, then, does not speak with one voice. It speaks with many voices. And what those voices say changes by the year and the decade. Some themes and concerns are amplified while others are diminished. Here and there a new idea is voiced, while other ideas all but disappear.

When I talk about how the discipline changed during the 1970s, then, I am talking about a change in the mix of voices. My experience of the 1970s was dominated by my local environments: Johns Hopkins, SUNY Buffalo, and Rensselaer Polytechnic Institute. None of them was homogeneous, nor were they like one another. Yet all three were, each in its way, alive with possibilities for change. And, I believe, so were other places. But the changes that began settling in as the 1980s arrived were not the changes that most excited me at Hopkins and Buffalo. And so, 40 years later, we have a discipline in which the article I submitted to New Literary History, “Sharing Experience: Computation, Form, and Meaning in the Work of Literature”, seems strange and obtrusive rather than unnecessary, as it would be if its ideas and methods were widely known.

This is a long piece, over 5,500 words, and it has pictures (aka visualizations)! If you want a shorter version, read two recent posts:

- The 70s, when literary criticism moved toward a world unto itself (w/ notes on computing), January 6, 2017, http://new-savanna.blogspot.com/2017/01/the-70s-when-literary-criticism-defined.html

- Time is Tricky: Looking Back at Looking Forward in Literary Criticism, December 26, 2016, http://new-savanna.blogspot.com/2016/12/time-is-tricky-looking-back-at-looking.html

The basic ideas are there. This post provides evidence and refinement.

First I take the long view, drawing on the recent study in which Andrew Goldstone and Ted Underwood examined the themes present in a century-long run of literary criticism. Then I look at some specific texts published in the 1970s to get a more fine-grained sense of attitudes. After that I look at the computational piece I published in MLN in 1976. The objective is to situate my work in its historical context, a time when the discipline was optimistic and energetic in a way that has disappeared...except, perhaps, for computational criticism, which we’ll get to in a later installment.

The triumph of interpretation (aka “reading”)

Roughly speaking, prior to World War II literary criticism in the American academy centered on philology, shading over to editorial work in one direction and literary history in another. After the war interpretation became more and more important, and by the 1960s it had become the focus of the discipline. But it was also becoming problematic. As more critics published about more texts, it became clear that interpretations diverged. That prompted a couple of decades of disciplinary self-examination and soul-searching.

What does it mean to interpret a text? What’s the relationship between the interpretation and the text? What’s the relationship between the critic and the text, or the critic and the reader? What about authorial intention and the text? And the reader’s intention? Can a text support more than one meaning? Why or why not? These questions and more kept theoreticians and methodologists busy for three decades. That’s the context in which Johns Hopkins hosted the famous structuralism conference in the fall of 1966.

The 1960s also saw the seminal work of Frederick Mosteller and David Wallace on the use of statistical techniques to identify the authors of twelve of The Federalist Papers [1] and the subsequent emergence of stylometrics in what was then called “humanities computing”. At the same time, developments in linguistics, psycholinguistics, and artificial intelligence were becoming more broadly visible; in the early 1970s they coalesced under the term “cognitive science”.

With this background sketch in mind, let’s consult the study Goldstone and Underwood did of a century-long run of articles in seven journals: Critical Inquiry (1974–2013), ELH (1934–2013), Modern Language Review (1905–2013), Modern Philology (1903–2013), New Literary History (1969–2012), PMLA (1889–2007), and Review of English Studies (1925–2012) [2]. They use a relatively new method of analysis called topic modeling. This, however, is not the place to explain how that works [3]. Suffice it to say that topic modeling depends on the informal idea that words which occur together across a wide variety of texts do so because they are about the same thing. Topic modeling thus involves examining the words in a collection of texts to see which words co-occur across many different texts in the corpus. A collection of such words is called a topic and is identified simply by listing the words in that topic along with their prevalence in the topic.
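To make that informal idea a little more concrete, here is a minimal Python sketch of its first ingredient: counting how many documents each pair of words shares. It is not topic modeling itself. Topic models proper (latent Dirichlet allocation is the best-known) infer topics from such patterns probabilistically, whereas this sketch merely tallies co-occurrence. The four-“document” corpus is invented for illustration, borrowing a few words from Topics 20 and 117 discussed below; it is not Goldstone and Underwood’s data.

```python
from collections import Counter
from itertools import combinations

# Invented toy corpus (NOT Goldstone and Underwood's data): each "document" is a word list.
docs = [
    "reading text reader read".split(),
    "text reader readers textual".split(),
    "ms line mss scribe".split(),
    "ms lines mss scribe text".split(),
]


def cooccurrence_counts(documents):
    """For each unordered pair of words, count how many documents contain both."""
    counts = Counter()
    for doc in documents:
        # Use word presence, not frequency; sorting makes each pair's key canonical.
        vocab = sorted(set(doc))
        for pair in combinations(vocab, 2):
            counts[pair] += 1
    return counts


counts = cooccurrence_counts(docs)
# Pairs shared by the most documents hint at which words "go together".
for pair, n in counts.most_common(3):
    print(pair, n)
```

In this toy corpus “reader” and “text” turn up together in two documents, as do the manuscript words “ms”, “mss”, and “scribe”, while “reading” and “scribe” never meet. A topic model goes much further, giving each word a weight in each of many topics, but it starts from patterns of this kind.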

Goldstone and Underwood argue that the most significant change in the discipline happened in the quarter century or so after World War II (p. 372):

The model indicates that the conceptual building blocks of contemporary literary study become prominent as scholarly key terms only in the decades after the war—and some not until the 1980s. We suggest, speculatively, that this pattern testifies not to the rejection but to the naturalization of literary criticism in scholarship. It becomes part of the shared atmosphere of literary study, a taken-for-granted part of the doxa of literary scholarship. Whereas in the prewar decades, other, more descriptive modes of scholarship were important, the post-1970 discourses of the literary, interpretation, and reading all suggest a shared agreement that these are the true objects and aims of literary study—as the critics believed. If criticism itself was no longer the most prominent idea under discussion, this was likely due to the tacit acceptance of its premises, not their supersession.

That is, if criticism, by which they mean interpretive criticism, had its origins earlier in the century, it didn’t become fully accepted as the core activity of academic literary study until the third quarter of the century.

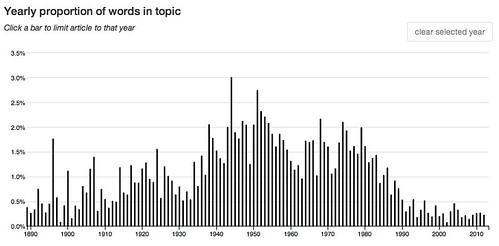

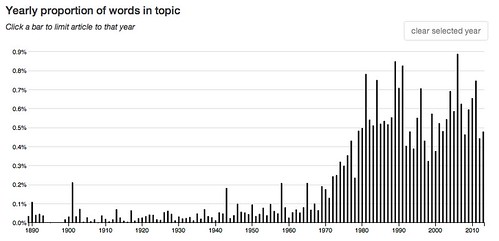

Consider Topic 16, a topic oriented toward theory and method, in which the following words are prominent: criticism work critical theory art critics critic nature method view. This graph shows how the topic’s prominence changed over time, with its high point at mid-century and a rapid falloff in the 1980s:

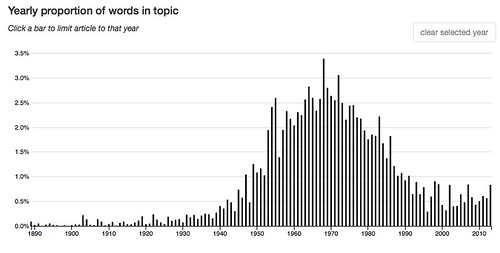

Now consider Topic 29: image time first images theme structure imagery final present pattern. Notice “structure” and “pattern” in that list. The topic peaks in 1968. The structuralism conference was held at Johns Hopkins in the fall of 1966, just before that peak, while the conference proceedings (The Languages of Criticism and the Sciences of Man) were published in 1970, two years after it.

At that time structuralism was regarded as The Next Big Thing, hence the conference. Jacques Derrida was brought in as a last-minute replacement, however, and his critique of Lévi-Strauss turned out to be the beginning of the end of structuralism and the beginning of poststructuralism and the various developments that came to be called capital “T” Theory. The structuralist moment, with its focus on language, signs, and system, was but a turning point.

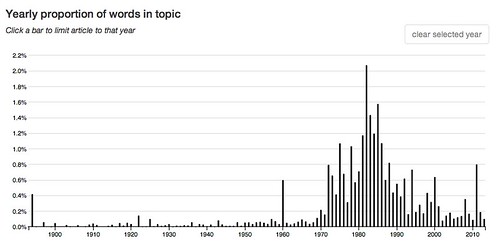

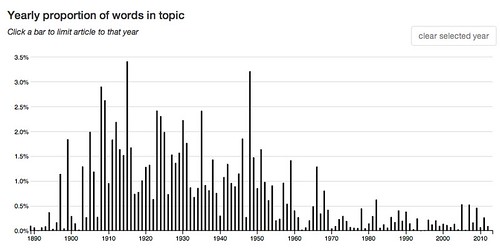

The next two charts show Theory firmly in place. Topic 39 centers on interpretation and meaning and peaks in the 1980s: interpretation meaning text theory intention interpretive interpretations context meanings literary.

Topic 143 emphasizes culture and critique, and rises through the eighties and continues strong into the new millennium (when Topic 39 had slacked off): new cultural culture theory critical studies contemporary intellectual political essay.

To conclude this part of the argument I want to look at two topics that center on reading, in two different senses. The central technique of interpretive criticism is, of course, “close reading” [4].

Close reading takes form in writing or speaking (in a classroom or at a conference) and does not arise from reading literary texts in the everyday sense of “reading”. One learns close reading through an apprenticeship that typically begins in college and continues through graduate training. Close reading is practiced almost exclusively by intellectual specialists (that is, academic literary critics), while most people who enjoy literature (and television, film, drama) do not do it once they have finished their education.

One consequence of the rise of interpretation has been to elide the distinction between ordinary reading and interpretive reading – in the next section we’ll see examples of how this elision has been actively executed. Critics “read” texts and so create “readings”. Though the interpretive reading of religious texts is an ancient activity, the routine interpretive reading of literary texts is relatively new. This use of “reading” has become so ingrained that critics will sometimes talk as though interpretive reading were the only real reading [5].

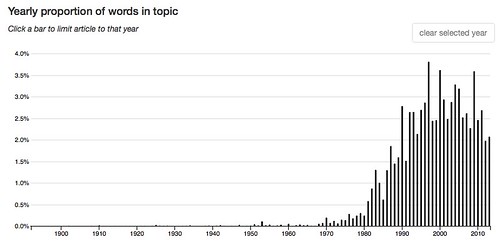

With this in mind, let us take a look at Topic 20. Judging from its most prominent words, it seems weighted toward current critical usage: reading text reader read readers texts textual woolf essay Virginia.

That usage becomes more obvious when we compare it with Topic 117, which seems to reflect a more prosaic usage, where reading is mostly just reading, not hermeneutics: text ms line reading mss other two lines first scribe. Note in particular the terms ms, line, mss, and lines, which clearly refer to physical texts.

Topic 117 is most prominent prior to 1960, while Topic 20 rises to prominence after 1970. For what it’s worth – not much, but it’s what I can offer – that accords with my personal sense of things going back to my undergraduate years at Johns Hopkins in the late 1960s. I have vague memories of remarking (to myself) on how odd it seemed to refer to interpretive criticism as mere reading when, really, it wasn’t that at all.

This elision, I will argue in the next section, is not simply a matter of convenience. It reflects active ideological shaping, if you will. This ideology of interpretive criticism has other aspects – concepts of the text, form, and “theory” – but we need not go into them here [6].

Modern ‘rithmatics and the naturalization of reading

Let me begin by repeating a passage I often quote, from the preface to Structuralist Poetics (Cornell 1975), the one where Jonathan Culler imagined a type of literary study that “would not be primarily interpretive; it would not offer a method which, when applied to literary works, produced new and hitherto unexpected meanings. Rather than a criticism which discovers or assigns meanings, it would be a poetics which strives to define the conditions of meaning” (p. xiv). Such a study, a poetics, seemed like a real possibility at the beginning of the decade, but that possibility was fading fast by the decade’s end, while interpretation had become securely naturalized as reading.

That’s the significance of eliding the distinction between ordinary and interpretive reading. Interpretation ceases to be a special intellectual skill. It is merely reading – recall Topic 20 above.

With that in mind, let’s take a quick and crude look at the biggest critical hits of the 1970s. Consider a paragraph that Jeffrey Whalen published in an online symposium on “Theory” that took place in 2005 at an academic blog called The Valve [7]:

In an earlier post Michael Bérubé takes Bauerlein to task for claiming that theory has been in decline for 30 years [...], and stated that theory hadn’t really even arrived in America by then: “In 1975, the hottest items in the theory store were reader-response criticism (Wolfgang Iser, The Implied Reader, 1974), and structuralism (Jonathan Culler, Structuralist Poetics, 1975 [...]).” As a sophomore at Stanford in 1974-75 – and Stanford was hardly at the cutting edge! – I read Foucault’s The Order of Things and Barthes’s Critical Essays in one class, and Barthes’s Writing Degree Zero and Lyotard’s Discours, Figure in another. It was partly these courses that led me to switch my major to literary criticism (from mathematics), and to study in Paris the following year, where I took courses with Foucault, Barthes, and Todorov, among others. I think it was “French” theory, and not American (or German) syntheses, that was exciting people back in 1975.

Now let’s look at the reader-response criticism of Stanley Fish. In his seminal essay, “Literature in the Reader: Affective Stylistics” [8], Fish argued that the pattern of expectations, some satisfied and some not, that is set up in the process of reading a literary text is essential to that text’s meaning. Hence any adequate analytic method must describe that essentially temporal pattern. Of his own method, Fish asserts:

Essentially what the method does is slow down the reading experience so that “events” one does not notice in normal time, but which do occur, are brought before our analytical attentions. It is as if a slow motion camera with an automatic stop action effect were recording our linguistic experiences and presenting them to us for viewing. Of course the value of such a procedure is predicated on the idea of meaning as an event, something that is happening between words and in the reader’s mind...

A bit further on Fish asserts that “What is required, then, is a method, a machine if you will, which in its operation makes observable, or at least accessible, what goes on below the level of self-conscious response.” What did he mean by that, “a method, a machine”? Could he have been thinking of a computer? What else could it be, a steam locomotive, a sewing machine, an electric drill?

While he doesn’t mention computers by name in that essay, he does examine some computational stylistics in another essay he wrote in the early 1970s, “What Is Stylistics and Why Are They Saying Such Terrible Things About It?” [9]. But I’m more interested in what he said about an article by the linguist Michael Halliday, where he once again invokes the machine. He noted that Halliday has a considerable conceptual apparatus (linguistics tends to be like that). After quoting a passage in which Halliday analyses a single sentence from Through the Looking Glass, Fish remarks (p. 80): “When a text is run through Halliday’s machine, its parts are first dissembled, then labeled, and finally recombined in their original form. The procedure is a complicated one, and it requires many operations, but the critic who performs them has finally done nothing at all.” Note, moreover, that he had framed Halliday’s essay as one of many lured on by “the promise of an automatic interpretive procedure” (p. 78), that is, a computerized one. While Fish is obviously skeptical of Halliday’s work, my point is simply that he examined it and that he was thinking about computation during the same period.

And then we have the publication of Geoffrey Hartman’s collection, The Fate of Reading (Chicago), also in 1975. Here’s what Hartman said in the title essay (p. 271):

I wonder, finally, whether the very concept of reading is not in jeopardy. Pedagogically, of course, we still respond to those who call for improved reading skills; but to describe most semiological or structural analyses of poetry as a “reading” extends the term almost beyond recognition.

Hartman goes on to observe: “modern ‘rithmatics’ — semiotics, linguistics, and technical structuralism — are not the solution. They widen, if anything, the rift between reading and writing” (272). It’s as though, if the critic gets too far from the text by using these modern ‘rithmatics (and what would a deconstructive critic do with the nostalgic echo implied by that term?), then it is impossible to maintain the fiction that interpretation, after all, is just reading. For what it’s worth, at that time I had the vague impression that it was one thing if Lévi-Strauss wanted to analyze South American myths in THAT way, with his tables and quasi-mathematical formulas, but nosiree! we’re not going to do that with our (sacred) texts. Hartman doesn’t want any technical disciplines interfering with his reading.

It is this kind of discussion that authorizes and naturalizes the practice of eliding the distinction between reading and interpretive analysis. Every critic knows that interpretive reading requires training and practice, because they’ve been through it. But the emerging sense of the critical vocation somehow requires that we forget about that training or, better still, that we create an intellectual mythology that allows us to understand that training as bringing us closer to the text than we could ever have gotten without it.

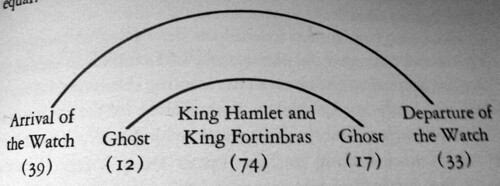

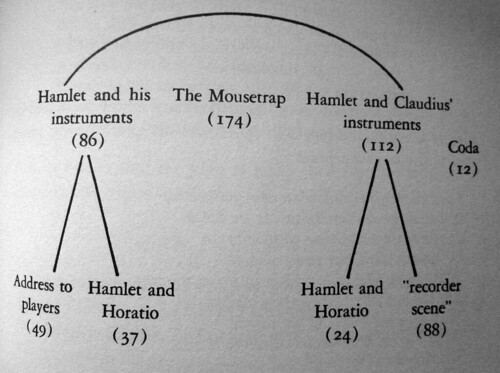

Finally, I want to look at a different kind of example, a piece of practical criticism that has little to do with structuralism, linguistics, or any of the other theories and methods that were roiling around in the 1970s. I chose this example because it betrays this emerging ideology of distance and reading. It comes from a slender volume of Shakespeare criticism, one, however, that is not about interpreting Shakespeare. It is about examining formal arrangements in the plays and includes discussion of such utterly banal matters as editorial practice with regard to scene divisions and stage directions. It is a study in poetics rather than interpretation.

I’m talking about Mark Rose’s Shakespearean Design (Harvard, 1972). Rose has these two paragraphs in his preface (p. viii):

A critic attempting to talk concretely about Shakespearean structure has two choices. He can create an artificial language of his own, which has the advantage of precision; or he can make do with whatever words seem most useful at each stage in the argument, which has the advantage of comprehensibility. In general, I have chosen the latter course.

The little charts and diagrams may initially give a false impression. I included these charts only reluctantly, deciding that, inelegant as they are, they provide an economical way of making certain matters clear. The numbers, usually line totals, sprinkled throughout may also give a false impression of exactness. I indicate line totals only to give a rough idea of the general proportions of a particular scene or segment.

Here we have two of those offending diagrams, from the Hamlet chapter, pages 97 and 103 respectively; you can see those (unfortunate and misleading) line counts in parentheses:

What’s the fuss about? There aren’t many of these diagrams and all of them are simple. And, yes, without them, Rose’s accounts would be more difficult to understand. Indeed, without them, a reader would be tempted to sketch their own diagrams on convenient scraps of paper.

My best guess is that Rose’s misgivings about the numbers and the diagrams are symptoms of the emerging ideology of critical closeness. Numbers and diagrams get in the way of the illusion that our reading of this critical text is bringing us closer to the literary texts under discussion. They objectify those texts, just as did the technicalities of Lévi-Strauss’s structuralism (with its diagrams, tables, and quasi-mathematical formulas) and of Chomsky’s generative grammar, then the talk of the town (with its logical propositions, derivations, and tree diagrams). Objectification breaks the (intellectual) mood, like breaking the fourth wall in a play or a movie.

In those paragraphs Rose is thus participating in the same ideological wrangling as Hartman, though the ‘rithmatics of structuralism and linguistics are nowhere to be found in his work. His argument forces him to use diagrams, and so he apologizes for the intrusion. That apology has the effect of validating “closeness” as the proper stance of the literary critic.

What do we make of all this? First, recall the broad narrative: Criticism was on the rise after WWII and had become problematic by the 1960s. In that context, scholars were looking around for ideas and methods. Some of those ideas and methods were about investigating the conditions of meaning, poetics in Culler’s usage, while others were about establishing the meaning of texts.

Scholars concerned with interpreting texts worked to naturalize that activity, thereby assigning it primacy over poetics, by actively eliding the distinction between interpretation and reading. Abstractly considered, however, there is no need to choose between poetics and interpretation. Why not do both? But that’s not what happened. A choice was made. Why?

Consider the following phrase, which refers to two books (by J. Hillis Miller and Irving Howe) written in the 1960s: “...a sense of spiritual crisis forcing literature to play roles once reserved for theology and philosophy.” The phrase was written by Charlie Altieri and appears in an essay he wrote on aesthetic issues in modernist poetry [10]. That’s also when “Death of God” theology was making national news, with Time magazine plastering the question “Is God Dead?” on the cover of its April 8, 1966 edition [11]. A criticism that conceives of literature as secular religion is likely to think of critics as secular prophets, and so we have Hartman referring to “the minor mode of prophecy we call criticism” (The Fate of Reading, p. 267).

Literary criticism thus felt itself called to something far deeper than merely investigating how literary texts operate in the mind and society. As secular theology the remit of criticism was grand indeed and, of course, could brook no methodological or conceptual intrusion between the prophet and the text on which the prophecy is based. It must be as though the prophet him- or herself had uttered the text.

Since we opened this section with Culler, let us close with him. In 2007 he gave an interview, ranging over his career, to Jeffrey Williams, then editor of the minnesota review [12]. He notes: “but I guess at some level I do still remain at least theoretically committed to the project of a poetics. I do think that poetics rather than more interpretation is what literary studies ought to be doing.” And yet:

I do think, especially in the context of the university and of graduate education, that theory remains the space in which people are debating the most interesting questions about what it is we’re doing and what we should be doing. [...] It is, as you say, harder to feel triumphalist about theory than it was when it was clearly succeeding and transforming literary study, opening it up to all sorts of discourses, such as psychoanalysis, Marxism, philosophy, linguistics. And of course theoretical enterprises, broadly conceived, opened it up to all kinds of political questions and the broadest questions of social justice that for many people, for a certain time, made literary studies a very exciting realm—something that smart kids gravitated to because they wanted to explore these issues and found that they could do it more interestingly and flexibly than in a department of philosophy or a department of political science.

That is criticism as secular theology. That is why interpretation won out over poetics.

Literary (and then cultural) criticism became a general way of exploring a broad range of issues, of doing philosophy in a broad synthetic sense. And it required a method in which one talked of literature in the same terms one used to talk about the world in general, whether those are the terms of secular or Christian humanism, Lacanian psychoanalysis, Marxist social theory, or any of the various critical approaches that arose in the 1980s and 1990s. By talking of literature in the same terms as talking of the world critics could pretend that in interpreting literary (and other) texts they were merely reading the world as text.

That’s where we ended up. But we hadn’t quite gotten there in the 1970s.

I want to end this section by looking at the article I published in MLN in 1976. In retrospect that article is crazy. In context, it was still very strange, but not so crazy.

Computing poetry in the MLN Centennial Issue

Back then anything was possible. Western metaphysics was in flames. New horizons opened up before us. Who knows, maybe even the cognitive sciences had something to say to literary criticism – Chomsky was certainly in the thick of it all. It was worth a shot.

That’s the spirit in which I was invited to contribute to the Centennial issue of MLN (Modern Language Notes). The journal had been founded at Johns Hopkins in 1886 and was the second oldest academic literary journal in the United States – PMLA is the oldest. The special issue, marking the centennial of Johns Hopkins University (founded in 1876), was devoted to criticism. Northrop Frye headlined the issue (does anyone read him these days?), and Edward Said, Walter Benn Michaels, Louis Marin, Stanley Fish, and others contributed. My article was entitled “Cognitive Networks and Literary Semantics” [13]. Here’s what I said about my method (p. 952):

Cognitive network theory has arisen from work in three domains: 1) computational linguistics, including the problem of automatic translation of natural languages, 2) artificial intelligence (i.e. how can we program a computer to act intelligently?), and 3) psycholinguistics and cognitive psychology. The first two areas are dominated by the technology of computers and the methods of mathematical linguistics and automata theory. The work in cognitive psychology and psycholinguistics is both theoretical and experimental. In this domain cognitive network theory ultimately becomes a neuropsychological theory about the information processing structures supporting mental processes. Cognitive network theory is thus an attempt to provide a formal account of how people understand the language they speak.

That would certainly seem to qualify it as a discipline seeking to “define the conditions of meaning”, to once again invoke Culler’s phrase.

That description is quite explicit about the connection between cognitive networks and computers: I was offering these literary critics a computational model. But that did not give anyone pause. Well, I’m sure that’s an exaggeration. But no one expressed any misgivings directly to me.

Computation wasn’t an issue when I had initial discussions with Dick Macksey and Sam Weber about the special issue – I was visiting Baltimore on holiday break in 1975. And it certainly wasn’t an issue in the English Department at SUNY Buffalo, where I was pursuing my PhD. They knew I was a member of David Hays’s research group in computational linguistics and that was fine. For that matter, and returning to Hopkins, I remember a brief conversation I had with the late Don Howard in my senior year. He was urging me to apply to the English Department for graduate study and, knowing of my interest in computers, mentioned computational stylometrics as something I might be interested in. I knew what he was talking about, but didn’t mention that I thought it rather pedestrian.

It’s not as though no one was skeptical about computing in those days. Hubert Dreyfus had published What Computers Can’t Do in 1972. Joseph Weizenbaum, who’d created the (in)famous ELIZA, published Computer Power and Human Reason in 1976. Skepticism, yes, but a threat to humanities discourse, not really. It was all so new.

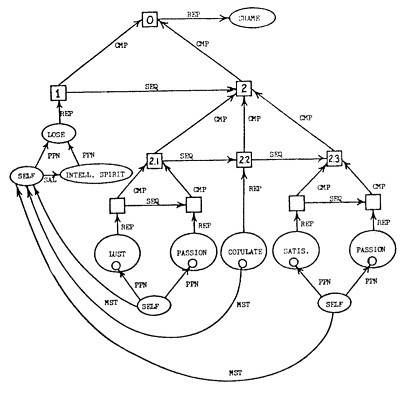

Returning to my article: it offered cognitive networks as my methodological tool for examining Shakespeare’s well-known Sonnet 129, “The expense of spirit in a waste of shame”. Networks demand visualization, and that article had 11 diagrams, some quite complex. I drew the diagrams first – they embodied the model, and constructing the model is where the main conceptual work took place – and then wrote the prose necessary to explicate them. Here’s one of the more complicated figures:

This is not the place to even begin to attempt an explanation of what’s going on in that diagram. Suffice it to say that it represents a fragment of a (hypothesized) structure in the mind of a reader, any reader, of the poem. Think of that structure as something like a road map. Think of a poem as tracing a path through the network from some starting point (say Red Bank, New Jersey) to an end point (say Spokane, Washington). That path would then be an account of the poem’s “conditions of meaning”. A different path would trace a different poem. Thus one model can provide the conditions of meaning for multiple poems. And none of this is at all like a “reading” of a poem as literary critics understand the term.
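The road-map analogy can be sketched in a few lines of Python, under loud assumptions. The network below is a made-up directed graph with placeholder nodes (A standing for the starting point, the “Red Bank” of the analogy, and F for the end point, “Spokane”); it is not the 1976 network for Sonnet 129, and a “poem” is reduced to nothing more than a sequence of nodes. The sketch shows only the point made in the paragraph: one fixed network licenses many different paths, so one model can stand behind many poems.

```python
# Hypothetical toy network: placeholder nodes, NOT the 1976 model of Sonnet 129.
# Each key lists the nodes reachable from it in one step (a directed graph).
NETWORK = {
    "A": ["B", "C"],  # A: the starting point ("Red Bank" in the analogy)
    "B": ["D"],
    "C": ["D", "E"],
    "D": ["F"],
    "E": ["F"],
    "F": [],          # F: the end point ("Spokane" in the analogy)
}


def is_path(graph, nodes):
    """Return True if every consecutive pair of nodes is joined by an edge in the graph."""
    if not nodes:
        return False
    return all(b in graph.get(a, ()) for a, b in zip(nodes, nodes[1:]))


# Two different "poems" traced through the same network.
poem_1 = ["A", "B", "D", "F"]
poem_2 = ["A", "C", "E", "F"]
print(is_path(NETWORK, poem_1), is_path(NETWORK, poem_2))
```

Both toy paths run from A to F through the same graph, while a sequence such as A, E, F is rejected because the network has no direct link from A to E. In this crude picture the network plays the role of the model and each valid path plays the role of one poem’s conditions of meaning.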

I’m afraid, Toto, that we’ve left Geoffrey Hartman’s Kansas far behind. This is a new world. Whether brave or crazy, who knows?

But let’s return to Stanley Fish and his computer imagery. In his affective stylistics essay he said we need “a method, a machine if you will, which in its operation makes observable, or at least accessible, what goes on below the level of self-conscious response.” That’s what a cognitive network model does. That’s why such models were developed: to reveal the mental processes through which language is understood. In another essay he talked of “Halliday’s machine”, where parts of a text “are first dissembled, then labeled, and finally recombined in their original form.” Crudely put, that’s how computer systems “understand” or “process” (pick your word) text. Fish complains that “the procedure is a complicated one, and it requires many operations, but the critic who performs them has finally done nothing at all.” Well, duh! The point is that the critic, that is, the machine, was able to execute the procedure without a hitch. The model is a success. It is thus a plausible account of the poem’s conditions of meaning.

Three things need to be said. First, so far as I know, Fish never went on to develop that affective stylistics or to talk more about that hypothesized machine. As I don’t have access to Fish’s mind, I don’t really know why he abandoned that line of thinking. My guess, though, is that he didn’t know how to do it, and whatever suspicions he had told him the task would take him far from literary criticism and theory, too far.

Second, I never actually programmed the model, so I don’t know whether or not it could actually “understand” the poem. From that follows a potentially endless discussion that would be distracting in this context. For one thing, to enter into that discussion you need to know something about how such models are in fact tested, and even that doesn’t get us into the thorny philosophical issues. For the sake of argument, though, let us assume that the discussion goes well.

Third, to understand what such a model has accomplished, you obviously have to understand how the model works. And that is quite different from understanding the poem itself, whatever that means. One can understand poems without understanding the model; people have been doing it for thousands of years, just as they’ve understood poems without benefit of close readings. But one can also understand the model, its parts and processes, without understanding the poem. One must understand the model independently of (and logically prior to) understanding how it is activated by the text.

I rather doubt that Culler had such an odd result in mind when he distinguished between poetics and interpretation. For that matter, I don’t think we need to go that far (to once again invoke the trope of distance) to constitute a valid poetics. But if you want to define the outermost boundary of the “space” in which one might formulate a poetics, that’s a reasonable approximation [14].

Let’s regroup.

Where we are today

Taken in context, my computational project was certainly unusual for a literary scholar. But it was not crazy. Change was in the air. Things were in flux.

In retrospect, though, it was too far – to invoke the ubiquitous trope of distance – from the center of literary criticism. That center was much closer to theology and prophecy than to computation and cognitive science. If the computational cast of my work did not give pause in my local environments – the Humanities Center at Johns Hopkins and the Department of English at SUNY Buffalo – that is because those intellectual ecologies were unusually imaginative and generous [15].

However, computing wasn’t the ubiquitous presence in the 1970s that it has now become. Yes, thinkers were skeptical about whether or not the human mind could be simulated or even fully emulated by a computer, and rightly so – I was (and still am) skeptical. But that doesn’t mean that computing has nothing to teach us. And in any event, that was a relatively esoteric discussion back then.

Things have changed. Everyone uses computers in one form or another. We’ve had a decade or more of worry about people’s minds being rotted by online discourse. This plays directly to the anti-science and anti-technology strains in humanistic thought, as does the emergence of computer-mediated instruction and distance learning.

And then we have the emergence of the digital humanities. These various disciplines for the most part avoid any suggestion of computers as models for the human mind. Computer use is purely instrumental. Yet, yet...it’s all so scary! The computational models that were merely strange and complex back in 1976 remain strange and complex now, but they may also be a threat to humanity, or at least the humanities.

The sense of threat, to be blunt, is silly and unjustified. But, alas, it is real. The upshot is that even as computational criticism is on the rise in the form of “distant reading” (there’s that trope of distance), the idea that the mind might have a computational aspect, that computation might give us fundamental insight into the workings of the literary mind, that idea seems threatening in a way that it was not back in 1976.

References

[1] Frederick Mosteller and David L. Wallace, “Inference in an Authorship Problem”. Journal of the American Statistical Association, Vol. 58, 1963, pp. 275-309.

[2] Andrew Goldstone and Ted Underwood, “The Quiet Transformation of Literary Studies”, New Literary History, 45 (3), 2014, 359-384. Goldstone has created a website where you can examine the topic model. That’s where I obtained the figures I’m using in this post. Here’s some explanatory prose, http://andrewgoldstone.com/blog/2014/05/29/quiet/

And here’s the model itself: http://www.rci.rutgers.edu/~ag978/quiet/

[3] See my post, Topic Models: Strange Objects, New Worlds, New Savanna (blog), January 10, 2013, URL: http://new-savanna.blogspot.com/2013/01/topic-models-strange-objects-new-worlds.html

[4] Close reading has been the subject of a number of interesting and important articles in the last half-dozen years or so, all of which are worth reading. But I single out the one by Barbara Herrnstein Smith because of her age. According to her Wikipedia entry she was born in 1932; her CV lists her B.A. in 1954 and her Ph.D. in 1963. That means that, while she is not old enough to have witnessed the early days of close reading, she reached intellectual maturity at a time when it still had to justify itself. That kind of direct personal experience is important for the kind of argument I am making. Her article: “What Was ‘Close Reading’? A Century of Method in Literary Studies”, Minnesota Review, 87, 2016, pp. 57-75, URL: https://www.academia.edu/29218401/What_Was_Close_Reading_A_Century_of_Method_in_Literary_Studies

[5] There is evidence, however, that a younger generation of scholars is skeptical about this elision. Back in 2011 Andrew Goldstone made a post to Stanford’s Arcade site,

Close Reading as Genre, July 25, 2011, URL: http://arcade.stanford.edu/blogs/close-reading-genre

His first sentence: “Just what is that infamous thing, a close reading?” After some introductory matter he proposes 19 features of close reading. In his fifth feature he notes that it “is prototypically written”. In her initial comment, Natalia Cecire observes: “What we're teaching, of course, is not the reading (‘close’) so much as the writing (of close reading), as you suggest, and I find the distinction you're making useful.” The whole discussion is worthwhile.

[6] For an examination of this ideology of criticism, for that is what it is, see my working paper, Prospects: The Limits of Discursive Thinking and the Future of Literary Criticism, November 2015, 72 pp., URL: https://www.academia.edu/17168348/Prospects_The_Limits_of_Discursive_Thinking_and_the_Future_of_Literary_Criticism

[7] Jeffrey Whalen, The Death and Discontent of Theory, The Valve (blog), Theory’s Empire symposium, Friday, July 15, 2005:

[8] Stanley Fish, “Literature in the Reader: Affective Stylistics”, New Literary History, Vol. 2, No. 1, A Symposium on Literary History (Autumn, 1970), pp. 123-162. It was republished in Is There a Text in This Class? Harvard, 1980, pp. 21-67.

[9] “What Is Stylistics and Why Are They Saying Such Terrible Things About It?” Is There a Text in This Class? Harvard, 1980, pp. 68-96. I believe it was originally published in 1973.

[10] Charles Altieri. “Aesthetics.” In Stephen Ross, ed. Modernism and Theory. Routledge: 2009. pp. 197-207. There’s a draft online, URL: http://socrates.berkeley.edu/~altieri/manuscripts/Modernism.html

[11] Death of God theology. Wikipedia. Accessed December 2, 2015. URL: https://en.wikipedia.org/wiki/Death_of_God_theology

[12] Jeffrey Williams with Jonathan Culler, The Conversant, online, URL: http://theconversant.org/?p=4447

[13] William Benzon, “Cognitive Networks and Literary Semantics”, MLN 91: 952-982, 1976, URL: https://www.academia.edu/235111/Cognitive_Networks_and_Literary_Semantics

[14] David Hays and I more or less did that in an article we published in 1976. He had been asked to review the computational linguistics literature for Computers and the Humanities (which changed its name to Language Resources and Evaluation in 2005), which was then the primary journal in humanistic computing. In that article we imagined that at some future date we would be able to construct a computer system that would be able to read Shakespeare’s plays in some robust sense of the word “read”. That has not yet happened. The article: William Benzon and David G. Hays, “Computational Linguistics and the Humanist”, Computers and the Humanities, 10, 1976, pp. 265-274, URL: https://www.academia.edu/1334653/Computational_Linguistics_and_the_Humanist

[15] See “Three Elite Schools” in my working paper, The Genius Chronicles: Going Boldly Where None Have Gone Before, September 2016, 48 pp., URL: https://www.academia.edu/7974651/The_Genius_Chronicles_Going_Boldly_Where_None_Have_Gone_Before

You have written an impressive post. I now understand more about literary criticism than I did. Being in the communication field I have used computer programs to analyze text for fairly simple things like keyword in context and have seen people doing more sophisticated content analysis over the years. I am not much of a text analyzer but appreciate that sort of work.

I do not like the trend I have been seeing in the communication field away from theory to more just job preparation. We certainly have several Ph.D. granting institutions in the field where students are taught and research is being done on theory. However, even many master's programs these days are nothing more than training people to be public relations hacks where very little attention is paid to theory. Way too many students just want the advanced degree so they can get the job and don't really care about the underlying principles. I appreciate the need for job training but have trouble calling that a college education when the curriculums are becoming more and more job specific.

Thanks, Jim. I don't have a very good sense of what communication is as an institutionalized academic discipline, but my impression is that it is a lot of different things. My one and only academic post was in the Department of Language, Literature, and Communication at RPI. RPI was and is an engineering school and so LL&C was a relatively minor omnibus kind of department. You had literature courses, foreign language instruction, empirical work in communication, and technical writing. Technical writing was the department's claim to fame as it was one of the first tech writing programs in the country, though they proliferated in the 1980s and after. Tech writing tends to be a very nuts and bolts field. Writing end-user software documentation (I suspect this was the main driver of tech writing's growth as an academic field) is an exacting craft skill of a high order (I've done it professionally).

Indeed it is a lot of different things ranging from interpersonal communication to public speaking to all aspects of mass communication and departments are as different from each other as you might imagine. I was fortunate to be in the very first undergraduate program named communication at Michigan State from 68-72. In some schools you will have separate departments of communication, public relations, advertising, journalism, broadcasting, etc. In some schools everything is contained in one department.

Several things, Jim.

1. The basic disciplinary organization of the academy goes back to the 19th century. Communication just makes a mess of that, so departments of communication can be all over the map.

2. Theory and practice is tricky. The department of LL&C at RPI had a basic course in communication theory that was required of all graduate students. Most of the graduate students were in a master's program in tech writing. But some of them were in a PhD program in, well, communications. One year I was offered the chance to teach this course, and I gladly accepted. I forget what I used as texts, but I certainly picked texts that 1) I found interesting and 2) were important more generally. Two or three weeks into the course I got complaints from tech writing students that these texts didn't seem to have much practical value.

I didn't know what to say. They had a point. But...the trouble is that you learn a craft like tech writing by doing a lot of tech writing. You imitate examples. I simply didn't know any theory that was both interesting and had direct application to writing, say, end-user software manuals.

3. English departments are strange beasts. Back when I was teaching (the late 70s and early to mid 80s) they earned much of their keep by teaching composition. That of course is very important. But it has little prestige within the discipline of literary criticism. And technical writing had even less prestige. The annual conference of the Modern Language Association (MLA, the major lit crit association in the USA) has a few sessions on composition. But it's mostly about literature, and that despite the fact that the members spend a lot of time teaching freshman comp. If you're really interested in and committed to composition, you join the CCCC (Conference on College Composition and Communication) in addition to or instead of the MLA.

I assume there are professional associations devoted to communication.

4. And then we have film studies and media studies.

5. It's nuts!