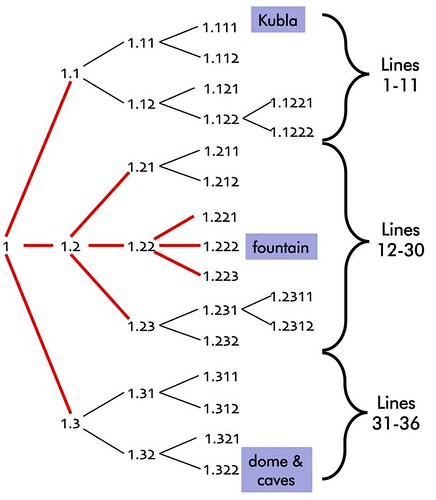

For me, those questions began when I was faced with the structure of “Kubla Khan”:

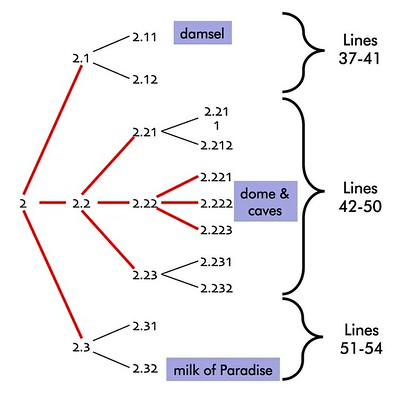

That’s just the first 36 lines, but the last 18 look the same:

That looked like the trace of some nested loops. If so, what then?

Let’s put that aside. After all, I’ve been working on that for a LONG time and still haven’t figured it out.

The problem I’m trying to figure out is this: How do we draw a principled line between those brain processes that are computational and those that are not?

Some (most, all?) computer scientists ‘seem to define a computer as “a physical mechanism that can theoretically calculate any computable function”.’ If I go with that notion, then it’s all computation and I have no reason to single out those tree structures in “Kubla Khan” for special attention, or any other linguistic tree structures for that matter. That’s not very helpful.

What if I think of the brain as a structured physical system? When Saty Chary advanced his Structured Physical System Hypothesis (SPSH) he was explicitly playing against the Physical Symbol System Hypothesis (PSSH) of Newell and Simon (1976). They advanced the PSSH as the central tenet of cognitive science and AI, and it echoes back to the 1943 paper in which McCulloch and Pitts argued that basic neuronal circuits are logic gates. It turns out that, no, they’re not. They’re quite a bit more complicated.

I like the SPSH. The question for me, then, is: Are there processes taking place in the brain that are NOT dominated by the internal physical activity of the nervous system? I should think so. The process of perception involves interaction with the external world, which necessarily implies that there are things happening in the brain that are driven, though not completely determined, by external physical circumstances. That, I believe, puts us in the world of William Powers, Behavior: The Control of Perception (1973). Language is among those circumstances; for humans it is one of the most important.

That, I think, will allow me to answer my question, though a proper answer will require more construction than I’m willing to undertake here. The relationship between the physical signifier and the signified is arbitrary. In particular, it is arbitrary with respect to the physical processes involved in both. It’s that arbitrary connection that implies that language processes are not dominated by the internal physical dynamics of the brain. The basic language process is that of indexing, as Hays and I called it in Principles and Structure of Natural Intelligence. Newell and Simon called it designation.

Now, how can I formulate this idea so that it applies to large language models (in a useful way)? It seems to me that processes in LLMs are dominated by the statistics of the corpus that is modeled. That’s the nub of truth that’s captured by the otherwise reductive phrases, “stochastic parrots,” and “autocomplete on steroids.” Consider the ideas that we require systems that have access to the external world and that have robust symbolic capabilities. To a first approximation, those amount to freeing the system from being dominated by the statistics of some training corpus. Think about that, carefully.

More later.
