I've just been reading through this post and its many comments, which is about digital humanities and cultural criticism, more or less. One topic under discussion: Is digital technology "transparent"? Thinking about that makes my head ache; after all, "digital technology" covers a lot of bases. Photography, whether analog or digital, is often treated as "transparent" in the sense that photographs register what is really and truly there, without bias. Any reasonably serious photographer knows that this is not true, at least not in the most obvious meaning. This old post discusses color, which is deeply problematic for any serious photographer. While I frame the discussion as being about subjectivity, it is equally about the technology.

* * * * *

It's time for another post from The Valve. This one's rather different from my current run of posts. It's about color, subjectivity, and digital cameras. I'm posting it here as a complement to a recent post by John Wilkins. It generated a fair amount of discussion over there, which I recommend to you. I've appended two short comments below. I've got two other posts that are related to this: this one looks at mid-town Manhattan from Hoboken, NJ, and this one looks at a pair of railroad signals in Jersey City.

This post is about color and subjectivity. It's not that I am deeply interested in the phenomenon of color; I'm not. Nor, in some sense, am I interested in subjectivity. But I am interested in literary meaning and beauty and so have to deal with subjectivity in that context. Meaning and beauty are subjective.

The purpose of this post is to think about a certain notion of subjectivity. All too often we identify subjectivity with the idea of unaccountable and-or idiosyncratic differences in the way people experience the world in general, or works of art in particular. I think such difference, though apparently quite common in human populations, is incidental to subjectivity. Things are subjective in that they can be apprehended only by subjects.

As I said in response to Joseph K's meditation on desert island aesthetics:

I take it that the color of objects is subjective in this sense. There is, for example, no direct relationship between the wavelengths of light reflected from a surface and the perceived color of that surface. Oddly enough, it is because the relationship between reflected wavelengths and perceived color is indirect that perceived color can be relatively constant under a wide variety of circumstances. It is also the case that, where different subjects have different visual perceptual systems, they will perceive color differently; that's what color blindness is about.

Whatever literary experience is, however it works, it can happen only in subjects. Whereas the difference among subjects with respect to color perception is relatively small, though real, the difference among subjects with respect to literary taste is relatively large. But, so what? I do note, however, that taste can and does change.

I want to think about color because it seems to be much simpler than the meaning of literary texts. So simple in fact that some aspects of color phenomena can be externalized in cameras and computers. When a digital camera “measures” or “samples” the wave front of light incident upon its sensor, it is interacting with the external world in a relatively simple way. Relative, that is, to what happens in the interaction between wave fronts and the retinal membranes of, say, reptilian or mammalian perceptual systems.

With that in mind, let's look at a photograph.

On the morning of 25 November 2006 I walked to the shore of the Hudson River to take pictures of the sunrise over the south end of Manhattan Island. I used a Pentax K100D and, for the most part, let the camera set the parameters for each shot. I shot the pictures in so-called RAW format and then processed them on my computer.

Here's one of those photographs before I did anything to adjust the color:

Figure 1: Image without color adjustment

Figure 2: Color-adjusted image

There's quite a difference between the two images. I adjusted the color to match my sense of what I saw. I would like to have been able to compare the image on my monitor with the actual scene, but that, of course, was impossible. I took the picture at one time and place and processed it a few hours later in a different place. Perhaps I tried to match the image on my monitor to my recollection of the scene, or perhaps I just tried to make it accord with my sense of what's natural. It's not clear to me that there's much difference between those two.

Regardless of just what I was trying to achieve, it doesn't make much sense to say that one of those images is more objective than the other in the sense that it more accurately represents the patterns of light that were actually there. What was actually there was a pattern of light rays of various wavelengths incident upon a certain patch in space where my camera happened to be. The camera's optical system focused that wave front on a sensor and the sensor took a bunch of readings at roughly six million points in the array. Those two images, Figures 1 and 2, represent two different ways of mapping that data onto color space. Color spaces exist only in nervous systems, and different nervous systems have different color spaces.
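To make "two different ways of mapping that data" a bit more concrete, here is a minimal sketch in Python. It assumes, purely for illustration, that a color adjustment is nothing more than a per-channel gain applied to each sensor reading. Real RAW conversion involves a good deal more than this (demosaicing, color matrices, tone curves). The function name `render`, the sample readings, and the gain values are all hypothetical; they are not the numbers behind Figures 1 and 2.

```python
def render(raw_pixels, gains):
    """Map (R, G, B) sensor readings to display values using per-channel gains.

    Assumption: a simple multiplicative white-balance model, clipped to 0..255.
    """
    rendered = []
    for r, g, b in raw_pixels:
        scaled = (r * gains[0], g * gains[1], b * gains[2])
        # Round to whole display values and clip to the displayable range.
        rendered.append(tuple(min(255, max(0, round(v))) for v in scaled))
    return rendered


# The same hypothetical sensor readings...
raw = [(120, 100, 60), (200, 180, 140)]

# ...rendered two ways. Neither is "what was there"; both are mappings.
as_shot = render(raw, (1.0, 1.0, 1.0))
warmer = render(raw, (1.2, 1.0, 0.8))
```

The data set is identical in both calls; only the mapping changes. Nothing in the readings themselves tells us which set of gains is the right one.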

Had I chosen to over-ride the camera's automatic settings, the data set would have been different. If I'd used a different camera, it would have sampled the wave front differently. While it may be a little difficult to keep this stuff straight in one's mind, there's nothing deeply mysterious going on here. In particular, I'm not gearing up for an assault on Western metaphysics by arguing that it's all subjective. It isn't. But color is.

In the case of light, cameras, and image processing, the physics is well known - how light travels, interacts with surfaces, interacts with sensors or film, and so forth. Similarly, perceptual psychologists and neuro-psychologists know a great deal about color vision. My own views on color perception have been strongly influenced by Edwin Land's retinex (retina + cortex) theory, though I don't believe that it is a consensus view. For all I know, there may not be a consensus view on how human color vision works, but no one believes there is a direct mapping between wavelength and color. What we sense as color emerges from sophisticated interactions between eye, brain, and the external world.

Let's muddle on. Look at the sun in either image. It appears as though the sun's rays were boring a hole through the buildings in the background and a tree in the mid-ground. That didn't actually happen, of course, but that's what we see in the images; there's a spot where we know there's part of a building and part of the tree, but we can't see either, just sunlight. That's an artifact of the properties of the camera, its optics and sensor. I might have been able to adjust the camera's properties so that that building fragment and those branches didn't disappear, but the rest of the image would have been very dark.

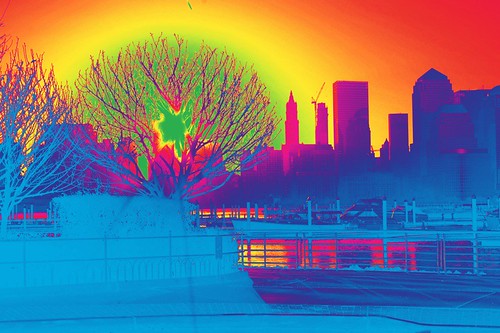

Look at this image, which I derived from the color-adjusted image by equalizing it:

Figure 3: Equalized image

Here's what Photoshop's Help file says about the equalize command:

The Equalize command redistributes the brightness values of the pixels in an image so that they more evenly represent the entire range of brightness levels. Equalize remaps pixel values in the composite image so that the brightest value represents white, the darkest value represents black, and intermediate values are evenly distributed throughout the grayscale.

The effect on the image is most obvious in the areas that had been darkest, such as the bushes beneath and around the tree. On the whole, this image is more intelligible than that of Figure 2. But it seems less faithful to what I actually saw that morning. As for what I actually saw, that's complicated and is best left alone.
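For readers curious about what "redistributing the brightness values" amounts to, here is a minimal sketch of textbook histogram equalization in Python. I don't know how Photoshop implements its Equalize command, so this should be read as an illustration of the general idea, not as Adobe's algorithm. The function name `equalize` and the sample values are hypothetical, and the image is assumed to be a flat list of integer brightness values from 0 to 255.

```python
def equalize(pixels, levels=256):
    """Histogram-equalize a flat list of integer brightness values (0..levels-1)."""
    if not pixels:
        return []

    # Count how many pixels sit at each brightness level.
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    # Running total: how many pixels are at or below each level.
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)

    cdf_min = next(c for c in cdf if c > 0)  # count at the darkest level present
    denom = len(pixels) - cdf_min
    if denom == 0:
        # Only one brightness level is present; there is nothing to spread out.
        return list(pixels)

    # Build a lookup table: darkest level present -> 0, brightest -> levels - 1,
    # with the rest spaced by cumulative pixel count (integer rounding).
    top = levels - 1
    lut = [max(0, ((c - cdf_min) * top + denom // 2) // denom) for c in cdf]
    return [lut[p] for p in pixels]


# A hypothetical dark patch: all values crowded between 10 and 16.
dark_patch = [10, 10, 12, 12, 14, 14, 16, 16]
stretched = equalize(dark_patch)  # [0, 0, 85, 85, 170, 170, 255, 255]
```

Values that were nearly indistinguishable in the dark patch end up spread across the whole range, which is why the bushes become legible. It is also why the result can look less like the scene as seen: the spacing of the output reflects how many pixels share a value, not how bright anything was.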

My point in all this is simply that we cannot understand something as simple as color as being an objective property of an object or a scene. What's objective is wavelength. Just how wavelength becomes color depends on the details of the relevant system.

We have well-developed methods for creating objective knowledge about light and how it interacts with the world. Much of that knowledge is taken into account in the design of digital cameras and in image processing software. The same cannot be said for language or for literary texts. While we know a great deal about how the mind-brain deals with the physical signals of language - spoken words, written words, signed (gestured) words - the processes whereby meaning is constructed over those words are still deeply mysterious.

Yet if such a simple phenomenon as color is subjective, then so must the meaning of texts be subjective. For it too arises only in the interaction between the external world, in the form of a text, and the mind-brain. What will it take to create objective knowledge about such interactions?

ADDENDUM: Two comments from the discussion over at The Valve:

John Holbo: Bill, I think there’s a basic problem with your conceptual approach to ‘subjectivity’. You write: “Things are subjective in that they can be apprehended only by subjects.” But this will not do. Nothing can be apprehended by anything but a subject. Triangles, numbers, objects, quarks, rabbits, colors, light - all can be apprehended only by subjects. After all, if anything else apprehended these things, that fact would constitute the apprehender as a subject of some sort.

BB: Right John, it’s not apprehension or perception, it’s existence. Wavelength (and quarks and rabbits ...) exists independently of whether or not light is perceived; color does not.
