“To study the Way is to study the self. To study the self is to forget the self. To forget the self is to be enlightened by all things. To be enlightened by all things is to remove the barriers between one's self and others.”

Monday, November 30, 2015

John Collins to judge 6th annual 3QD philosophy prize

We are very honored and pleased to announce that John Collins has agreed to be the final judge for our 6th annual prize for the best blog and online-only writing in the category of philosophy. Details of the previous five philosophy (and other) prizes can be seen on our prize page.

John Collins has a B.A. Hons. in Pure Mathematics and Philosophy from the University of Sydney (1982) and a Ph.D. from Princeton University (1991) under the supervision of David Lewis. He is an Associate Professor and the Director of Graduate Studies in the Department of Philosophy at Columbia University and an editor of The Journal of Philosophy. He works in decision theory, epistemology, and metaphysics. With Laurie Paul and Ned Hall, Collins co-edited Causation and Counterfactuals (MIT Press 2004). His most recent publications are “Decision Theory After Lewis” in Schaffer and Loewer (eds.), Blackwell Companion to David Lewis (2015), “Neophobia” in Res Philosophica (2015), and a review of Lara Buchak's Risk and Rationality for the Australasian Journal of Philosophy (2015). Collins is currently at work on a pair of papers on (so-called) Causal Decision Theory: “What is the Significance of Newcomb's Problem?” and “Causal Decision Theory and Quasi-Transitivity.”

As usual, this is the way it will work: the nominating period is now open. There will then be a round of voting by our readers which will narrow down the entries to the top twenty semi-finalists. After this, we will take these top twenty voted-for nominees, and the editors of 3 Quarks Daily will select six finalists from these, plus they may also add up to three wildcard entries of their own choosing. The three winners will be chosen from these by Dr. Collins.

The first place award, called the "Top Quark," will include a cash prize of 500 dollars; the second place prize, the "Strange Quark," will include a cash prize of 200 dollars; and the third place winner will get the honor of winning the "Charm Quark," along with a 100 dollar prize.

The schedule and rules:

November 30, 2015:

- The nominations are opened. Please nominate your favorite blog entry by placing the URL for the blog post (the permalink) in the comments section of this post. You may also add a brief comment describing the entry and saying why you think it should win. Do NOT nominate a whole blog, just one individual blog post.

- Blog posts longer than 4,000 words are strongly discouraged, but we might make an exception if there is something truly extraordinary.

- Each person can only nominate one blog post.

- Entries must be in English.

- The editors of 3QD reserve the right to reject entries that we feel are not appropriate.

- The blog entry may not be more than a year old. In other words, it must have been first published on or after November 30, 2014.

- You may also nominate your own entry from your own or a group blog (and we encourage you to).

- Guest columnists at 3 Quarks Daily are also eligible to be nominated, and may also nominate themselves if they wish.

- Nominations are limited to the first 100 entries.

- Prize money must be claimed within a month of the announcement of winners.

December 9, 2015

- The public voting will be opened.

December 14, 2015

- Public voting ends at 11:59 PM (NYC time).

December 15, 2015

- The semifinalists are announced.

December 16, 2015

- The finalists are announced.

December 28, 2015

- The winners are announced.

Sunday, November 29, 2015

Form is Hard to See, Even in Sentences*

Though, unfortunately, it is easy to blather about. And that’s what literary critics mostly do, talk around it, but never actually examine it.

To some extent I think that the interpretive mindset renders formal features invisible. It’s a professional blindness. But even without that mindset firmly bolted on, we need help in seeing formal features. It’s not enough to have an inquiring mind and a pure heart. Not only do you have to forget about interpreting the text, you’ve got to objectify the text. That requires an intellectual action of some kind.

I figure I got two things from Lévi-Strauss [2]: 1) permission to objectify the text, and 2) some tools to achieve it. The first is where critics balked. As for the second, I’m thinking of feature tables, quasi-formal equations and diagrams, and the search for binary opposition. The last is the only thing that stuck in literary criticism, but objectification gives it a different valence.

A few years ago Mark Liberman made some remarks on linguistic form that are germane [1]. Liberman isn’t a literary critic; he’s a linguist. He’s interested not in the form of literary texts but in the form of sentences, and in the difficulty students have in learning to analyze it.

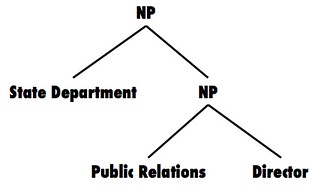

I've noticed over the years that a surprisingly large fraction of smart undergraduate students have a surprising amount of trouble with what seems to me like a spectacularly simple-minded idea: the simple parallelism between form and meaning that linguists generally call "recursive compositionality", and compiler writers call "syntax-directed translation".

A trivial example of this would be the relation between form and meaning in arithmetic expressions: thus in evaluating (3+2)*5, you first add 3 and 2, and then multiply the result by 5; whereas in evaluating 3+(2*5), you first multiply 2 and 5, and then add 3 to the result. Similarly, in evaluating English complex nominals, the phrase stone traffic barrier normally means a traffic barrier made out of stone, not a barrier for stone traffic, and thus its meaning implies the structure (stone (traffic barrier)). A plausible way to think about this is that you first create the phrase traffic barrier, and the associated concept, and then combine that — structurally and semantically — with stone. In contrast, the phrase steel bar prices would most plausibly refer to the prices of steel bars, and thus implies the structure ((steel bar) prices).

There are many formalisms for representing and relating linguistic form and meaning, but all of them involve some variant of this principle. It seems to me that understanding this simple idea is a necessary pre-condition for being able to do any sort of linguistic analysis above the level of morphemes and words. But when I first started teaching undergraduate linguistics, I learned that just explaining the idea in a lecture is not nearly enough. Without practice and feedback, a third to a half of the class will miss a generously-graded exam question requiring them to use parentheses, brackets, or trees to indicate the structure of a simple phrase like "State Department Public Relations Director".

In fact, even a homework assignment with feedback is not always enough, even with a warning that the next exam will include such a question. For some reason that I don't understand, this simple analytic idea is surprisingly hard for some people to grasp.
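Liberman's arithmetic example can be made concrete in a few lines. What follows is only a sketch of the principle, not anything from Liberman's post: the nested-tuple representation and the function name `evaluate` are my own assumptions. The point is that the value of each node is computed solely from the values of its immediate parts, so the bracketing alone decides the result.

```python
from typing import Union

# Assumed representation: a tree is either a number (a leaf)
# or a tuple (operator, left_subtree, right_subtree).
Tree = Union[int, tuple]


def evaluate(tree: Tree) -> int:
    """Evaluate an arithmetic tree compositionally: each node's value
    depends only on the values of its two children and its operator."""
    if isinstance(tree, int):
        return tree
    op, left, right = tree
    a, b = evaluate(left), evaluate(right)
    if op == "+":
        return a + b
    if op == "*":
        return a * b
    raise ValueError(f"unknown operator: {op!r}")


# (3+2)*5: add 3 and 2 first, then multiply by 5
print(evaluate(("*", ("+", 3, 2), 5)))  # → 25
# 3+(2*5): multiply 2 and 5 first, then add 3
print(evaluate(("+", 3, ("*", 2, 5))))  # → 13
```

Same numbers, same operators, different structure, different meaning.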

So, “State Department Public Relations Director”: ((State Department) ((Public Relations) Director)).

I'd say that anyone with a serious interest in describing the structure of literary texts, or movies for that matter, has to be thoroughly familiar with this notion. And Liberman is correct: it's not enough simply to see the concept explained and demonstrated. You have to work through examples yourself.
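For anyone who wants to work through such examples with a machine, here is a minimal sketch, assuming each phrase is written as a nested pair (modifier, head). The representation and the function names `bracket` and `head_word` are hypothetical, chosen for illustration. `bracket` prints one pair of parentheses per constituent; `head_word` follows the right-hand branch down, on the standard assumption that English noun compounds are right-headed (a Public Relations Director is a kind of director).

```python
from typing import Union

# Assumed representation: a phrase is a single word (str)
# or a pair (modifier, head), each of which is itself a phrase.
Phrase = Union[str, tuple]


def bracket(p: Phrase) -> str:
    """Render a phrase with one pair of parentheses per constituent."""
    if isinstance(p, str):
        return p
    modifier, head = p
    return f"({bracket(modifier)} {bracket(head)})"


def head_word(p: Phrase) -> str:
    """Follow the head (right-hand) branch down to a single word."""
    return p if isinstance(p, str) else head_word(p[1])


director = (("State", "Department"), (("Public", "Relations"), "Director"))
print(bracket(director))    # → ((State Department) ((Public Relations) Director))
print(head_word(director))  # → Director

# Liberman's two contrasting compounds
print(bracket(("stone", ("traffic", "barrier"))))  # → (stone (traffic barrier))
print(bracket((("steel", "bar"), "prices")))       # → ((steel bar) prices)
```

Note that the program doesn't decide the structure; you have to supply it. That decision is exactly the analytic step Liberman's students find hard.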

[1] Mark Liberman. Two Brews. Language Log. Accessed Nov. 29, 2015. URL: http://languagelog.ldc.upenn.edu/nll/?p=2100

[2] William Benzon. Beyond Lévi-Strauss on Myth: Objectification, Computation, and Cognition. Working Paper. February 2015. 30 pp. URL: https://www.academia.edu/10541585/Beyond_Lévi-Strauss_on_Myth_Objectification_Computation_and_Cognition

*But really, that’s no excuse.

Friday, November 27, 2015

How Caproni is Staged in The Wind Rises

After my first time through The Wind Rises I had the impression that it alternated between dream sequences and live-action sequences. After all, it opens with a dream sequence [1], which is followed soon after by another, this time one featuring Gianni Caproni. We then get two more Caproni sequences and then one at the end. So that’s five dream sequences, no?

No. One of the Caproni sequences isn’t a dream sequence, and of the other three, yes, we can call two of them dream sequences, but they differ from one another in significant ways. As for the last one, the one that ends the film, it’s not clear what it is. Though much of it takes place in the same green meadow as the two dream sequences, Miyazaki doesn’t mark it as a dream sequence. He doesn’t mark it at all. He just cuts to it.

First Caproni dream: Aeronautical Engineer

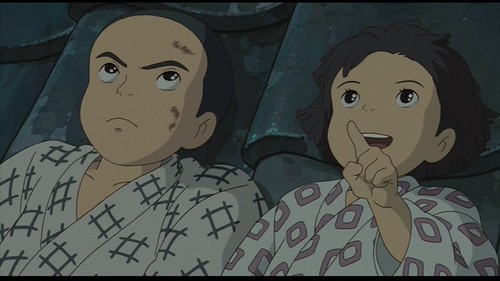

The first Caproni dream sequence, which is the second dream sequence in the film, begins with Horikoshi on the roof of his house looking up at the sky. He’s joined by his sister, who wonders why he isn’t wearing glasses. He says he’s heard that you can cure your vision by staring intently at the stars.

00:07:50

As they’re looking at what’s clearly a nighttime sky we see this, airplanes flying the colors of Italy in broad daylight:

00:08:00

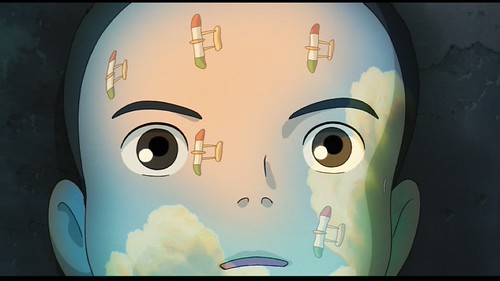

And then we have one of the loveliest images in the film: that previous sky projected on Horikoshi’s face.

00:08:05

Thursday, November 26, 2015

What a strange Thanksgiving...

...a post about torture and another about geisha.

Oh well, that's not all I've been up to. I've been working on another post about The Wind Rises and I've been out taking photos. Now I'm going to go to the Malibu Diner and have a Thanksgiving meal, of some kind. Maybe it will be turkey, maybe not. (I've already eaten quite a bit of turkey this week.)

Alas, the Malibu is closed. So I'm left to my own devices. Which, of course, is OK.

* * * * *


Geisha

Long ago I learned that geisha are not prostitutes. That men would pay good sums of money for the company of women who are not prostitutes, that does not compute. Though thinking about this and that, it's not so strange. Anyhow, here's a video about geisha from the Japanology series:

Administering torture to others does "moral injury" to the torturer

From the concluding paragraphs of Shane O'Mara, “The interrogator's soul,” in Aeon:

And significantly, the most empathic interrogators are also the most vulnerable to terrible psychic damage after the fact. In his book Pay Any Price (2014), The New York Times Magazine correspondent James Risen describes torturers as ‘shell-shocked, dehumanised. They are covered in shame and guilt… They are suffering moral injury’.

A natural question is why this moral and psychic injury arises in soldiers who, after all, have the job of killing others. One response might be that the training, ethos and honour code of the soldier is to kill those who might kill him. By contrast, a deliberate assault upon the defenceless (as occurs during torture) violates everything that a soldier is ordinarily called upon to do. Egregious violations of such rules and expectations give rise to expressions of disgust, perhaps in this case, principally directed at the self.

This might explain why, when torture is institutionalised, it becomes the possession of a self-regarding, self-supporting, self-perpetuating and self-selecting group, housed in secret ministries and secret police forces. Under these conditions, social supports and rewards are available to buffer the extremes of behaviour that emerge, and the acts are perpetrated away from public view. When torture happens in a democracy, there is no secret society of fellow torturers from whom to draw succor, social support, and reward. Engaging in physical and emotional assaults upon the defenceless and eliciting worthless confessions and dubious intelligence is a degrading, humiliating, and pointless experience. The units of psychological distance here can be measured down the chain of command, from the decision to torture being a ‘no-brainer’ for those at the apex to ‘losing your soul’ for those on the ground.

Compare this with these remarks on the difficulties mass killers face when confronting victims who never did them any harm and are not violent toward them:

Any kind of violent confrontation is emotionally difficult; the situation of facing another person whom one wants to harm produces confrontational tension/fear (ct/f); and its effect most of the time is to make violence abort, or to become inaccurate and ineffective. The usual micro-sociological patterns that allow violence to succeed are not present in a rampage killing; group support does not exist, because one or two killers confront a much larger crowd: in contrast, most violence in riots takes place in little clumps where the attackers have an advantage of around 6-to-1.

That's by sociologist Randall Collins, whom I'm quoting in a post, “Rampage Killers, Suicide Bombers, and the Difficulty of Killing People Face-to-Face.”

Wednesday, November 25, 2015

Tuesday, November 24, 2015

Two Friends: Wordsworth and Coleridge

Vivian Gornick reviews three books about friendship among poets. These opening paragraphs are about friendship in general:

In the centuries when most marriages were contracted out of economic and social considerations, friendship was written about with the kind of emotional extravagance that we, in our own time, have reserved for an ideal of romantic attachment. Montaigne, for instance, writing in the sixteenth century of his long dead, still mourned-for friend, Étienne de La Boétie, tells us that they were "one soul in two bodies." There was nothing his friend did, Montaigne says, not an act performed or a word spoken, for which "I could not immediately find the motive." Between the two young men communion had achieved perfection. This shared soul "pulled together in such unison," each half regarding the other with "such ardent affection" that "in this noble relationship, services and benefits, on which other friendships feed," were not taken into account. So great was the emotional benefit derived from the attachment that favors could neither be granted nor received. Privilege, for each of the friends, resided in being allowed to love, rather than in being loved.

This is language that Montaigne does not apply to his feeling for his wife or his children, his colleagues or his patrons—all relationships that he considers inferior to a friendship that develops not out of sensual need or worldly obligation, but out of the joy one experiences when the spirit is fed; for only then is one closer to God than to the beasts. The essence of true friendship for Montaigne is that in its presence "the soul grows refined."

One book under review is about Coleridge and Wordsworth. Here's a bit of what she says about them:

In June 1797, some eighteen months after their initial meeting, Coleridge made a day trip to the Wordsworth home in the West Country, and stayed nearly a month. The two men could not get enough of the conversation, and it was, then and there, decided that the Wordsworths—William and his sister Dorothy—would move to the district in which Coleridge was living.

There were crucial differences between them that, from the start, were self-evident. Wordsworth—grave, thin-skinned, self-protective—was, even then, steadied by a remarkable inner conviction of his own coming greatness as a poet. Coleridge—brilliant, explosive, self-doubting to the point of instability—was already into opium. No matter. A new world, a new poetry, a new way of being was forming itself and, at that moment, each, feeling the newness at work in himself, saw proof of its existence reflected in the very being of the other. In that reflection, each saw his own best self confirmed. Having been passionately enamored of the revolution in France and then passionately horrified by its subsequent murderousness, both were now convinced that it was poetry alone—their poetry—that would restore inner liberty to men and women everywhere. When they were together, Milton walked at their side.

In the year and a half that followed, Wordsworth and Coleridge met almost daily, and were frequently together for weeks at a time without parting at all, Coleridge simply never going home. They talked, they read, they walked: nonstop. There developed between them a pattern of shared work in which each wrote under the inspirational excitement of the other's instantaneous feedback. Ideas and images passed back and forth between them so freely that it made them giddy to think that, very nearly, each of their poems was being written together. "This," as Adam Sisman tells us in his extremely serviceable biography, "was their annus mirabilis, when each man's talent would ripen into maturity, and bear marvellous fruit . . . each [writing] some of his finest poetry." Out of this extraordinary amalgam of shared thought and emotion, of course, came the 1798 publication of Lyrical Ballads, the seminal work of English literature's Romantic movement.

Two years later the rapture was spent, and the friendship between Coleridge and Wordsworth was essentially over. Complicated bonds of work and family kept the men orbiting around one another for some years, and every now and then the intimacy seemed to flare up anew, but their time of magical communion was over, never to be recaptured or replaced. Within a decade they had stopped meeting; within another they were not speaking kindly of one another.

She also talks about friendships among Frank O'Hara, John Ashbery, Kenneth Koch, and James Schuyler (covered in one book) and about Allen Ginsberg and the Beats (the third book). She frames the review with an account of one of her own friendships.

Monday, November 23, 2015

How NOT to run a major software project

The Atlantic has an interesting article about signals and control in NYC's labyrinthine subway system. Well into the article we find these paragraphs about a major software system the MTA commissioned back in the 1990s:

The MTA thought that they could buy a software solution more or less off the shelf, when in fact the city’s vast signaling system demanded careful dissection and reams of custom code. But the two sides didn’t work together. The MTA thought the contractor should have the technical expertise to figure it out on their own. They didn’t. The contractor’s signal engineer gave their software developers a one-size-fits-all description of New York’s interlockings [electromechanical controls at switch points], and the software they wrote on the basis of that description—lacking, as it did, essential details about each interlocking—didn’t work.

Gaffes like this weren’t caught early in part because the MTA “remained unconvinced of the usefulness of what seemed to them an endless review process in the early requirements and design stages. They had the perception that this activity was holding up their job.” They avoided visiting the contractor’s office, which, to make things worse, was overseas. In all, they made one trip. “MTA did not feel it was necessary to closely monitor and audit the contractor’s software-development progress.”

The list goes on: Software prototypes were reviewed exclusively in PowerPoint, leading to interfaces that were hard to use. Instead of bringing on outside experts to oversee construction, the MTA tried to use its own people, who didn’t know how to work with the new equipment. Testing schedules kept falling apart, causing delays. The training documentation provided by the contractor was so vague as to be unusable.

You get the impression that the two groups [the MTA and the software contractor] simply didn’t respect each other. Instead of collaborating, they lobbed work over a wall. The hope on each side, one gathers, was that the other side would figure it out.

Yikes! How much gray hair was created by this project?

Commensurability, Meaning, and Digital Criticism

What do I mean by “commensurate”? Well…psychoanalytic theory is not commensurate with language. Neither is semiotics. Nor is deconstruction. But digital criticism is, sorta. Cognitive criticism as currently practiced is not commensurate with language either.

Let me explain.

In the early 1950s the United States Department of Defense decided to sponsor research in machine translation; they wanted to use computers to translate technical documents in Russian into English. The initial idea/hope was that this would be a fairly straightforward process. You take a sentence in the source language, Russian, identify the appropriate English words for each word in the source text, and then add proper English syntax and voilà! your Russian sentence is translated into English.
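The first step of that naive recipe, word-for-word dictionary lookup, is easy to sketch, and even a three-word sentence shows why the "add proper English syntax" step is where the trouble starts. This is a toy under stated assumptions: the tiny lexicon and the function name `word_for_word` are hypothetical, invented for illustration, and the sketch is not modeled on any actual 1950s system.

```python
# Hypothetical three-word Russian-to-English lexicon. A real system would
# need vast numbers of entries plus rules for inflection and word order.
LEXICON = {
    "я": "I",
    "читаю": "read",
    "книгу": "book",
}


def word_for_word(sentence: str) -> str:
    """Replace each source word with its dictionary equivalent.
    Words missing from the lexicon are passed through in angle brackets."""
    return " ".join(LEXICON.get(w, f"<{w}>") for w in sentence.lower().split())


print(word_for_word("Я читаю книгу"))  # → I read book
```

A fluent translation would be "I am reading a book." Russian has no articles, so nothing in the source tells the lookup step to supply "a," and the verb's aspect and tense have to be worked out too. Neither bit of knowledge lives in the dictionary.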

Alas, it's not so simple. But researchers kept plugging away at it until the mid-1960s when, tired of waiting for practical results, the government pulled the plug on funding. That was the end of that.

Almost. The field renamed itself computational linguistics and continued research, making slow but steady progress. By the middle of the 1970s government funding began picking up and the DoD sponsored an ambitious project in speech understanding. The goal of the project was for the computer to understand “over 90% of a set of naturally spoken sentences composed from a 1000‐word lexicon” [1]. As I recall – I read technical reports from the project as they were issued – the system was hooked to a database of information about warships. So that 1000-word lexicon was about warships. Those spoken sentences were in the form of questions, and the system demonstrated its understanding by producing a reasonable answer to the question.

The knowledge embodied in those systems – four research groups worked on the project for five years – is commensurate with language in the perhaps peculiar sense that I’ve got in mind. In order for those systems to answer questions about naval ships they had to be able to parse speech sounds into phonemes and morphemes, identify the syntactic relations between those morphemes, map the result into lexical semantics and from there hook into the database. And then the process had to run in reverse to produce an answer. To be sure, a 1000-word vocabulary in a strictly limited domain is a severe restriction. But without that restriction, the systems couldn’t function at all.
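To give a feel for what "commensurate" means here, the chain of steps can be caricatured in a few dozen lines. This is a sketch under heavy assumptions, not a description of the actual 1970s systems: it starts from typed text, so the entire phonological stage is skipped; the ship names, their data, the lexicon, and the one-pattern grammar are all invented for illustration. What it does preserve is the shape of the thing: every step from input to output is explicit, with no human in between, and anything outside the lexicon or grammar simply fails.

```python
# Invented toy database standing in for the naval database.
SHIPS = {
    "kestrel": {"class": "destroyer", "speed": "30 knots"},
    "heron": {"class": "cruiser", "speed": "32 knots"},
}

# Invented lexicon: every word the system knows, with its category.
LEXICON = {
    "what": "WH", "is": "BE", "the": "DET", "of": "PREP",
    "class": "ATTR", "speed": "ATTR",
    "kestrel": "SHIP", "heron": "SHIP",
}


def tokenize(text: str) -> list[str]:
    return text.lower().rstrip("?").split()


def tag(tokens: list[str]) -> list[tuple[str, str]]:
    """Lexical lookup: any word outside the lexicon blocks understanding."""
    unknown = [w for w in tokens if w not in LEXICON]
    if unknown:
        raise ValueError(f"not in lexicon: {unknown}")
    return [(w, LEXICON[w]) for w in tokens]


def parse(tagged: list[tuple[str, str]]) -> dict[str, str]:
    """Syntax: accept exactly one pattern, WH BE DET ATTR PREP SHIP,
    and map it to a database query (which attribute of which ship)."""
    categories = [c for _, c in tagged]
    if categories != ["WH", "BE", "DET", "ATTR", "PREP", "SHIP"]:
        raise ValueError("no parse")
    return {"attribute": tagged[3][0], "ship": tagged[5][0]}


def answer(question: str) -> str:
    try:
        query = parse(tag(tokenize(question)))
    except ValueError:
        return "Sorry, I don't understand the question."
    value = SHIPS[query["ship"]][query["attribute"]]
    # Generation: run the process in reverse to produce an English sentence.
    return f"The {query['attribute']} of {query['ship'].capitalize()} is {value}."


print(answer("What is the speed of Heron?"))  # → The speed of Heron is 32 knots.
print(answer("How fast is Heron?"))  # → Sorry, I don't understand the question.
```

The second question is perfectly good English, but "how" and "fast" aren't in the lexicon. That is the severe restriction in miniature.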

These days, of course, we have systems with much more impressive performance. IBM’s Watson is one example; Apple’s Siri is another. But let’s set those aside for the moment, for they’re based on a somewhat different technology than that used in those old systems from the Jurassic era of computational linguistics (aka natural language processing).

Those old systems were based on explicit theories about how the ear decoded speech sounds, how syntax worked, and semantics too. Taken together those theories supported a system that could take natural language as input and produce appropriate output without any human intervention between the input and the output. You can’t do that with psychoanalysis, semiotics, deconstruction, or any other theory or methodology employed by literary critics in the interpretation of texts. It’s in that perhaps peculiar sense that the theories with which we approach our work are not commensurate with the raw material, language, of the objects we study, literary texts.

About all we can say about the process through which meaning is conveyed between people by a system of physical signs is that it’s complex, we don’t understand it, and it is not always 100% reliable. The people who designed those old systems have a lot more to say about that process, even if all that knowledge has a somewhat limited range of application. They know something about language that we don’t. The fact that what they know isn’t adequate to the problems we face in examining literary texts should not obscure the fact that they really do know something about language and mind that we don’t.

Sunday, November 22, 2015

Personal Observations on Entering an Age of Computing Machines

Another working paper, link, abstract, and introduction below.

Academia.edu: https://www.academia.edu/18791499/Personal_Observations_on_Entering_an_Age_of_Computing_Machines


* * * * *

Abstract: This recounts my history with personal computers, from a Z80 machine in 1981 through my MacBook Pro (with Retina screen) today. I discuss the machines themselves, how I use them, the man-machine interface, and how computing has changed the way I work and afforded me new possibilities. Because I work as an independent scholar, the Internet has given me an intellectual life that would otherwise have been impossible. I have easy access to reference materials, to other scholars, and to publishing opportunities that aren’t encumbered by old modes of thought or by the limitations of print publication.

CONTENTS

Computers and Me: What We Have and Haven’t Done 2

Academic Publishing, A Personal History 1: An Outside Move 6

Academic Publishing, A Personal History 2: To the Blogosphere and Beyond 12

I’m Lost in the Web & Digital Humanities is Sprouting All Over 21

The Diary of a Man and His Machines, Part 1: The 20th Century 24

The Diary of a Man and His Machines, Part 2: How’s this Stuff Organized on My Machines? 29

The Diary of a Man and His Machines, Part 3: The 21st Century 32

Have The Internets Rotted My Brain and Wrecked My Mind? 41

Finger Knowledge, A Note About Me and My Machines 50

My Computers and Me: What We Have and Haven’t Done

When I was born a few years after the end of World War II, “computer” likely referred to a person, that person was more likely than not a woman, and she performed calculations for a living. Yes, there were computing machines at the time – and I don’t mean mechanical calculators, but electronic computing machines – but they were few and far between. They were also very large and expensive.

Electronic Brains

And they were sometimes called “electronic brains,” a usage that, according to this Ngram chart, peaked around 1960:

By that time they were common enough that they would show up in the news in various ways. One of those ways was in news and propaganda about the Cold War. Who had the best computers, us or the Russians?

Thus I remember reading an article, either in Mechanix Illustrated or Popular Mechanics – I read both assiduously – about how Russian technology was inferior to American. It had a number of photographs, one of a Sperry Univac computer – or maybe it was just Univac, but it was one of those brands that no longer exists – and another, rather grainy one, of a Russian computer, taken from a Russian magazine. The Russian photo looked like a slightly doctored version of the Sperry Univac photo. That’s how it was back in the days of electronic brains.

When I went to college at Johns Hopkins in the late 1960s one of the minor curiosities in the freshman dorms was an image of a naked woman picked out in X's and O's on computer print-out paper. People, me among them, actually went to some guy’s dorm room to see the image and to see the deck of punch cards that, when run through the computer, would cause that image to be printed out. Who’d have thought, a picture of a naked woman – well sorta, that particular picture wasn’t very exciting, it was the idea of the thing – coming out of a computer. These days, of course, pictures of naked women, men too, circulate through computers around the world.

Two years after that, my junior year, I took a course in computer programming, one of the first in the nation. As things worked out, I never did much programming, though some of my best friends make their living at the craft. But I’ve been interested in computers and computing in one way or another for a bit over half a century and have invested a lot of time and energy in the idea that the human mind is computational in some fundamental sense.

Intellectual Appliances

But that’s not what this collection of posts is about. It’s about something a bit less exotic. The first two posts are about how I’ve used my personal computers, hooked to the Internet, as a vehicle for interacting with others and for publishing my ideas. Two more recent posts give a capsule history of the computers I’ve owned, from a NorthStar Horizon in the early 1980s to the MacBook Pro (with Retina screen) I’m using to write (and post) this introduction. In between these two pairs of posts is a somewhat shorter post about how using a computer has affected my style of working with words.

Saturday, November 21, 2015

Identity, Terrorism, and the Nation State

I’ve got one thought about terrorism, and it has to do with identity. If you live in a nation-state such as the United States, or any of the states that have dominated international affairs since the 19th century, you have been indoctrinated as a citizen of that nation. That citizenship is a central part of your identity. And it is through that identification that you see your position in world history. Your nation’s position in history is your position in history.

I am a citizen of a large, rich, technologically advanced country, the remaining superpower, the United States of America. I may not like some of the things this superpower does in my name, I may have complex and ambivalent feelings about this superpower, but the fact that I am a citizen of the USofA is part of my identity and it's a way I think of myself in the world and in history.

What if you’re living in a nation-state that doesn’t have a very prominent place in world affairs, but you’ve got a TV and radio and through them have some sense of the larger world? You know that there’s a world beyond your village, or town, or your neighborhood. How do you relate to that larger world?

What about someone living in, say, Lagos, Nigeria? That's a very different country. In the context of Africa, Nigeria may be rich, but a lot of that oil money ends up in a few hands. It's got the third largest film industry in the world (as measured by titles per year), though I'm not sure how many Nigerians know that, or what they think of it. I'm guessing that people who work in Nollywood (as it's called) know it quite well and are proud of that. But not every Nigerian lives in Lagos. Some Nigerians are aware of the world at large; they've got TV and whatever. Some are not. How do they think of themselves in relation to world history?

At the moment I'm deep in the analysis of Hayao Miyazaki's last film, The Wind Rises. It's set in Japan before WWII. One of the motifs is that Japan is a poor backward country. But the protagonist is Jiro Horikoshi, who is a real person, though his life is highly fictionalized in this film. Horikoshi was an aeronautical engineer and he designed the A6M Zero, which was, on, say, December 7, 1941, the most sophisticated fighter aircraft in the world. Hayao Miyazaki, born in 1941, is a pacifist; his father manufactured tail assemblies for the Zero. H.M. loves planes and is immensely proud of the Zero and the pilots who flew them. The interplay of identities and commitments in this film is bewildering and wonderful.

Singing and Social bonding

Daniel Weinstein, Jacques Launay, Eiluned Pearce, Robin I.M. Dunbar, Lauren Stewart. Singing and social bonding: changes in connectivity and pain threshold as a function of group size. Evolution and Human Behavior. In press.

Abstract: Over our evolutionary history, humans have faced the problem of how to create and maintain social bonds in progressively larger groups compared to those of our primate ancestors. Evidence from historical and anthropological records suggests that group music-making might act as a mechanism by which this large-scale social bonding could occur. While previous research has shown effects of music making on social bonds in small group contexts, the question of whether this effect ‘scales up’ to larger groups is particularly important when considering the potential role of music for large-scale social bonding. The current study recruited individuals from a community choir that met in both small (n = 20–80) and large (a ‘megachoir’ combining individuals from the smaller subchoirs n = 232) group contexts. Participants gave self-report measures of social bonding and had pain threshold measurements taken (as a proxy for endorphin release) before and after 90 min of singing. Results showed that feelings of inclusion, connectivity, positive affect, and measures of endorphin release all increased across singing rehearsals and that the influence of group singing was comparable for pain thresholds in the large versus small group context. Levels of social closeness were found to be greater at pre- and post-levels for the small choir condition. However, the large choir condition experienced a greater change in social closeness as compared to the small condition. The finding that singing together fosters social closeness – even in large group contexts where individuals are not known to each other – is consistent with evolutionary accounts that emphasize the role of music in social bonding, particularly in the context of creating larger cohesive groups than other primates are able to manage.

Bruce Jackson on authenticity

Bruce Jackson does a number of things. For the purposes of this post let's say he's a folklorist and a photographer. I studied with him at SUNY Buffalo. This is an excerpt from an interview he gave to the Wall Street Journal a few years ago. It's about how he became an expert on prison lore.

The Wall Street Journal: How did you first begin to write about prisons?

In 1960, I went to graduate school in Indiana and was part of the folk scene there. They had a folklore program. They all knew the songs I knew and they could play and sing better. I decided I would go and find some songs they didn’t know. So I started going to prisons. I went to Indiana State Prison. I went to Missouri State Prison. I started recording those songs and realized I was full of s*** as a musician. Here I am this Jewish kid from Brooklyn singing black convict work songs. It was fine in New York, but when I got to Texas and I’m standing in an oak grove with a bunch of convicts, I felt like a very silly person. So I never performed again. But I recorded those songs and Harvard published a book of them called “Wake Up Dead Man.”

Friday, November 20, 2015

Cultural Evolution: Pop Music in America 1960-2010

Matthias Mauch, Robert M. MacCallum, Mark Levy, Armand M. Leroi. The evolution of popular music: USA 1960–2010. Royal Society Open Science 2: 150081 (2015). DOI: 10.1098/rsos.150081. Published 6 May 2015.

* * * * *

Abstract

In modern societies, cultural change seems ceaseless. The flux of fashion is especially obvious for popular music. While much has been written about the origin and evolution of pop, most claims about its history are anecdotal rather than scientific in nature. To rectify this, we investigate the US Billboard Hot 100 between 1960 and 2010. Using music information retrieval and text-mining tools, we analyse the musical properties of approximately 17 000 recordings that appeared in the charts and demonstrate quantitative trends in their harmonic and timbral properties. We then use these properties to produce an audio-based classification of musical styles and study the evolution of musical diversity and disparity, testing, and rejecting, several classical theories of cultural change. Finally, we investigate whether pop musical evolution has been gradual or punctuated. We show that, although pop music has evolved continuously, it did so with particular rapidity during three stylistic ‘revolutions’ around 1964, 1983 and 1991. We conclude by discussing how our study points the way to a quantitative science of cultural change.

2. Introduction

The history of popular music has long been debated by philosophers, sociologists, journalists, bloggers and pop stars [1–7]. Their accounts, though rich in vivid musical lore and aesthetic judgements, lack what scientists want: rigorous tests of clear hypotheses based on quantitative data and statistics. Economics-minded social scientists studying the history of music have done better, but they are less interested in music than the means by which it is marketed [8–15]. The contrast with evolutionary biology—a historical science rich in quantitative data and models—is striking, the more so because cultural and organismic variety are both considered to be the result of modification-by-descent processes [16–19]. Indeed, linguists and archaeologists, studying the evolution of languages and material culture, commonly apply the same tools that evolutionary biologists do when studying the evolution of species [20–25].

Until recently, the single greatest impediment to a scientific account of musical history has been a want of data. That has changed with the emergence of large, digitized, collections of audio recordings, musical scores and lyrics. Quantitative studies of musical evolution have quickly followed [26–30]. Here, we use a corpus of digitized music to investigate the history of American popular music. Drawing inspiration from studies of organic and cultural evolution, we view the history of pop music as a ‘fossil record’ and ask the kinds of questions that a palaeontologist might: has the variety of popular music increased or decreased over time? Is evolutionary change in popular music continuous or discontinuous? And, if it is discontinuous, when did the discontinuities occur?

To delimit our sample, we focused on songs that appeared in the US Billboard Hot 100 between 1960 and 2010. We obtained 30-s-long segments of 17 094 songs covering 86% of the Hot 100, with a small bias towards missing songs in the earlier years. Because our aim is to investigate the evolution of popular taste, we did not attempt to obtain a representative sample of all the songs that were released in the USA in that period of time, but just those that were most commercially successful.

Like previous studies of pop-music history [28,30], our study is based on features extracted from audio rather than from scores. However, where these early studies focused on technical aspects of audio such as loudness, vocabulary statistics and sequential complexity, we have attempted to identify musically meaningful features. To this end, we adopted an approach inspired by recent advances in text-mining (figure 1). We began by measuring our songs for a series of quantitative audio features, 12 descriptors of tonal content and 14 of timbre (electronic supplementary material, M2–3). These were then discretized into ‘words’ resulting in a harmonic lexicon (H-lexicon) of chord changes, and a timbral lexicon (T-lexicon) of timbre clusters (electronic supplementary material, M4). To relate the T-lexicon to semantic labels in plain English, we carried out expert annotations (electronic supplementary material, M5). The musical words from both lexica were then combined into 8+8=16 ‘topics’ using latent Dirichlet allocation (LDA). LDA is a hierarchical generative model of a text-like corpus, in which every document (here: song) is represented as a distribution over a number of topics, and every topic is represented as a distribution over all possible words (here: chord changes from the H-lexicon, and timbre clusters from the T-lexicon). We obtain the most likely model by means of probabilistic inference (electronic supplementary material, M6). Each song, then, is represented as a distribution over eight harmonic topics (H-topics) that capture classes of chord changes (e.g. ‘dominant-seventh chord changes') and eight timbral topics (T-topics) that capture particular timbres (e.g. ‘drums, aggressive, percussive’, ‘female voice, melodic, vocal’, derived from the expert annotations), with topic proportions q. These topic frequencies were the basis of our analyses.
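The paper's actual inference procedure is described in its supplementary material (M6) and isn't reproduced here. But since "hierarchical generative model" is doing a lot of work in that paragraph, here is a minimal Python sketch of the generative story LDA assumes, under loudly stated assumptions: the four chord-change "words" and the two topics below are made up for illustration, not taken from the paper. The paper's 8 + 8 setup would amount to something like this run separately over the H-lexicon and the T-lexicon, with eight topics each. Fitting the model, that is, inferring the topics and each song's proportions from real recordings, runs this story in reverse and is not shown.

```python
import random


def sample_dirichlet(alpha, rng):
    """Draw topic proportions from a Dirichlet(alpha) via normalized gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]


def sample_index(probs, rng):
    """Return index i with probability probs[i]."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


def generate_song(topics, vocab, alpha, n_words, rng):
    """Generate one 'song' (a bag of musical words) under LDA's generative story.

    topics  -- K lists, each a probability distribution over vocab
    alpha   -- Dirichlet prior over the K topics
    Returns (theta, words): the song's topic proportions and its words.
    """
    theta = sample_dirichlet(alpha, rng)   # per-song mixture of topics
    words = []
    for _ in range(n_words):
        z = sample_index(theta, rng)       # choose a topic for this word
        w = sample_index(topics[z], rng)   # choose a word from that topic
        words.append(vocab[w])
    return theta, words


# Hypothetical chord-change "words" and topics, for illustration only.
vocab = ["V7->I", "ii->V", "I->IV", "IV->I"]
topics = [
    [0.60, 0.30, 0.05, 0.05],  # a made-up "dominant-seventh" topic
    [0.05, 0.05, 0.45, 0.45],  # a made-up "I-IV back-and-forth" topic
]

rng = random.Random(1960)
theta, words = generate_song(topics, vocab, alpha=[0.5, 0.5], n_words=20, rng=rng)
print([round(p, 2) for p in theta])
print(words[:8])
```

Each song gets its own mix of topics, but the topics themselves are shared across the whole corpus. That shared vocabulary of topics is what lets the topic proportions serve as a common yardstick for comparing songs from 1960 with songs from 2010.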

Friday Fotos: Thursday Morning

I had breakfast yesterday at my favorite diner, the Malibu, on 14th Street in Hoboken. Here I'm sitting in my booth looking out at an apartment complex. The blue lights are inside the Malibu and you can see part of a frosted "M" on the window.

After breakfast I decided to take a stroll. So I went over a bridge to Weehawken, where I saw a variety of graffiti. This next photo is deceptive. At first glance it appears out of focus, but then if you look closely you can see tiny lines and spatters on the surface.

I've now touched ground in Weehawken and am heading back to Hoboken. I see a row of birches along the way.

Now I'm walking along the river, past an abandoned pier. Some are in worse shape than this one. Some are entirely gone. And some have been preserved and fixed up. Looks like the gulls and geese are migrating for the winter.

Finally, I tracked this small tug and barge moving a crane down river. The tall building in the center is One World Trade Center.

Problematic Identifications: The Wind Rises as a Japanese Film

When we say that a film is Japanese, what do we mean?

First of all, and most fundamentally, we mean that it is made by Japanese artisans in Japan. In that sense of course all of Miyazaki’s films are Japanese. But a number of them don’t appear to be Japanese. Castle in the Sky, Kiki’s Delivery Service, Porco Rosso, and Howl’s Moving Castle are all set in Europe and have European characters. The language, of course, is Japanese, except in an English or French dub.

Then we have films like My Neighbor Totoro, Princess Mononoke, Spirited Away, and Ponyo, which are set in Japan and have visibly Japanese characters. The first three also draw on Japanese folklore and religion, and perhaps the last as well, though the story was inspired by a European fairy tale, “The Little Mermaid”. If you walk in on a showing of any of these films you’ll know it is Japanese regardless of the language on the sound track.

And then we have The Wind Rises, which is Japanese in those first two senses. But it is Japanese in a third sense, one that’s a bit tricky to characterize. What makes it Japanese in this third sense is that it is problematically entangled with Japanese imperialist aggression in the 20th century. This is what critic Inkoo Kang had in mind when she said it “is a film whose meaning and power vary so greatly in different cultural and geographical contexts that Miyazaki should have fought for it to never leave his homeland” [1]. But “culture” is not the right word, for it’s not culture that makes the film problematic. It is the institution of the nation-state and the identifications entailed by it that make the film problematic.

Here I’m making a distinction between culture and society which is often elided. By society we mean a group of people; by culture we mean a whole range of beliefs, practices, and attitudes held by people. But Japanese cultural practices exist all over the world, not just in Japan. It is not just that many societies have enclaves of Japanese emigrants who still speak Japanese and may live in Japanese ways, but that non-Japanese have adopted Japanese cultural practices as well, maybe not completely, not “through and through”, but to some extent. Nor is everyone who lives in Japan ethnically and culturally Japanese. Furthermore, there are lots of cultural practices in Japan that aren’t Japanese. Are the chemistry and physics taught in Japanese schools and practiced in Japanese research institutes and companies Japanese in any deep sense? What about baseball? Japanese have grown up playing baseball for well over a century and many think of it as a Japanese game. Is it, or isn’t it?

Which Japanese State?

The distinction between culture and society is a tricky one in practice, but then The Wind Rises is a tricky film. There’s nothing problematic about the fact that most of the characters are Japanese, or that most of the film is physically set in Japan. What makes the film problematic is its relation to the Japanese nation-state and through that to the international system of nation-states. The film paints a sympathetic portrait of a Japanese engineer, Jiro Horikoshi, who designed warplanes that were used by Japan in waging war in East Asia and in the Pacific. Japan lost the war in the Pacific to the United States (and its allies) and in consequence its governing institutions – those of the Empire of Japan (大日本帝國 Dai Nippon Teikoku) – were replaced by new ones imposed upon Japan in the constitution of 1947. Moreover, Japan was physically occupied and ruled by the Allies from 1945 to 1952. If you will, the Japanese nation is still the one that had emerged in the Meiji Restoration of 1868 [2], but the state is new.

Sorting this out is tricky. Miyazaki’s parents were born and reached adulthood under the imperial regime. While Miyazaki was born under that regime in 1941, he was so young that it would have had little direct effect on his sense of identity [3]. For all practical purposes Miyazaki himself is a citizen of the new regime, but this film is about his parents’ world and the state in which they lived the first part of their lives. What’s the relationship he proposes between these two regimes? Crudely put, Miyazaki admires the technology embodied in the A6M Zero, Horikoshi’s best-known design, but abhors the use to which that magnificent plane was put.

Thursday, November 19, 2015

Messenger

I really like this image. In part for its purely visual qualities. But also, and essentially, for what it depicts. You need both of them together. It's a freight car, and a rather battered one at that. Look at the layers of paint, the layers of age. This car works hard. But it also tells of men (perhaps women, but men most likely) who like design, and like their names. Egos floridly on display on a cross-country journey. Created under the discipline of "don't get caught or your ass is in jail." An odd macho sort of aesthetic. But real, if not ultimate. What does this car carry? Toilet tissue, flat-screen TVs? No doubt the load varies from trip to trip. As do the names, over time.

But that's not all. In the foreground, dried grass, and a glimpse of a (scuzzy) pond. Behind, a utility pole connecting to the local grid, which connects to the regional, which connects to the national. Grid. And then there's the sky. Odd to say it's the background. Compositionally, that's what it is. In reality, it's all over.

I like it that the grass colors echo in a lighter hue the tones painted on the side of the car. From dirty yellow to light beige-yellow. Against the blue-white sky.

Piaget, Reflective Abstraction and the Evolution of Literary Criticism

While Piaget is best known for investigating conceptual development in children, he also looked at conceptual development on the historical time scale. In both contexts he talked of reflective abstraction “in which the mechanisms of earlier ideas become objects manipulated by newer emerging ideas. In a series of studies published in the 1980s and 1990s the late David Hays and I developed similar ideas about the long-term cultural evolution of ideas” [1]. I think lots of intellectual disciplines are undergoing such a process and that it’s been going on for some time.

It’s certainly what I think is going on in literary studies. In America the discipline initially constituted itself from philology and literary history; that happened in the late 19th and first half of the 20th century. Then interpretive criticism moved to center stage after World War II and ran into problems in the 1960s: interpretation wasn’t producing reliable results from one scholar to another. What to do?

The discipline became intensely self-conscious and reflected on its own methods, and then sort of gave up. In the process a number of distinctions collapsed and practical criticism, critical theory, and theory of literature became one thing, with new historicism trotting alongside. That’s the story I told in the early part of this working paper, but without reference to Piaget.

What I’m wondering is if “form” is the name of a CONCEPTUAL MECHANISM in the old/current regime that is in the process of becoming an OBJECT OF THOUGHT under a new emerging regime. Along these lines, I just barely have a conception of what philology is. It’s not something I studied, though some of my undergraduate teachers were certainly versed in it (I’m thinking particularly of Don Cameron Allen). But I’ve recently come across web conversations about it, have seen an article or two explaining it, and note that last year saw the publication of James Turner, Philology: The Forgotten Origins of the Modern Humanities (Princeton), which won the 2015 Christian Gauss Award and is blurbed from here to Sunday. Until the middle of the 20th century, that’s what literary scholars did. But now it’s slid so deep into the past it has itself become an object of study.

Is this vague? Yes, thinking about thought is treacherously difficult. The point is, though, that there is a long-term historical process going on. And we’re not just slipping from one épistème to another. We’re moving to a new KIND of épistème.

* * * * *

[1] The quote is from a relatively short working paper, Redefining the Coming Singularity – It’s not what you think: https://www.academia.edu/8847096/Redefining_the_Coming_Singularity_It_s_not_what_you_think

Here’s a somewhat fuller statement from that paper:

When the work of developmental psychologist Jean Piaget finally made its way into the American academy in the middle of the last century the developmental question became: Is the difference between children’s thought and adult thought simply a matter of accumulated facts or is it about fundamental conceptual structures? Piaget, of course, argued for the latter. In his view the mind was constructed in “layers” where the structures of higher layers were constructed over and presupposed those of lower layers. It’s not simply that 10-year olds knew more facts than 5-year olds, but that they reasoned about the world in a more sophisticated way. No matter how many specific facts a 5-year old masters, he or she cannot think like a 10-year old because he or she lacks the appropriate logical forms. Similarly, the thought of 5-year olds is more sophisticated than that of 2-year olds and that of 15-year olds is more sophisticated than that of 10-year olds. This is, by now, quite well known and not controversial in broad outline, though Piaget’s specific proposals have been modified in many ways. What’s not so well known is that Piaget extended his ideas to the development of scientific and mathematical ideas in history in the study of genetic epistemology. In his view later ideas developed over earlier ones through a process of reflective abstraction in which the mechanisms of earlier ideas become objects manipulated by newer emerging ideas. In a series of studies published in the 1980s and 1990s the late David Hays and I developed similar ideas about the long-term cultural evolution of ideas (see appendix for abstracts).

Wednesday, November 18, 2015

The Wind Rises: A Note About Failure, Human and Natural

Horikoshi interacts with Gianni Caproni, the Italian aircraft designer, four times in the film. The first, third, and fourth times seem to involve dream states [1]. The second is different. Horikoshi is in Japan doing one thing (putting out fires after the Great Kanto Earthquake) while Caproni is in Italy doing something else (testing a new plane). But they can talk to one another and have a short conversation:

C: “What do you think, Japanese Boy, is the wind still rising?” [In Italy]

H: “Yes, it’s a gale.” [In Japan]

C: “Well then, you must live. Le vent se lève, il faut tenter de vivre.” [In Italy; the French means “The wind is rising, we must try to live.”]

Caproni is filming the first flight of a new plane, one with nine wings and intended to carry 100 passengers across the Atlantic:

00:23:38

That’s Caproni in the dark suit; his cameraman is next to him. The plane fails:

00:24:01

And Caproni grabs the camera, yanks the film out, and tosses it overboard:

00:24:28

It’s when he’s tossing the film overboard that he quotes that line from Paul Valéry, the line that gives the film its title, and the line that Horikoshi and Nahoko Satomi shared when they first met, a bit earlier during the earthquake sequence.

Why does Miyazaki juxtapose these two events, a natural disaster that destroys Tokyo, and a technical failure that dashes one man’s hopes?

While you’re thinking about that, you might want to watch this clip of that very plane, the Ca.60 Noviplano:

That clip’s been on YouTube since 2007, so Miyazaki could have seen it there, if not from some other source. And you just know he’s familiar with that clip, don’t you?

I found out about the clip in a very interesting post by StephenM, The Kami-Electrified World: Hayao Miyazaki and The Wind Rises, which discusses the film in the context of Miyazaki’s general body of work, including work he did before Ghibli. You might also want to check out another post, Just a little more of The Wind Rises, with frame-grab comparisons between this film and other Miyazaki films.

* * * * *

[1] I discuss the first, second, and third times in this post at 3 Quarks Daily, Why Miyazaki’s The Wind Rises is not Morally Repugnant, URL: http://www.3quarksdaily.com/3quarksdaily/2015/11/is-miyazakis-the-wind-rises-morally-repugnant.html

I also discuss the first here, From Concept to First Flight: The A5M Fighter in Miyazaki’s The Wind Rises, URL: http://new-savanna.blogspot.com/2015/11/from-concept-to-first-flight-a5m.html

Disney Landmark: Steamboat Willie debuted on this day back in 1928

On this day back in 1928 Walt Disney released Steamboat Willie. It was the first Disney cartoon with synchronized sound:

Disney understood from early on that synchronized sound was the future of film. Steamboat Willie was the first cartoon to feature a fully post-produced soundtrack which distinguished it from earlier sound cartoons such as Inkwell Studios' Song Car-Tunes (1924–1927) and Van Beuren Studios' Dinner Time (1928). Steamboat Willie would become the most popular cartoon of its day.

It put Disney and his studio on fast-forward. Here's a link to Stephen Jay Gould's "A Biological Homage to Mickey Mouse", in which he discusses Mickey's evolution in biological terms.

H/t Jerry Coyne.

Self-awareness, self-regulation, and self-transcendence

REVIEW ARTICLE

Front. Hum. Neurosci., 25 October 2012 | http://dx.doi.org/10.3389/fnhum.2012.00296

Self-awareness, self-regulation, and self-transcendence (S-ART): a framework for understanding the neurobiological mechanisms of mindfulness

- Functional Neuroimaging Laboratory, Department of Psychiatry, Brigham and Women's Hospital, Boston, MA, USA

Mindfulness, as a state, trait, process, type of meditation, and intervention, has proven to be beneficial across a diverse group of psychological disorders as well as for general stress reduction. Yet, there remains a lack of clarity in the operationalization of this construct, and underlying mechanisms. Here, we provide an integrative theoretical framework and systems-based neurobiological model that explains the mechanisms by which mindfulness reduces biases related to self-processing and creates a sustainable healthy mind. Mindfulness is described through systematic mental training that develops meta-awareness (self-awareness), an ability to effectively modulate one's behavior (self-regulation), and a positive relationship between self and other that transcends self-focused needs and increases prosocial characteristics (self-transcendence). This framework of self-awareness, -regulation, and -transcendence (S-ART) illustrates a method for becoming aware of the conditions that cause (and remove) distortions or biases. The development of S-ART through meditation is proposed to modulate self-specifying and narrative self-networks through an integrative fronto-parietal control network. Relevant perceptual, cognitive, emotional, and behavioral neuropsychological processes are highlighted as supporting mechanisms for S-ART, including intention and motivation, attention regulation, emotion regulation, extinction and reconsolidation, prosociality, non-attachment, and decentering. The S-ART framework and neurobiological model is based on our growing understanding of the mechanisms for neurocognition, empirical literature, and through dismantling the specific meditation practices thought to cultivate mindfulness. The proposed framework will inform future research in the contemplative sciences and target specific areas for development in the treatment of psychological disorders.
