Monday, October 31, 2016

Cultural Logic or Transcendental Interpretation? – Golumbia on Chomsky’s Computationalism

New working paper. Title above, download sites, abstract, TOC, and Introduction below.

Academia.edu: https://www.academia.edu/29570825/Cultural_Logic_or_Transcendental_Interpretation_Golumbia_on_Chomskys_Computationalism

Abstract: In The Cultural Logic of Computation David Golumbia offers a critique of Chomsky and of computational linguistics that is rendered moot by his poor understanding of those ideas. He fails to understand and appreciate the distinction between the abstract theory of computation and real computation, such as that involved in machine translation; he confuses the Chomsky hierarchy of language types with hierarchical social organization; he misperceives the conceptual value of computation as a way of thinking about the mind; he ignores the standard account of the defunding of machine translation in the 1960s (it wasn’t working) in favor of obscure political speculations; he offers casual remarks about the demographics of linguistics without any evidence, thus betraying his ideological preconceptions; and he seems to hold a view of analog phenomena that is at odds with the analog/digital distinction as it is used in linguistics, computation, and the cognitive sciences.

CONTENTS

Introduction: How can you critique what you don’t understand?

Computing, Abstract and Real

Computationalism

Why Chomsky’s Ideas Were so Popular

Hierarchy in Theory and Society

The Demise of MT: Blow-Back on Chomsky?

White Males, Past Presidents of the ACL, and a Specious Binary

Is David Ferrucci a Neoliberal Computationalist?

Conclusion: Cultural Logic as Transcendental Interpretation?

Appendix 1: Pauline Jacobson on Linguistics and Math

Appendix 2: Analog vs. Digital: How general is the contrast?

Introduction: How can you critique what you don’t understand?

As many of you know, David Golumbia is one of three authors of an article in the Los Angeles Review of Books that offered a critique of the digital humanities (DH): Neoliberal Tools (and Archives): A Political History of Digital Humanities. The article sparked such vigorous debate within the DH community that I decided to investigate Golumbia’s thinking. I’ve known about him for some time but had not read his best-known book:

David Golumbia. The Cultural Logic of Computation. Harvard University Press, 2009.

I’ve still not read it in full. But I’ve read enough to arrive at a conclusion: Golumbia’s grasp of computation is shaky.

That conclusion was based on a careful reading of Golumbia’s second chapter, “Chomsky’s Computationalism,” with forays into the first, “The Cultural Functions of Computation,” and a look at the fourth, “Computationalist Linguistics”. I published my remarks to the web on July 15, 2010 under the title "Golumbia Fails to Understand Chomsky, Computation, and Computational Linguistics". Since then I’ve made other posts about Golumbia and participated in discussions about his conception of computing at Language Log, a group blog devoted to linguistics. I’ve taken those discussions and added them to my original post to produce this working paper.

* * * * *

Let’s begin where I started in July, with a question: Why Chomsky?

Chomsky is one of the seminal thinkers in the human sciences in the last half of the twentieth century. The abstract theory of computation is at the center of his work. His early work played a major role in bringing computational thinking to the attention of linguists, psychologists, and philosophers and thus helped catalyze the so-called cognitive revolution. At the same time Chomsky has been one of our most visible political essayists. This combination makes him central to Golumbia’s thinking, which is concerned with the relationship between the personal and the political as mediated by ideology. Unfortunately his understanding of Chomsky’s thinking is so tenuous that his critique of Chomsky tells us more about him than about Chomsky or computation.

First I consider the difference between abstract computing theory and real computing, a distinction to which Golumbia gives scant attention. Then I introduce his concept of computationalist ideology and criticize his curious assertion that computational linguistics “is almost indistinguishable from Chomskyan generativism” (p. 47). Next comes an account of the popularity of Chomsky’s ideas that takes issue with Golumbia’s rather tortured account, which emphasizes their appeal to a neoliberal ideology that is at variance with Chomsky’s professed politics. From there I move to his treatment of the Chomsky Hierarchy, pointing out that it is a different kind of beast from hierarchical power relations in society. The next two sections examine remarks that are offered almost as casual asides. The first remark is a speculation about the demise of funding for machine translation in the late 1960s. Golumbia gets it wrong, though he lists a book in his bibliography that gets it right. Then I offer some corrective observations in response to his off-hand speculation about the ideological demographics of linguistics.

Sunday, October 30, 2016

Demographic statistics, punch card machines, historiography

Briefly, a group of 19th century American historians, journalists, and census chiefs used statistics, historical atlases, and the machinery of the census bureau to publicly argue for the disintegration of the U.S. Western Frontier in the late 19th century. These moves were, in part, made to consolidate power in the American West and wrestle control from the native populations who still lived there. They accomplished this, in part, by publishing popular atlases showing that the western frontier was so fractured that it was difficult to maintain and defend. The argument, it turns out, was pretty compelling. Part of what drove the statistical power and scientific legitimacy of these arguments was the new method, in 1890, of entering census data on punched cards and processing them in tabulating machines. The mechanism itself was wildly successful, and the inventor’s company wound up merging with a few others to become IBM. As was true of punched-card humanities projects through the time of Father Roberto Busa, this work was largely driven by women. It’s worth pausing to remember that the history of punch card computing is also a history of the consolidation of government power. Seeing like a computer was, for decades, seeing like a state. And how we see influences what we see, what we care about, how we think.

It is my crude impression that, while WWII and the use of computers for military purposes (code breaking, calculating artillery tables, simulating atomic explosions) figure prominently in a widespread narrative about the origins of computing, punch card tabulation is not so widely recognized, and yet it was earlier by half a century and very important.

H/t Alan Liu:

"Ours is a digital history steeped in the values of the cultural turn..." https://t.co/QrV9nNU0LC (Alan Liu, @alanyliu, October 30, 2016)

Fiction as simulation

In a paper published in Trends in Cognitive Sciences, the psychologist and novelist Keith Oatley lays out his stall, arguing that fiction, and especially literary fiction, is a beneficial force in our lives....

Oatley bases his claim on various experimental evidence of his own and others, most of which has been conducted in the last 20 years. Among the reported effects of reading fiction (and in some cases other fiction with involving narratives, such as films and even videogames) are more empathetic responses – as self-reported by the participant, or occasionally demonstrated by increased helping behaviour afterwards – reductions in sexual and racist stereotyping, and improvements in figuring out the mental states of others. Another interesting set of findings come from fMRI measurements of brain activation: we know that people have a tendency to engage in a kind of suppressed imitation of the actions of others they are around. The same thing happens when reading about people’s actions: if a character in a story is said to pull a light cord, for example, the reader’s brain activates in areas associated with the initiation of grasping behaviour. Many of these techniques involve testing people just after they have read something.

Saturday, October 29, 2016

Reading, the "official" word

The MLA (Modern Language Association) is putting together a site, Digital Pedagogy in the Humanities: Concepts, Models, and Experiment, "a curated collection of reusable and remixable pedagogical artifacts for humanities scholars in development by the Modern Language Association." It consists of a bunch of keywords, plus commentary and supporting materials.

I'm interested in the keyword, "reading," w/ materials curated by Rachel Sagner Buurma. First paragraph:

In the beginning, we learn to read; after we are literate, we read to learn. In this received wisdom of early childhood education, reading is only temporarily difficult, material, intractable; afterwards, it recedes into the background, becoming the transparent skill through which we access worlds of knowledge. But we are in fact always learning to read and always learning about reading as we encounter new languages, genres, and forms, and mediums.

Moving along, we get to machine reading:

Machine reading has a longer history than we sometimes assume. Work on the nineteenth- and twentieth-century history of talking books and other reading machines shows how reading technologies developed to convert text into sound for blind readers questioned assumptions about the nature of reading while also contributing to the development of machine reading technologies like optical character recognition (OCR). In the realm of literary interpretation as “reading,” of course, Stephen Ramsay has famously emphasized the continuities between human and machine. For Ramsay, literary criticism already contains “elements of the algorithmic.”

And there you have it, the standard professional conflation of plain old reading and interpretive reading. Here's what she quotes from Ramsay (his book Reading Machines: Towards an Algorithmic Criticism, 16):

Any reading of a text that is not a recapitulation of that text relies on a heuristic of radical transformation. The critic who endeavors to put forth a “reading” puts forth not the text, but a new text in which the data has been paraphrased, elaborated, selected, truncated, and transduced. This basic property of critical methodology is evident not only in the act of “close reading” but also in the more ambitious project of thematic exegesis.

Yep, that's the catechism.

Friday, October 28, 2016

How Do We Understand Literary Criticism?

This is a follow-up to Monday’s post, Once More, Why is Literary Form All But Invisible to Literary Criticism? (With a little help from Foucault). For, despite its name, the post really was about how we understand literary criticism. How could that be? you ask. Simple, the mechanism – there’s that word – is such that form is irrelevant or distracting. Certain schools of criticism invoked form as an isolating device, but they weren’t actually interested in describing it.

I note that I’m just making this up. I like it, but it’s very crude. I want to think about it.

Schemas, Assimilation and Accommodation

So, how is it that we understand anything? Piaget talks of intellectual growth as an interplay of assimilation and accommodation. He imagines the mind as being populated with schemas, so:

A schema refers to both mental and physical actions in understanding and knowing. In cognitive development theory, a schema includes both a category of knowledge and the process of obtaining that knowledge. The process by which new information is taken into the previously existing schema is known as assimilation. Alteration of existing schemas or ideas as a result of new knowledge is known as accommodation. Therefore the main difference between assimilation and accommodation is that in assimilation, the new idea fits in with the already existing ideas while, in accommodation, the new idea changes the already existing ideas.

I would further add that he thought of play as involving a priority of assimilation over accommodation. Let us think of literature as a kind of play.

We may then ask of literature itself, what is the body of schemas to which we assimilate literary texts? Whatever the answer to that might be, I assert that we assimilate literary criticism to the same body of schemas. And that is why it has been so easy for literary critics to elide the difference between ordinary reading, which every literate person does, and interpretive critical reading, which is confined to professional literary critics and, of course, their students – though most of those students (think of the undergraduates) do not go on to become professionals.

I will further assert that in order for the detailed description of the form of individual works, their morphology, to become a routine and foundational practice, critics must learn to assimilate their understanding of texts to a different base of schemas. In fact, such description is the way to establish that different base of schemas.

Schema Bases, Primary and Secondary (Round Earth)

By the primary schema base I mean first of all the body of schemas that begins developing at birth. This is the schema base in which, among other things, we will ‘lay down’ our personal history. This schema base is grounded in direct experience, though not necessarily confined to it.

For the purposes of this post I will simply assert that we understand literary texts by assimilating them to our primary schema base. We understand the actions of fictional beings by assimilating them to actions we have seen and experienced ourselves. Of course, when we encounter imaginary acts that are unlike those we’ve seen and done ourselves, we may well accommodate ourselves to them if they are, shall we say, within range. But assimilation prevails.

Given that we have dreams and that we learn things through talking with others, we must accommodate these within the prime base. Dreams we experience directly, of course, but they are in a realm different from day-to-day life. We experience conversation directly, as well, but we do not necessarily have direct experience of the contents of conversation. We may, we may not; it depends on who we’re talking with and what we’re talking about.

I want to note that, but I want to set it aside for the moment. I want to develop the notion of a secondary base. And the best way to do that is through an example. How is it that we know the earth is round? That knowledge, I assert, rests on a secondary base.

Thursday, October 27, 2016

Human sounds convey emotions clearer and faster than words

It takes just one-tenth of a second for our brains to begin to recognize emotions conveyed by vocalizations, according to researchers from McGill. It doesn’t matter whether the non-verbal sounds are growls of anger, the laughter of happiness or cries of sadness. More importantly, the researchers have also discovered that we pay more attention when an emotion (such as happiness, sadness or anger) is expressed through vocalizations than we do when the same emotion is expressed in speech. The researchers believe that the speed with which the brain ‘tags’ these vocalizations and the preference given to them compared to language, is due to the potentially crucial role that decoding vocal sounds has played in human survival. “The identification of emotional vocalizations depends on systems in the brain that are older in evolutionary terms,” says Marc Pell, Director of McGill’s School of Communication Sciences and Disorders and the lead author on the study that was recently published in Biological Psychology. “Understanding emotions expressed in spoken language, on the other hand, involves more recent brain systems that have evolved as human language developed.”

The primary research report:

M.D. Pell, K. Rothermich, P. Liu, S. Paulmann, S. Sethi, S. Rigoulot, Preferential decoding of emotion from human non-linguistic vocalizations versus speech prosody, Biological Psychology, Volume 111, October 2015, Pages 14–25.

Abstract: This study used event-related brain potentials (ERPs) to compare the time course of emotion processing from non-linguistic vocalizations versus speech prosody, to test whether vocalizations are treated preferentially by the neurocognitive system. Participants passively listened to vocalizations or pseudo-utterances conveying anger, sadness, or happiness as the EEG was recorded. Simultaneous effects of vocal expression type and emotion were analyzed for three ERP components (N100, P200, late positive component). Emotional vocalizations and speech were differentiated very early (N100) and vocalizations elicited stronger, earlier, and more differentiated P200 responses than speech. At later stages (450–700 ms), anger vocalizations evoked a stronger late positivity (LPC) than other vocal expressions, which was similar but delayed for angry speech. Individuals with high trait anxiety exhibited early, heightened sensitivity to vocal emotions (particularly vocalizations). These data provide new neurophysiological evidence that vocalizations, as evolutionarily primitive signals, are accorded precedence over speech-embedded emotions in the human voice.

Wednesday, October 26, 2016

Tuesday, October 25, 2016

Monday, October 24, 2016

Once More, Why is Literary Form All But Invisible to Literary Criticism? (With a little help from Foucault)

Yes, because literary criticism is oriented toward the interpretation of meaning, and form cannot, in general, be “cashed out” in terms of meaning. But why is literary criticism oriented toward this ‘meaning’ in the first place? That’s what we need to know.

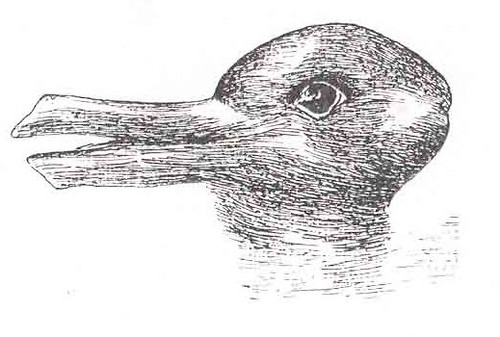

And the answer is that “meaning” is couched in terms more or less commensurate with those we (that is, literary critics) use to understand life itself (how we live). Such a simple thing, you might say. Well, duh! It’s the old gestalt switch:

All along I’d been looking at it as a duck, but now I can see it as a rabbit. Same stuff, different organization.

The reset came when I decided to examine some passages from Foucault’s The Order of Things that I’d long ago noted, but hadn’t read in years. The passages are in the chapter “Classifying,” which concerns the emergence of natural history in the Early Modern Era (aka Renaissance); a couple of centuries later, of course, natural history evolves into biology.

You see why this is relevant, don’t you? In talking about the need to describe literary form I make a comparison to biology. Biology is built on the meticulous description of life forms and their ways of life. Similarly, my argument goes, we need meticulous descriptions of literary texts. So, why not take a look at how morphological description emerged in intellectual history? That’s what Foucault is doing in this chapter.

This is the first passage I spotted when I opened the book – well, I didn’t exactly open a codex, rather I scanned through a PDF (The Order of Things, pp. 142-43):

The documents of this new history are not other words, texts or records, but unencumbered spaces in which things are juxtaposed: herbariums, collections, gardens; the locus of this history is a non-temporal rectangle in which, stripped of all commentary, of all enveloping language, creatures present themselves one beside another, their surfaces visible, grouped according to their common features, and thus already virtually analysed, and bearers of nothing but their own individual names. It is often said that the establishment of botanical gardens and zoological collections expressed a new curiosity about exotic plants and animals. In fact, these had already claimed men’s interest for a long while. What had changed was the space in which it was possible to see them and from which it was possible to describe them. To the Renaissance, the strangeness of animals was a spectacle: it was featured in fairs, in tournaments, in fictitious or real combats, in reconstitutions of legends in which the bestiary displayed its ageless fables. The natural history room and the garden, as created in the Classical period, replace the circular procession of the ‘show’ with the arrangement of things in a ‘table’.

That’s it, said I to myself, context! The context has to change. It is only when plants and animals are removed from on-going life and considered only in relation to one another that their forms, and their differences, can become salient. The same is true of texts. We must remove them from life so that they can come into their own as objects for examination. Meaning, after all, is always meaning for life.

That’s why critics talk about texts in the same terms they use to talk about life. That’s why Theory grounds itself in general philosophical and quasi-philosophical texts, texts created to understand life and the world in general, but not literature in particular. That’s why critics talk about fictional characters as though they were just like real live people – when they’re not; they’re puppets.

Once more into the breach: Interpretation IS NOT reading (contra Hartman and Fish)

Christopher Ricks, In theory, London Review of Books, Vol. 3 No. 7 · 16 April 1981. Once he gets going Ricks starts riding one of my favorite hobbyhorses, that interpretation (aka reading) is NOT ordinary reading. And he rides it against one of my favorite antagonists, Geoffrey Hartman:

The betraying moment comes when Hartman juxtaposes real reading with what passes for it in the real world: ‘We have talked for a long time, and unself-consciously, of the work of art; we may come to talk as naturally of the work of reading ... I would suggest that as in work generally there is something provocative of or even against nature in reading: something which develops but also spoils our (more idle) enjoyment of literature. Hence the tone of weariness and the famous acedia that characterise the professional reader even when he has the force to recycle his readings as writing.’ How anybody can enjoy literature, even idly, other than by reading it: this remains unattended-to (as does the sudden use of ‘unself-consciously’ in an approbatory way, happily equivalent to ‘naturally’), because what matters is the supersession of readers by professional readers. There is, naturally, nothing wrong with the admission that a critic is a professional reader: but there is something very wrong with saying that non-professional reading isn’t really reading at all, and is the ‘more idle’ ‘enjoyment of literature’. It is this double impulse – to occlude non-professional reading, and, complementarily, to occlude the fact that writing is something done by writers prior to critics – which animates not literary theory in general but the reigning usurpers.

And then there's the "text" and the irrepressible Mr. Fish:

The attack on not just the authority of authors but their very existence is inseparable from the attack on intention in literature. Fish is very persuasive on the inescapability of interpretation, and he shows that if you seem to meet an utterance which doesn’t have to be interpreted, that is because you have interpreted it already. But it is a fundamental objection to the extreme of hermeneuticism today that, in its adept slighting of authorial intention, it leaves itself no way of establishing the text of a text. To put it gracelessly like this is to bring out that some of the convenience of the word ‘text’ for the current theorists is its preemptive strike against the word ‘text’ as involving the establishing of the words of a text and their emending if need be. For on Fish’s principles, need could never be. There are no facts independent of interpretation: moreover every interpretative strategy can make – cannot but make – perfect sense, according to its lights, of every detail of every text. To put it at its simplest, Fish’s theory has no way of dealing with misprints.

Friday, October 21, 2016

Neural basis of auditory entrainment

In PsyPost:

The auditory brainstem responds more consistently to regular sound sequences than irregular sound sequences, according to a recent study published this September in Neuroscience. The study adds to our understanding of how the brain processes regular sound patterns. [...] The study, by Alexandre Lehmann (University of Montreal and McGill University), Diana Jimena Arias (University of Quebec at Montreal) and Marc Schönwiesner (University of Montreal), traced entrainment-related processes along the auditory pathway. They simultaneously recorded the EEG (electrical activity in the brain) responses of 15 normal-hearing participants in their brainstem and cortex whilst regular and irregular sequences of a sound were played. There were then random omissions during the sequences. The results revealed that the auditory cortex responded strongly to omissions whereas the auditory brainstem did not. However, auditory brainstem responses in the regular sound sequence were more consistent across trials than in the irregular sequence. They also found stronger adaptation in the cortex and brainstem of responses to stimuli that preceded omissions than those that followed omissions.

This makes sense. I'm thinking in particular of the observations that William Condon made about neonatal entrainment. That implied brain stem mediation as the auditory cortex is not mature at birth.

Here's the original article:

Lehmann A, Jimena Arias D, Schönwiesner M. Tracing the Neural Basis of Auditory Entrainment. Neuroscience. PMID 27667358 DOI: 10.1016/j.neuroscience.2016.09.011

Abstract: Neurons in the auditory cortex synchronize their responses to temporal regularities in sound input. This coupling or "entrainment" is thought to facilitate beat extraction and rhythm perception in temporally structured sounds, such as music. As a consequence of such entrainment, the auditory cortex responds to an omitted (silent) sound in a regular sequence. Although previous studies suggest that the auditory brainstem frequency-following response (FFR) exhibits some of the beat-related effects found in the cortex, it is unknown whether omissions of sounds evoke a brainstem response. We simultaneously recorded cortical and brainstem responses to isochronous and irregular sequences of consonant-vowel syllable /da/ that contained sporadic omissions. The auditory cortex responded strongly to omissions, but we found no evidence of evoked responses to omitted stimuli from the auditory brainstem. However, auditory brainstem responses in the isochronous sound sequence were more consistent across trials than in the irregular sequence. These results indicate that the auditory brainstem faithfully encodes short-term acoustic properties of a stimulus and is sensitive to sequence regularity, but does not entrain to isochronous sequences sufficiently to generate overt omission responses, even for sequences that evoke such responses in the cortex. These findings add to our understanding of the processing of sound regularities, which is an important aspect of human cognitive abilities like rhythm, music and speech perception.

KEYWORDS: auditory cortex; electro-encephalography; human brainstem; rhythmic entrainment; stimulus omissions; temporal regularity

Laughter, real and fake

Kate Murphy, The Science of the Fake Laugh, The NYTimes:

Laughter at its purest and most spontaneous is affiliative and bonding. To our forebears it meant, “We’re not going to kill each other! What a relief!” But as we’ve developed as humans so has our repertoire of laughter, unleashed to achieve ends quite apart from its original function of telling friend from foe. Some of it is social lubrication — the warm chuckles we give one another to be amiable and polite. Darker manifestations include dismissive laughter, which makes light of something someone said sincerely, and derisive laughter, which shames. The thing is, we still have the instinct to laugh when we hear laughter, no matter the circumstances or intent. Witness the contagion of mirthless, forced laughter at cocktail parties. Or the uncomfortable laughter that follows a mean-spirited or off-color joke. Sexual harassers can elicit nervous laughter from victims, which can later be used against them.

Confused?

Researchers at the University of California, Los Angeles, found that people mistake fake for genuine laughter a third of the time, which is probably a low estimate as the fake laughter that subjects heard in the experiment was not produced in natural social situations. While laughing on command is rarely convincing (think of sitcom laugh tracks), “your average person is pretty good at fake laughing in certain circumstances,” said the study’s lead author, Greg Bryant, a cognitive psychologist who studies laughter vocalization and interpretation. “It’s like when people say, ‘I’m not a good liar,’ but everyone is a good liar if they have to be.”

The real deal:

Genuine laughter, real eruptions of joy, are generated by different neural pathways and musculature than so-called volitional laughter. Contrast the sound of someone’s helpless belly laugh in response to something truly amusing to a more throaty “ah-ha-ha,” that might signify agreement or a nasal “eh-heh-heh” when someone might be feeling uneasy. “A fake laugh is produced more from areas used for speech so it has speech sounds in it,” Dr. Bryant said. There is also a big difference in how you feel after a genuine laugh. It produces a mild euphoria thanks to endorphins released into your system, which research indicates increases our tolerance to pain. Feigned laughter doesn’t have the same feel-good result. In fact, you probably feel sort of drained from having to pretend. Recall your worst blind date.

Thursday, October 20, 2016

Wednesday, October 19, 2016

Yikes! This is post number 4072

And I hadn't even noticed I'd passed 4000.

2500 is the last milestone I noted, and that was back on July 14, 2014, when I wrote a brief analysis of what I've been posting. Won't do that now. But I don't think my overall range of subjects has changed much since then.

Life as Jamie Knows It @3QD, with some remarks about intelligence

I was half-considering tacking “aka writing about a retard” to the end of my title, right after “3QD”, but the word “retard” is just too ugly and there’s no way of indicating that I’m not serious about it. And yet in a way I am, almost.

Jamie, as you likely know by now, is Jamie Bérubé, who has Down syndrome. His father, Michael, has just written a book about him, Life as Jamie Knows It, and I’ve reviewed the book for 3 Quarks Daily. Writing this review is the first time I have had to actively think about mental disability.

The word is “actively.” Sure, back in secondary school there were “special ed.” classes, and the students in them had educational difficulties. But those classes were somewhere “over there”, so those kids weren’t really a part of my daily life. Beyond that, I am generally aware of the Special Olympics and this, that, and the other.

Moreover I was already somewhat familiar with Jamie’s story. Michael ran a blog between 2004 and 2010, which I started following late in 2005. Jamie was a frequent topic of conversation. There are stories in Life as Jamie Knows It that I first read on Michael’s blog.

Still, all that is just background. To review Michael’s book I was going to have to think seriously about Jamie, about Down syndrome, and about disability more generally. And then I would have to write words which would appear in public and which I would therefore have to own, as they say. When I asked Michael to have a copy sent to me I didn’t anticipate any problems at all. I knew he was an intelligent man, an incisive thinker, a good writer, and a bit of a wiseguy. I was looking forward to reading the book and writing about it.

But, I hadn’t anticipated Jamie’s art. I noticed that Michael had posted a lot of it online and I started looking through it. Pretty interesting, thought I to myself, why don’t I write a series of posts here at New Savanna as a lead-up to the review over there at 3QD? And that’s what I did. The penultimate (aka next to the last) post was about work that Michael termed “geometrics” and I had decided to call “biomorphics.” I concluded that those biomorphic images were interesting indeed. And so I found myself writing:

Does this make Jamie a topologist? No, not really. But it makes it clear that he’s an intelligent human being actively exploring and making sense of the world.

That word “intelligent” brought me up short once I’d typed it. Did I really want to use that word? I asked myself. Yes, I replied, without thinking about it.

And THEN I started thinking.

Kids these days...need a place to play

When I was a kid I lived in a suburban neighborhood that was on the border of a semi-rural area. A neighbor up the road had a small wheat field. If I walked a quarter of a mile in one direction I came to a small wooded area, but large enough so we could go in there and get lost, build hide-outs and stuff. A mile in another direction there was a much larger and more mysterious wooded area, right next to the mink farm. And I was allowed to roam the neighborhood freely. We all were.

Yes, there were rules. I had to be home for dinner, get my homework done, and get into bed at the proper time. And there were restrictions as to where I could go, restrictions that got loosened as I grew older. And that was true for my friends as well.

I keep reading, though, that that's over, that kids these days are scheduled and regulated in a way I find, well, borderline pathological. Anyhow, the NYTimes addresses this in an interesting story:

The Anti-Helicopter Parent’s Plea: Let Kids Play!

A Silicon Valley dad decided to test his theories about parenting by turning his yard into a playground where children can take physical risks without supervision. Not all of his neighbors were thrilled.

By MELANIE THERNSTROM

The whole thing's worth reading. Here's one representative passage:

As part of Mike’s quest for a playborhood, he began doing research and visiting neighborhoods in different parts of the country that he thought might fit his vision. The first place he visited was N Street in Davis, Calif., a cluster of around 20 houses that share land and hold regular dinners together. Children wander around freely, crossing backyards and playing in the collective spaces: Ping-Pong table, pizza oven and community garden. Mike told me the story of Lucy, a toddler adopted from China by a single mom who lived on N Street. When Lucy was 3, her mother died of cancer. But before she died, her mother gave every house a refrigerator magnet with a picture of Lucy on it. While the founders of N Street formally adopted Lucy, the entire community supported her. Mike pointed out that the childhood Lucy was having on N Street may be akin to one she might have enjoyed in a village in rural China, but it was extraordinary in suburban America. Lucy could wander around fearlessly, knowing she had 19 other houses where she could walk right in and expect a snack.

Mike spent some time in the Lyman Place neighborhood in the Bronx, where grandmothers and other residents organized to watch the streets — so dangerous that children were afraid to play outside — and block them off in the summer to create a neighborhood camp, staffed by local teenagers and volunteers. Mike also found his way to Share-It Square in Southeast Portland, Ore., a random intersection that became a community when a local architect mobilized neighbors to convert a condemned house on one corner into a “Kids’ Klubhouse”: a funky open-air structure that features a couch, a message board, a book-exchange box, a solar-powered tea station and toys.

Tuesday, October 18, 2016

Universality of facial expressions is being questioned

For more than a century, scientists have wondered whether all humans experience the same basic range of emotions—and if they do, whether they express them in the same way. In the 1870s, it was the central question Charles Darwin explored in The Expression of the Emotions in Man and Animals. By the 1960s, emeritus psychologist Paul Ekman, then at the University of California (UC) in San Francisco, had come up with an accepted methodology to explore this question. He showed pictures of Westerners with different facial expressions to people living in isolated cultures, including in Papua New Guinea, and then asked them what emotion was being conveyed. Ekman’s early experiments appeared conclusive. From anger to happiness to sadness to surprise, facial expressions seemed to be universally understood around the world, a biologically innate response to emotion.

That conclusion went virtually unchallenged for 50 years, and it still features prominently in many psychology and anthropology textbooks, says James Russell, a psychologist at Boston College and corresponding author of the recent study. But over the last few decades, scientists have begun questioning the methodologies and assumptions of the earlier studies. [...]

Based on his research, Russell champions an idea he calls “minimal universality.” In it, the finite number of ways that facial muscles can move creates a basic template of expressions that are then filtered through culture to gain meaning. If this is indeed the case, such cultural diversity in facial expressions will prove challenging to emerging technologies that aspire to decode and react to human emotion, he says, such as emotion recognition software being designed to recognize when people are lying or plotting violence.

“This is novel work and an interesting challenge to a tenet of the so-called universality thesis,” wrote Disa Sauter, a psychologist at the University of Amsterdam, in an email. She adds that she’d like to see the research replicated with adult participants, as well as with experiments that ask people to produce a threatening or angry face, not just interpret photos of expressions. “It will be crucial to test whether this pattern of ‘fear’ expressions being associated with anger/threat is found in the production of facial expressions, since the universality thesis is primarily focused on production rather than perception.”

Ethical Criticism and “Darwinian” Literary Study

Angus Fletcher, Another Literary Darwinism, Critical Inquiry, Vol. 40, No. 2, Winter 2014, 450-469.

It opens (450):

There are, Jonathan Kramnick has remarked, just two problems with literary Darwinism: it isn’t literary and it isn’t Darwinism. By allying itself with Evolutionary Psychology, it has not only eliminated most of the nuance from contemporary neo-Darwinism but reduced all literature to stories, taking so little account of literary form that it equates “Pleistocene campfire” tales with Mrs. Dalloway (“ALD,” p. 327).

Most of the article is intellectual history: Julian Huxley, H. G. Wells and the “New Biographers” in the early 20th century. From my point of view it’s all preliminary throat-clearing until very near the end.

What has caught Fletcher’s attention is the interest in behavior (p. 467):

There are no religious commandments or categorical imperatives or natural rights or anything else to give a spine to human life. This state of absence, as the Victorians discovered (and more recent thinkers such as Thomas Nagel have lamented), is unlivable. Because we are participants in a physical world, we must do something, and rather than surrendering us to the blind impulses of nature or the idols of moral idealism Huxley’s literary Darwinism reminds us of a neglected source of practical ethics: behavior. Over the past fifty years, the traditional place of behavior in ethics has been diminished by new trends in both the biological sciences and literary criticism.

Continuing on (p. 468):

To begin with, behavior is purely physical, and so it survives Darwinism’s metaphysical purge untouched. Moreover, as the New Biography demonstrates, the behaviors (or to use a more literary term, the practices) encouraged by literature can foster a sense of purpose, meaning, and hope that Darwin’s theory cannot. Such practices are not absolute or prescriptive — the more we explore the diversity of our literary traditions, the more we recover a library of different possibilities — but they do allow us to transition from a theoretical existentialism into a practical experimentalism. Where raw Darwinism carries us to a state of general tolerance, literature can help us seek the practices that allow us to thrive in our own particular fashion.

And now we get the payoff, ethical criticism (468):

If Darwinism leads toward a negative approach to ethics, and if literature’s role in this ethics is behavioral, then a major focus of literary Darwinism will be to identify literary forms that increase our ethical range by inhibiting intolerant behaviors. Many such behaviors originate in what seem to be permanent features of our brains: our emotional egoism, for example, or our diminished empathy for people of a different phenotype. Nevertheless, these behaviors can be reined in by other areas of our cortex, and if literature could uniquely facilitate such reining in, then it could be claimed as a Darwinian remedy for some of the antipluralist outcomes of natural selection.

And (469):

Where existing cognitive studies of literature have suggested that literature can exploit or improve our existing mental faculties, this behavioral approach to literary Darwinism thus opens the possibility that literary form might liberate us from certain aspects of our evolved nature. That is, instead of being a biological adaptation, literature could help us adapt our biology. This possibility is, of course, speculative. To test it would require intensive collaboration between literary scholars and biologists, and, as Kramnick points out, recent literary Darwinists have done little to foster the mutual respect necessary for such cooperation to occur. Yet here again, Huxley can offer us hope. Rather than displaying a “literary . . . resistance to biology,” his version of literary Darwinism clears away Lamarckism, vitalism, and other forms of pseudoscience. And rather than reducing literature to Pleistocene stories, it shows that works such as Eminent Victorians can encourage original ways to respond to our natural condition. Huxley, in short, makes evolution more Darwinian and life more literary, so, unlike the literary Darwinism that inspires Kramnick’s critique, Huxley’s version does not imply a zero-sum contest between aesthetic and biological value. Instead, it does for Darwinism and literature what it did for the warring subfields of evolutionary biology in the 1940s. Revealing them as partners, it urges them to embrace the comic opportunity of life.

Just what this means in practical detail is not at all obvious to me, nor is it to Fletcher. He seems to have some idea that, yes, evolution has bequeathed us a human nature, but we’re not necessarily stuck with it.

I’m with him. My preferred metaphor for thinking about this is that of board games, such as checkers, chess, or Go. Biology provides the board, the game pieces, and the basic rules of the game. But to play a competitive game you need to know much more than those rules. The rules tell you whether or not a move is legal, but they don’t tell you whether or not a move is a good one. That’s a higher kind of knowledge. Call it culture. Culture is the tactics and strategy of the game.

Literature, among other cultural practices, is a compendium of tactical and strategic practices. But a psychology that is going to tell us how those practices are constituted out of, constructed over, the raw stuff of biology will have to be more robust than any psychology currently being employed either by the Darwinians or the cognitivists. It will need the constructive capacities inherent in computational approaches, a matter which I’ve discussed often enough, see e.g. my working paper On the Poverty of Cognitive Criticism and the Importance of Computation and Form, or these posts.

Monday, October 17, 2016

High tech partnership to ease public anxiety about computers and robots taking all the jobs

From the NYTimes, Sept 28:

The Partnership on AI unites Amazon, Facebook, Google, IBM and Microsoft in an effort to ease public fears of machines that are learning to think for themselves and perhaps ease corporate anxiety over the prospect of government regulation of this new technology.

The organization has been created at a time of significant public debate about artificial intelligence technologies that are built into a variety of robots and other intelligent systems, including self-driving cars and workplace automation. [...]

The group released eight tenets that are evocative of Isaac Asimov’s original “Three Laws of Robotics,” which appeared in a science fiction story in 1942. The new principles include high-level ideals such as, “We will seek to ensure that A.I. technologies benefit and empower as many people as possible.”

Michelle Obama and the Stealth Transition: Gardens Across America

Just a reminder about the out-going First Lady. Originally posted on 8.31.12.

The way I see it, the smart money is on First Lady Michelle Obama, not to do a Hillary and go for the Big One in a later Presidential Ritual Contest (aka election). No, to do an Eleanor (Roosevelt) and have a major effect on the nation while her husband does the military strut.

How, pray tell, will she do this? you ask. With her garden, says I, with her garden.

Veggies for Health

As you may know, she’s very much interested in gardening, in particular, growing vegetables. She’s set up a garden on the White House lawn, grown veggies, had them served in the White House and has recently published a book on gardening and nutrition.

That’s her angle on gardening. Vegetables are good and good for you. They’re essential to a proper diet, and a proper diet is necessary to prevent childhood obesity.

Her interest in and commitment to gardening is deep, predating her husband’s nomination:

"Back then, it was really just the concept of, I wonder if you could grow a garden on the South Lawn?" Michelle Obama says. "If you could grow a garden, it would be pretty visible and maybe that would be the way that we could begin a conversation about childhood health, and we could actually get kids from the community to help us plant and help us harvest and see how their habits changed."She was a city kid from Chicago's South Side who had never had a garden herself, though her mother recalls a local victory garden created to produce vegetables during World War II. One childhood photo included in the book shows Michelle as an infant in her mother's arms — the resemblance between Marian Robinson in the picture and Michelle as an adult is striking — and another depicts a young Michelle practicing a headstand in the backyard.... After the election, she broached her idea to start the first vegetable garden on the White House grounds since Eleanor Roosevelt's victory garden in the 1940s.Since the garden's groundbreaking in 2009 — just two months after the inauguration — she has hosted seasonal waves of students from local elementary schools that help plant the seeds. Groundskeepers and dozens of volunteers weed and tend the garden. Charlie Brandts, a White House carpenter who is a hobbyist beekeeper, has built a beehive a few feet away to pollinate the plants and provide honey that Michelle Obama says "tastes like sunshine."

So, the First Lady has a garden on the White House lawn and she’s preaching the garden gospel. Good enough.

Gardens in the Transition

The thing is, regardless of why a family or a community decides to grow a vegetable garden, the moment they start doing so, they’re also participating in the Transition Movement. By that I mean the movement started by Rob Hopkins in England and that now has groups all over the world:

The Transition Movement is comprised of vibrant, grassroots community initiatives that seek to build community resilience in the face of such challenges as peak oil, climate change and the economic crisis. Transition Initiatives differentiate themselves from other sustainability and "environmental" groups by seeking to mitigate these converging global crises by engaging their communities in home-grown, citizen-led education, action, and multi-stakeholder planning to increase local self reliance and resilience. They succeed by regeneratively using their local assets, innovating, networking, collaborating, replicating proven strategies, and respecting the deep patterns of nature and diverse cultures in their place. Transition Initiatives work with deliberation and good cheer to create a fulfilling and inspiring local way of life that can withstand the shocks of rapidly shifting global systems.

An Empirical Study of Abstract Concepts

Felix Hill, Anna Korhonen, Christian Bentz, A Quantitative Empirical Analysis of the Abstract/Concrete Distinction, Cognitive Science 38 (2014) 162–177.

Abstract: This study presents original evidence that abstract and concrete concepts are organized and represented differently in the mind, based on analyses of thousands of concepts in publicly available data sets and computational resources. First, we show that abstract and concrete concepts have differing patterns of association with other concepts. Second, we test recent hypotheses that abstract concepts are organized according to association, whereas concrete concepts are organized according to (semantic) similarity. Third, we present evidence suggesting that concrete representations are more strongly feature-based than abstract concepts. We argue that degree of feature-based structure may fundamentally determine concreteness, and we discuss implications for cognitive and computational models of meaning.

From the concluding remarks (173-174):

I note that they say nothing about conceptual metaphor theory.

Instead of a strong feature-based structure, abstract representations encode a pattern of relations with other concepts (both abstract and concrete). We hypothesize that the degree of feature-based structure is the fundamental cognitive correlate of what is intuitively understood as concreteness.

By this account, computing the similarity of two concrete concepts would involve a (asymmetric) feature comparison of the sort described by Tversky. In contrast, computing the similarity of abstract concepts would require a (more symmetric) comparison of relational predicates such as analogy (Gentner & Markman, 1997; Markman & Gentner, 1993). Because of their representational structure, the feature-based operation would be simple and intuitive for concrete concepts, so that similar objects (of close taxonomic categories) come to be associated. On the other hand, for abstract concepts, perhaps because structure mapping is more complex or demanding, the items that come to be associated are instead those that fill neighboring positions in the relational structure specified by that concept (such as arguments of verbs or prepositions). Intuitively, this would result in a larger set of associates than for concrete concepts, as confirmed by Finding 1. Moreover, such associates would not in general be similar, as supported by Finding 2. [...]

Linguists and psychologists have long sought theories that exhaustively capture the empirical facts of conceptual meaning. Approaches that fundamentally reflect association, such as semantic networks and distributional models, struggle to account for the reality of categories or prototypes. On the other hand, certain concepts evade satisfactory characterization within the framework of prototypes and features, the concept game being a prime example, as Wittgenstein (1953) famously noted. The differences between abstract and concrete concepts highlighted in this and other recent work might indicate why a general theory of concepts has proved so elusive. Perhaps we have been guilty of trying to find a single solution to two different problems.

The Art of Jamie Bérubé

I’ve consolidated my posts about the art of Jamie Bérubé into a single document with the title Jamie’s Investigations: The Art of a Young Man with Down Syndrome. You can download it at the usual places:

Academia.edu: https://www.academia.edu/29195347/Jamie_s_Investigations_The_Art_of_a_Young_Man_with_Down_Syndrome

Abstract, table of contents, and introduction are below.

* * * * *

Abstract

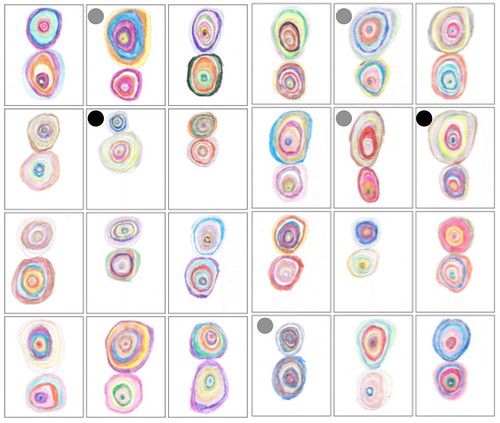

Jamie Bérubé is a young man with Down syndrome in his early twenties; he has been drawing abstract art since he was eleven. So far he has explored five types of imagery: 1) fields of colored dots in a roughly rectangular array, 2) tall slender towers with brightly colored horizontal ‘cells’, 3) pairs of concentric colored bands with one pair of concentrics above the other, 4) colored letterforms above a set of colored concentric bands, and 5) arrangements of fairly complex biomorphic forms. Taken together types 1&2 exhibit one approach to the problem of composing a page: place a large number of small objects on the page in a regular array. Types 3&4 exhibit a different approach: position relatively large objects in the center of the page. In some ways Bérubé’s fifth approach, arrangements of biomorphic objects, can be considered a synthesis of the two other approaches. Moreover the individual objects appear life-like and their arrangement on the page is dynamic, properties not otherwise evident in Bérubé’s art. It is his most recent form of art and may be considered the product of long-term experimentation.

CONTENTS

About Jamie Bérubé and His Art 2

Emergence: Jamie Discovers Art 3

On Discovering Jamie’s Principle 9

Scintillating Rhythm: Towers of Color 14

Composition: Concentrics and Letterforms 21

What We Have Discovered? 39

Jamie’s Evolution (so far) 39

Biomorphic Complexity 40

Color, Eye Movement, and Composition 42

About Jamie Bérubé and His Art

This is about the art of Jamie Bérubé, who has Down syndrome. He is the son of Michael Bérubé, who has just published a book about him: Life as Jamie Knows It: An Exceptional Child Grows Up (Beacon Press, 2016). Michael–I can call him that, as he is a friend–wanted to include a generous selection of Jamie’s art in the book, but, as you know, color reproduction is expensive. Instead, he put it online. I took a look, became fascinated, and decided to write a series of posts about it.

Jamie has settled on a few themes and motifs and has covered countless sheets of paper with them. He has no interest in figurative art; all his work is abstract.

I suspect that is because the relationship between what you see on the page, as an artist, and what you do as you draw is quite direct in the case of abstract art, but oddly remote for figurative art. While natural scenes often have a complexity that is easily avoided in abstract imagery, that is not, I suspect, the primary problem. The primary problem is that the world exists in three dimensions while drawing surfaces have only two dimensions. Figuring out how to project the 3D world onto a 2D surface is difficult, so difficult that artists have at times taken to mechanical means, such as the camera obscura, a pinhole device used to project a scene onto a flat surface where the artist can then simply trace the image. Some years ago David Hockney caused a minor scandal in the art world when he suggested that some of the old masters used mechanical aids, such as the camera obscura, rather than drawing guided only by the unaided eye.

But that’s a digression. The point is simply that figuring out how to place a line on a flat surface so that it accurately traces the outline of what one sees, that is a very difficult thing to do, so difficult that many artistic traditions have simply ignored the problem. By choosing to work only with abstract imagery, Jamie sidesteps that problem. He has chosen to work with round dots, concentric bands, rectangular towers and cells, and intriguingly biomorphic geometric objects. While that is a limitation, we should note that many fine artists have opted for abstraction over the last century and that a major family of aesthetic traditions, those in many Islamic nations, have eschewed representational art (as sacrilegious) and developed rich traditions of calligraphy and geometric patterning.

Jamie has chosen materials he is comfortable with and has thrived from doing so. What of others with Down syndrome? The late Judith Scott had Down syndrome and she achieved international acclaim as a fiber artist. Michael informs me, however, that he doesn’t know of anyone else with Down syndrome who draws as extensively as Jamie does. Is Jamie’s level of artistic skill that rare among those with Down syndrome? I certainly have no way of knowing.

What would happen if children with Down syndrome were exposed to Jamie’s art, perhaps even watching him make it (most likely through video or film)? Would they imitate him and develop their own preferred motifs and themes? Could people be trained to teach the Jamie method?

Sunday, October 16, 2016

Ursula Le Guin profiled in The New Yorker

Ursula absorbed these stories, together with the books she read: children’s classics, Norse myths, Irish folktales, the Iliad. In her father’s library, she discovered Romantic poetry and Eastern philosophy, especially the Tao Te Ching. She and her brother Karl supplemented these with science-fiction magazines. With Karl, the closest to her in age of her three brothers, she played King Arthur’s knights, in armor made of cardboard boxes. The two also made up tales of political intrigue and exploration set in a stuffed-toy world called the Animal Kingdom. This storytelling later gave her a feeling of kinship with the Brontës, whose Gondal and Angria, she says, were “the ‘genius version’ of what Karl and I did.”

Gifts:

In fact, it was the mainstream that ended up transformed. By breaking down the walls of genre, Le Guin handed new tools to twenty-first-century writers working in what Chabon calls the “borderlands,” the place where the fantastic enters literature. A group of writers as unlike as Chabon, Molly Gloss, Kelly Link, Karen Joy Fowler, Junot Díaz, Jonathan Lethem, Victor LaValle, Zadie Smith, and David Mitchell began to explore what’s possible when they combine elements of realism and fantasy. The fantasy and science-fiction scholar Brian Attebery has noted that “every writer I know who talks about Ursula talks about a sense of having been invited or empowered to do something.” Given that many of Le Guin’s protagonists have dark skin, the science-fiction writer N. K. Jemisin speaks of the importance to her and others of encountering in fantasy someone who looked like them. Karen Joy Fowler, a friend of Le Guin’s whose novel “We Are All Completely Beside Ourselves” questions the nature of the human-animal bond, says that Le Guin offered her alternatives to realism by bringing the fantastic out of its “underdog position.” For writers, she says, Le Guin “makes you think many things are possible that you maybe didn’t think were possible.”

Saturday, October 15, 2016

Jamie’s Investigations, Part 6: What We Have Discovered

When I started investigating Jamie Bérubé’s art I had no particular expectations, just a reasonable belief that it would be worthwhile. Now that I’ve examined each of his genres it is time to review the investigation. I want to begin with a quick overview of his aesthetic evolution, and then take up some details.

Jamie’s Evolution (so far)

We can divide Jamie’s work into roughly three phases: 1) Preliminary, 2) Compositions, and 3) Biomorphs. By Preliminary I mean Jamie’s early work, which we examined in On Discovering Jamie’s Principle. Jamie did this between 11 and 14 years old. In this phase the sheet of paper was simply where he made marks of various kinds. Jamie didn’t have any conception of a sheet of paper as the locus of a particular ‘work’, if you will. Nor did he have any sense of overall composition; he simply placed objects wherever there was room, and, with the exception of some sheets of dots, different kinds of objects could exist on the same sheet.

In the second phase, which we examined in Investigations 1, 3, and 4, Jamie conceives of an individual sheet of paper as the locus for a coherent set of objects. First we examined his dots images, then towers of color, and finally (in a single post) pairs of concentric bands and rows of letterforms paired with a set of concentric bands. In both the dots and towers schemes Jamie approached the problem of composition with a scheme he could execute with his attention focused on the local area where he is drawing. In the concentric schemes local attention is not sufficient. Jamie has to attend to all four edges when making his initial marks, some of which will not be near one or more edges.

Note that I haven’t observed Jamie drawing. My observations follow from the inherent logic of the drawing situation and take into consideration variations in the images themselves.

For a dots sheet Jamie can start at a top corner and then either move down the sheet, following the adjacent edge, or move across the sheet, following the top edge. Each successive dot goes next to the previous one; dots are of the same size and same distance apart. When he has finished one row Jamie can begin the next one, right next to the one he has just completed. Jamie continues drawing row after row until either the sheet is filled or he otherwise stops (perhaps because he is interrupted).

This is Jamie’s simplest kind of drawing. The tower procedure is more complex. I note that we saw examples of dots sheets in his Preliminary phase and towers sheets as well, though the towers were horizontal rather than vertical.

While visually similar to the dots sheets (a large number of more or less equally spaced color patches), the towers of color are more complex. For each tower Jamie 1) uses a pen to draw a tall slender rectangle, then 2) he crosses the rectangle with a large number of lines from one side of the rectangle to the other. The cross lines almost always extend beyond the original rectangle. Finally, 3) Jamie uses crayons to fill each cell with color, not bothering to stay strictly within the lines. Starting at either the left or the right edge, Jamie moves across the sheet until it is filled (or he is interrupted).

The two kinds of concentrics sheets are similar in that, in order to draw a set of concentric bands centered on the midline, Jamie must attend to the left and right edges. Not having observed him draw I don’t know whether he draws the innermost circle first or the outermost; the latter makes more sense, though, as it makes it easier to position the two sets of bands with respect to one another (in one case) or one set with respect to the letterforms above and the edges left, right, and below (in the other). This is a more complex composition problem than Jamie faces with either the dots or the towers. Here Jamie has to conceive of the entire composition before he makes his first marks in a way that isn’t necessary for the dots and the towers. In those cases he has a local procedure which, if followed, will produce a good result. Jamie’s attention has to be more global for the concentrics.

On the whole, was Jamie drawing dots sheets and towers sheets before the two types of concentrics sheets? In a sense the answer is yes, because we see such sheets in his early work. We see letterforms and concentric bands in the early work, but they are on sheets with various other graphic objects. We don’t see those compositional forms covering early-phase sheets. If we examined the distribution of sheet-types over the course of months and years, what would we see? What I’m wondering, of course, is whether or not the concentrics began to proliferate only after Jamie had gained confidence from drawing dots and towers. Michael notes, moreover, “He stopped making these towers a few years ago, but recently resumed them, much to our delight.” What’s up with that?

Finally, I have placed the biomorphs in a third phase (though I assume that he’s been doing other kinds of sheets even as he does these). For one thing, as I have noted before, Michael tells me these are his most recent type, “dating over the last couple of years.” Moreover, as I have argued in my post about them, these are more sophisticated than Jamie’s earlier schemes.

Biomorphic Complexity

For one thing, the individual graphic elements are fairly complex. They are irregularly shaped; no two are alike (no, I’ve not checked, but this does seem to be the principle); and each of them has an interior line from one side to another. These objects are individualized in the way that letterforms are (notice that Jamie doesn’t repeat letterforms in the concentrics&letterform sheets). Each is its own little world.
