Now that we’ve got two modes of semantic investigation on the table, statistical semantics and computational semantics, it’s time to add a third to the group. It’s been there all along, of course: close-reading. But it’s not about semantics at all, at least not as I’m using the term in this discussion. It’s about meaning. Semantics and meaning, that’s our first binary distinction.

Semantics and meaning

I hold that meaning – the meaning of literary works or of any work of art – is inherently subjective. Semantic analysis, in contrast, takes place in the object realm, if you will. That doesn’t mean that semantic analysis is necessarily or inherently objective in the sense of objective truth. That’s a different issue.

Here we need a distinction made by John Searle [1]. Both subjective and objective must be considered in ontological and epistemological senses. Color is ontologically subjective in this sense though, allowing for color blindness (which is fairly well understood), it is epistemologically objective. That is, color exists in minds of perceivers, not in phenomena, but the perceptual apparatus of perceivers is such that they agree on colors, where agreement is ascertained by matching color swatches (are they the same or different?), rather than naming the colors, which can vary from one individual to another, not to mention variation in cultural conventions about color names.

The meaning of texts is subjective in the ontological sense. That is the kind of phenomenon meaning is; it exists within the minds of subjects. Attempts to assay meaning through critical essays, moreover, seem to be subjective in the epistemological sense; they vary from one literary critic to another. I note, however, subjects may and often do converse with one another; intersubjectivity is possible.

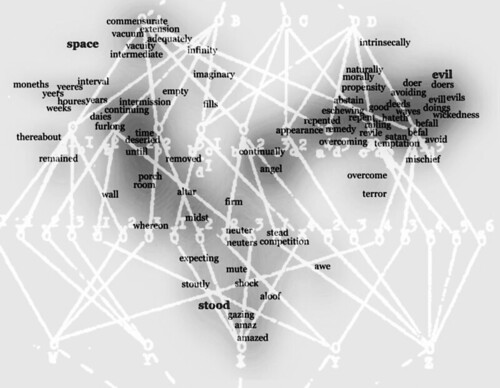

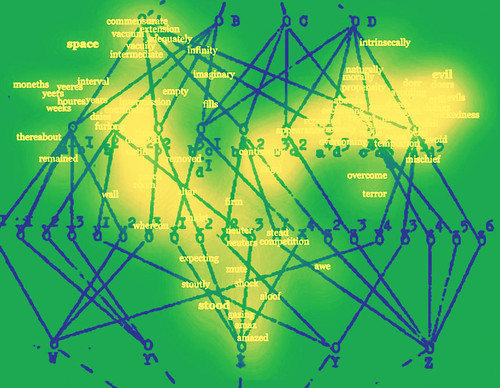

Now consider Gavin’s vector semantics, or any type of statistical semantics. Whatever the specific statistical technique, it is grounded in a corpus. In Gavin’s case the corpus consists of 18,351 documents from 1640 to 1699 [2]. Gavin then constructed a Word Space using an explicit set of procedures. It is that Word Space that Gavin queried with words and sets of words from Paradise Lost, again using explicit procedures.

First, there is a clear and explicit distinction between the words of Milton’s text and the constructions of Gavin’s Word Space model and his queries against that model. The visualizations Gavin uses are one thing, Milton’s texts is another. They are different objects, though related by an explicit procedure [3].

That is not the case with ordinary interpretation. Whatever the critic believes may being going on in an author’s or a reader’s mind, the critic has no way of talking about that except through the words in the text under discussion – plus any interpretive apparatus they bring to bear on those words. The relationship between the terms and concepts of that interpretive apparatus and the words in the text exists in the critic’s mind and is not open to public inspection and, for that matter, resists introspection as well.

Moreover what Gavin did is, at least in theory, reproducible. In practice, not quite. He has noted in supporting material [4]:

Here's where I must apologize to any readers interested in recreating the exact images that appear in the article. I failed to preserve the scripts used when generating the charts and so I'm not sure exactly which parameters I used. As a result, following the commands below will result in slightly different layouts and slightly different most-similar word lists. These differences do not, I trust, affect the main points I'm hoping to make in the article, but they are worth noting. Following the instructions below will closely but not perfectly replicate what appears in Critical Inquiry. Also, it's worth noting that when creating the images in R, I exported them to PDF and tweaked them in Inkscape, adjusting the font and spacing for readability.

If he’d preserved those scripts, then others could run them and get the same results.

The point then is that we’re working in a world where there’s a great deal of explicit inference and construction, far more than is the case with traditional criticism – a point David Ramsey has made somewhere, though I’ve forgotten the citation.

The some is true for computational semantics. However a computational model is constructed, it is clearly a different conceptual object from and text one applies it to. In the example I gave in my previous post [5] the computational model I outlined is clearly distinct from the words of Shakespeare’s sonnet. Just how and why a specific construction is included in the model – there may be a reason grounded in empirical evidence from psychology, or there may be a more or less arbitrary computational reason – that’s a secondary issue at this point. My only point here is that these models are explicit, open to public inspection, and distinctly different from any text. Whether or not they represent are valid accounts of the human mind, that’s a different issue. It’s an important issue, but not my immediate concern.

Two kinds of semantic model

As I’ve already discussed the differences between statistical semantics and computational semantics in previous posts [6] I won’t go into any detail here. Statistical semantics is a way of analyzing semantic relationships between words. Computational semantics was devised to enact a semantic process. Some investigators conceive of that process as a simulation of a human mind while others are content to think of it as an artificially conceived process designed to achieve a practical result. In the large that’s an important difference, but for the purposes of this post, that difference is not so significant. What’s important is that we conceive of language processes as being computational in kind.

I note that there is a discipline called computational narratology [7] that draws on this work. The people who program computer games draw on techniques pioneered in this work. And why not, they’re in the business of telling stories, albeit interactively with the help of game players.

I note as well the Ryan Heuser has raised an issue where computational semantics might give some insight, but statistical semantics does not. Here’s a recent tweet:

working through a counterintuitive DH finding that C18 novels often have more abstract words and fewer concrete ones than C17 romances. so, trying to pry apart realism from "concrete particularity" and think about how abstraction & abs language creates its own kind of realism.— Ryan Heuser (@quadrismegistus) August 10, 2018

So, how are abstract words defined? Statistical semantics will tell you something about the relationships between word meanings, whether abstract or not, but it won’t tell you how the meanings of words are constructed. Computational semantics can speak to that; that’s what I was doing in my analysis of the Shakespeare sonnet.

Where are we? All roads lead to Rome

One standard rubric for discussing the relationship between so-called “close reading” and “distant reading” (I’m skeptical of this rhetoric of space and distance) is that of scale. Close reading is small scale and distant reading is large, maybe even huge, scale. I’ve never found this very compelling. I’ve spent too much time thinking about biology.

Biologists must work and conceptualize at many scales (if not worlds within worlds) from individual molecules on the micro end to the course of life on earth on the macro end. But then biologists have a conceptual framework, evolution, that not only accommodates all scales, but allows one to tease out causal relationships across scales. Literary studies lacks such a framework. So there’s work to be done. What else is new?

Moreover the discussion of scale seems often not about scale at all, but about differing modes of thought, one mode oriented toward meaning (traditional literary criticism) and a different one oriented toward semantics (computational criticism). That’s an issue, but one of a different kind. This issue is often addressed straight-on as a plea for meaning directed by traditional critics to computational critics. While this may reflect a reasonable desire that computational critics produce something that traditional critics can readily understand, I fear that all too often it masks a mean-spirited refusal to grant intellectual credence to work the critic has no interest in understanding. On this matter let me quote Gavin (p. 673):

We need to learn how to learn from them, though, because how they write is, at the level of style and genre, so far out- side the bounds of humanistic scholarship that it’s difficult to recognize as theory. Like Satan at the foot of Eve, the digital humanities stand stupidly good. Hence the proliferation of uninspiring calls to import cultural criticism to the field, as if familiar modalities of thinking will get things moving (or reveal more than they mystify).

Finally, what of computational semantics?

When I undertook my apprenticeship in computational semantics back in the mid-1970s I had a specific objective in mind, to understand the nested structures I had discovered in Coleridge’s “Kubla Khan”. That didn’t happen, but I did some nice work, that is, work that I liked, on a Shakespeare sonnet (a bit of which we’ve seen in this series), and a few other things, a fragment from Patterson, Book V, a bit of the temporal structure of Oedipus the King, and a whole pile of miscellaneous constructions. It changed the way I think about language, thought, and the mind.

Out of that change I imagined there would be at time we would have computer models powerful enough to ‘read’ a Shakespeare play, for example, an Austen novel, yes, a Coleridge poem. You get the idea. But that hasn’t happened yet, nor do I see it on the predictable horizon.

What then?

Let us think a minute. Statistical semantics starts with a corpus, a collection of texts. Each of those texts was created by a human agent; the structure in each text exist through the intentions of the agent that produced it. And computational semantics, at least in some versions, is ultimately about the structures of the human mind. It follows that statistical semantics is a way of indirectly examining the structures of human minds. Statistical semantics is thus a bit like figuring out the operations of an internal combustion engine by listening to the sounds it makes while computational semantics is an attempt to build (toy) models of such engines so that we may listen to the sounds they make.

Thus, just as the statistical semanticist and the computational semanticist can trace their lineages back to Warren Weaver’s 1949 memorandum on translation, so they are on paths that ultimately converge at the same destination. Do literary texts contain signs that point the way? If so, can we afford to neglect any devices that will help us read those signs?

References

[1] John Searle, The Construction of Social Reality, Penguin Books, 1995.

[2] Michael Gavin, Vector Semantics, William Empson, and the Study of Ambiguity, Critical Inquiry 44 (Summer 2018) 641-673: https://www.journals.uchicago.edu/doi/abs/10.1086/698174

Ungated PDF: http://modelingliteraryhistory.org/wp-content/uploads/2018/06/Gavin-2018-Vector-Semantics.pdf

[3] See my earlier post, Gavin 2: The meanings of words are intimately interlinked, New Savanna, August 1, 2018: https://new-savanna.blogspot.com/2018/08/gavin-2-meanings-of-words-are.html

[4] Michael Gavin, Vector Semantics Walkthrough, August 2, 2018: https://github.com/michaelgavin/empson/blob/master/ci/walkthrough.Rmd

[5] William Benzon, Gavin 4: Some computational semantics for a Shakespeare sonnet, New Savanna, August 8, 2018: https://new-savanna.blogspot.com/2018/08/gavin-4-some-computational-semantics.html

[6] William Benzon, Gavin 1.1: Warren Weaver (1949) and statistical semantics, New Savanna, July 26, 2018: https://new-savanna.blogspot.com/2018/07/gavin-11-warren-weaver-1949-and.html

William Benzon, Gavin 1.2: From Warren Weaver 1949 to computational semantics, New Savanna, July 40, 2018: https://new-savanna.blogspot.com/2018/07/gavin-12-warren-weaver-1949-and.html

[7] Inderjeet Mani, Computational Narratology, The Living Handbook of Narratology, 28 January 2013: https://wikis.sub.uni-hamburg.de/lhn/index.php/Computational_Narratology

No comments:

Post a Comment