There's a new working paper, title above. Links etc. below.

Social Science Research Network (SSRN): http://ssrn.com/abstract=2698719

Abstract: There is a loose historical continuity in themes and concerns running from the origins of “close” reading in the early 20th century through machine translation and computational linguistics in the third quarter of the century to “distant” reading in the present. Distant reading is the only current form of literary criticism that is presenting us with something new in the way that telescopes once presented astronomers with something new. Moreover, it is the only form of criticism that is directly commensurate with the material substance of language. In the long term it will advance in part by recouping and reconstructing earlier work in symbolic computation of natural language.

CONTENTS

Computation in Literary Study: The Lay of the Land

Digital Criticism @ 3QD

The Only Game in Town: Digital Criticism Comes of Age

Distant Reading and Embracing the Other

Reading and Interpretation as Explanation

A Mid-1970s Brush with the Other

The Center is Gone

Current Prospects: Into the Autonomous Aesthetic

Commensurability, Meaning, and Digital Criticism

* * * * *

Computation in Literary Study: The Lay of the Land

It was the best of times, it was the worst of times, it was the age of wisdom, it was the age of foolishness, it was the epoch of belief, it was the epoch of incredulity, it was the season of Light, it was the season of Darkness, it was the spring of hope, it was the winter of despair, we had everything before us, we had nothing before us, we were all going direct to Heaven, we were all going direct the other way …

– Charles Dickens

Oh yeah I'll tell you something
I think you'll understand
When I say that something
I wanna hold your hand

– John Lennon and Paul McCartney

I have a somewhat different perspective on digital humanities than most DH practitioners have, and than humanists who know of it (and who doesn’t?) but are not practitioners. “Digital Humanities” covers a range of practices that are quite different from one another and are united only by the fact that they are computer-intensive. A digital archive of medieval manuscripts is quite different from a 3D graphic re-creation of an ancient temple, and both are different from a topic analysis of 19th-century British novels. It is the last that most interests me, and I think of it as an example of computational criticism.

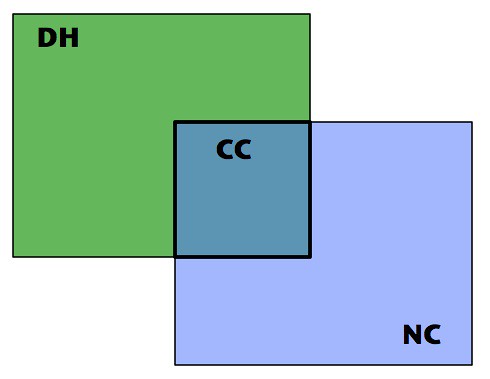

So, in the following diagram we see the computational criticism (CC) is a subset of digital humanities (DH):

But it is also a subset of naturalist criticism (NC). The naturalist critic treats literary phenomena as existing in the natural world along with other phenomena such as asteroids, clouds, squid, yeast, thunder, fireflies, and of course human beings.

I’ve been doing naturalist criticism since the mid-1970s, when I studied computational linguistics with David Hays while getting my degree in English literature [1]. Hays was one of the original researchers in machine translation and coined the term “computational linguistics” in the early 1960s. At that time, and well into the 1980s, researchers created hand-coded symbolic models of linguistic processes: phonology, morphology, syntax, semantics, and pragmatics. In the 1980s, however, that research style was displaced by one that emphasized machine learning over large data sets using statistical models. And those statistical methods are, in turn, the foundation of much current work in computational criticism.

Thus, while I do not myself work with those models, there is a historical connection between them and what I was doing four decades ago. Even though they are quite different from the models I worked with, they are in the same intellectual milieu, one I’ve worked in for most of my career. It’s the world of social and behavioral sciences as practiced under the aegis of computation. It’s a world of naturalistic study.

Today’s computational critics, however, show no interest in those old symbolic models. For one thing, they aren’t of direct use to them [2]. Moreover, since those models propose that, in some way, the human mind is itself computational in kind, they may be too hot to handle, though Willard McCarty has been handling that idea for years, as I discuss in “The Only Game in Town”. In many humanistic circles it’s bad enough that these researchers are using computers for more than word processing and email; to seriously propose that the human mind is computational? No, that’s not worth the risk. And yet, in the long run, I don’t think the idea can be held at bay. Humanists will have to confront it, and when they do, well, I don’t know what will happen, though I myself have been comfortable with the idea for years.

As I was drafting this piece, I got pinged about a post that Ted Underwood had just put up pointing out the increasing methodological overlap between literary history and sociology [3]. There you’ll find paragraphs like this one:

Close reading? Well, yes, relative to what was previously possible at scale. Content analysis was originally restricted to predefined keywords and phrases that captured the “manifest meaning of a textual corpus” (2). Other kinds of meaning, implicit in “complexities of phrasing” or “rhetorical forms,” had to be discarded to make text usable as data. But according to the authors, more recent approaches to text analysis “give us the ability to instead consider a textual corpus in its full hermeneutic complexity,” going beyond the level of interpretation Kenneth Burke called “semantic” to one he considered “poetic” (3-4). This may be interpretation on a larger scale than literary scholars are accustomed to, but from the social-scientific side of the border, it looks like a move in the direction of rhetorical complexity.

The closer you get to rhetorical complexity, the more likely that sooner or later you’re going to bump into phenomena at a scale appropriate to those old symbolic models, not to mention the kind of hand-crafted description of individual texts that I’ve been advocating [4].

But that’s a diversion. I was really headed toward a recent article by Yohei Igarashi, “Statistical Analysis at the Birth of Close Reading” [5]. Here are some sentences from the opening paragraph (p. 485):

It may be instructive to remember, then, that close and non-close reading, far from crossing paths for the first time recently, encountered one another in the early twentieth century on the terrain of educational “word lists.” The genre of the word list, a list of words usually taking word frequency as its elemental measure, shaped close reading at its founding. In fact, the founder of close reading, I. A. Richards, believed that one such word list, Basic English—a parsimonious but usable version of the English language reduced to only 850 words—was the best tool for teaching students to be better close readers.

So, at its historical inception, “close reading” shares a thematic fellow traveler with “distant reading”: language statistics. And in an endnote Igarashi observes that “machine translation, from its advent at midcentury to the present day, has adopted Basic English’s logics of functionality, performativity, and universalism” (p. 501). That’s where I was with David Hays at Buffalo in the 1970s, and the notion of a limited vocabulary from which all else could be derived was, in one form or another, an important one in a number of those models. And why not? It’s all language, no?

While Igarashi’s article is historical, in recounting a particular history it also outlines a boundary that encompasses Ogden and Richards in the first quarter of the 20th century, machine translation and computational linguistics in the third quarter, and “distant” reading in the first quarter of the 21st century. That’s the world of phenomena that we’ll be investigating and reconstructing for the foreseeable future. That’s the land before us.

* * * * *

[1] See, e.g., my Cognitive Networks and Literary Semantics. MLN 91: 952-982, 1976. URL: https://www.academia.edu/235111/Cognitive_Networks_and_Literary_Semantics

William Benzon and David Hays, Computational Linguistics and the Humanist. Computers and the Humanities 10: 265-274, 1976. URL: https://www.academia.edu/1334653/Computational_Linguistics_and_the_Humanist

[2] I have a working paper in which I sketch out some connections between those older models and these newer ones, Toward a Computational Historicism: From Literary Networks to the Autonomous Aesthetic (2014) 27 pp. URL: https://www.academia.edu/7776103/Toward_a_Computational_Historicism_From_Literary_Networks_to_the_Autonomous_Aesthetic

[3] Ted Underwood. Emerging conversations between literary history and sociology. The Stone and the Shell, blog post. December 3, 2015. URL: http://tedunderwood.com/2015/12/02/emerging-conversations-between-literary-history-and-sociology/

[4] For example, see my most recent working paper on description where I discuss the relationship between symbolic models from the 1970s and 1980s and the formal structure of texts, Description 3: The Primacy of Visualization, Working Paper, October 2015. URL: https://www.academia.edu/16835585/Description_3_The_Primacy_of_Visualization

[5] Yohei Igarashi. Statistical Analysis at the Birth of Close Reading. New Literary History, Volume 46, Number 3, Summer 2015, pp. 485-504. DOI: 10.1353/nlh.2015.0023
